Big Data — the Driving Force of Autonomous Vehicles and How We Can Help You Down the Road

Big data has transformed nearly every industry. Its applications range from the obvious ones in media and advertising, where it is used to predict trends and analyze audiences, to subtler ones in healthcare and energy management, where large quantities of information are applied to some of the biggest challenges we face today. The automotive industry is no exception when it comes to big data, analytics and IoT: they are used at many steps of car manufacturing and marketing. Yet arguably the most exciting thing automotive companies can do with their vast data is invest in building, testing and deploying autonomous vehicles.

According to one of the first large reports on the automotive AI market by MarketsandMarkets, the industry's worth was expected to reach USD 10 billion by 2025. More recent forecasts show even higher numbers. For example, researchers at Global Market Insights (2019) predict an increase from USD 1 billion in 2019 to USD 12 billion by 2026. With nearly all major IT companies and big manufacturers confident in the success of such vehicles, it seems a safe bet. As the renowned computer scientist Qi Lu said in an interview with Wired: “In autonomous systems, the car is the first major commercial application that is going to land.” Autonomous cars, once the stuff of science fiction, are a technology worth developing and investing in. And our teams at Label Your Data are here to help.

A Look Under the Hood

So how does it all work? When driving, we rely on many senses and complex mental processes, sometimes without even realizing it. We use sight to notice when a traffic light changes, and spatial awareness to position the car on the road and keep it in the correct lane. We use hearing to judge how close other cars are, and memory to recall a road sign. When building an autonomous vehicle, developers want the AI to handle all of these processes too, and to be able to train on data and learn from its mistakes.

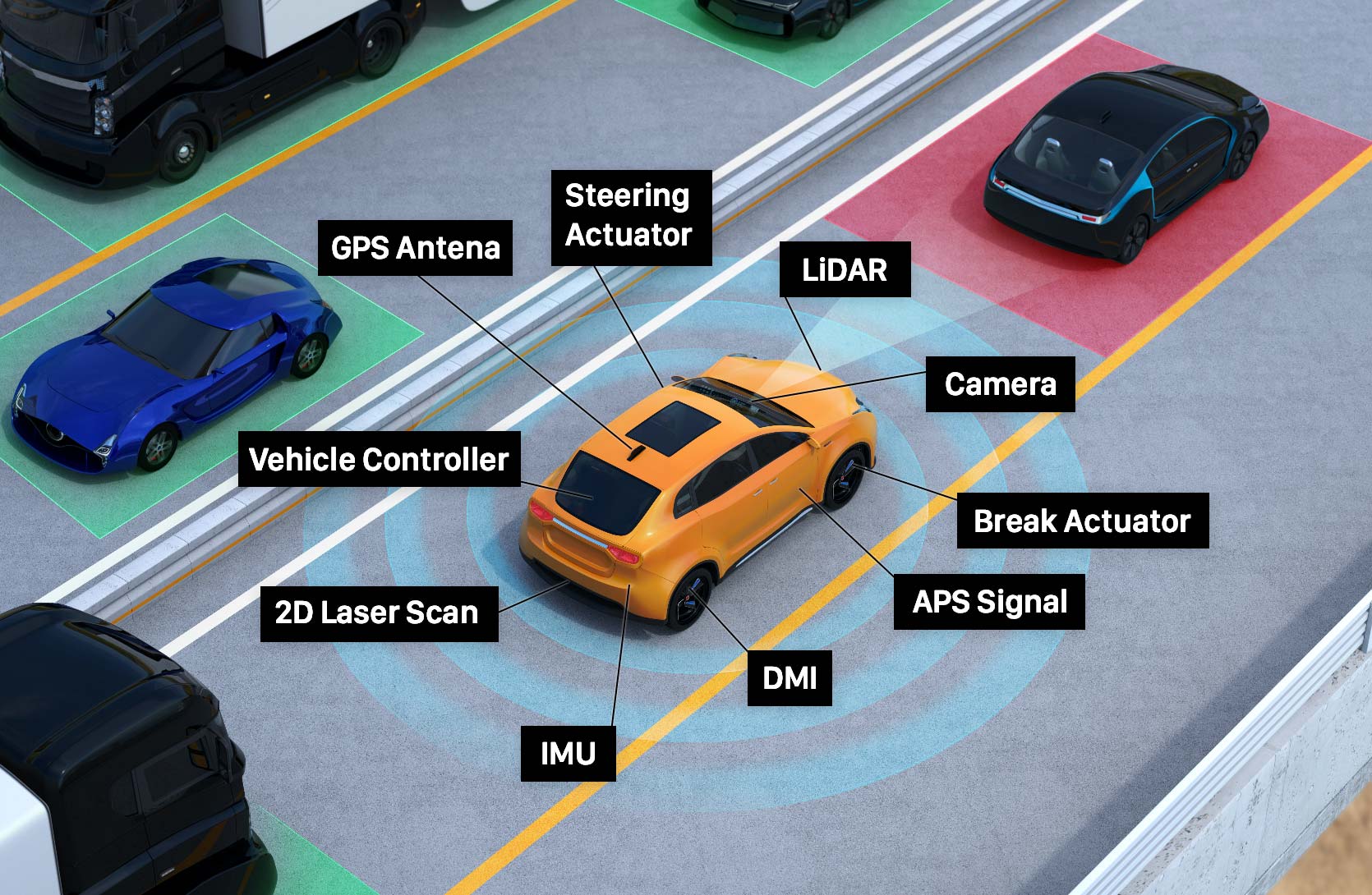

To achieve such ambitious goals, our clients use a combination of innovative hardware and AI technologies, as well as good training, validation and test data. Essential hardware for autonomous cars includes radars, LiDARs and other sensors, cameras, GPS, a central processing unit and more. Together, these supply the input for computer vision: how a car sees its environment. Arguably, the more interesting part is how exactly all of these components communicate with each other.

Annotation Software and Data

Sensors and cameras gather a vast pool of information: files, raw images, videos, point clouds. With all this data, the computer's AI can enhance the car's original vision. Usually, an AI engineer designs the structure of the software and the AI and chooses how it will process the information. Importantly, however, the data must be processed before it is given to the AI. Since an AI cannot extract information about the objects in images or videos from scratch, it must be given a hint about what is going on in them before it can begin to recognize patterns. At Label Your Data, we provide a whole set of services focused on processing and annotating datasets for computer vision enhancement.

- Image and Video Categorization

- The process of sorting images or videos into a set of categories. This teaches the AI system to correctly categorize any new images that are introduced into the system.

- 2D Bounding Boxes Annotation

- Identifying certain objects and drawing 2D boxes around them. The boxes label objects such as cars, cyclists, signs, people, trees and animals, and are used to train the AI to recognize them while operating a car.

- 3D Cuboids Annotation

- Identifying objects and drawing 3D boxes (cuboids) around them. In car automation, the cuboids are used for recognition and navigation, and to check for collisions.

- Polygon Annotation

- This method is similar to 2D bounding boxes; the only difference is that the annotator outlines the contour of the object (the polygon) rather than drawing a box around it.

- Sensor Fusion

- A more complex process that merges information from images and 3D point clouds. Sensor fusion is often a crucial step before semantic segmentation.

- Semantic Segmentation

- Semantic segmentation is a high-level task of linking every pixel in an image to a class label. The output of this process is usually a set of large regions that categorize each part of the image. This makes images and the objects in them easier for an AI system to analyze.
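To make the difference between some of these annotation types more concrete, here is a minimal Python sketch. It is only an illustration under our own assumptions, not the format of any particular annotation tool: the `BoundingBox` class, the helper functions and the toy "sign" triangle are all hypothetical. It shows how a polygon outlines an object more tightly than its bounding box, and how a labeled region can be turned into a per-pixel class mask of the kind used in semantic segmentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Hypothetical 2D box annotation; max edges are exclusive pixel indices."""
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int


def polygon_to_box(label, points):
    """Smallest axis-aligned box that contains every polygon vertex."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return BoundingBox(label, min(xs), min(ys), max(xs), max(ys))


def polygon_area(points):
    """Area of a simple polygon via the shoelace formula."""
    total = 0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def box_area(box):
    return (box.x_max - box.x_min) * (box.y_max - box.y_min)


def rasterize_boxes(width, height, boxes, class_ids, background=0):
    """Crude per-pixel label mask: every pixel inside a box gets its class id.

    Real semantic segmentation labels follow object contours; boxes are used
    here only to keep the example short.
    """
    mask = [[background] * width for _ in range(height)]
    for box in boxes:
        for y in range(max(0, box.y_min), min(height, box.y_max)):
            for x in range(max(0, box.x_min), min(width, box.x_max)):
                mask[y][x] = class_ids[box.label]
    return mask


# Toy example: a triangular road sign outlined with three polygon vertices.
sign_polygon = [(2, 1), (6, 1), (4, 5)]
sign_box = polygon_to_box("sign", sign_polygon)

print(polygon_area(sign_polygon))  # 8.0
print(box_area(sign_box))          # 16

# An 8x6 image where class id 1 means "sign" and 0 means background.
mask = rasterize_boxes(8, 6, [sign_box], {"sign": 1})
for row in mask:
    print(row)
```

In this toy case the polygon covers only half of the area of its bounding box, which is why polygon annotation is usually preferred for irregularly shaped objects, at the cost of more labeling effort.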

What we at Label Your Data know firsthand is how tedious and time-consuming labeling and sorting data can be: Cognilytica reports that data preparation often takes over 80% of the time in AI and machine learning projects. Many researchers, developers and other professionals see it as a mere chore. However, clean, peer-reviewed labeled data is at the heart of every good IT project. Autonomous vehicle AI is no exception: it, too, depends on large amounts of high-quality data.

Since the training data determines how the software will process information and perform in the real world, better data means a more accurate and more robust AI that can solve problems in real time, quickly and with fewer errors. Our experienced team at Label Your Data can take the routine of data preparation off your hands for all your business and project needs, so that you can focus on developing cutting-edge technology.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.