Trusted by 100+ Customers

Use Cases for

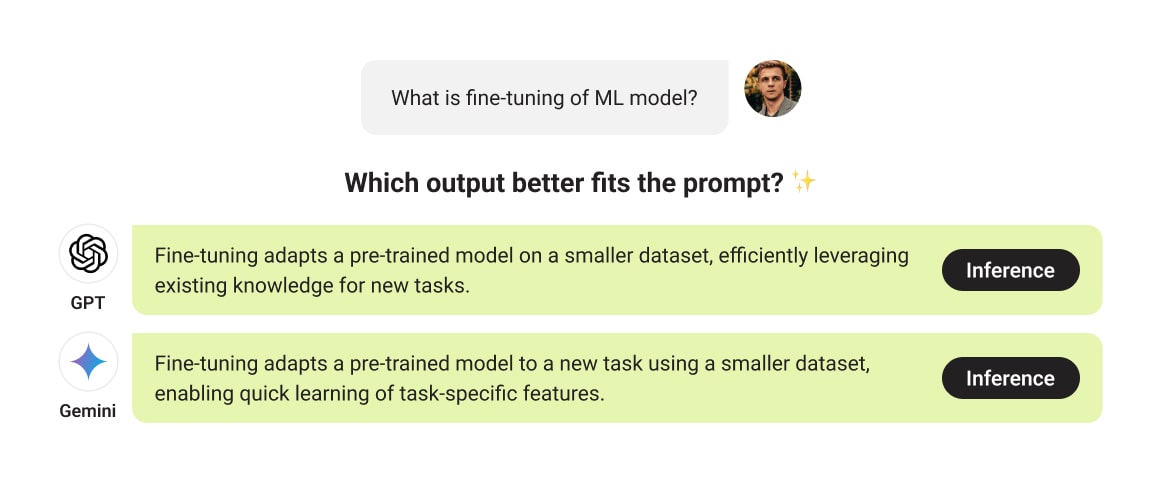

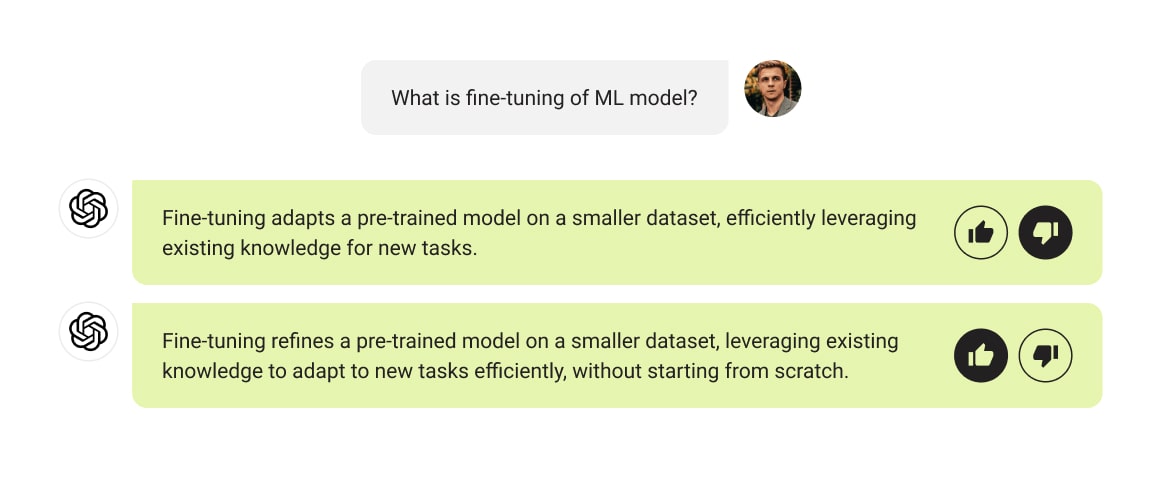

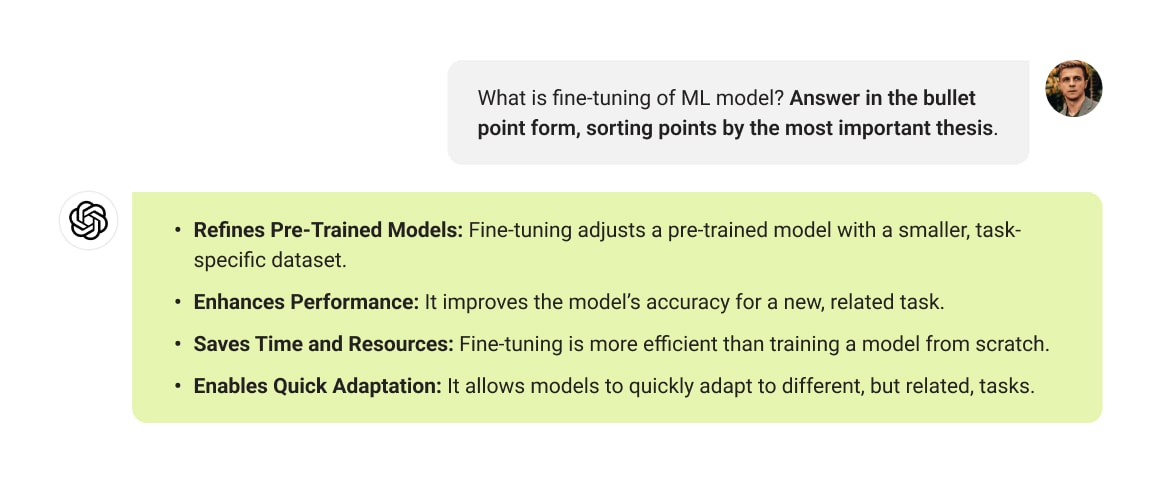

Inference Calibration

Optimize your LLM for instruction adherence, fewer errors, and style-specific responses

Reduce hallucinations and keep interactions in a tailored tone

Content Moderation

Enable your LLM to filter and remove undesirable social content

Improve platform safety and compliance

Data Enrichment

Add business-specific data and domain knowledge to your LLM

Build custom models for industry use

Data Extraction

Train your LLM to extract data from text documents

Streamline document data handling

Data Services for You

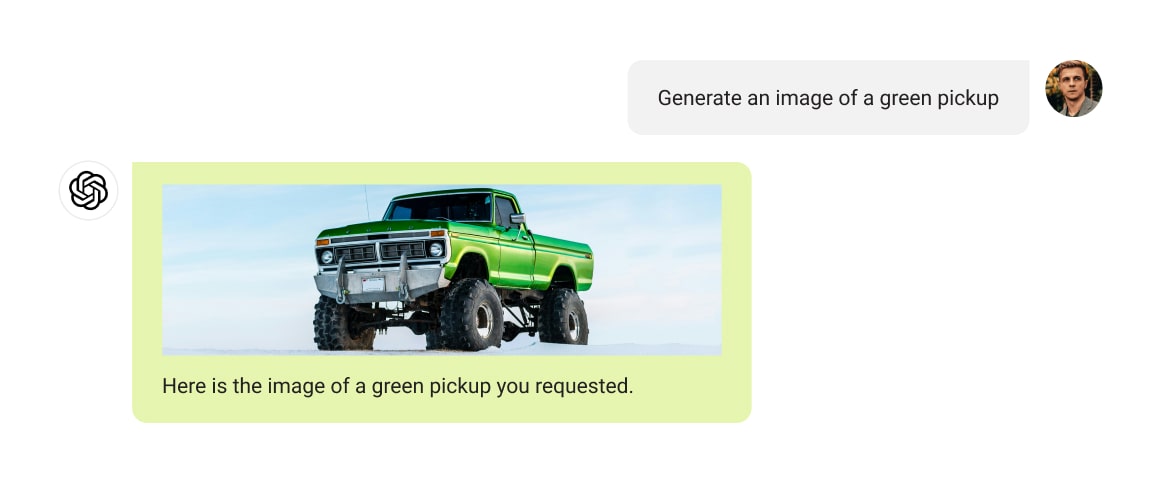

Create detailed captions for images and assess the relevance of the generated text.

Train your LLM and computer vision models to annotate objects in images and text


How It Works

Free Pilot

Send us a sample of your LLM data for free fine-tuning and experience our services risk-free.

QA

Evaluate the pilot results to ensure we meet your quality and cost expectations.

Proposal

Receive a detailed proposal tailored to your specific LLM fine-tuning needs.

Start Labeling

Our team begins the fine-tuning process.

Delivery

Receive timely delivery of fine-tuned data, keeping your project on schedule.

Calculate Your Cost

Estimates

Send us your sample data to get a precise cost estimate for free

Why Projects Choose Label Your Data

No Commitment

Check our performance with a free trial

Flexible Pricing

Pay per fine-tuned object or per hour

Tool-Agnostic

Work with any fine-tuning tool, including your own custom tools

Data Compliance

Work with a data-certified vendor: PCI DSS Level 1, ISO/IEC 27001, GDPR, CCPA

Start Free Pilot

Fill out this form to send your pilot request.


Label Your Data were genuinely interested in the success of my project, asked good questions, and were flexible in working in my proprietary software environment.

Kyle Hamilton

PhD Researcher at TU Dublin

Trusted by ML Professionals

FAQs

What tools are used for LLM tuning?

Common tools for LLM tuning include Hugging Face Transformers, OpenAI's fine-tuning API, and specialized platforms such as Weights & Biases for experiment tracking. Open models like Google's T5 are typically fine-tuned with these libraries rather than being tools themselves. You can also write custom training scripts in PyTorch or TensorFlow to fine-tune models on specific datasets.

What is LLM instruction tuning?

Instruction tuning is the process of training a language model on examples of instructions paired with the desired responses, so that it better understands and follows the specific instructions users provide. This improves its ability to generate accurate, contextually appropriate responses to the given directives.
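To make the idea concrete, here is a minimal sketch of how one instruction/response pair might be turned into a training example. The prompt template, the `Example` class, and the toy word-level tokenizer are hypothetical stand-ins; real projects use the prompt or chat template and the tokenizer of their base model. Labels for the prompt tokens are set to -100, the value PyTorch's `CrossEntropyLoss` ignores by default, so the model is trained only on producing the response.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical prompt template, for illustration only.
PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"

# Label value skipped by the loss (PyTorch CrossEntropyLoss default ignore_index).
IGNORE_INDEX = -100


@dataclass
class Example:
    instruction: str
    response: str


def make_toy_tokenizer() -> Callable[[str], List[int]]:
    """Hypothetical word-level tokenizer: each new word gets the next free id."""
    vocab: dict = {}

    def tokenize(text: str) -> List[int]:
        return [vocab.setdefault(word, len(vocab)) for word in text.split()]

    return tokenize


def build_training_example(
    ex: Example, tokenize: Callable[[str], List[int]]
) -> Tuple[List[int], List[int]]:
    """Concatenate prompt and response ids; mask the prompt part of the labels."""
    prompt_ids = tokenize(PROMPT_TEMPLATE.format(instruction=ex.instruction))
    response_ids = tokenize(ex.response)
    input_ids = prompt_ids + response_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels


if __name__ == "__main__":
    tok = make_toy_tokenizer()
    ids, labels = build_training_example(
        Example("Summarize the text", "Short summary."), tok
    )
    print(ids)     # [0, 1, 2, 3, 4, 0, 5, 6, 7]
    print(labels)  # [-100, -100, -100, -100, -100, -100, -100, 6, 7]
```

Masking the prompt is a design choice rather than a requirement: it focuses the training signal on following the instruction instead of reproducing it.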

Why should I consider fine-tuning for my LLM?

Fine-tuning takes a pre-trained large language model (LLM) and trains it further on a task-specific dataset, which improves its performance on that task. It customizes your LLM to better understand and generate content relevant to your use cases, making it more accurate and useful. The process can also significantly improve the model's ability to handle proprietary data and industry-specific requirements.

What are the different methods of fine-tuning an LLM?

The primary methods of fine-tuning an LLM include:

Full Fine-Tuning: Updating all of the pre-trained model's weights on your data, which is resource-intensive but offers the most extensive customization.

Parameter-Efficient Fine-Tuning (PEFT): Updating only a small subset of parameters (or a small set of added ones) to reduce computational cost, using techniques like LoRA (Low-Rank Adaptation).

Distillation: Training a smaller model to replicate the behavior of a larger one, producing a model that is less resource-intensive and more efficient to run.
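The PEFT and distillation ideas above can be illustrated with a minimal, dependency-free Python sketch. It assumes the standard LoRA formulation, in which a frozen weight matrix W receives a trainable low-rank update B·A scaled by alpha / r, and a distillation loss computed as the KL divergence between temperature-softened teacher and student output distributions. All function names are hypothetical; production code would use a framework such as PyTorch together with Hugging Face's PEFT library rather than nested lists.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_param_counts(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """Trainable parameters for one d_out x d_in layer: full fine-tuning vs. LoRA.

    LoRA trains B (d_out x rank) and A (rank x d_in) instead of W itself.
    """
    return d_out * d_in, rank * (d_out + d_in)


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector,
                 alpha: float, rank: int) -> Vector:
    """y = W x + (alpha / rank) * B (A x). W is frozen; only A and B are updated."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


def softmax(logits: Vector, temperature: float = 1.0) -> Vector:
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: Vector, student_logits: Vector,
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by temperature**2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    full, lora = lora_param_counts(4096, 4096, rank=8)
    print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

For a 4096 x 4096 projection at rank 8, LoRA trains about 0.39% of the parameters that full fine-tuning would update in that layer. With B initialized to zeros, as in the original LoRA setup, the adapted layer starts out identical to the pre-trained one, so training begins from the base model's behavior.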

What are the benefits of fine-tuning over using a pre-trained model?

Fine-tuning offers several advantages. First, it tailors your model to specific tasks, boosting its performance on them. It is also far more cost-effective than training a model from scratch, because it builds on what the pre-trained model has already learned. Last but not least, it produces more relevant and accurate outputs from your LLM.

When should I consider fine-tuning an LLM?

You should consider fine-tuning an LLM when your engineering team needs accurate domain-specific terminology, or when operations managers require seamless integration with existing workflows. Fine-tuning enhances model precision, improves customer interactions, and automates tasks efficiently, providing tailored solutions and a competitive edge for your business.

How long does the LLM fine-tuning process take?

The duration of the fine-tuning process depends on several factors, including the size and complexity of the model, the volume of training data, and the specific requirements of your project. Typically, fine-tuning takes anywhere from a few days to a few weeks. Our team will provide a detailed timeline after assessing your needs and the available data.