Case Study

Landfill Detection Model Validation for Global Impact

Introducing the Client

A team of PhD students from Yale University and the Paris School of Economics sought our assistance with model validation. The objective was to fine-tune their remote sensing models, such as Random Forest and XGBoost, and test how precisely they detect landfills in satellite data. Here’s how we addressed global waste management challenges together with the Client.

Case Overview: Tackling the Waste Management Problem

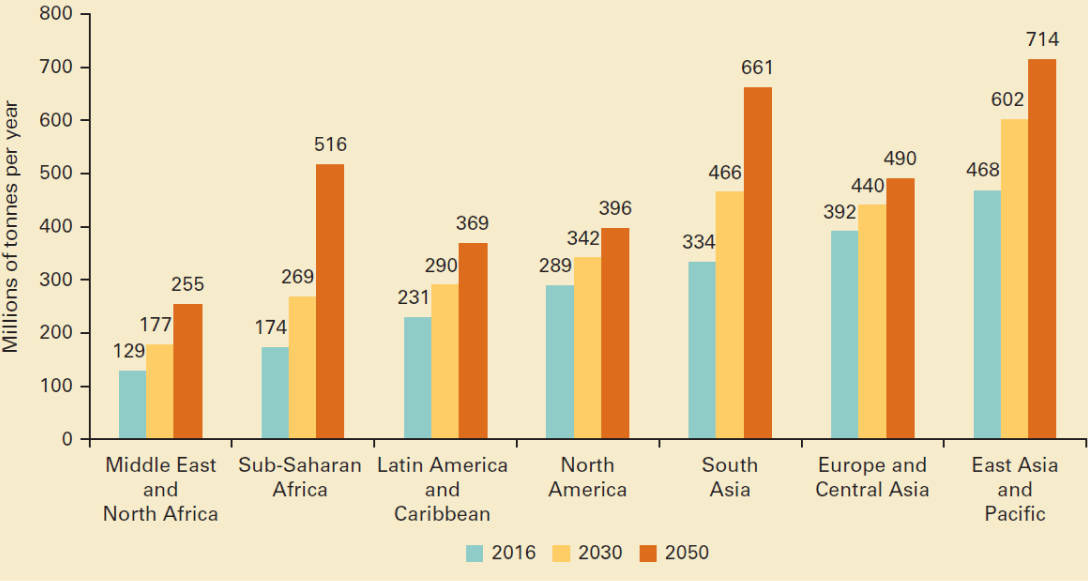

Every year, the world produces 2.01 billion tons of municipal solid waste. At least 33% of this waste is not handled in an environmentally safe way. Recognizing the urgency of this issue, our Client sought our help in making a positive change.

The project involves the creation of various machine learning models, such as Random Forest and XGBoost, for detecting landfills in satellite imagery. These models are deployed on Google Earth Engine, a platform dedicated to satellite data. The primary goal is to use these models to understand the reasons behind and the impacts of poorly managed waste globally.

To ensure that the ML models effectively serve their purpose, a team of PhD students from Yale University and the Paris School of Economics required high-quality model validation services. This is when our collaboration at Label Your Data began.

The Main Problem and Process in Focus

The Client is developing an ML model that can detect landfills in satellite data. The model also has to distinguish landfills from similar-looking objects, including recycling facilities, animal feeding operations, quarries, and mines. Their main request was to validate the coordinates they had selected for training, so that the model learns to tell these objects apart and to detect landfills correctly.

Here’s what this process looked like for our team:

The Client selected the coordinates probabilistically, based on a customized active learning strategy that they were developing. Two main criteria guided their selection:

The estimated probability that the location is a dump

The prevalence of ‘similar’ types of pixels in the population
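
The Client’s actual selection strategy is not described in detail here, so the following is only a minimal sketch of how two such criteria might be combined into sampling weights. The function name `sample_coordinates`, the dictionary keys, the `rarity_boost` parameter, and the weight formula itself are all illustrative assumptions, not the Client’s method. The sampling step uses the Efraimidis-Spirakis technique for weighted sampling without replacement.

```python
import random

def sample_coordinates(candidates, k, rarity_boost=1.0, seed=None):
    """Draw up to k distinct candidates with probability driven by a weight.

    candidates: list of dicts with hypothetical keys
        "coord"      -> (lat, lon)
        "p_dump"     -> model's estimated probability of a dump, in [0, 1]
        "prevalence" -> share of similar pixels in the population, in (0, 1]
    The weight formula below is an assumption made for illustration only.
    """
    rng = random.Random(seed)
    keyed = []
    for c in candidates:
        # Favor likely dumps and up-weight pixel types that are rare overall.
        weight = c["p_dump"] / (c["prevalence"] ** rarity_boost)
        if weight <= 0:
            continue  # zero-weight candidates can never be selected
        # Efraimidis-Spirakis key: u ** (1 / w). Keeping the k largest keys
        # yields a weighted sample without replacement.
        u = rng.random()
        keyed.append((u ** (1.0 / weight), c))
    keyed.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in keyed[:k]]

# Made-up candidates for demonstration.
candidates = [
    {"coord": (10.0, 20.0), "p_dump": 0.90, "prevalence": 0.05},
    {"coord": (10.1, 20.1), "p_dump": 0.60, "prevalence": 0.30},
    {"coord": (10.2, 20.2), "p_dump": 0.20, "prevalence": 0.50},
    {"coord": (10.3, 20.3), "p_dump": 0.00, "prevalence": 0.10},
]
batch = sample_coordinates(candidates, k=2, seed=0)
```

Sampling rather than simply taking the top-scoring points keeps some lower-probability and common pixel types in each batch, which is one plausible reason to select coordinates probabilistically.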

The Client sent us the coordinates in batches, each representing a different location and time period.

Our dedicated team, consisting of two annotators and a QA specialist, had to validate whether the model gave the correct result for each coordinate. The objects to distinguish included:

Landfills

Informal dumps

Recycling facilities

Animal feeding operations

Quarries or mines

To identify these sites, our annotators needed a keen eye (and a pinch of constant training and practice) for spotting piles of trash or plastic. This was crucial for the model to learn how to detect and label a landfill, informal dump, or recycling facility.

The Label Your Data Team’s Solution

Label Your Data has been providing precise model validation services for PhD students at Yale University for more than 2 months. It’s an ongoing, on-demand academic project in which we validate the results of a model that uses satellite imagery to detect landfills.

| Challenges | Solutions |
| --- | --- |
| Accurate model validation for landfill detection through satellite imagery | Cross-reference QA and third-party tool integration to enhance model validation accuracy |
| Imagery unavailable or compromised: no photos from the desired time, cloud cover, or low quality | Assigning a medium confidence score (2), or estimating from nearby areas with a low confidence score (3) |
| Difficulty distinguishing landfills from similar-looking objects | Additional research over an extended timeframe, or assigning a low confidence score (3) to these areas |
| Utilizing third-party tools to open and view the data | Flexible approach to speeding up Google Earth’s map adjustment process for new points |
| Subjective nature of the task | Implementing a cross-reference method for verifying quality (0 or 1 score) with two annotators working simultaneously |
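
One way to picture the cross-reference check is as a comparison of two annotators’ independent labels for the same coordinates. The sketch below is an assumption about how that could be done, not a description of Label Your Data’s internal tooling: the function `cross_reference`, the coordinate IDs, and the dictionary layout are hypothetical, labels are taken to be 0 or 1, and Cohen’s kappa is added as a common chance-corrected agreement measure that the case study itself does not mention.

```python
def cross_reference(labels_a, labels_b):
    """Compare two annotators' binary labels (0 or 1) keyed by coordinate ID.

    Returns the raw agreement rate, Cohen's kappa, and the IDs on which the
    annotators disagree so that a QA specialist can review them.
    """
    shared = sorted(set(labels_a) & set(labels_b))
    if not shared:
        raise ValueError("no coordinates were labeled by both annotators")
    n = len(shared)
    disagreements = [cid for cid in shared if labels_a[cid] != labels_b[cid]]
    observed = 1 - len(disagreements) / n

    # Chance agreement, from each annotator's share of positive labels.
    pos_a = sum(labels_a[cid] for cid in shared) / n
    pos_b = sum(labels_b[cid] for cid in shared) / n
    expected = pos_a * pos_b + (1 - pos_a) * (1 - pos_b)
    # Kappa is undefined when chance agreement is 1 (both used a single label).
    kappa = None if expected == 1 else (observed - expected) / (1 - expected)
    return {"agreement": observed, "kappa": kappa, "to_review": disagreements}

# Made-up labels for four hypothetical coordinate IDs.
annotator_1 = {"pt-001": 1, "pt-002": 0, "pt-003": 1, "pt-004": 0}
annotator_2 = {"pt-001": 1, "pt-002": 0, "pt-003": 0, "pt-004": 0}
report = cross_reference(annotator_1, annotator_2)
```

In this made-up example the annotators disagree only on `pt-003`, which gives 75% raw agreement and a kappa of 0.5; only the disagreeing points would need a second look.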

After speaking with the Label Your Data team, the decision was clear. Their strong security and a decade-long expertise made them the standout choice for our project.

PhD student at Yale University and Paris School of Economics

The Project Results: Landfill Detection Model for a More Sustainable Future

For Label Your Data, the goal of this landfill detection initiative was to improve the model’s performance and help the Client find the best code for their waste detection model. Our close cooperation on this project has produced the following results:

Expert model validation for landfill detection model through satellite imagery

Our team assigned a confidence score to each of 10,400 coordinates across 16 locations in a short timeframe.

High client satisfaction rate with our flexible cooperation model

With 2 annotators on board, we could handle 1 location per day and finish the first batch before the Client sent the next set of coordinates.

Tackled the major class imbalance problem in the Client’s data

Initially, the model’s AUC ranged from 82 to 84. Following improvements in detection capabilities through our model validation, the Client’s Random Forest model now achieves 92, while their XGBoost models consistently reach 94 or above.
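
For readers unfamiliar with the metric, AUC (area under the ROC curve) can be read as the probability that a randomly chosen positive point receives a higher model score than a randomly chosen negative one. The sketch below is a plain-Python illustration with made-up numbers, not the Client’s evaluation code. The figures quoted above appear to be on a 0-100 scale, so the function’s 0-1 result is multiplied by 100 in the example; that scaling is an assumption.

```python
def roc_auc(labels, scores):
    """Rank-based AUC: P(positive score > negative score), ties count as 0.5."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = 0.0
    for p in positives:
        for q in negatives:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Made-up example: 1 = validated landfill, 0 = not a landfill.
validated = [1, 0, 1, 0, 0, 1, 0, 0]
model_scores = [0.91, 0.40, 0.35, 0.55, 0.10, 0.80, 0.35, 0.20]
auc_percent = 100 * roc_auc(validated, model_scores)
```

With these made-up scores the positives win 12.5 of 15 pairwise comparisons, so `auc_percent` is about 83.3. Because AUC compares rankings rather than counting correct predictions, it is less distorted than plain accuracy when one class is much rarer. The pairwise loop is fine for small samples; for larger datasets a library routine such as scikit-learn’s `roc_auc_score` would be the more practical choice.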

Ongoing support for the Client’s landfill detection model development

The goal is to refine the Client’s model through additional labeling rounds and achieve a balance where accuracy matches or exceeds the current model while addressing bias.

Explore Our Diverse Services

Our annotators possess expertise in labeling spatial data for customized geospatial applications, including GIS, navigation, urban planning, and more.

As part of our data processing services, we offer data collection, precisely tailored to fulfill niche requirements for small and mid-sized datasets.
