How to Keep ML Datasets Secure

On average, it takes 50 days to discover and report a data breach. During that time, businesses risk significant harm, including unauthorized access, financial losses, and reputational damage.

Complying with data privacy regulations can be tough when labeling personal data. You need systems that keep the data private by limiting how much people can interact with it directly.

How to De-Risk Yourself from Legal Issues with Private Data

To avoid legal headaches with private data, get consent upfront from the people who generate the raw data. Consent is essential for ethical data labeling in ML projects: it builds trust and ensures compliance with data privacy regulations.

What happens when data is used without consent

Using data without consent can have a number of negative consequences. Perhaps the most significant is the erosion of user trust. When individuals feel their data has been misused, they’re less likely to share information in the future, hindering the development of AI and other data-driven technologies.

Additionally, data breaches can expose personal information, leading to identity theft, fraud, and even physical harm. Legal repercussions are also a concern. Data privacy regulations around the world are becoming increasingly strict, and organizations that fail to obtain proper consent can face hefty fines. In the worst-case scenario, misuse of data can perpetuate discrimination or bias, leading to unfair and unethical outcomes.

Examples of raw data

Raw data encompasses any unprocessed information collected from various sources. Here are some common examples you might encounter in data labeling projects:

Text data: This includes emails, social media posts, documents, and chat logs. Text labeling tasks often involve sentiment analysis, topic classification, or information extraction.

Images and videos: This can be any visual data you’re working with for training computer vision models. Labeling tasks for this type of data could involve object detection, image segmentation, or scene classification.

Sensor data: Data collected from sensors can include temperature readings, GPS coordinates, or audio recordings. Sensor data labeling can be used for tasks like anomaly detection, activity recognition, or machine calibration.

Audio data: Examples include music, speech, environmental sounds, and animal sounds. Common labeling tasks include speech recognition and sentiment analysis, which support applications such as automatic transcription services, music recommendation systems, and real-time voice translation tools.

How to ask users for consent

Obtaining user consent for data collection and labeling is crucial for ethical and legal reasons. Here are some key principles to follow:

Transparency: Clearly inform users about what data is being collected, how it will be used, and who will have access to it.

Granularity: Provide options for users to choose the specific types of data they’re comfortable sharing.

Control: Allow users to withdraw their consent at any time and offer an easy way for them to access or delete their data.

Clear language: Use concise and easy-to-understand language in your consent forms, avoiding technical jargon.

No dark patterns: To make sure user consent is truly informed, freely given, and based on a clear understanding of how the data will be used, avoid the following:

Pre-checked boxes: Don’t pre-check consent boxes. Users should actively opt-in to share their data.

Forced choices: Don’t make agreeing to data collection a condition of using the service. Provide a clear “opt-out” option.

Confusing language: Avoid burying consent requests within lengthy terms and conditions. Present consent information prominently and separately.

Privacy nudges: Don’t use misleading wording or pressure tactics to sway users towards giving consent.

By following these guidelines, you can ensure that your data labeling projects are conducted in a way that respects user privacy and builds trust.
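The consent principles above map naturally onto a small data model. The sketch below is a hypothetical illustration, not a prescribed implementation: `ConsentRecord`, `labelable`, and the category names in `DATA_CATEGORIES` are assumptions. Every category defaults to not granted (no pre-checked boxes), users opt in per category (granularity), withdrawal takes effect immediately (control), and only records covered by a current opt-in reach the labeling queue.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical data categories; adapt them to what your project actually collects.
DATA_CATEGORIES = ("text", "images", "audio", "sensor")


@dataclass
class ConsentRecord:
    user_id: str
    # Every category starts as False: no pre-checked boxes, users must actively opt in.
    grants: dict = field(default_factory=lambda: {c: False for c in DATA_CATEGORIES})
    # Timestamped trail showing when consent was given or withdrawn.
    history: list = field(default_factory=list)

    def _set(self, category: str, granted: bool) -> None:
        if category not in self.grants:
            raise ValueError(f"unknown data category: {category}")
        self.grants[category] = granted
        action = "opt_in" if granted else "withdraw"
        self.history.append((datetime.now(timezone.utc), action, category))

    def opt_in(self, category: str) -> None:
        """Record an explicit, per-category opt-in (granularity)."""
        self._set(category, True)

    def withdraw(self, category: str) -> None:
        """Withdrawal is a single call and takes effect immediately (control)."""
        self._set(category, False)

    def allows(self, category: str) -> bool:
        return self.grants.get(category, False)


def labelable(records: list, consents: dict) -> list:
    """Keep only records whose owner currently consents to that record's data category."""
    return [
        r for r in records
        if r["user_id"] in consents and consents[r["user_id"]].allows(r["category"])
    ]
```

For example, if user `u1` has opted in to `text` only, `labelable` keeps that user’s text records and drops their audio records, as well as every record from users with no consent on file. What counts as valid consent varies by jurisdiction, so treat this as a starting point for your own requirements.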

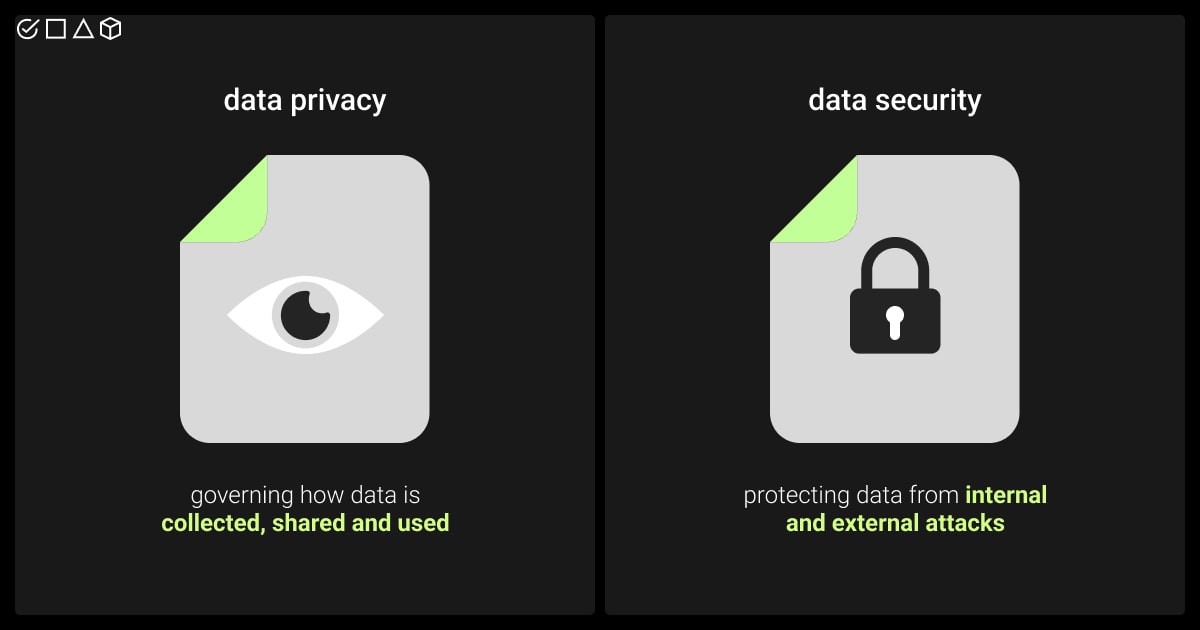

Data Security vs. Data Privacy

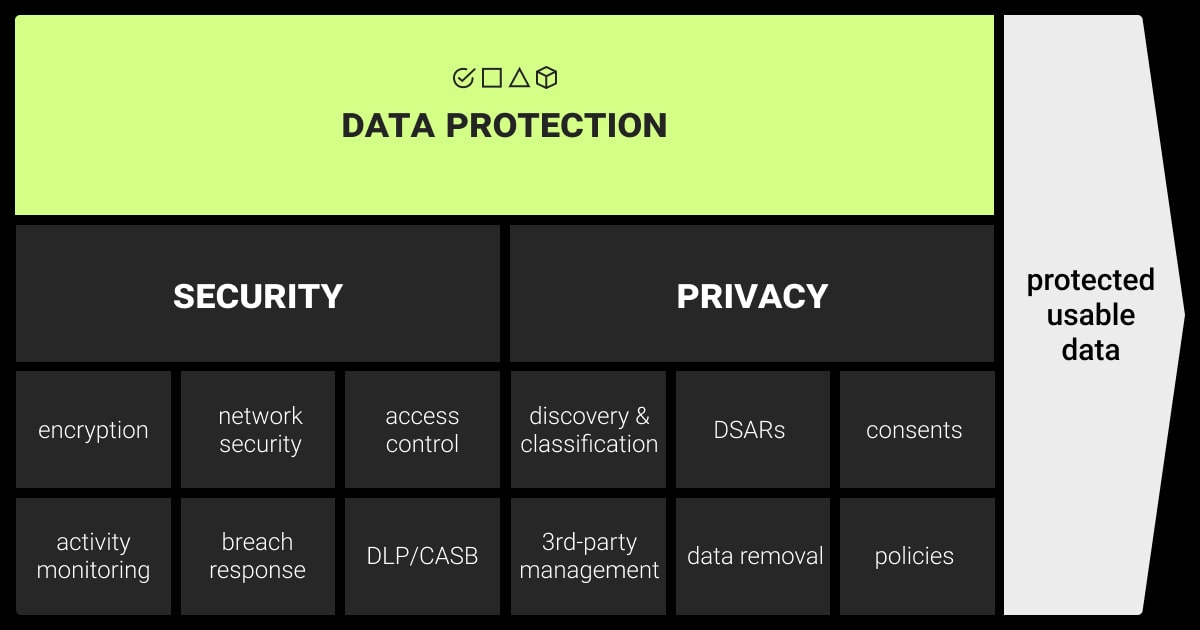

In data labeling, security and privacy are related but distinct concepts. Data security focuses on protecting electronic information from unauthorized access, while data privacy concerns individuals’ rights to control how their personal data is collected and used.

As businesses increasingly use AI and ML technologies, the importance of data security has grown. Moreover, there are compliance issues related to data privacy that require careful consideration. Training data, which might include sensitive personal information like names, addresses, and birthdates, carries risks if mishandled, potentially leading to identity theft, fraud, or other malicious actions.

These two concepts are often intertwined because both revolve around data protection. Moreover, the rise of regulatory frameworks such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) has strengthened the link between data privacy and data security in annotation.

Data Privacy Protection Laws

It’s important to process personal data in line with applicable data protection laws. Today, over 120 countries have enacted data protection laws to better safeguard their citizens’ data.

Here are the key data privacy regulations and standards to consider when labeling ML datasets or outsourcing labeling to a vendor:

GDPR (General Data Protection Regulation): One of the most comprehensive data protection and privacy laws to date, the GDPR took effect in 2018 in the European Union and the European Economic Area. Under the GDPR, people have the right to know how their information is handled and to have more control over their personal data.

Applies to the European Union (EU) and regulates how the personal data of people in the EU is processed, including by organizations outside the EU that offer them goods or services or monitor their behavior. It emphasizes transparency, individual control, and data security.

Key aspects include:

Individual Rights: EU residents have rights to access, rectify, erase, and restrict processing of their data.

Lawful Basis for Processing: Organizations need a legal justification for collecting and using personal data.

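Data Breach Notification: Personal data breaches must be reported to the supervisory authority without undue delay and, where feasible, within 72 hours of becoming aware of them (unless the breach is unlikely to put people at risk). Affected individuals must also be informed when the risk to them is high.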

HIPAA (Health Insurance Portability and Accountability Act): Applies to the United States and safeguards protected health information (PHI) of patients. It focuses on securing medical records and ensuring they are used only for authorized purposes.

Key aspects include:

Covered Entities: Applies to healthcare providers, health plans, and healthcare clearinghouses, as well as their business associates, such as vendors that handle PHI on their behalf.

Minimum Necessary Standard: PHI should only be used to the minimum extent necessary for the purpose.

Patient Rights: Patients have rights to access, amend, and request an accounting of disclosures of their PHI.

CCPA (California Consumer Privacy Act): While HIPAA focuses on protecting individuals’ medical records and personal health information, the CCPA aims to enhance privacy rights and consumer protection for California residents by regulating how businesses collect and use personal data.

CCPA empowers California residents with control over their personal data. It grants the rights to know, access, delete, and opt out of the sale of their data. Businesses must provide clear privacy notices and respond to consumer requests. The CCPA focuses on data sales and has exemptions, but it paved the way for stronger data privacy protections in California and beyond.

ISO 27001 (ISO/IEC 27001): Not a law, but an internationally recognized standard for information security management systems (ISMS), published jointly by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC). It provides a framework for organizations to implement best practices for data security.

Getting certified means an independent certification body has audited your ISMS against the standard, which assures customers about your system’s security. The audit also covers the security controls listed in the standard’s Annex A: 114 controls across 14 categories in the 2013 edition, consolidated into 93 controls grouped into four themes in the 2022 edition. While not specific to privacy, ISO 27001 certification demonstrates a commitment to robust data security practices, which can support GDPR or HIPAA compliance.
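Of the obligations above, GDPR breach notification is the most time-sensitive: the supervisory authority must be notified without undue delay and, where feasible, within 72 hours of the organization becoming aware of a personal data breach. Below is a minimal sketch for tracking that window, assuming you record the moment of awareness as a timezone-aware timestamp. The function names are hypothetical, and the code does not decide whether a breach is notifiable; that remains a legal judgment.

```python
from datetime import datetime, timedelta, timezone

# GDPR Art. 33: notify the supervisory authority without undue delay and, where
# feasible, no later than 72 hours after becoming aware of a personal data breach.
NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(aware_at: datetime) -> datetime:
    """Latest time to notify the supervisory authority for a breach discovered at aware_at."""
    if aware_at.tzinfo is None:
        # Naive timestamps make it easy to be off by several hours across time zones.
        raise ValueError("aware_at must be timezone-aware")
    return aware_at + NOTIFICATION_WINDOW


def hours_remaining(aware_at: datetime, now: datetime) -> float:
    """Hours left before the deadline; a negative value means it has already passed."""
    return (notification_deadline(aware_at) - now).total_seconds() / 3600


aware = datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc)
print(notification_deadline(aware).isoformat())  # 2024-03-04T09:00:00+00:00
```

Rejecting naive datetimes is a deliberate design choice: incident responders often work across time zones, and a deadline computed from a local clock can silently shift by hours.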

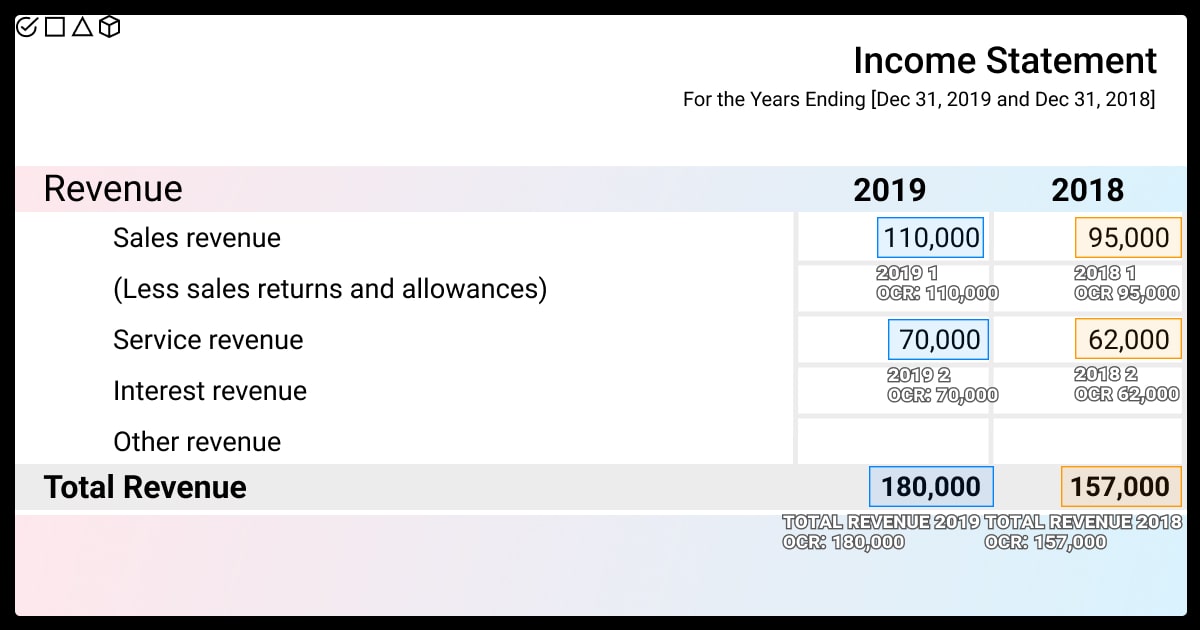

| Regulation or standard | ML dataset protection guidelines |
|---|---|
| GDPR | Get explicit consent from individuals before using their data for labeling your ML datasets. Anonymize data whenever possible and only store what’s necessary for your project. Be prepared to answer data subject requests about their information. |
| HIPAA | Ensure only authorized personnel can access and label healthcare data. Implement strict security measures to protect patient privacy. De-identify data to the greatest extent possible before using it in your ML models. |
| CCPA | Allow individuals in California to opt out of the sale of their personal information used for labeling your datasets. Provide clear privacy notices explaining how their data is used. |
| ISO 27001 | While not a regulation itself, ISO 27001 certification demonstrates a robust information security management system. This can help ensure your ML datasets are protected through access controls, data encryption, and other best practices. |
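The “store only what’s necessary” guidance for GDPR and HIPAA’s minimum necessary standard can be enforced in code before a batch ever leaves your systems. The sketch below assumes hypothetical task names and fields (`TASK_FIELDS`, `opted_out`): it keeps only the fields a labeling task needs and skips records whose owners have opted out. Whether sharing data with a labeling vendor counts as a “sale” under the CCPA is a question for your legal team; the flag here simply records the user’s choice.

```python
# Hypothetical per-task allowlists: each labeling task receives only the fields it needs.
TASK_FIELDS = {
    "sentiment": {"record_id", "text"},
    "object_detection": {"record_id", "image_uri"},
}


def minimize(records: list, task: str) -> list:
    """Skip opted-out records and strip every field the task does not need."""
    allowed = TASK_FIELDS[task]
    batch = []
    for rec in records:
        # 'opted_out' is an assumed flag set when a user exercises an opt-out right.
        if rec.get("opted_out", False):
            continue
        batch.append({k: v for k, v in rec.items() if k in allowed})
    return batch
```

An allowlist is safer than a blocklist here: a new sensitive column added upstream is excluded by default instead of leaking until someone remembers to block it.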

When partnering with a data labeling company, both parties should sign an agreement that covers the specifics of the labeling work: confidentiality, compliance with applicable laws and regulations, and the deletion or return of data once processing ends.

How to Organize Data Annotation Without Data Leaks

Even with user consent, a data leak during annotation can be a PR disaster. Consider a recent case involving Amazon.

Amazon Ring Case

Ring, the Amazon-owned video doorbell company, settled with the US Federal Trade Commission (FTC) in 2023, agreeing to pay $5.8 million over allegations that employees had improper access to customer videos. The FTC claimed Ring employees had unrestricted access to all customer videos and could download or share them, while Ring maintained the data was encrypted and access was restricted for customer privacy. The settlement required Ring to implement a data security program and disclose employee data access procedures.

This case highlights the importance of data security and privacy regulations in data labeling, especially when dealing with sensitive data. Data labeling often involves human oversight. In the Ring case, the concern was Ring employees potentially having unfettered access to user videos used for training facial recognition or motion detection. Therefore, it’s crucial for data labeling companies to ensure secure storage and access controls to mitigate privacy risks.

For instance, data privacy regulations like the GDPR and CCPA would restrict that kind of access. These regulations govern how personal data may be collected and used (the GDPR, for example, requires a lawful basis such as consent) and limit the retention and sharing of personal data. Compliance can also involve anonymization techniques that minimize the amount of personally identifiable information (PII) used in labeling.
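Below is a minimal sketch of that anonymization step, assuming free-text records with a `user_id` field (a hypothetical schema). Strictly speaking, it shows pseudonymization plus redaction: user IDs become keyed HMAC-SHA256 hashes, so one person’s records stay linkable without revealing who they are, and e-mail addresses and phone-like numbers are masked before annotators see the text. Under the GDPR, pseudonymized data is still personal data, and the key must be stored outside the labeling environment. The two regular expressions are deliberately simple and will both miss some PII and over-match some strings (such as dates).

```python
import hashlib
import hmac
import re

# Deliberately simple patterns; real PII detection needs broader coverage
# (names, addresses, ID numbers) and usually a dedicated tool plus human review.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# May also match dates like 2024-01-15; over-redaction is the safer failure mode.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash: linkable across records, not reversible without the key."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}"


def redact(text: str) -> str:
    """Mask e-mail addresses and phone-like numbers before text reaches annotators."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def prepare_for_labeling(record: dict, key: bytes) -> dict:
    """Build the annotator-facing view of a record; the raw user_id never leaves this function."""
    return {
        "user": pseudonymize(record["user_id"], key),
        "text": redact(record["text"]),
    }
```

A keyed hash (HMAC) is used instead of a plain SHA-256 of the ID because short identifiers can be recovered from an unkeyed hash by simply hashing every possible value.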

Best Practices for Keeping Data Secure

Data quality is critical, but it is not the only concern: inconsistent collection standards top the list (50%), followed by compliance (48%) and data access (44%). Your data labeling process must therefore meet the regulatory standards and security levels the data requires. It should provide a secure environment equipped with appropriate training, policies, and procedures to ensure compliance and data integrity.

Key factors to consider for a secure data labeling process include:

Annotators security:

Ensure that all annotators have undergone background checks and have signed non-disclosure agreements (NDAs) or similar documents outlining your expectations for data security. Managers should closely monitor compliance with these data security protocols.

Device control:

Annotators should surrender any personal devices, such as mobile phones or external drives, upon entering the workplace. The service provider should also disable any features on work devices that could allow data downloading or storage.

Workspace security:

Workers should conduct their tasks in a location where their computer screens are not visible to individuals who do not meet the specific data security requirements for your project.

Infrastructure:

Use an appropriate labeling tool based on your unique needs and security standards, ensuring it offers robust access controls and encryption to safeguard sensitive data.

The top strategies for securing data annotation:

Ensure Physical Security:

Maintain secure facilities with manned security and metal detectors.

Restrict access to the building outside office hours.

Use video cameras to monitor the physical security of the workplace.

Require identification badges and biometrics for employee entry.

Prohibit personal belongings and electronics in secure areas.

Monitor access to sensitive data and limit it to authorized project teams.

Use privacy screen filters on monitors to restrict data visibility.

Post reminders of critical security measures.

Implement Internal Security Measures:

Provide regular training sessions to educate annotators about current data security risks, phishing, password management, and the importance of security.

Check the backgrounds of the people labeling the data.

Require employees to sign and adhere to various security policies, including codes of ethics and NDAs.

Conduct regular security audits to find weaknesses in security and implement suggestions from security experts.

Implement Technical Security Measures:

Protect data using strong encryption like AES-256 to prevent unauthorized access.

Choose annotation software with built-in security features and follow standard security practices.

Don’t allow the annotation team to use personal devices at work.

Enable multi-factor authentication (MFA), which requires a second factor, such as a hardware token or an authenticator app, in addition to a password.

Limit access to sensitive data through role-based access control (RBAC) to reduce the risk of data leaks.
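The MFA and RBAC measures above can be combined into a single access check. The sketch below is illustrative only: the role names and permissions are hypothetical, and it uses time-based one-time passwords (TOTP, RFC 6238), the codes produced by authenticator apps and many hardware tokens, as the second factor. Hardware security keys based on FIDO2/WebAuthn work differently and are not shown. In production, rely on your identity provider or a vetted library rather than hand-rolled authentication code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

# Hypothetical role -> permission mapping; real deployments load this from an IAM system.
ROLE_PERMISSIONS = {
    "annotator": {"read_task", "submit_label"},
    "reviewer": {"read_task", "submit_label", "review_label"},
    "project_admin": {"read_task", "submit_label", "review_label", "export_dataset"},
}


def totp(secret: bytes, at: float, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 time-based one-time password using HMAC-SHA1."""
    counter = int(at // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation from RFC 4226
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, now: float, window: int = 1, step: int = 30) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock drift."""
    return any(
        hmac.compare_digest(totp(secret, now + drift * step, step), submitted)
        for drift in range(-window, window + 1)
        if now + drift * step >= 0
    )


def can_access(user: dict, action: str, project: str, otp: str, now: Optional[float] = None) -> bool:
    """Deny by default: the role must allow the action, the user must be on the project, and MFA must pass."""
    now = time.time() if now is None else now
    if action not in ROLE_PERMISSIONS.get(user["role"], set()):
        return False
    if project not in user["projects"]:
        return False
    return verify_totp(user["totp_secret"], otp, now)
```

With the RFC 6238 test secret `b"12345678901234567890"`, `totp(secret, 59, digits=8)` returns `"94287082"`, matching the RFC’s published test vector. Denying by default (an unknown role, an unassigned project, or a wrong code all return `False`) keeps a misconfiguration from silently granting access.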

Prioritize Cybersecurity:

Restrict internet access to necessary sites for each project.

Utilize proprietary chat tools for communication.

Conduct regular penetration tests and external audits to identify vulnerabilities.
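Restricting internet access per project is usually enforced at a proxy or firewall rather than in application code, but the rule such a proxy applies is simple. In this sketch, the project name and hostnames in `PROJECT_ALLOWLIST` are hypothetical: a request is allowed only over HTTPS and only when its host exactly matches an approved entry for that project.

```python
from urllib.parse import urlsplit

# Hypothetical per-project allowlists of hosts that annotators may reach.
PROJECT_ALLOWLIST = {
    "medical-ner": {"labeling.example.com", "docs.example.com"},
}


def is_allowed(url: str, project: str) -> bool:
    """Allow a request only if it uses HTTPS and its host is on the project's allowlist."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False  # refuse plaintext HTTP and non-web schemes outright
    host = (parts.hostname or "").lower()
    # Exact match, not a suffix or substring check, so lookalike domains are rejected.
    return host in PROJECT_ALLOWLIST.get(project, set())
```

Exact host matching is deliberate: a suffix or substring check would let a lookalike such as `labeling.example.com.evil.test` through, and an unknown project gets an empty allowlist, so every request is denied.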

Maintain Security Compliance:

Comply with applicable regulations such as GDPR and CCPA, and maintain recognized certifications such as ISO 27001.

Stay updated on security protocols and regulations to ensure compliance.

By following these steps, you can effectively enhance data labeling security and mitigate potential risks associated with sensitive data handling.

When outsourcing your data annotation tasks, pick a vendor that cares about keeping your data safe. Send your data to us for security-compliant data annotation!

Security and Data Annotation Risks

Choosing a reliable AI partner for your ML project is critical, because a low-quality provider that neglects data security during annotation can put your data at risk in several ways:

Annotators might access your data using an unsecured network or a device without proper protection against malware.

They could save parts of your data by taking screenshots and sharing them through social media or email.

Annotators might label your data while they’re in public areas.

Workers might not have enough training, understanding, or responsibility for following security procedures.

The data labeling company itself might lack data security certifications.

6 Security Questions for Your Data Labeling Provider

Here are some questions you should ask to make sure the company labeling your data takes security and privacy seriously:

How do you select and vet your data annotators? Do all of them sign a non-disclosure agreement (NDA) to keep my data confidential?

What steps do you take to prevent annotators from taking screenshots, downloading, or using my data elsewhere?

Is your workplace secure? How do workers enter, and who else can access it?

Can you provide a secure location for handling sensitive data?

How do you handle data that falls under special regulations like HIPAA or GDPR?

How do you ensure the quality and accuracy of labeling across different workers and datasets?

Make sure to ask these questions to find a data labeling company that meets your data labeling security needs. This way, you can focus more on your ML model development and avoid any potential security risks in data labeling.

How We Approach Data Security at Label Your Data

We leverage our extensive experience to help companies grow faster by offering data annotation services for their ML projects. We recognize the importance of building trust with our clients, so we prioritize the security and protection of your data above all.

Because the data we handle comes from various sources, it requires careful adherence to laws and regulations. We strictly follow GDPR in all our internal processes, including data labeling and additional services, and also while using our software. Additionally, we adhere to the industry’s information security standard ISO 27001, ensuring the confidentiality and integrity of our assets, such as our workforce, IT infrastructure, and workplace.

To safeguard cardholder data processed through our systems, Label Your Data complies with the Payment Card Industry Data Security Standard (PCI DSS) at Level 1. In addition, our data annotation company follows stringent HIPAA and CCPA compliance measures, ensuring the utmost protection and confidentiality of sensitive personal health and consumer data.

The key is finding an AI partner that values your data like you do. Our Label Your Data team offers the most secure data annotation services for your ML endeavors.

FAQ

How to address ethics in data annotation projects?

When annotating data ethically, it’s crucial to establish clear guidelines, prioritize diverse representation, obtain informed consent, and continually assess and mitigate potential biases and privacy concerns throughout the annotation process.

What are the most common security risks in data labeling, and what are the best ways to mitigate them?

The most common security risks in data labeling include:

Data exposure

Unauthorized access

Potential bias injection

To mitigate these risks, implementing strict access controls, anonymizing sensitive data, conducting regular audits, and using diverse annotation teams can help enhance data security and reduce bias.

What measures help organizations prioritize data security in their ML projects?

Organizations can prioritize data security in ML projects by implementing encryption protocols, access controls, and regular audits, and by ensuring compliance with data protection regulations like GDPR or CCPA. Additionally, fostering a culture of security awareness across the annotation team is crucial.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.