Types of LLMs: Classification Guide

TL;DR

- Top LLM families include GPT, BERT, PaLM, LLaMA, and Claude, tailored for diverse applications.

- Open-source models like LLaMA prioritize flexibility, while proprietary ones like GPT-4 ensure high performance.

- Domain-specific LLMs excel in healthcare, finance, and legal tasks with industry-focused precision.

- Key trends include multimodality, smaller models, and retrieval-augmented generation (RAG).

- Advances in architecture and deployment are making LLMs more efficient and accessible.

How Are Large Language Models (LLMs) Classified?

Some 67% of companies worldwide use generative AI powered by large language models (LLMs) for content creation, customer service, and data analysis. Yet only 23% have fully adopted these models commercially. Persistent privacy and ethical concerns hold the rest back, as does a limited understanding of the types of LLMs and their applications.

In this article, we’ll delve into the various families and types of LLM models, exploring how you can apply the best LLMs, the challenges you might face, and how LLM fine-tuning plays a crucial role in adapting these models to specific use cases.

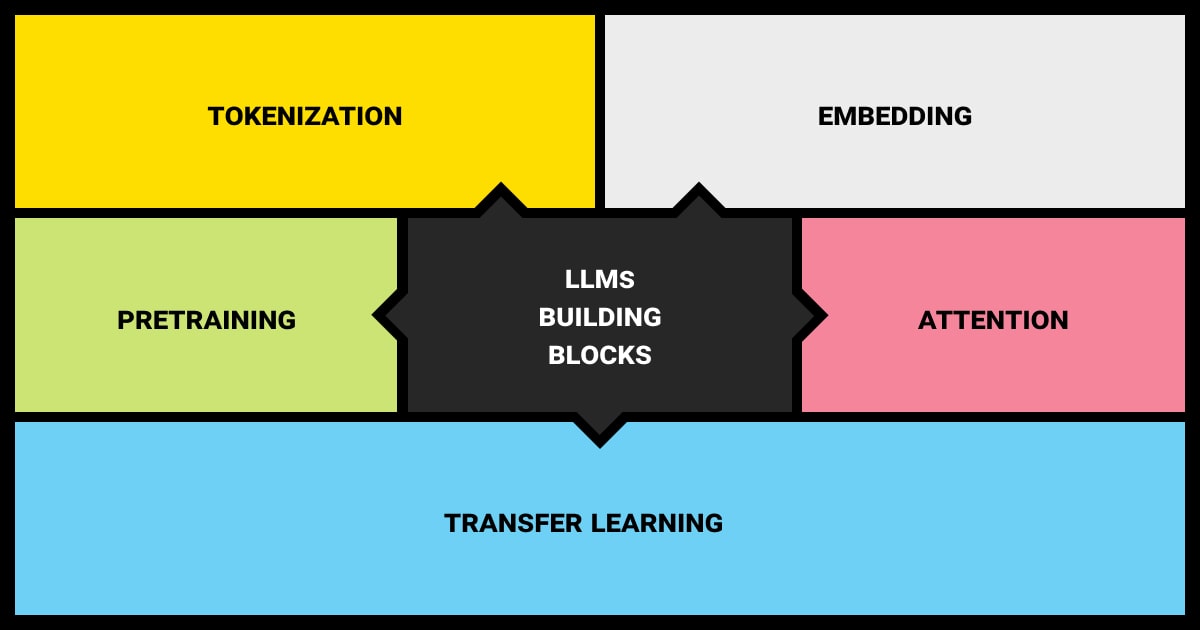

LLMs are usually classified along three dimensions: architecture, availability, and domain specificity.

Architecture-Based LLMs

Autoregressive Models

Autoregressive models, such as the GPT (Generative Pre-trained Transformer) series, generate text by predicting the next token in a sequence based on the preceding tokens. At each step they produce a probability distribution over possible next tokens and either pick the most likely one or sample from that distribution.

Key Feature: These models excel at generating fluent and contextually appropriate text. However, their left-to-right prediction method can sometimes cause issues with maintaining long-term coherence.
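The left-to-right loop described above can be sketched in a few lines. This is a minimal illustration, not how any GPT model is implemented: `TOY_LOGITS` is a hypothetical hand-written score table that looks only at the previous token, whereas a real LLM conditions on the whole preceding sequence.

```python
import math

# Hypothetical toy "model": raw scores (logits) for the next token,
# keyed by the previous token only. Invented for illustration.
TOY_LOGITS = {
    "<s>": {"the": 2.0, "a": 1.0},
    "the": {"cat": 2.5, "dog": 1.5},
    "a": {"cat": 1.0, "dog": 2.0},
    "cat": {"sleeps": 2.0, "</s>": 0.5},
    "dog": {"barks": 2.0, "</s>": 0.5},
    "sleeps": {"</s>": 3.0},
    "barks": {"</s>": 3.0},
}


def softmax(logits):
    """Turn raw scores into a probability distribution over next tokens."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def generate_greedy(max_tokens=10):
    """Greedy decoding: repeatedly append the most probable next token."""
    tokens = ["<s>"]
    for _ in range(max_tokens):
        probs = softmax(TOY_LOGITS[tokens[-1]])
        next_token = max(probs, key=probs.get)
        if next_token == "</s>":  # end-of-sequence marker stops generation
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker


print(generate_greedy())  # ['the', 'cat', 'sleeps']
```

Replacing the `max(...)` line with a random draw weighted by `probs` would turn greedy decoding into sampling, which is how more varied output is usually obtained.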

Autoencoding Models

Autoencoding models, such as BERT (Bidirectional Encoder Representations from Transformers), grasp the context of words in a sentence by predicting masked tokens. They are trained by masking some input tokens and having the model predict these masked tokens using the surrounding context.

Key Feature: These models are particularly strong in tasks that need a deep understanding of context and semantics, including sentiment analysis, question answering, and named entity recognition.
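The masking step of this training objective can be sketched as follows. It is a simplified illustration: the function name, the `seed` parameter, and the use of `None` for unscored positions are assumptions made for this example, and BERT's actual recipe also sometimes swaps a selected token for a random one or leaves it unchanged.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (masked_tokens, labels).

    labels holds the original token at masked positions and None elsewhere,
    so a model would only be scored on the positions it had to fill in.
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(token)
        else:
            masked.append(token)
            labels.append(None)
    return masked, labels


masked, labels = mask_tokens("the cat sat on the mat".split(), mask_prob=0.3)
print(masked)
print(labels)
```

During training, the model sees `masked` and is asked to recover the non-`None` entries of `labels`, using context on both sides of each gap.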

Seq2Seq Models

Sequence-to-sequence (Seq2Seq) models, like T5 (Text-To-Text Transfer Transformer), are designed for tasks where the input and output are sequences, such as translation, summarization, and text generation. They typically consist of an encoder and a decoder. The encoder processes the input sequence, and the decoder generates the output sequence.

Key Feature: Great for tasks that involve transforming one type of text into another, such as translating languages or converting long text into summaries.
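The encoder-decoder split can be shown with a deliberately tiny stand-in. Nothing here is learned: `TOY_LEXICON` is a hypothetical word-for-word lookup invented for this sketch, used only to show the control flow in which the encoder reads the whole input once and the decoder then emits the output one token at a time.

```python
EOS = "</s>"

# Hypothetical word-level lookup standing in for learned parameters.
TOY_LEXICON = {"hello": "bonjour", "world": "monde"}


def encode(source_tokens):
    """Encoder: read the full input and return one state per source token."""
    return [TOY_LEXICON.get(token, token) for token in source_tokens]


def decode_step(encoder_states, generated):
    """Decoder: choose the next output token from the encoder states
    and the tokens generated so far."""
    position = len(generated)
    if position < len(encoder_states):
        return encoder_states[position]
    return EOS  # nothing left to emit


def translate(source_tokens, max_len=20):
    encoder_states = encode(source_tokens)  # the encoder runs once
    output = []
    for _ in range(max_len):  # the decoder runs step by step
        token = decode_step(encoder_states, output)
        if token == EOS:
            break
        output.append(token)
    return output


print(translate(["hello", "world"]))  # ['bonjour', 'monde']
```

In a model such as T5, both functions are transformer stacks and the decoder attends over the encoder states rather than indexing into them, but the two-phase shape is the same.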

Availability-Based LLMs

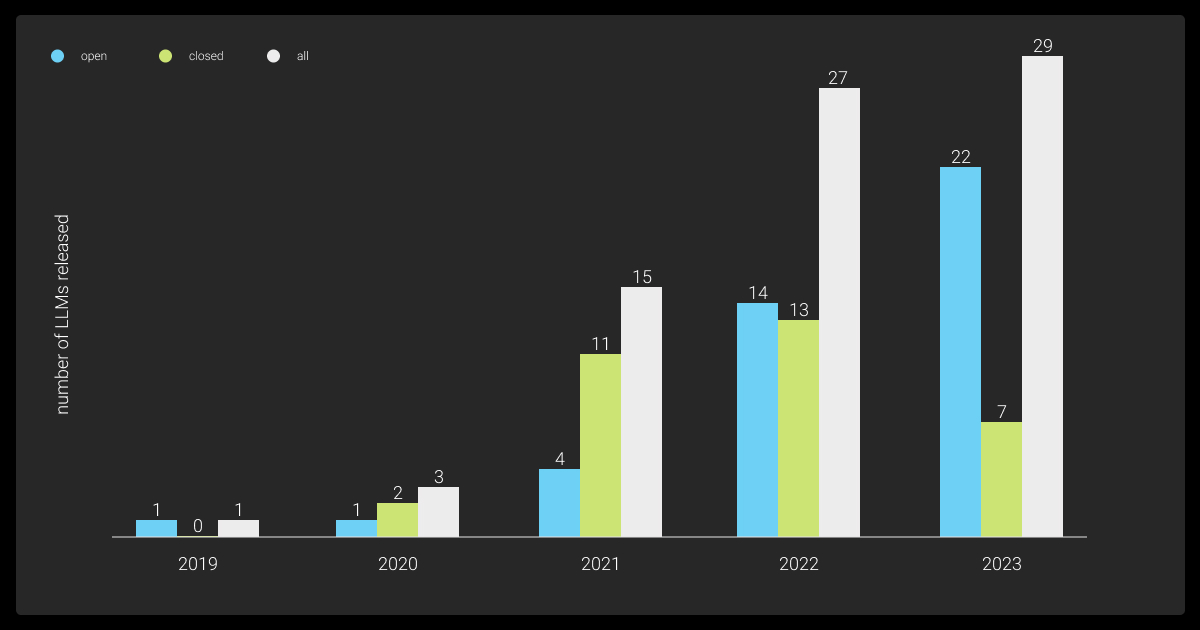

Open-Source Models

Open-source LLMs are freely available for anyone to use, modify, and distribute. They typically have a community of developers and researchers who contribute to their development and offer support.

The main advantages of open-source models include transparency, flexibility, and the ability to customize the models for specific needs. The most popular examples are LLaMA (Large Language Model Meta AI), BLOOM (BigScience Large Open-science Open-access Multilingual Language Model), and Falcon.

Proprietary Models

These LLMs are developed and maintained by private organizations and are typically available through commercial licenses or subscriptions. Access to these models is often restricted, and the underlying code and data are not publicly shared.

Due to substantial investments in research and development of proprietary LLMs, these models demonstrate high performance and robustness. Users also receive dedicated support and updates from the provider. The well-known examples are GPT-4 (by OpenAI), PaLM (Pathways Language Model by Google), and Claude (by Anthropic).

Domain-Specific LLMs

General-Purpose Models

These models are versatile and capable of handling a wide array of tasks across multiple domains. Trained on diverse datasets, they excel in text generation, translation, summarization, and question answering, among other language-related tasks.

General-purpose LLM models are used in applications where versatility and adaptability are crucial, such as chatbots, virtual assistants, and general text analysis.

Domain-Specific Models

Domain-specific LLMs are tailored for particular industries or fields, where specialized knowledge and terminology are essential. Examples include:

- Healthcare: Models trained on medical literature and patient data to assist in diagnosis, treatment recommendations, and medical research.

- Finance: Models created to analyze financial reports, market data, and economic trends, aiding in investment decisions and risk management.

- Legal: Models that can understand legal texts, assist in contract analysis, and support legal research by identifying relevant case laws and regulations.

These models are highly optimized for their specific domains, providing more accurate and relevant results compared to general-purpose models. They incorporate industry-specific data and terminology for tackling specialized tasks.

The Best LLM Families: Everything You Need to Know

The best LLM families have their own strengths, weaknesses, and a wide range of applications. From OpenAI’s GPT series, known for powerful text generation, to Google’s BERT family, excelling in context understanding, we’ll highlight the unique features and uses of these and other top LLM models.

GPT Family (OpenAI)

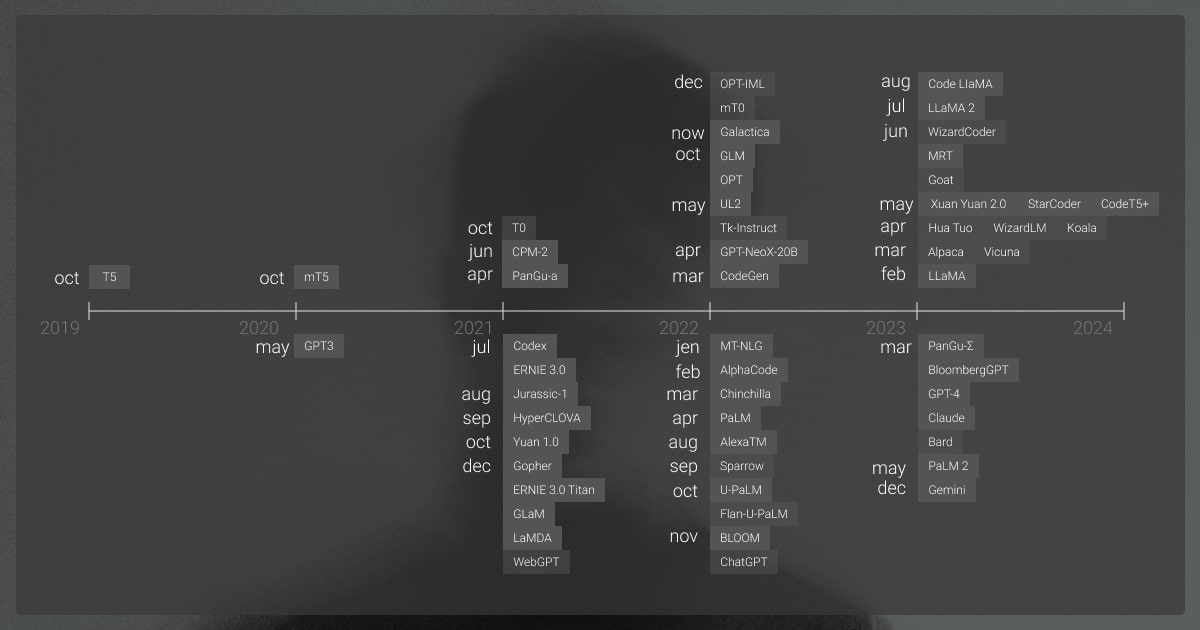

The GPT (Generative Pre-trained Transformer) family, developed by OpenAI, includes a series of autoregressive language models known for their generative capabilities:

- GPT-1, released in 2018, applied generative pre-training to a transformer decoder with 117 million parameters

- GPT-2, released in 2019, increased model size to 1.5 billion parameters, improving performance

- GPT-3, with 175 billion parameters, showcased advanced text generation capabilities

- GPT-4, released in 2023, added multimodal capabilities, processing both text and images, and improved fine-tuning techniques

Use Cases

GPT models are major LLMs used in applications like chatbots, content creation, and code generation. GPT-3 has been widely used for virtual assistants, customer service, and creative content. Performance metrics include perplexity and human evaluations for text coherence and relevance.
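Perplexity, mentioned above, is the exponential of the average negative log-probability the model assigns to each token of a reference text. A minimal sketch, assuming the per-token probabilities have already been obtained from a model (the function name and input format are choices made for this example):

```python
import math


def perplexity(token_probs):
    """Perplexity = exp(average negative log-probability per token)."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# A model that is as unsure as a fair four-way guess at every step
# has a perplexity of about 4.
print(perplexity([0.25, 0.25, 0.25, 0.25]))
```

Lower is better: a perplexity of 1 would mean the model assigned probability 1 to every token that actually appeared.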

InstructGPT and ChatGPT

InstructGPT and ChatGPT are fine-tuned variants of OpenAI's GPT-3 and GPT-3.5 models, built for following instructions and engaging in conversations. InstructGPT is fine-tuned with human feedback to understand and follow user instructions, while ChatGPT is optimized for dialogue and suits interactive tasks like customer support and tutoring.

BERT Family (Google)

BERT (Bidirectional Encoder Representations from Transformers), developed by Google, is known for its bidirectional training approach. Unlike traditional models that read text sequentially, BERT processes text in both directions, allowing it to understand context more comprehensively.

Variants:

- RoBERTa (Robustly Optimized BERT Pretraining Approach): Introduced by Facebook AI, RoBERTa enhances BERT by training on more data and for longer periods, improving performance on various NLP tasks.

- DistilBERT: Developed by HuggingFace, DistilBERT reduces BERT’s size while retaining 97% of its language understanding capabilities, making it faster and more efficient.

- ELECTRA (Efficiently Learning an Encoder that Classifies Token Replacements Accurately): This model, also from Google, focuses on detecting replaced tokens rather than predicting masked tokens, achieving high performance with fewer computational resources.
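ELECTRA's replaced-token detection differs from masked-token prediction in what the model is asked to output: a yes/no label for every position rather than a word for a few positions. The labeling side of that task can be sketched as below. The function name is hypothetical, and in the real method the corrupted sentence comes from a small generator network rather than being written by hand.

```python
def replaced_token_labels(original, corrupted):
    """Return 1 where a token was replaced and 0 where it is unchanged."""
    if len(original) != len(corrupted):
        raise ValueError("sequences must be the same length")
    return [int(o != c) for o, c in zip(original, corrupted)]


original = "the chef cooked the meal".split()
corrupted = "the chef ate the meal".split()  # "cooked" swapped for "ate"
print(replaced_token_labels(original, corrupted))  # [0, 0, 1, 0, 0]
```

Because every position carries a label, each training sentence provides a learning signal for all of its tokens, which is one reason the approach is considered sample-efficient.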

Use Cases

BERT and its variants are widely used for tasks like search query completion, text data annotation, and encoding. They excel in understanding the context within a text, making them ideal for improving search engines, sentiment analysis, and other tasks requiring deep text comprehension.

PaLM Family (Google)

PaLM (Pathways Language Model) is a Google LLM family first released in 2022. Its largest model is a dense, decoder-only transformer with 540 billion parameters. It was trained with Google's Pathways system, which coordinates training efficiently across multiple TPU pods.

Use Cases

PaLM excels in various NLP tasks, including translation, summarization, and question answering. Compared to models like GPT-3, PaLM has shown superior performance in certain benchmarks due to its advanced training techniques and larger model size. However, it also highlights the importance of efficient data utilization to achieve state-of-the-art performance without disproportionately increasing model size.

Gemini (Google DeepMind; the chatbot was previously called Bard)

Gemini is a family of multimodal models from Google DeepMind, first released in December 2023 in Ultra, Pro, and Nano sizes. It was built to work with text, images, audio, video, and code, and Google reported state-of-the-art results on a range of benchmarks at launch. Gemini is designed to handle more complex tasks with improved contextual understanding and generative capabilities.

PaLM and Gemini are both Google model families, and Bard is the chatbot that links them. Bard launched in 2023 on Google's LaMDA model, moved to PaLM 2 later that year, and began running on Gemini Pro in December 2023. Google renamed the Bard chatbot to Gemini in February 2024. Essentially, Gemini represents a more advanced evolution of Google's LLM technology after PaLM, with enhanced capabilities in complex tasks like coding and creative writing.

LLaMA Family (Meta AI)

The LLaMA (Large Language Model Meta AI) family, developed by Meta AI, focuses on creating highly efficient and accessible LLMs:

- LLaMA 1, released in 2023, was initially for academic research but became widely used after its weights were leaked

- LLaMA 2, an improved version, was made publicly available under a license permitting commercial use

Use Cases

LLaMA 1 models range from 7 billion to 65 billion parameters, and LLaMA 2 models from 7 billion to 70 billion. They are trained on more tokens than is typical for their size, which gives strong performance at a relatively low inference cost. They are widely used in research and commercial applications, including AI-driven chatbots, content creation, and more. The smaller LLaMA 2 variants, in particular, have been praised for their efficiency and ease of deployment on consumer-grade hardware.

Claude Family (Anthropic)

The Claude family, developed by Anthropic, includes models like Claude 3, with variants named Haiku, Sonnet, and Opus. These models focus on safety and alignment to reduce the risks associated with the use of AI.

Use Cases

Claude models incorporate extensive safety measures and are fine-tuned to avoid generating harmful or biased content. They are used in applications where ethical considerations are paramount, such as sensitive data handling, educational tools, and healthcare support. The Claude family is notable for its focus on creating responsible AI systems that prioritize user safety and ethical use.

Which LLM is the Best for Specific NLP Tasks: A Cheat Sheet

| LLM Family | Primary Use Cases | Key Features |
| --- | --- | --- |
| GPT (OpenAI) | Content creation, chatbots, code generation | Autoregressive, large-scale, high-quality text generation |
| InstructGPT/ChatGPT (OpenAI) | Customer support, interactive applications | Instruction-following, optimized for dialogue |
| BERT (Google) | Search query completion, text annotation, sentiment analysis | Bidirectional, deep contextual understanding |
| RoBERTa (Facebook AI) | Enhanced text annotation, encoding | Optimized BERT, trained on more data |
| DistilBERT (Hugging Face) | Fast and efficient text analysis | Smaller, faster BERT variant |
| ELECTRA (Google) | Token classification, efficient pre-training | Detects replaced tokens, computationally efficient |
| PaLM (Google) | Translation, summarization, question answering | Dense decoder-only transformer, trained with the Pathways system |
| LLaMA (Meta AI) | AI-driven chatbots, content creation | Efficient at inference, accessible |
| Claude (Anthropic) | Sensitive data handling, educational tools, healthcare support | Safety and alignment focused, ethical AI development |

Technical Considerations for Deploying Top LLMs

Deploying the best LLM models requires careful consideration of various technical factors to ensure they perform optimally and meet compliance requirements.

Infrastructure Requirements for LLM Deployment

Hardware

Deploying LLMs, especially large-scale ones like GPT-3 or PaLM, requires significant computational resources. This includes high-performance GPUs or TPUs, ample memory (RAM), and substantial storage to handle large datasets.
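A quick way to see why the hardware demands are so high is to estimate the memory needed just to hold the weights: parameter count multiplied by bytes per parameter. The sketch below is back-of-the-envelope arithmetic, with a hypothetical helper name; it ignores activations, the attention cache, and any framework overhead, so real requirements are higher.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes).

    bytes_per_param: 4 for 32-bit floats, 2 for 16-bit, 1 for 8-bit.
    """
    return num_params * bytes_per_param / 1e9


gpt3_params = 175_000_000_000  # GPT-3 size quoted earlier in this article
for label, width in [("32-bit", 4), ("16-bit", 2), ("8-bit", 1)]:
    print(f"{label}: {weight_memory_gb(gpt3_params, width):.0f} GB")
```

At 16-bit precision, a 175-billion-parameter model needs roughly 350 GB for weights alone, which is more than any single accelerator offers today and is why such models are split across several devices.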

Software

The software stack includes machine learning frameworks (such as TensorFlow or PyTorch), containerization tools (like Docker), and orchestration systems (such as Kubernetes) to manage and scale the deployments effectively.

Cloud Deployment

Cloud deployment provides scalability, flexibility, and lower initial costs. It offers access to advanced infrastructure and services without needing physical hardware. Popular providers like AWS, Google Cloud, and Azure offer specialized AI and ML services for LLM deployment.

On-Premise Deployment

On-premise deployment offers greater control over data and infrastructure, which is essential for organizations with strict data privacy and security needs. However, it requires a significant upfront investment and ongoing maintenance of hardware and software.

Scalability and Maintenance Strategies for LLMs

Best Practices for Scaling LLM Deployments:

- Horizontal Scaling: Distributing the load across multiple machines to handle increased traffic and data volume.

- Autoscaling: Setting up policies to automatically adjust computing resources based on demand.

- Load Balancing: Using load balancers to evenly distribute requests across servers, ensuring optimal performance and reliability.
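Two of the practices above, autoscaling and load balancing, can be sketched in a few lines. The scaling rule shown is the proportional one used by tools such as the Kubernetes Horizontal Pod Autoscaler; the function names, the replica limits, and the server names are assumptions for this example rather than a recommended configuration.

```python
import itertools
import math


def desired_replicas(current_replicas, current_load, target_load,
                     min_replicas=1, max_replicas=10):
    """Proportional autoscaling rule, clamped to the allowed range.

    current_load and target_load are per-replica values of the same metric,
    for example requests per second or GPU utilization.
    """
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    wanted = math.ceil(current_replicas * current_load / target_load)
    return max(min_replicas, min(max_replicas, wanted))


def round_robin(servers):
    """Simplest load-balancing policy: hand out servers in rotation."""
    return itertools.cycle(servers)


# Four replicas each seeing 90 requests/s against a target of 60 requests/s.
print(desired_replicas(4, 90, 60))  # 6

balancer = round_robin(["llm-a", "llm-b", "llm-c"])  # hypothetical server names
print([next(balancer) for _ in range(4)])  # ['llm-a', 'llm-b', 'llm-c', 'llm-a']
```

Round-robin treats every request as equally expensive. LLM requests vary widely in length, so production balancers often route by queue depth or active token count instead.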

Maintenance and Version Control:

- Regular Updates: Keeping models and dependencies updated to leverage improvements and security patches.

- Monitoring and Logging: Setting up strong practices to track performance, detect problems, and fix issues.

- Version Control: Using version control systems for models and code to manage changes, rollback updates, and collaborate effectively.

LLM Challenges and Risks

Bias, Fairness, and Ethical Considerations:

- Bias Mitigation: Using methods to find and reduce biases in LLM outputs, promoting fairness and inclusivity.

- Transparency: Providing transparency in model decisions and maintaining documentation on data sources and training processes.

Regulatory Compliance and Data Privacy Issues:

- Data Protection: Complying with GDPR and CCPA by anonymizing data and handling it securely.

- Regulatory Compliance: Following industry rules to avoid legal issues and ensure ethical practices.
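As one small piece of the data-protection work listed above, obvious identifiers can be stripped from text before it is logged or used for training. The patterns below are illustrative assumptions that catch common email and phone formats only. Pattern-based redaction on its own does not make a pipeline GDPR- or CCPA-compliant.

```python
import re

# Illustrative patterns; they will miss unusual formats and other kinds of
# personal data such as names and addresses.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Contact jane.doe@example.com or +1 (555) 010-9999."))
# Contact [EMAIL] or [PHONE].
```

Dedicated tooling for detecting personal data, combined with access controls and retention policies, is usually needed on top of a filter like this.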

Handling Large-Scale Data:

- Data Management: Efficiently managing and processing large volumes of data, including preprocessing, storage, and retrieval.

- Scalability: Ensuring the infrastructure can scale to accommodate growing data sizes and model complexities.

Ensuring Robustness and Reliability in Production Environments:

- Testing: Implementing comprehensive testing frameworks to validate model performance and reliability under various scenarios.

- Fault Tolerance: Designing systems to be fault-tolerant, with redundancy and failover mechanisms to ensure continuous operation during failures.

- Security: Protecting models and data from cyber threats through stringent security measures.
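The failover idea in the list above can be sketched as a wrapper that retries a primary model endpoint and then switches to a backup. The callables and the retry count are placeholders for this example. A production version would catch specific error types, add backoff between attempts, and log each failure.

```python
def call_with_failover(primary, fallback, prompt, retries=2):
    """Try `primary` up to `retries` times, then use `fallback`."""
    for _ in range(retries):
        try:
            return primary(prompt)
        except Exception:  # broad on purpose for the sketch
            continue
    return fallback(prompt)


def flaky_model(prompt):
    """Stand-in for an endpoint that is currently down."""
    raise TimeoutError("primary endpoint timed out")


def backup_model(prompt):
    """Stand-in for a smaller backup model."""
    return f"[backup] {prompt}"


print(call_with_failover(flaky_model, backup_model, "Summarize this ticket."))
# [backup] Summarize this ticket.
```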

These technical considerations are essential for successfully deploying and operating LLMs in production.

Current Trends in Large Language Models

The field of large language models (LLMs) is rapidly evolving, with several emerging trends on the horizon:

Multimodality

LLMs are increasingly handling multiple data formats (text, audio, images, video), enhancing their versatility in applications like virtual assistants and content creation.

Small Language Models (SLMs)

SLMs are more efficient and can be trained using less computational power. They are suitable for deployment on devices with limited resources and can be tailored for specific tasks.

Cost Reduction

Efforts are underway to lower the cost of training and running an LLM. Companies like OpenAI and Anthropic are reducing prices, making advanced AI more accessible.

Direct Preference Optimization (DPO)

DPO is an emerging technique for aligning model outputs with human preferences more efficiently than traditional reinforcement learning methods.
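The DPO objective for a single preference pair can be written down directly. Given a prompt with a preferred ("chosen") and a dispreferred ("rejected") response, the loss is the negative log-sigmoid of a scaled difference between how much the model being trained and a frozen reference model favor each response. The sketch below assumes the sequence log-probabilities are already computed; the argument names and the default `beta` are choices made for this example.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair of responses.

    Each argument is the total log-probability a model assigns to a response.
    beta controls how far the policy may drift from the reference model.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(z)) computed in a numerically stable form
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))


# The policy equals the reference, so there is no preference signal yet.
print(dpo_loss(-5.0, -5.0, -5.0, -5.0))
# The policy has shifted probability toward the chosen response.
print(dpo_loss(-4.0, -6.0, -5.0, -5.0))
```

The first call returns log 2 (about 0.693), and the second returns a smaller value because the policy already prefers the chosen answer. Training minimizes this loss directly with gradient descent, with no separate reward model or reinforcement learning loop.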

Autonomous Agents

Autonomous agents that interact with LLMs to perform tasks independently are becoming more advanced, enabling complex task automation without continuous human input.

Robotics Integration

AI integration in robotics, through vision-language-action models, allows robots to understand and execute commands based on visual and textual inputs.

Custom Chatbots

Platforms like OpenAI and Hugging Face are enabling users to create personalized AI assistants, tailored to specific needs.

Consumer Applications

Generative AI is being embedded in consumer applications, enhancing products like Grammarly and HubSpot with AI-driven insights and capabilities.

Retrieval Augmented Generation (RAG)

RAG enhances LLMs by connecting them to external knowledge bases, improving their ability to provide accurate and up-to-date information.
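The retrieve-then-generate flow can be sketched without any model at all. The example below ranks documents by simple word overlap with the question and places the best match in the prompt. Real systems typically rank with vector embeddings and send the prompt to an LLM; the function names, prompt wording, and sample documents here are assumptions for illustration.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, documents, top_k=1):
    """Rank documents by how many query words they share (toy scoring)."""
    query_terms = tokenize(query)
    ranked = sorted(documents,
                    key=lambda doc: len(query_terms & tokenize(doc)),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(query, documents, top_k=1):
    """Put the retrieved passages ahead of the question."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


knowledge_base = [
    "PaLM was released by Google in 2022.",
    "LLaMA 2 permits commercial use.",
    "Claude 3 has Haiku, Sonnet, and Opus variants.",
]
print(build_prompt("Which license does LLaMA 2 permit?", knowledge_base))
```

Because the knowledge base sits outside the model, updating an answer means editing a document rather than retraining, which is the main practical appeal of RAG.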

These trends indicate that top large language models will continue to integrate more deeply into various aspects of daily life and business operations, driving innovation and efficiency across multiple sectors.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance based on a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, even your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

What is an LLM, and what are the types of LLMs?

An LLM, or Large Language Model, is a type of AI model trained on vast amounts of text data to understand and generate human-like language. Types of LLMs include autoregressive models (e.g., GPT), masked language models (e.g., BERT), and sequence-to-sequence models (e.g., T5).

What are LLM examples?

Popular examples of LLMs include GPT-4 by OpenAI, BERT by Google, PaLM 2 by Google, Claude by Anthropic, and LLaMA by Meta.

Which LLM is best?

The best LLM depends on the task. For text generation, GPT-4 is highly recommended. For contextual understanding, models like BERT or RoBERTa perform well. For scalability and efficiency, consider PaLM 2.

Which LLM is the most advanced?

Currently, GPT-4 and PaLM 2 are among the most advanced LLMs, excelling in text generation, contextual understanding, and multimodal tasks.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.