CloudFactory vs. Humans in the Loop: Selecting the Best Data Labeling Vendor

Table of Contents

- CloudFactory vs. Humans in the Loop: Company Profiles

- Services and Products

- Pricing Models

- Dataset Types

- Data Annotation Tools

- Integrations

- Annotation Process

- Quality Assurance

- Security and Data Compliance

- TL;DR

- FAQ

- Which company offers a better combination of experience and cost for data labeling: CloudFactory or Humans in the Loop?

- If my project requires a significant increase in labeling volume, which company can better scale their workforce to meet my needs?

- Which company offers more responsive customer support?

In the rapidly expanding field of data labeling for machine learning, CloudFactory and Humans in the Loop stand out as top service providers. CloudFactory taps into a global talent pool to deliver scalable solutions for companies like Hummingbird Technologies and Drive.ai. Conversely, Humans in the Loop excels with a dedicated team focused on high-quality annotations for clients such as TrialHub and Imperial College London.

To save you time and effort, we've compared their offerings to help you determine which data labeling company is the best fit for your ML project.

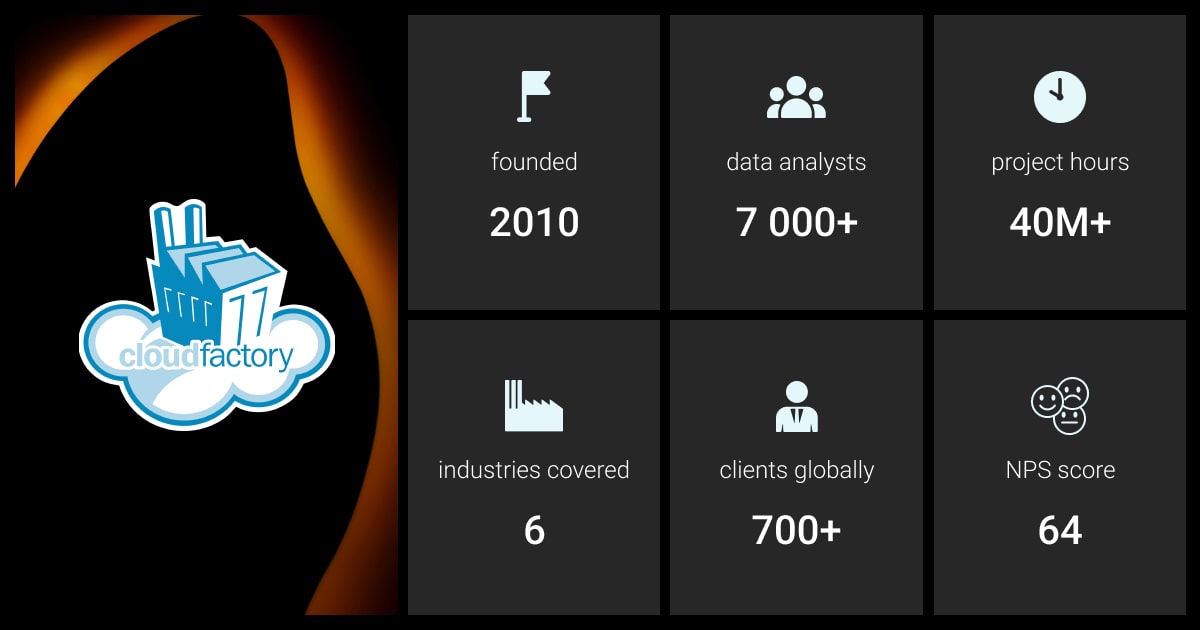

CloudFactory vs. Humans in the Loop: Company Profiles

Feature | CloudFactory | Humans in the Loop |

Founded | 2010 | 2017 |

Headquarters | Kowloon, China | Sofia, Bulgaria |

Market Focus | | |

Global Presence | Offices in the UK, US, Nepal, and Kenya | Specialists in Malaysia, Ukraine, Turkey, Bulgaria, and the Middle East |

Workforce Size | 7,000+ specialists | 250+ specialists |

CloudFactory Company

CloudFactory offers human-in-the-loop (HITL) data labeling solutions, leveraging a global, on-demand workforce supported by AI technology. With a talent pool of over 7,000 data annotators, CloudFactory is trusted by more than 700 AI companies. Established in 2010 by Mark Sears, the company has a presence in the UK, US, Nepal, and Kenya. As of 2024, Kevin Johnston has taken on the role of CEO, with Sears transitioning to Executive Chairman.

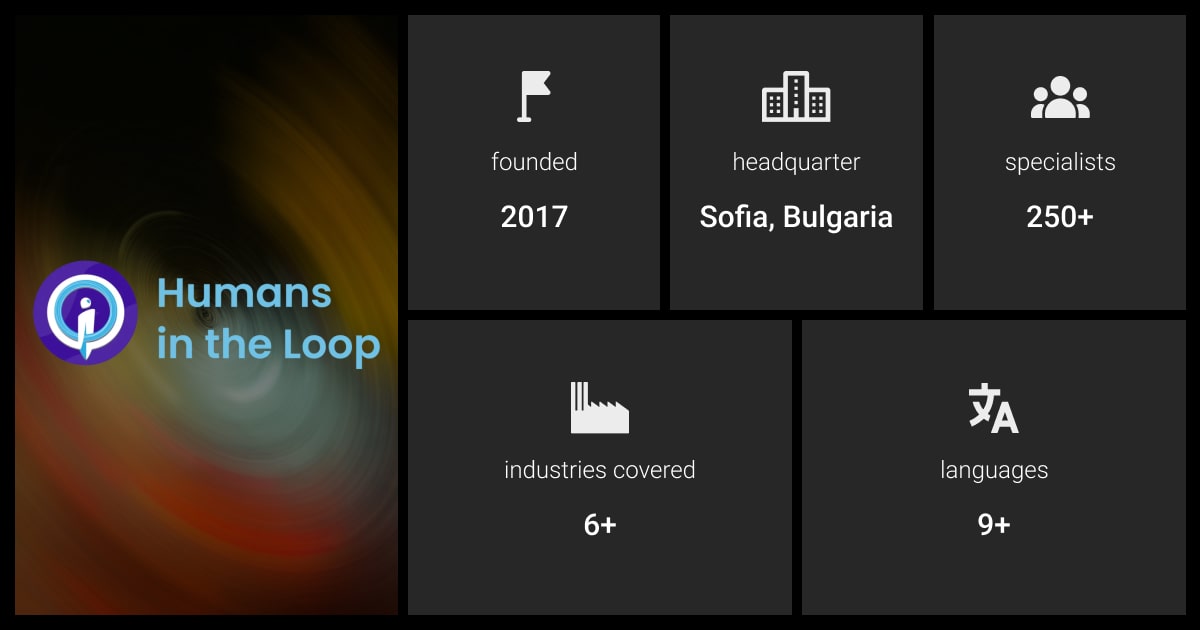

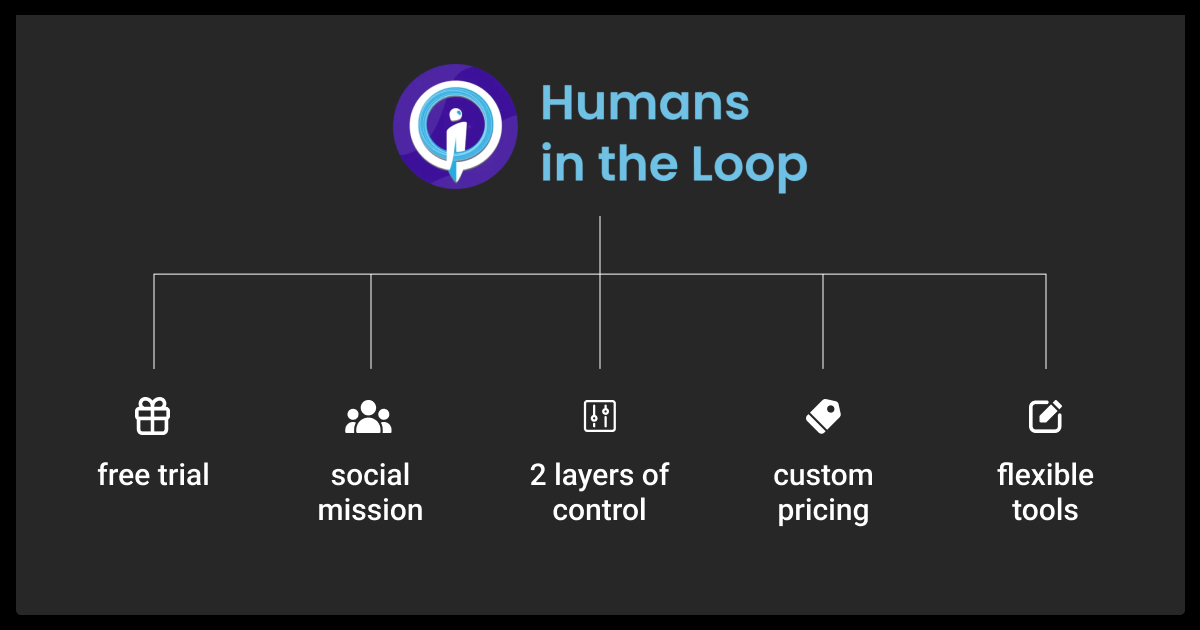

Humans in the Loop Company

Humans in the Loop is a social enterprise dedicated to ethical dataset annotation and AI model validation services. Headquartered in Sofia, Bulgaria, the company employs over 250 specialists across locations including Malaysia, Ukraine, Turkey, and the Middle East. A core mission of Humans in the Loop is to support individuals from conflict-affected regions and those displaced by providing meaningful job opportunities.

Services and Products

CloudFactory Services and Products

CloudFactory offers a wide range of human-led AI solutions, including data curation, annotation, quality assurance, and model optimization. While CloudFactory specializes mainly in computer vision data annotation, their support for natural language processing (NLP) is more limited, especially for languages other than English. Their key services consist of:

Data Labeling:

- Accelerated Annotation

- Workforce Plus (workforce + tech)

- Vision AI Managed Workforce

- NLP

- Data Processing

Human-in-the-Loop Automation:

- Managed Workforce

In terms of product offerings, CloudFactory expanded its portfolio by acquiring Hasty in 2022. It’s a data-centric machine learning platform tailored for computer vision applications, providing AI-powered image annotation, quality control, and a no-code model building solution.

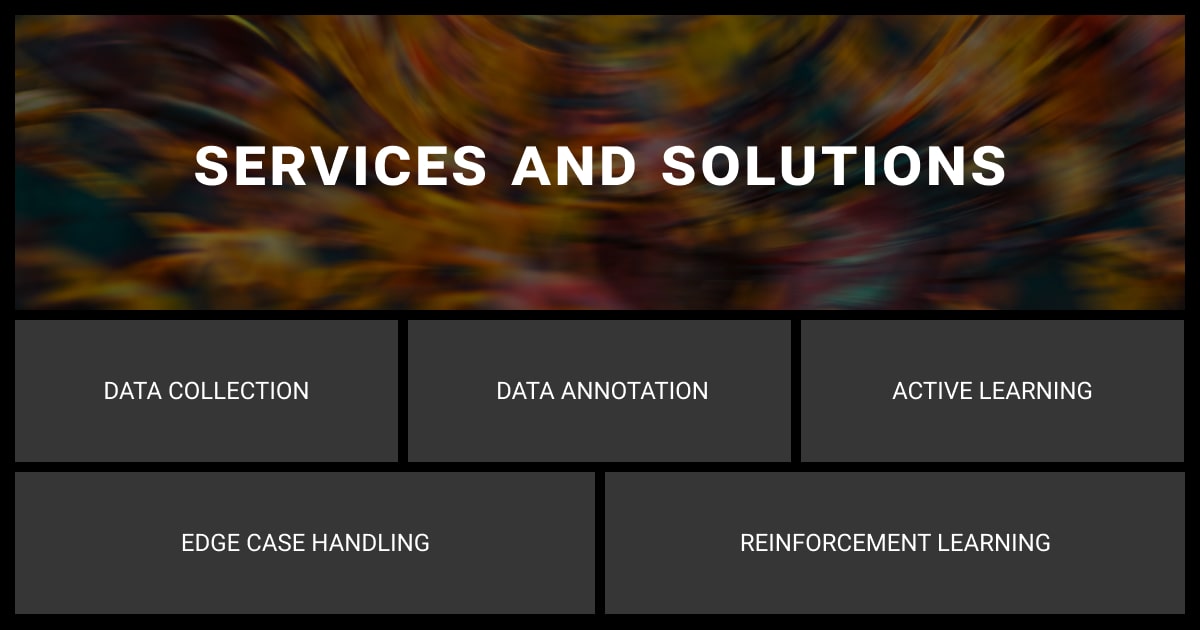

Humans in the Loop Services and Products

Humans in the Loop provides data labeling services with a strong focus on computer vision. Their core activities encompass:

- Dataset annotation

- Dataset collection

- Human-in-the-loop for active learning

- Real-time edge case handling

- Reinforcement learning with human feedback

For projects centered on computer vision, Humans in the Loop offers more than just data annotation. They can also gather data, review your final models, and support testing prior to deployment.

Pricing Models

Feature | CloudFactory | Humans in the Loop |

Pricing Structure | Per-object rate for computer vision tasks, an hourly rate for NLP tasks, a yearly contract with monthly billing | Depending on the task, per task or per hour |

Pricing Details | | |

Free pilot | No | Yes |

Additional Notes | | |
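Because CloudFactory bills computer vision work per object and NLP work per hour, while Humans in the Loop quotes per task or per hour, it can help to estimate your project under both models before requesting quotes. The sketch below does that arithmetic; every rate and throughput figure in it is a hypothetical placeholder, not a price from either vendor.

```python
# Rough cost comparison between per-object and hourly pricing.
# All rates and throughput numbers below are HYPOTHETICAL placeholders.


def per_object_cost(num_objects: int, rate_per_object: float) -> float:
    """Per-object model: you pay for every labeled object (e.g., each bounding box)."""
    return num_objects * rate_per_object


def hourly_cost(num_items: int, items_per_hour: float, hourly_rate: float) -> float:
    """Hourly model: estimated annotator hours multiplied by the hourly rate."""
    hours = num_items / items_per_hour
    return hours * hourly_rate


if __name__ == "__main__":
    images = 10_000
    objects_per_image = 5  # hypothetical average object density
    cost_a = per_object_cost(images * objects_per_image, rate_per_object=0.04)
    cost_b = hourly_cost(images, items_per_hour=60, hourly_rate=6.0)
    print(f"Per-object estimate: ${cost_a:,.2f}")
    print(f"Hourly estimate:     ${cost_b:,.2f}")
```

Per-object costs grow linearly with the number of objects per image, so the comparison between the two models tends to shift with how densely your images are annotated.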

Dataset Types

CloudFactory Dataset Types

CloudFactory's data labeling services cover both computer vision and NLP datasets. For import, they support annotation formats such as PNG masks, JSON, and COCO; for export, they offer COCO, Pascal VOC, JSON, and PNG masks. The company handles 2D image and video data, working with image formats including PNG, JPG, WEBP, HEIC, BMP, and TIFF, as well as all video formats compatible with FFmpeg.
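COCO and Pascal VOC describe the same bounding box differently, which matters when you load a vendor's export into your own pipeline. The following sketch assumes a minimal COCO-style JSON export (only the `categories` and `annotations` fields are used) and shows how to count labels per class and convert COCO's `[x, y, width, height]` boxes to Pascal VOC-style corner coordinates. It is a generic illustration of the formats, not CloudFactory's export code.

```python
import json
from collections import Counter

# A tiny COCO-style export (illustrative data, not real vendor output).
SAMPLE_EXPORT = """
{
  "images": [{"id": 1, "file_name": "street.jpg", "width": 640, "height": 480}],
  "categories": [{"id": 1, "name": "car"}, {"id": 2, "name": "person"}],
  "annotations": [
    {"id": 10, "image_id": 1, "category_id": 1, "bbox": [100, 50, 200, 100]},
    {"id": 11, "image_id": 1, "category_id": 1, "bbox": [350, 60, 120, 80]},
    {"id": 12, "image_id": 1, "category_id": 2, "bbox": [20, 200, 40, 120]}
  ]
}
"""


def coco_to_voc(bbox):
    """COCO [x_min, y_min, width, height] -> Pascal VOC (xmin, ymin, xmax, ymax).

    Pascal VOC XML traditionally stores 1-based integer pixel coordinates;
    this sketch ignores that offset and keeps the values as-is.
    """
    x, y, w, h = bbox
    return (x, y, x + w, y + h)


def labels_per_class(coco: dict) -> Counter:
    """Count annotations per category name."""
    names = {c["id"]: c["name"] for c in coco["categories"]}
    return Counter(names[a["category_id"]] for a in coco["annotations"])


coco = json.loads(SAMPLE_EXPORT)
print(labels_per_class(coco))
for ann in coco["annotations"]:
    print(ann["id"], coco_to_voc(ann["bbox"]))
```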

Humans in the Loop Dataset Types

When labeling data for machine learning projects, Humans in the Loop handles most common dataset formats. They frequently work with JSON, YOLO, COCO, and Pascal VOC XML, and they can accommodate other formats for both data import and export based on your needs.
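Of the formats in that list, YOLO differs most from the others: each object is one line in a text file, holding a class index followed by the box center, width, and height, all normalized by the image size. A minimal sketch of converting a COCO box to a YOLO label line, assuming you have already mapped COCO category IDs to zero-based YOLO class indices, might look like this:

```python
def coco_bbox_to_yolo(bbox, img_w, img_h):
    """Convert a COCO [x_min, y_min, width, height] box in pixels to
    YOLO (x_center, y_center, width, height) normalized to the 0-1 range."""
    x, y, w, h = bbox
    return (
        (x + w / 2) / img_w,
        (y + h / 2) / img_h,
        w / img_w,
        h / img_h,
    )


def yolo_label_line(class_index: int, bbox, img_w: int, img_h: int) -> str:
    """Format one object as a line of a YOLO .txt label file."""
    xc, yc, w, h = coco_bbox_to_yolo(bbox, img_w, img_h)
    return f"{class_index} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


# A 200x100 box at (100, 50) in a 640x480 image, class index 0.
print(yolo_label_line(0, [100, 50, 200, 100], 640, 480))
# prints "0 0.312500 0.208333 0.312500 0.208333"
```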

Data Annotation Tools

CloudFactory Annotation Tools

CloudFactory provides a platform along with its data annotation services, offering the flexibility to either utilize the company’s tools or integrate them with your existing software. Their data analysts can adapt to custom tools to meet your ML project requirements. This makes CloudFactory an excellent choice for those who have their own tools but need help scaling their labeling tasks.

Additionally, CloudFactory collaborates with other data labeling companies that have their own tool sets to provide a complementary workforce solution. Some of their preferred tool partners include Dataloop, Datasaur.ai, and Labelbox.

For automation, CloudFactory offers features such as label assistants, fully automated labeling, active learning, and AI-consensus scoring, among other options.
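Active learning generally means letting a model decide which unlabeled items humans should annotate next. The sketch below shows one common strategy, least-confidence sampling, which sends the items whose top predicted probability is lowest to annotators. It is a generic illustration with made-up predictions, not a description of how CloudFactory implements the feature.

```python
from __future__ import annotations


def least_confident(predictions: dict[str, list[float]], k: int) -> list[str]:
    """Return the IDs of the k items whose highest class probability is lowest.

    `predictions` maps an item ID to the model's class probabilities for it.
    Low top-class confidence suggests the model is unsure, so a human label
    for that item is likely to be more informative.
    """
    ranked = sorted(predictions.items(), key=lambda item: max(item[1]))
    return [item_id for item_id, _ in ranked[:k]]


# Made-up model outputs for four unlabeled images (three classes each).
preds = {
    "img_001": [0.95, 0.03, 0.02],
    "img_002": [0.40, 0.35, 0.25],
    "img_003": [0.55, 0.44, 0.01],
    "img_004": [0.80, 0.10, 0.10],
}
print(least_confident(preds, k=2))  # ['img_002', 'img_003']
```

Once annotators label the selected items, the model is retrained and the loop repeats. Other strategies, such as margin or entropy sampling, simply swap in a different uncertainty score.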

Humans in the Loop Annotation Tools

Humans in the Loop uses a variety of open-source tools as well as paid tools from its partners. They collaborate with notable players in data labeling and data management, including Alegion, Diffgram, Human Lambdas, Hasty, and Kili Technology, as well as Lightly, V7, Manthano, SuperAnnotate, and Segments.ai.

If you prefer the team to use your specific tool, this option is available for an additional fee. With their extensive experience, the specialists can also provide feedback on your tool and suggest any necessary improvements.

Integrations

CloudFactory Integrations

CloudFactory seamlessly integrates with major cloud storage services like AWS S3, Google Cloud Storage, and Azure Blob Storage to facilitate smooth data transfer. Moreover, they support various ML frameworks, including TensorFlow and PyTorch, to enhance the efficiency of model training workflows.

They also provide a REST API for automating and outsourcing back-office data tasks, and for programmatically managing labeling projects. This API integration enables developers to effortlessly link data tasks with their existing applications, simplifying workflow management.

Humans in the Loop Integrations

Humans in the Loop offer integration with APIs or other tools upon request as an additional service. If you integrate through APIs, you can create tasks for the team to execute. You also have the option to specify the URLs where you would like the final annotated datasets to be delivered.
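As a rough picture of what an API-based hand-off could look like, the sketch below builds (but does not send) a task-creation request that bundles the data to annotate, the instructions, and a delivery URL for the finished dataset. The endpoint, field names, and bearer-token authentication are all hypothetical assumptions for illustration; the real interface would come from the vendor when the integration is set up.

```python
import json
import urllib.request

# HYPOTHETICAL endpoint: not a real Humans in the Loop URL.
API_URL = "https://api.example.com/v1/tasks"


def build_task_request(image_urls, instructions, delivery_url, token):
    """Build a POST request that creates one annotation task.

    All field names ("images", "instructions", "delivery_url") are
    hypothetical placeholders, not a documented vendor schema.
    """
    payload = {
        "images": image_urls,
        "instructions": instructions,
        "delivery_url": delivery_url,  # where the annotated dataset should land
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # hypothetical auth scheme
        },
        method="POST",
    )


req = build_task_request(
    image_urls=["https://storage.example.com/raw/street_001.jpg"],
    instructions="Draw a bounding box around every vehicle.",
    delivery_url="https://storage.example.com/annotated/",
    token="YOUR_API_TOKEN",
)
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req)
```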

Annotation Process

CloudFactory Annotation Process

CloudFactory adopts a comprehensive approach to data labeling rather than following a traditional process. Their integrated annotation workflow involves several essential steps:

- Free analysis

- Team onboarding

Only after these steps does the data annotation process itself begin:

- Data annotation

- Quality assurance (QA)

- Process iteration

- Project management

Additionally, for each project, CloudFactory assigns a dedicated Client Success Manager and Delivery Team Lead, along with a Channel Manager to offer continuous support.

Humans in the Loop Annotation Process

When partnering with Humans in the Loop for your machine learning projects, the annotation process follows these steps:

- Introduction call

- Free trial

- Fine-tuning

- Contract finalization

- Project delivery

Quality Assurance

CloudFactory QA

Data annotators at CloudFactory ensure highly accurate annotations by employing both automated checks and human review, backed by a 100% QA guarantee. Here’s a breakdown of data quality measures at CloudFactory:

- Built-in QA: Integrated quality assurance processes.

- Model Feedback: Using feedback from machine learning models to improve accuracy.

- Multi-layered Quality Control: A thorough approach to assessing data labeling accuracy, including:

  - Gold Standard: Setting benchmarks for quality.

  - Sample Review: Regular examination of sample annotations.

  - Consensus: Agreement among multiple annotators to ensure accuracy.

  - Intersection over Union (IoU): A metric that measures how closely an annotated region matches another region, such as a reference annotation or a second annotator's label.
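Consensus and IoU are often combined for bounding boxes: several annotators label the same object, and their boxes are accepted only if they overlap closely enough. IoU is the area where two boxes intersect divided by the area of their union, so 1.0 means a perfect match and 0.0 means no overlap. The sketch below implements that check; the 0.7 threshold and the all-pairs agreement rule are illustrative assumptions, not CloudFactory's actual acceptance criteria.

```python
from itertools import combinations


def iou(a, b):
    """IoU of two boxes given as (xmin, ymin, xmax, ymax)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def boxes_agree(boxes, threshold=0.7):
    """Consensus check: every pair of annotators' boxes must reach the IoU threshold."""
    return all(iou(a, b) >= threshold for a, b in combinations(boxes, 2))


print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # 50 / 150, roughly 0.333

# Three annotators' boxes for the same object (made-up coordinates).
votes = [(10, 10, 50, 50), (12, 10, 50, 52), (10, 12, 48, 50)]
print(boxes_agree(votes))                       # True: all pairs overlap closely
print(boxes_agree(votes + [(30, 30, 70, 70)]))  # False: the fourth box is an outlier
```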

Humans in the Loop QA

Like most data annotation companies, Humans in the Loop conducts multiple checks on annotated datasets. Their two-tiered QA process includes:

- Project Supervisor: Each project is assigned a dedicated supervisor who reviews every piece of annotated data, ensuring it meets predefined acceptance criteria.

- QA Team: The QA team performs a comprehensive review of the entire project before it is delivered to the client.

Security and Data Compliance

Feature | CloudFactory | Humans in the Loop |

Access Controls | | |

Worker Screening | | |

Compliance | | |

TL;DR

Aspect | CloudFactory Pros | CloudFactory Cons | Humans in the Loop Pros | Humans in the Loop Cons |

Services | Scalable solutions; various services in addition to data annotation | Limited support for advanced NLP tasks; mostly focused on computer vision | In addition to data annotation, offers data collection, testing, and training services for ML models | Focus on computer vision |

Tools | User-friendly; a variety of partner tools | Fewer partner choices than Humans in the Loop | Can handle multiple formats and datasets | Annotates with third-party and open-source tools |

Pricing | Flexible pricing (per object for computer vision, per hour for NLP); pay only for what you use; high-volume discounts | No free pilot; accelerated annotation available only for computer vision tasks | Can handle urgent requests | Custom pricing that depends on numerous factors |

QA | Multiple layers of QA | Combines both human and automated checks | Two layers of QA | Fewer checks and techniques for QA than at CloudFactory |

To sum it up, both CloudFactory and Humans in the Loop can be good data labeling partners, especially if your work focuses on computer vision. CloudFactory is larger, with more annotators and experience running large-scale projects. Humans in the Loop, for its part, has a social mission that you may want to support.

In terms of pricing, the two companies don't differ that much, but Humans in the Loop stands out if you're looking for a free pilot. You can also engage them for services beyond annotation, such as data collection and model testing.

However, if you’re looking for a partner that provides high-quality data annotation in multiple languages and meets data compliance requirements, try our services with a free pilot.

FAQ

Which company offers a better combination of experience and cost for data labeling: CloudFactory or Humans in the Loop?

CloudFactory has been on the market longer than Humans in the Loop (since 2010 versus 2017) and has a much larger workforce (7,000+ versus 250+ specialists). However, both take an individual approach to each customer, offering plans based on the complexity, volume, and timelines of your task.

If my project requires a significant increase in labeling volume, which company can better scale their workforce to meet my needs?

CloudFactory excels in scalability, leveraging a global, on-demand workforce to meet large-scale project requirements efficiently. Their flexible workforce model allows them to ramp up resources quickly as needed. Humans in the Loop has fewer specialists, but it can also scale and handle urgent requests.

Which company offers more responsive customer support?

If you work with CloudFactory, you'll be assigned a dedicated Client Success Manager and Delivery Team Lead for your project. As intermediaries, they provide support and ensure that the final result meets your expectations. Humans in the Loop follows a similar principle, assigning you a Project Coordinator. In both cases, you should receive ongoing support throughout the project.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.