Humans in the Loop Company Review: Is It Your Next Fit?

Table of Contents

- How to Choose a Dataset Labeling Vendor?

- Humans in the Loop Overview

- Humans in the Loop Solutions and Services

- Humans in the Loop Dataset Types

- Humans in the Loop Data Annotation Tools

- Humans in the Loop Integrations

- Humans in the Loop Annotation Process

- Humans in the Loop Quality Assurance

- Humans in the Loop Pricing

- Humans in the Loop Security and Data Compliance

- Top Humans in the Loop Alternatives

- FAQ

Deploying a high-performing machine learning model requires meticulous preparation of raw data and careful training of machine learning algorithms. Investing time and effort in annotating and preparing your data for training will save you from undesirable model outputs later.

Knowing how cumbersome the process can be, many businesses entrust this task to outsourcing companies. In this article, we review Humans in the Loop, one of the data labeling providers on the global market. Read on to see if this company can fulfill your ML needs.

How to Choose a Dataset Labeling Vendor?

With so many companies providing data annotation services, it's important to find one that meets your needs. While some focus on computer vision only, others provide more comprehensive services, including NLP, speech recognition, and more. Some rely on automated tools, while others still believe that human annotation provides the best quality.

We've gathered a number of criteria that matter when assessing a data annotation outsourcing company. Our Humans in the Loop review is based on the following:

Solutions and services

Dataset types

Data annotation tools

Integrations

Annotation process

Quality checks

Pricing packages

Security and data compliance

Let's dive into each of them.

Humans in the Loop Overview

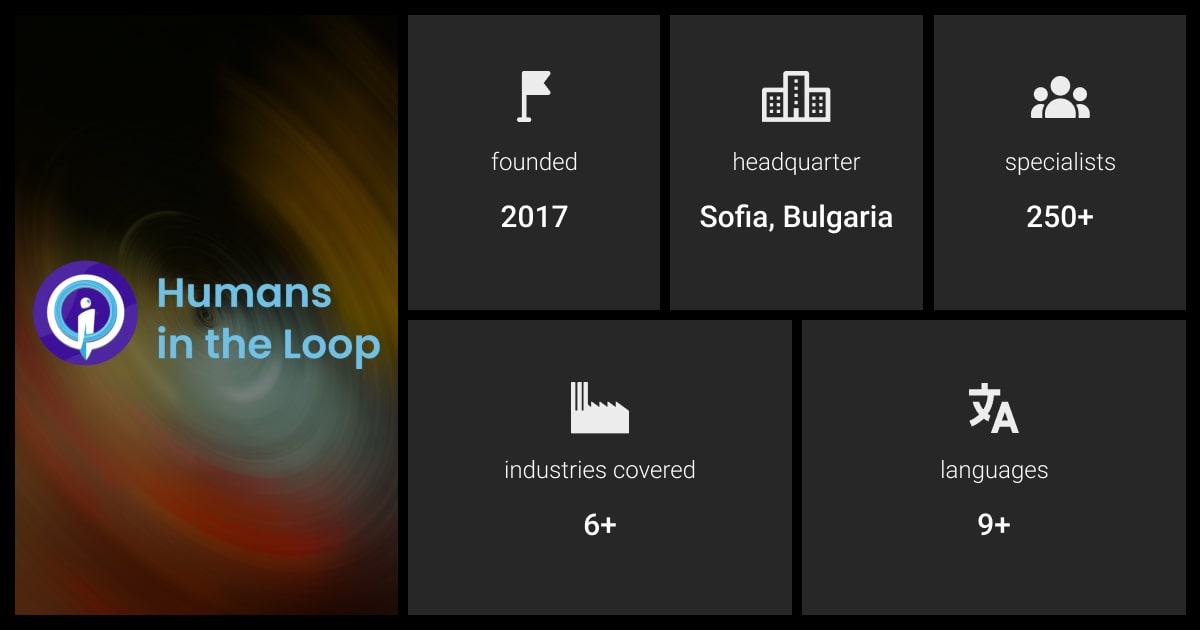

Humans in the Loop is a social enterprise that provides ethical dataset annotation and AI model validation services. Since its founding in 2017, the organization has operated along two streams: offering services and job opportunities as a company, and organizing training as a foundation.

Headquartered in Sofia, Bulgaria, Humans in the Loop has over 250 specialists, with many annotators and QA specialists working from Malaysia, Ukraine, Turkey, Bulgaria, and the Middle East. One of its missions is to help people from countries affected by armed conflict and people who have been forcibly displaced. That's why its specialists come from affected countries around the world.

The company performs annotation for different industries and has already completed projects for clients in the automotive, geospatial, medical, industrial, agricultural, and retail sectors.

Humans in the Loop Solutions and Services

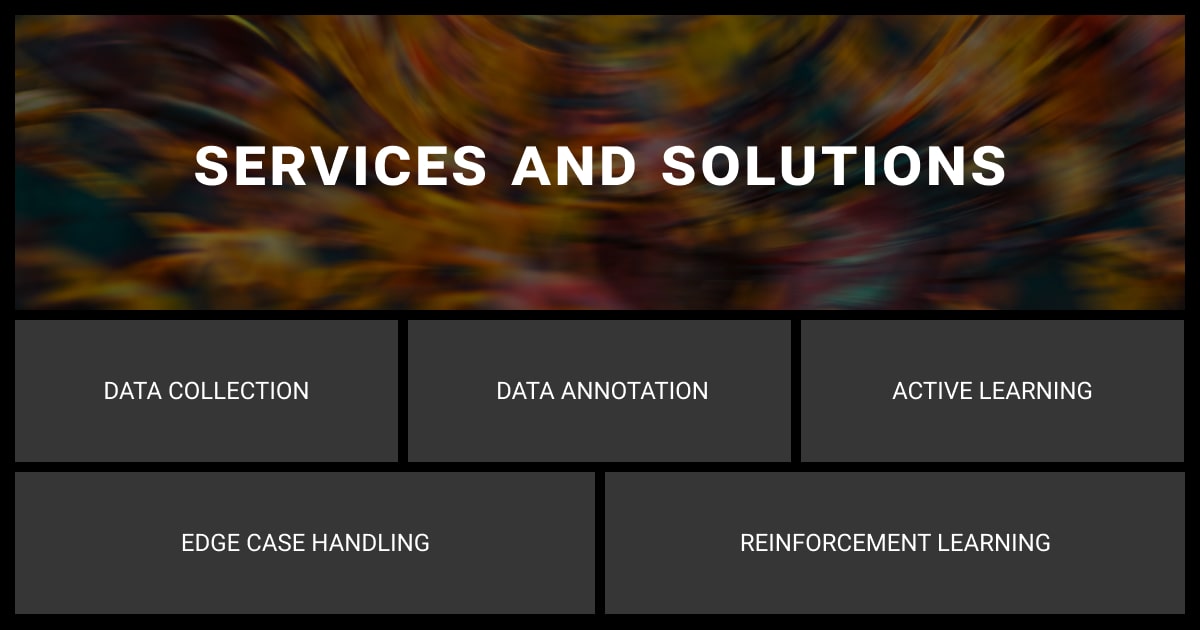

As in our other data labeling company reviews, let's start this Humans in the Loop review with their main solutions and services. The company's core expertise is computer vision (CV), and it does not offer data labeling services for NLP models. The main solutions consist of:

Dataset annotation. Humans in the Loop works with different types of data annotation, including bounding boxes, polygons, keypoints, semantic segmentation, video annotation, and 3D annotation.

Dataset collection. If you don't have a dataset ready for annotation, Humans in the Loop reviews all your requirements for the CV task and collects the data for you. They take into account sourcing location, custom parameters, and distribution. Thanks to the distributed workforce, you can expect to receive data from around the world. The team can also help you expand an existing dataset to make it more detailed and diverse.

Human-in-the-loop for active learning. Humans in the Loop can review your models to detect any anomalies or inconsistencies in model performance. They label the datasets, establishing new ground truth, which assists your model's further training.

Real-time edge case handling. Humans in the Loop serves as an intermediary between the model and the end user. This is especially relevant in cases where the model raises an alarm (e.g. a wildfire warning). The team performs real-time AI model validation, reducing false responses.

Reinforcement learning with human feedback. This service focuses on trained models that need to be tested and improved before final deployment. Humans in the Loop reviews the model's outputs and generates various questions in different languages. The questions and inputs are designed to expose your model's weak points and flag them for improvement. The results help you retrain the model and enhance its performance.

If your ML project needs data annotation in fields other than computer vision, leave us your request! We deliver expert annotation for NLP models, speech recognition, and more.

Humans in the Loop Dataset Types

Humans in the Loop supports various formats for both data collection and data annotation. The company works with the most popular annotation formats, including JSON, YOLO, COCO, and Pascal VOC XML. At the same time, the specialists remain flexible: they can accommodate your own format for annotation or data export.
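To make the difference between these formats concrete, here is a minimal sketch of how the same bounding box is expressed in COCO, Pascal VOC, and YOLO. The function names are our own and purely illustrative. The sketch only assumes the commonly documented conventions: COCO stores `[x_min, y_min, width, height]` in pixels, Pascal VOC stores the two corner points, and YOLO stores a normalized center point plus width and height.

```python
from typing import Sequence, Tuple


def coco_to_voc(bbox: Sequence[float]) -> Tuple[float, float, float, float]:
    """COCO [x_min, y_min, width, height] -> Pascal VOC (xmin, ymin, xmax, ymax)."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)


def coco_to_yolo(
    bbox: Sequence[float], img_w: int, img_h: int
) -> Tuple[float, float, float, float]:
    """COCO pixel box -> YOLO (x_center, y_center, width, height), normalized to [0, 1]."""
    x, y, w, h = bbox
    return ((x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h)


def yolo_line(class_id: int, bbox: Sequence[float], img_w: int, img_h: int) -> str:
    """Format one object as a line of a YOLO label file."""
    xc, yc, w, h = coco_to_yolo(bbox, img_w, img_h)
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


# Example: a 200x100 px box at (100, 50) inside a 400x200 px image.
box = [100, 50, 200, 100]
print(coco_to_voc(box))              # (100, 50, 300, 150)
print(yolo_line(0, box, 400, 200))   # 0 0.500000 0.500000 0.500000 0.500000
```

Agreeing on the export format before the project starts avoids this kind of conversion work on your side later.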

Humans in the Loop Data Annotation Tools

Humans in the Loop works with various open source tools as well as their partners' paid services. Their partners in data labeling and data management include such market players as Alegion, Diffgram, Human Lambdas, Hasty, and Kili Technology. They also cooperate with Lightly, V7, Manthano, SuperAnnotate, and Segments.ai.

If you want the team to work in your own tool, this option is also available for an extra fee. With their extensive experience in the field, the specialists can also give you feedback on your tool and suggest improvements.

Humans in the Loop Integrations

Continuing our Humans in the Loop overview, the core team of the company consists mainly of data annotators and QA specialists. Nevertheless, they have developers on staff and can discuss integrations with APIs or other tools on demand, as an additional service.

The majority of annotation tasks are handled through APIs: you create the tasks, and the team completes them afterward. You can also specify the URLs where you want to receive the final annotated datasets.
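As a rough illustration of what such an API-driven workflow can look like, the sketch below builds a task request that includes a delivery URL. This is not the Humans in the Loop API: the `AnnotationTask` class, every field name, and the example URLs are hypothetical, and the actual request shape depends on the annotation tool you agree on.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class AnnotationTask:
    """Hypothetical task description; all field names are illustrative only."""

    image_urls: List[str]      # where the annotators fetch the raw data
    annotation_type: str       # e.g. "bounding_box" or "polygon"
    export_format: str         # e.g. "COCO", "YOLO", "Pascal VOC XML"
    callback_url: str          # where the finished annotations should be sent
    labels: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the task as the JSON body of a task-creation request."""
        return json.dumps(asdict(self), indent=2)


task = AnnotationTask(
    image_urls=["https://example.com/images/0001.jpg"],
    annotation_type="bounding_box",
    export_format="COCO",
    callback_url="https://example.com/hooks/annotations",
    labels=["car", "pedestrian"],
)
payload = task.to_json()
print(payload)
```

In a real integration, this payload would be sent to the task-creation endpoint of the chosen tool, and the finished dataset would arrive at the delivery URL once the team completes the task.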

Humans in the Loop Annotation Process

One of the main points of this Humans in the Loop overview is the annotation process. So, how does the team proceed with annotation?

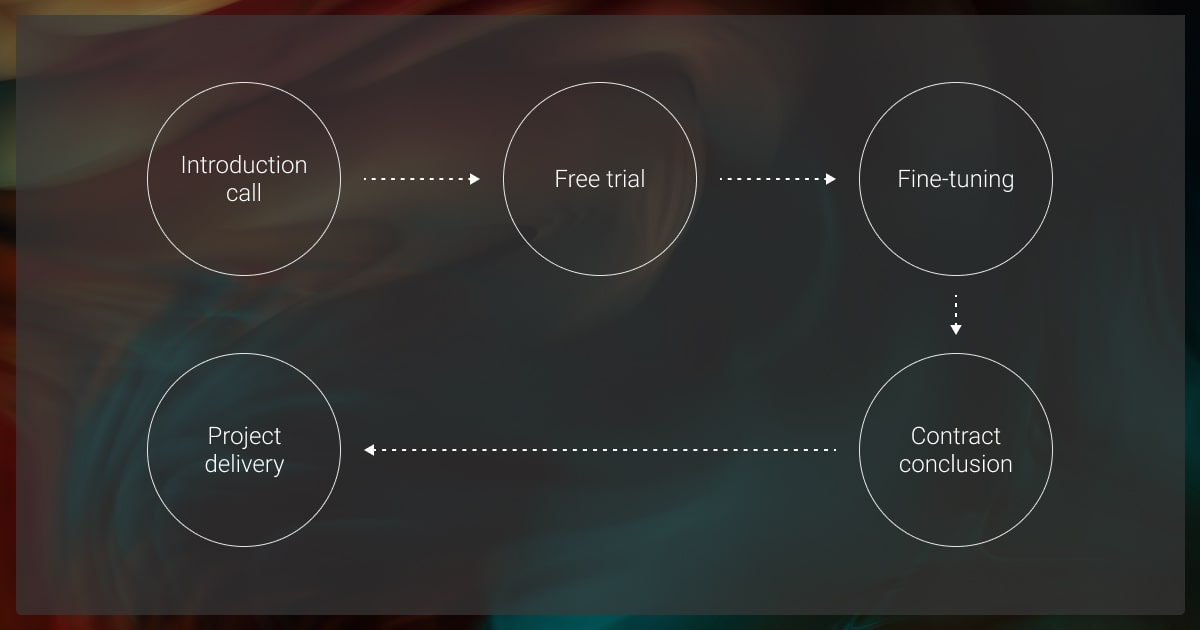

After your first point of contact, the process goes through a number of steps:

Introduction call. The first introduction call allows you to describe your project and your needs. You specify whether you need data collection or your datasets are ready for annotation. You also describe your requirements in terms of geographic location, demographics, and types of annotation.

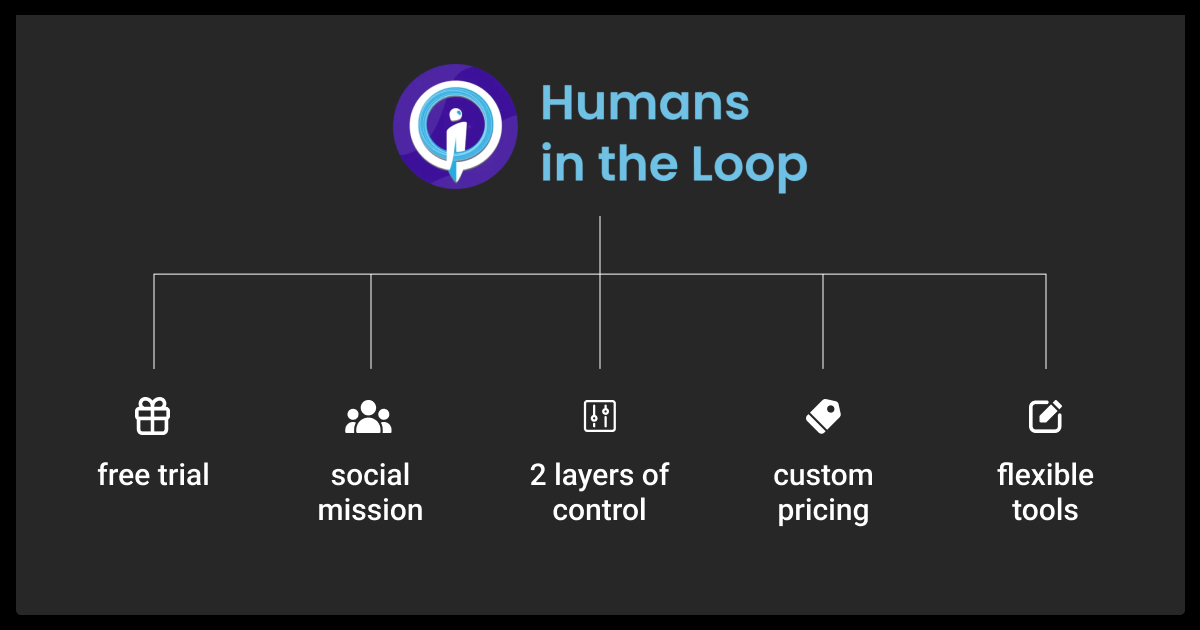

Free trial. Humans in the Loop offers a free trial on a sample of the dataset you want to annotate. It consists of 2 hours of free annotation on the sample, following the instructions you send. This allows you to assess the outputs, and allows the team of annotators to estimate the timelines and volume of the annotation. The free trial is usually ready 72 hours after you send your samples.

Fine-tuning. This stage is aimed at finalizing the requirements for annotation. Humans in the Loop reviews your task and specifies adjustments, if required. You agree on the annotation type and complete the interface setup. This is the space where you'll send your tasks and receive the final results.

Contract conclusion. As soon as you sign the contract, the team of annotators starts work. Depending on the volume, a certain number of annotators will be assigned to your project, and assigned project coordinators will take care of the milestones. You may leave comments during the annotation if the tool you've chosen allows it. You can also take part in the training of annotators at the beginning of the project. In addition, the team can provide you with custom reports on the progress and completion of the task.

Project delivery. Before submitting the final result, Humans in the Loop reviews the requirements and assesses the annotation multiple times. Delivery usually takes place through the API interface you set up previously. The company also sets up a final call to ensure the task is complete and you have received the result you agreed upon.

Humans in the Loop Quality Assurance

Moving forward with our Humans in the Loop overview, we should say a few words about their Quality Assurance (QA) process. The company applies two layers of quality assurance:

A supervisor is dedicated to every project and checks the annotation against previously defined acceptance rates.

A dedicated QA team checks the annotation before submission.

At the beginning of the project, the team of annotators also documents edge cases and agrees on how to interpret and annotate the data. This approach helps to proactively eliminate inconsistencies in annotation.
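The source does not describe how acceptance rates are computed, so the following is only a sketch of one common way to do it for bounding boxes: compare each annotated box with a reviewer's reference box using intersection over union (IoU), and accept the batch if enough boxes clear a threshold. The function names and both threshold values are our own assumptions, not Humans in the Loop's actual criteria.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def acceptance_rate(
    annotated: Sequence[Box], reference: Sequence[Box], iou_threshold: float = 0.9
) -> float:
    """Share of annotated boxes that match the reviewer's box closely enough."""
    if len(annotated) != len(reference):
        raise ValueError("annotated and reference must have the same length")
    if not annotated:
        return 0.0
    accepted = sum(iou(a, r) >= iou_threshold for a, r in zip(annotated, reference))
    return accepted / len(annotated)


def batch_passes(
    annotated: Sequence[Box],
    reference: Sequence[Box],
    required_rate: float = 0.95,   # hypothetical acceptance rate agreed with the client
    iou_threshold: float = 0.9,    # hypothetical per-box quality bar
) -> bool:
    """True if the batch meets the agreed acceptance rate."""
    return acceptance_rate(annotated, reference, iou_threshold) >= required_rate


# Example: one exact match and one poorly placed box.
annotated = [(0, 0, 10, 10), (0, 0, 10, 10)]
reference = [(0, 0, 10, 10), (5, 5, 15, 15)]
print(acceptance_rate(annotated, reference))  # 0.5
print(batch_passes(annotated, reference))     # False
```

Whatever metric is used, it is worth agreeing on the thresholds during the trial stage so that both sides evaluate the delivered annotations the same way.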

Humans in the Loop Pricing

Humans in the Loop pricing is set individually for every client. The free trial mentioned above is used to estimate the difficulty, timelines, and volume of your project. After the trial, the company will provide you with custom pricing.

Their pricing is usually calculated per task or per hour. The company also takes on express (urgent) annotation, which will be reflected in the price.

Humans in the Loop Security and Data Compliance

Finally, let's say a few words about security. It's important to know that Humans in the Loop is GDPR-compliant and processes and stores your data in secure clouds (e.g. AWS). If your project contains personal data, the company signs data processing agreements before starting work on it.

The annotators sign a Confirmation Form and any other NDA, if needed, to gain access to your project. They will have access to the data only until they label it. Afterward, data disappears from annotators' view.

As a security measure, all data annotators learn cybersecurity basics, which are also covered by the NDA. They also receive training on implementing the Data Protection Policy, in accordance with Regulation (EU) 2016/679.

For its mission as a social company creating jobs for affected people, Humans in the Loop received B Corp certification. In addition, they won an MIT SOLVE Global Challenges nomination in 2020 in the area of Good Jobs and Inclusive Entrepreneurship. In 2020, they were also recognized as global innovators by Expo Live.

If you seek a security-compliant data labeling provider for NLP or Computer Vision models, look no further and run your free pilot with us!

Top Humans in the Loop Alternatives

If Humans in the Loop doesn't cover everything you need, there are other key players on the market. Here are 3 data annotation leaders that offer practical solutions:

Label Your Data

At Label Your Data, our focus lies in providing high-quality datasets for both NLP and computer vision models. With 13+ years of expertise and over 100 projects, we understand your pressing dataset challenges and offer solutions to solve them. With a flexible approach, our team can seamlessly integrate into your labeling software or provide our in-house solutions. Our free pilot and adaptable pricing models meet requirements for projects of various durations and for different tools. To guarantee the security of your data, we pass regular audit checks and hold industry-standard certifications and compliance such as PCI DSS Level 1, ISO 27001, GDPR, and CCPA.

CloudFactory

With over 7,000 data analysts and over 10 years of experience in the field, CloudFactory provides human-in-the-loop data annotation for ML projects of varying difficulty. CloudFactory covers multiple solutions, from accelerated annotation to AI automation, from NLP to computer vision tasks. Working on numerous dataset types and with its own platform, the company is a big player in the market. To get more details, read our CloudFactory Review.

Appen

Appen focuses on data labeling for large language models (LLMs). They combine innovation and human expertise to produce high-quality labeled data for further AI usage. They work with natural language processing, computer vision, audio and speech processing, and other types of data annotation. Besides, they provide services for data collection and document intelligence. Appen's AI data platform helps to customize and improve LLMs.

FAQ

Is NLP part of Humans in the Loop's services?

Although Humans in the Loop provides different services and solutions for data annotation, their main expertise is in computer vision, and NLP labeling is not among their core services. They offer bounding box, polygon, keypoint, semantic segmentation, 3D, and video annotation.

Does Humans in the Loop use LLMs for labeling automation?

Humans in the Loop works with various free and open source tools, but mostly to store and label datasets. They provide human annotation for every task and project. Their team of annotators comes from around the world, offering annotation in multiple languages.

How quickly can Humans in the Loop annotate a task?

Humans in the Loop works with both small and big projects, with turnaround ranging from a couple of days to a couple of weeks, depending on the volume. Your free trial will usually be ready in 72 hours, while bigger projects may require more annotators and more days.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.