Trusted by 100+ Customers

Use Cases

ML Engineers

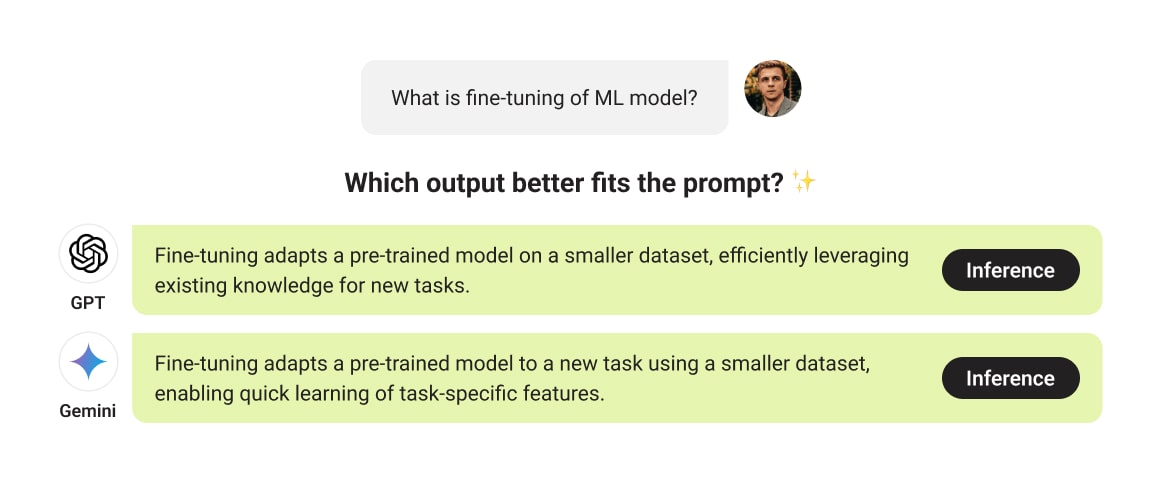

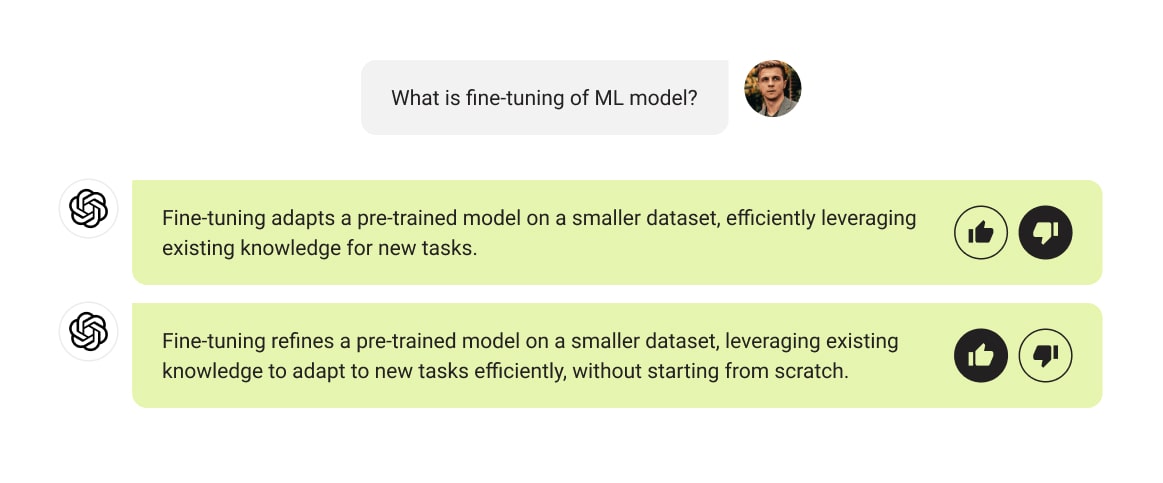

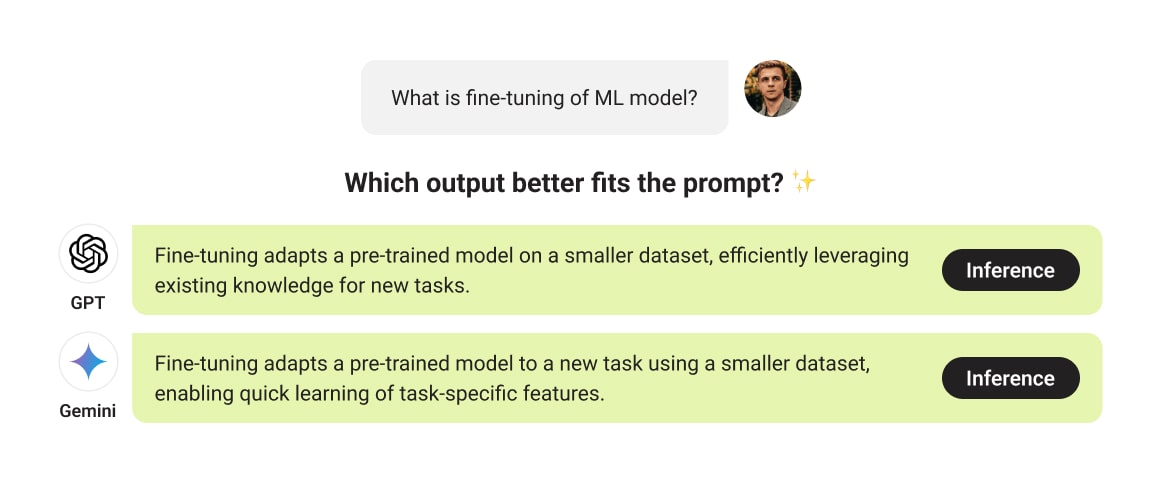

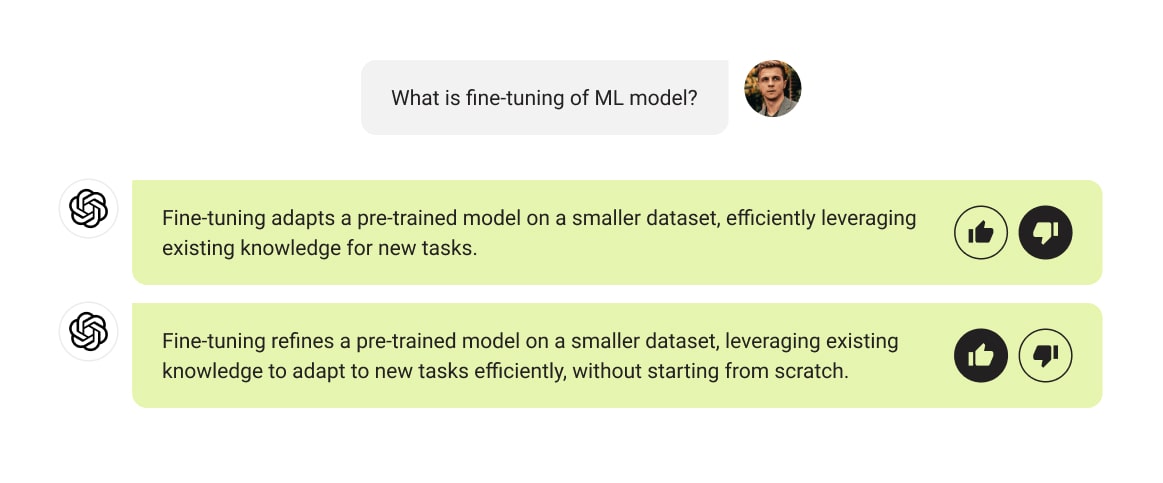

You are working on a supervised learning or RLHF project

In-house annotations take too long

Dataset Business

You are selling annotated datasets

You need to process raw datasets

AI-Powered Business

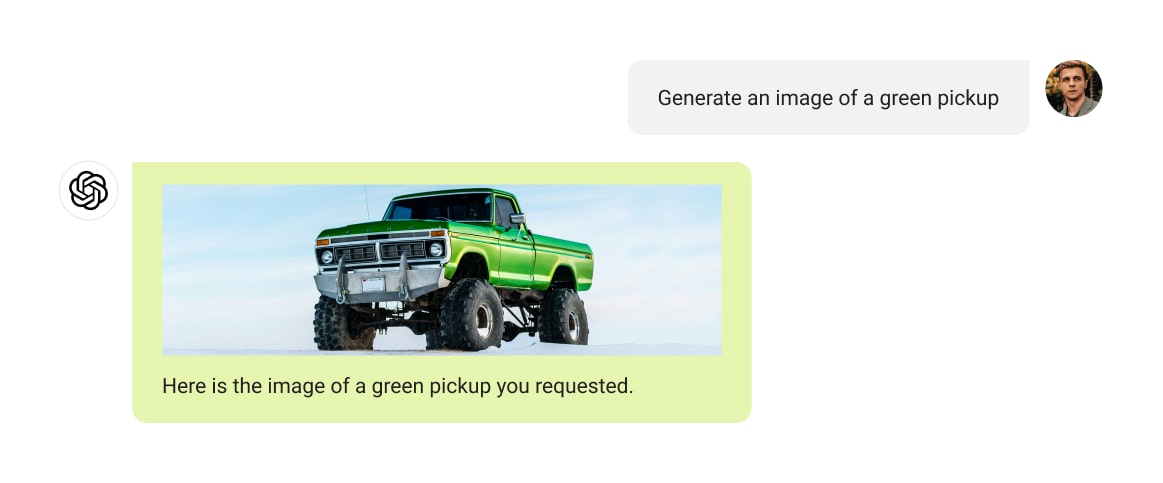

Your business uses an ML model to recognize objects

Raw data piles up faster than you can process it

Academic Researchers

Peer reviewers require annotated datasets

You can’t afford to spend time on annotations

Data Services for You

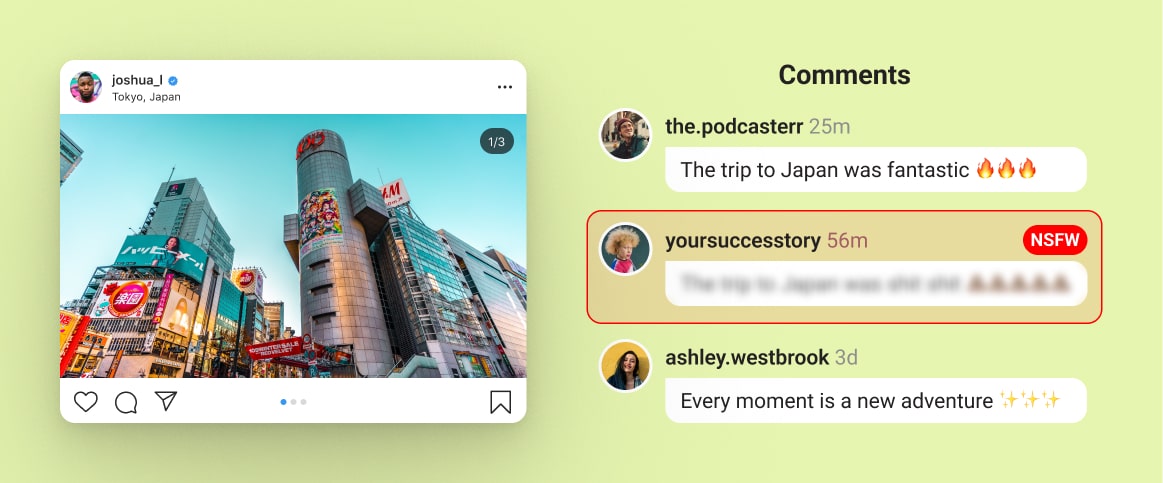

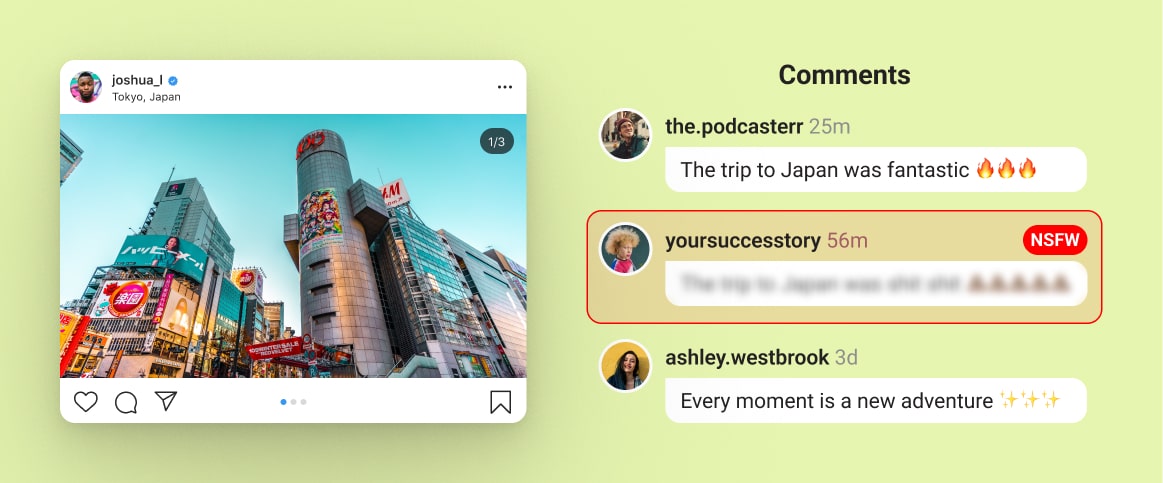

Get a dedicated content moderator or annotate your NSFW social data to train your model.

Beyond annotation, process your data with services like digitizing, parsing, and cleansing.

How It Works

Free Pilot

Send us a sample of your dataset to label for free and try our services risk-free.

QA

Evaluate the pilot results to ensure we meet your quality and cost expectations.

Proposal

Receive a detailed proposal tailored to your specific annotation needs.

Start Labeling

Begin the labeling process with our expert team to move your ML project forward.

Delivery

Receive timely delivery of your labeled data, keeping your project on schedule.

Calculate Your Cost

Estimates

For other use cases, send us your request to receive a custom calculation.

Send your sample data to get a precise cost estimate for free.

Why Projects Choose Label Your Data

No Commitment

Evaluate our performance with a free trial

Flexible Pricing

Pay per labeled object or per annotation hour

Tool-Agnostic

We work with any annotation tool, including your custom tools

Data Compliance

Work with a certified data vendor: PCI DSS Level 1, ISO/IEC 27001, GDPR, CCPA

Start Free Pilot

Fill out this form to send your pilot request.

Label Your Data were genuinely interested in the success of my project, asked good questions, and were flexible in working in my proprietary software environment.

Kyle Hamilton

PhD Researcher at TU Dublin

Trusted by ML Professionals

FAQs

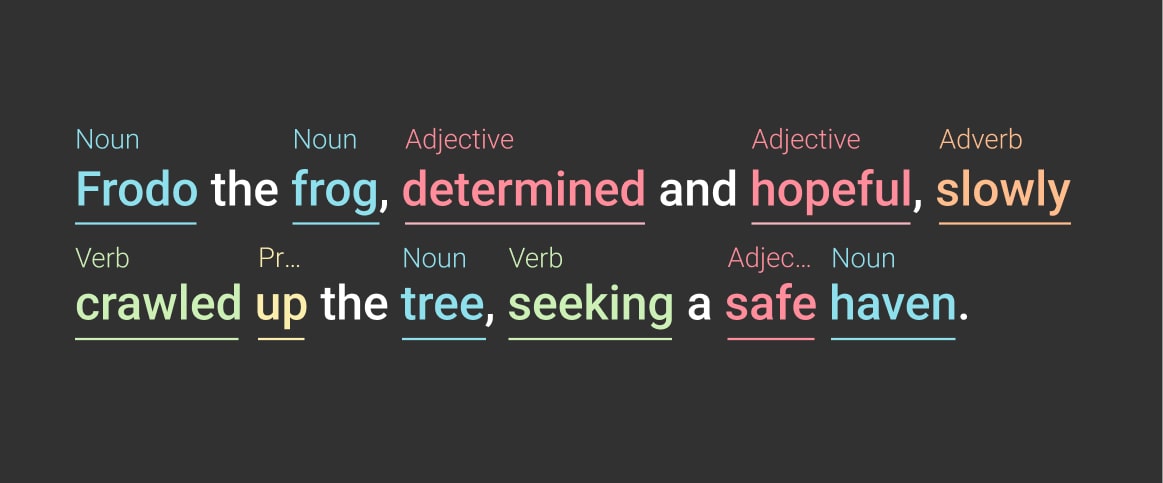

What is a data annotation service?

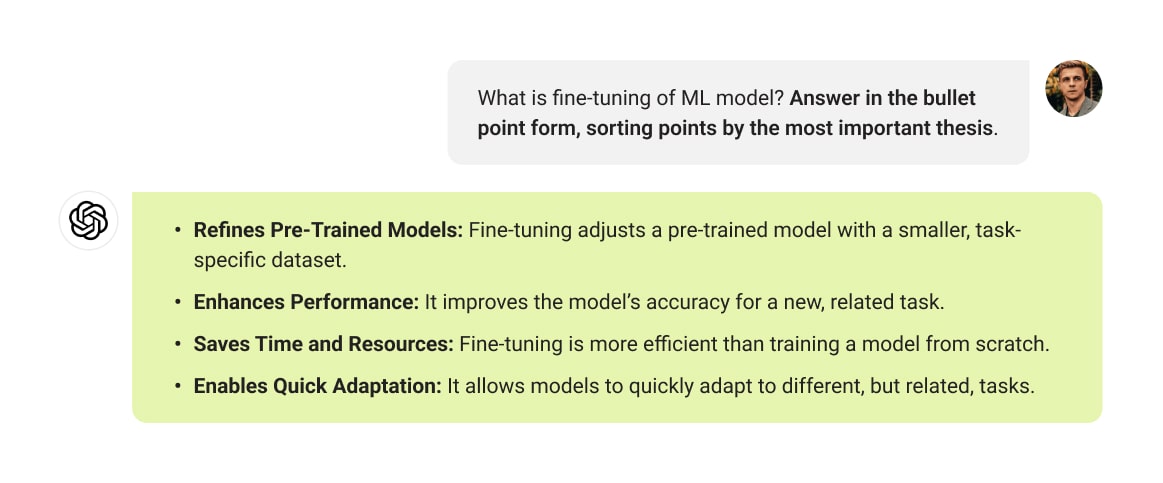

A data annotation service, like the one offered by Label Your Data, involves labeling and tagging datasets such as text, images, and videos to make them usable for training ML models. This process converts raw data into structured information, enabling AI systems to learn and improve their performance.

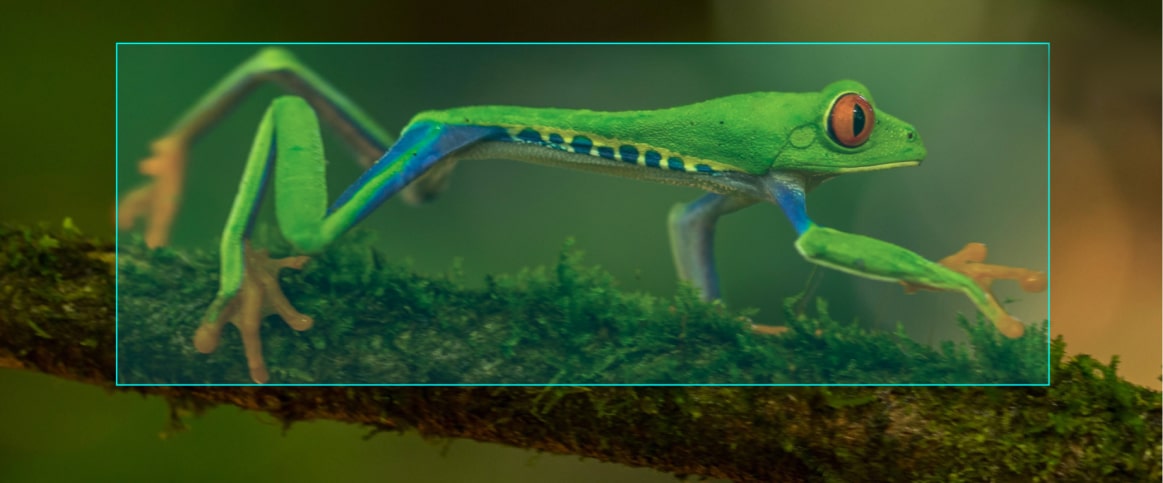

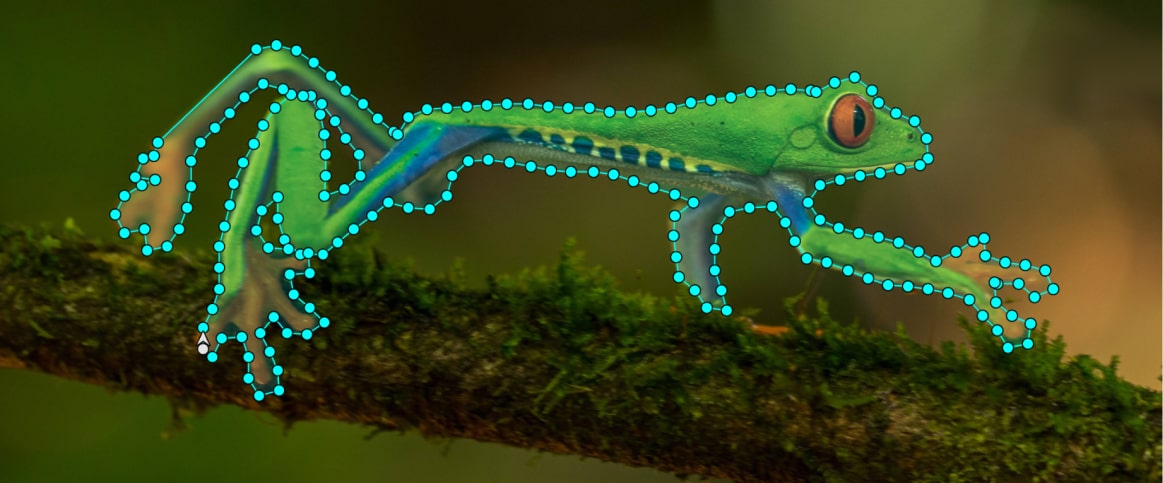

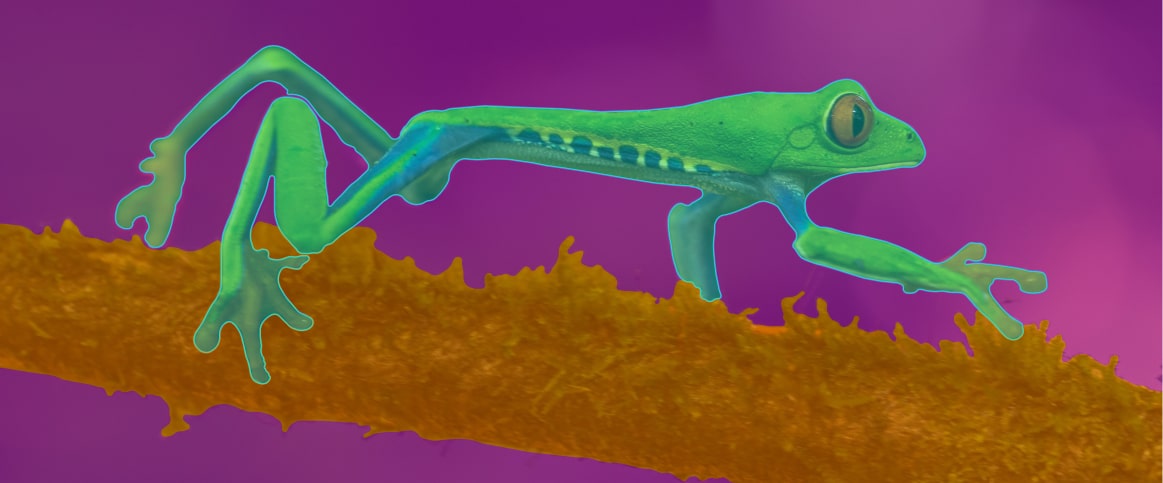

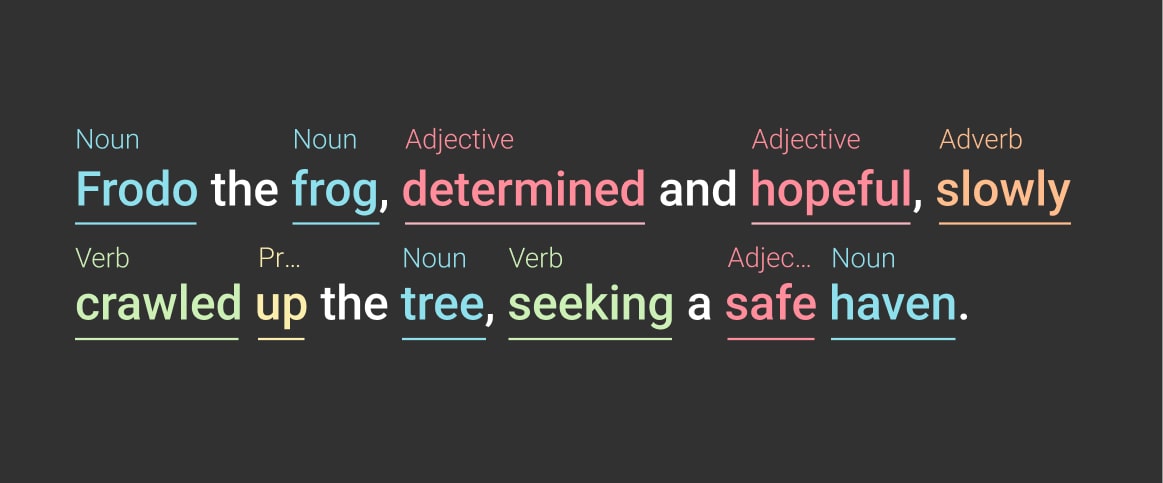

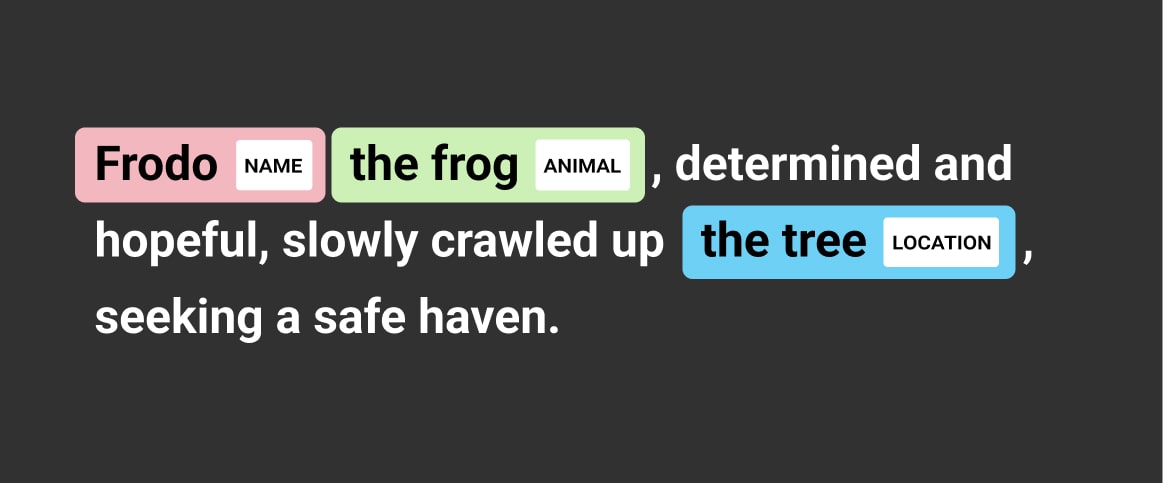

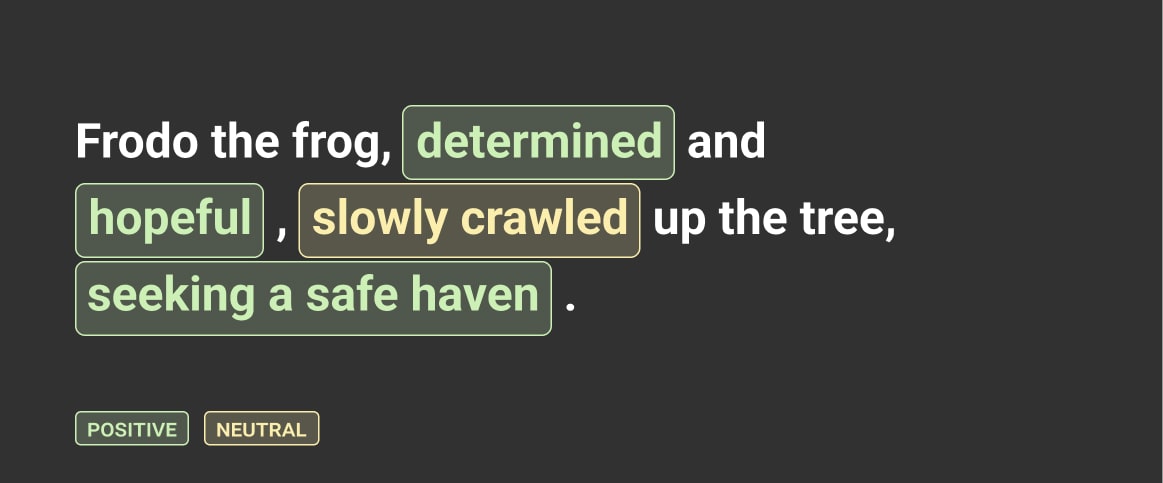

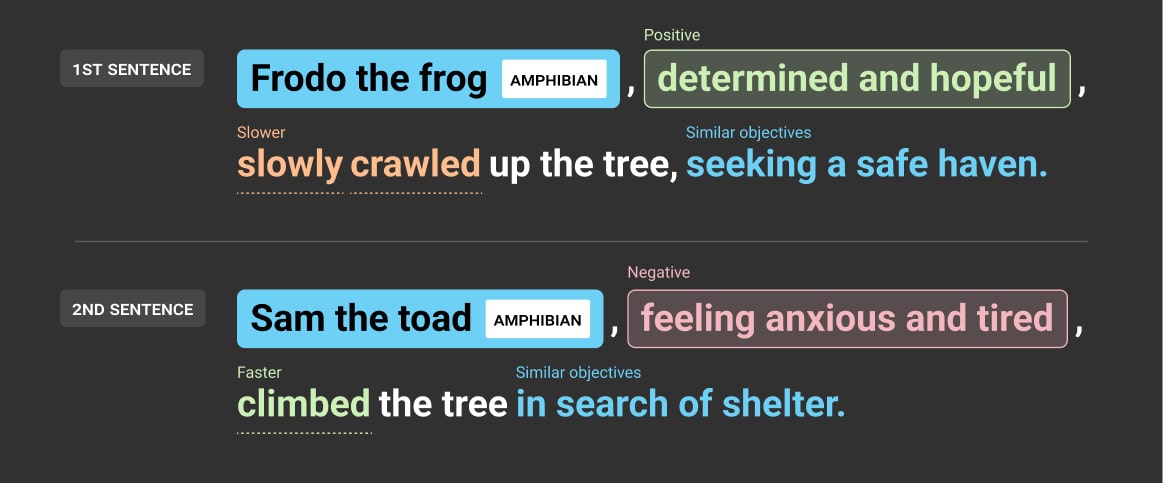

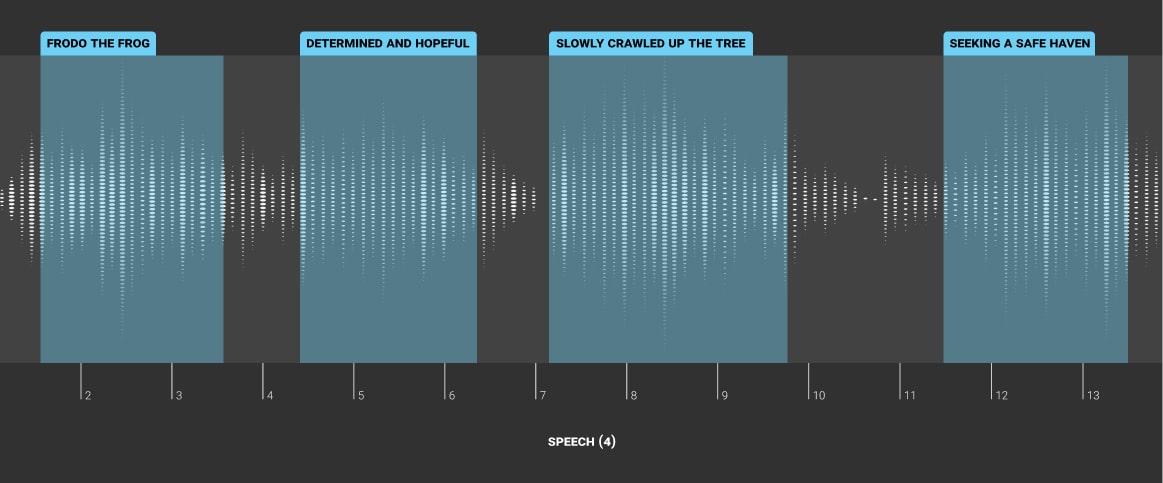

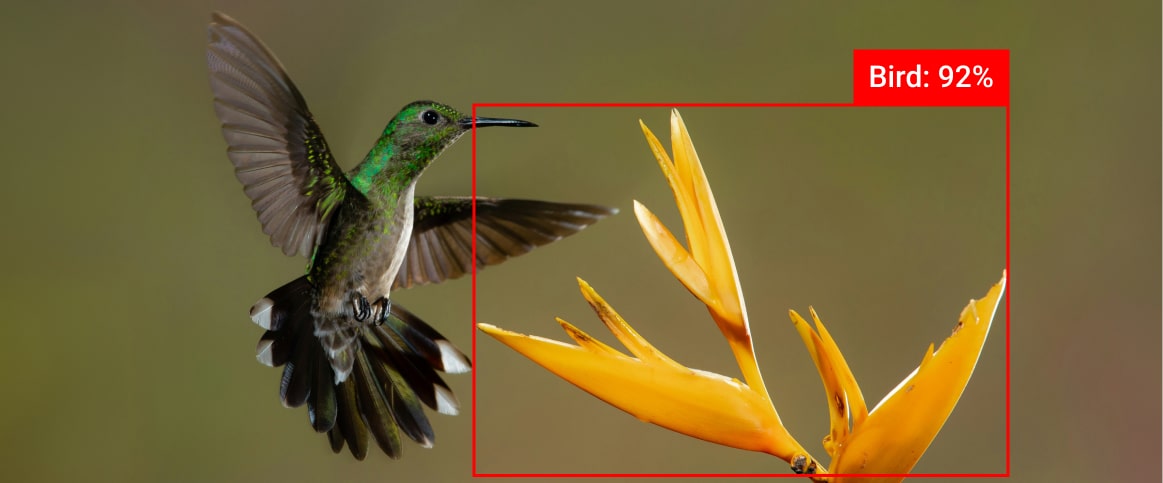

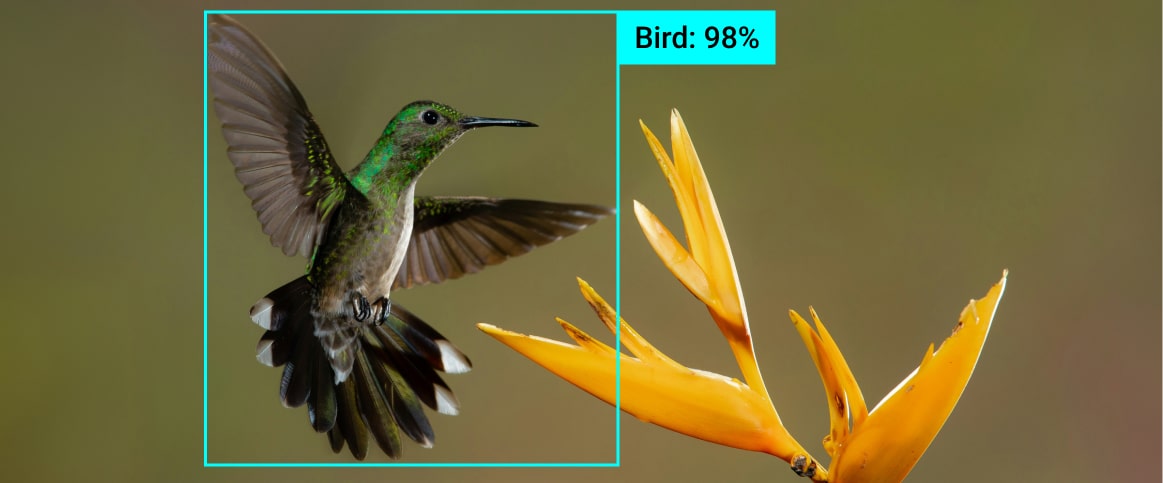

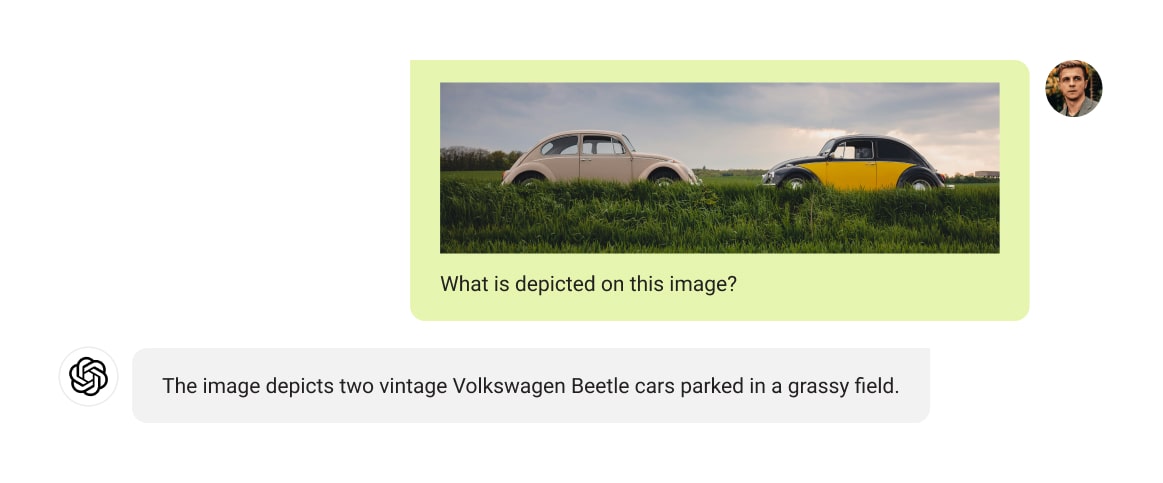

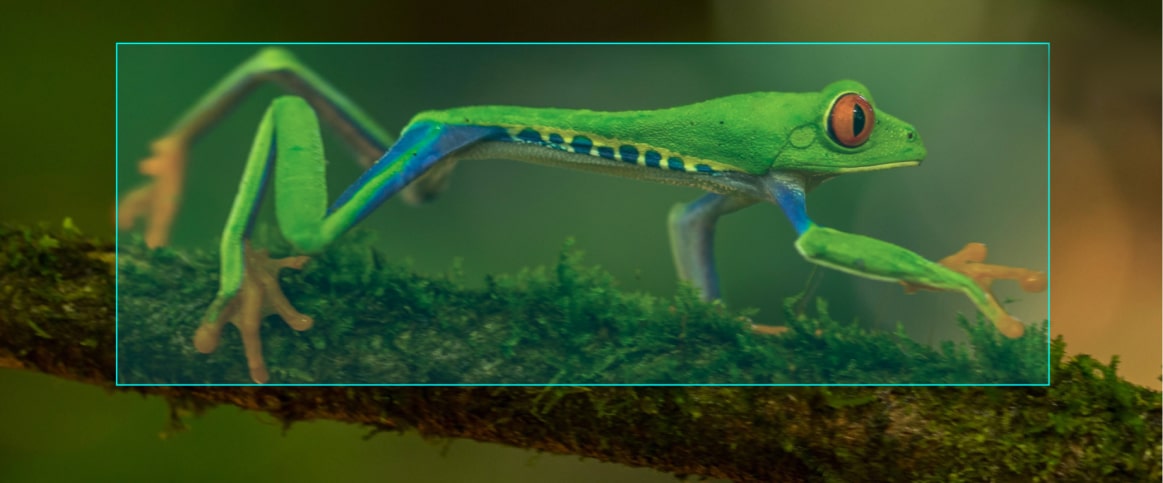

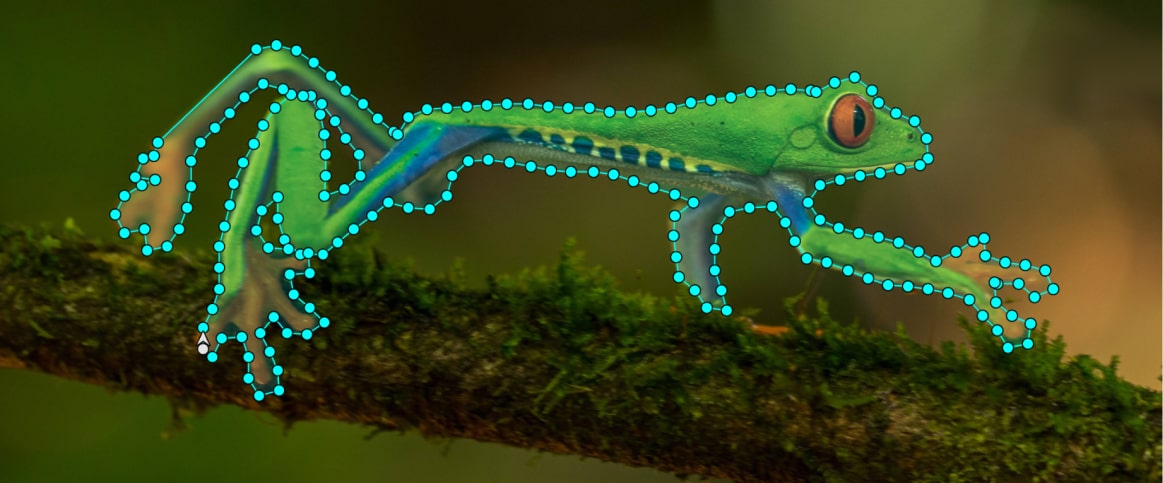

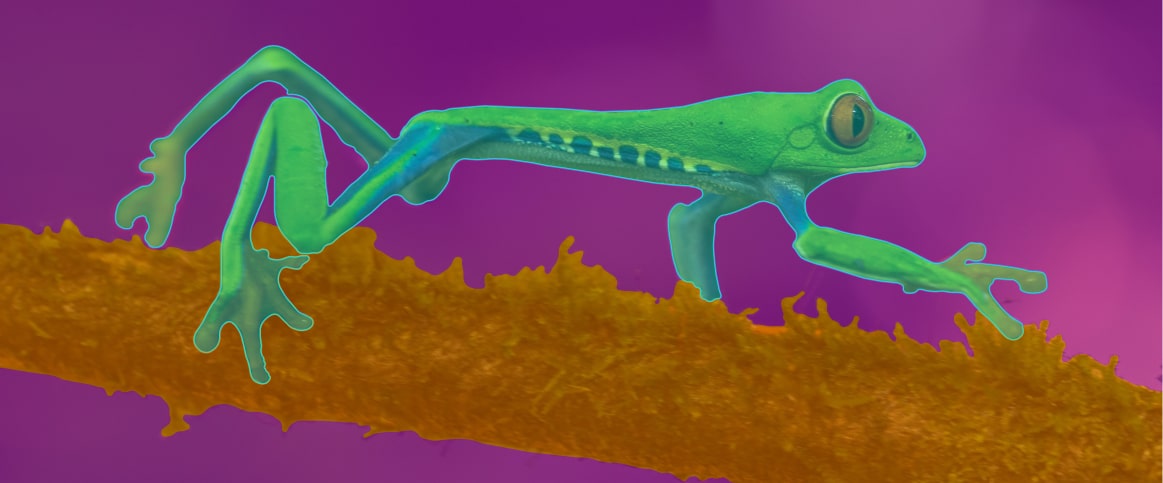

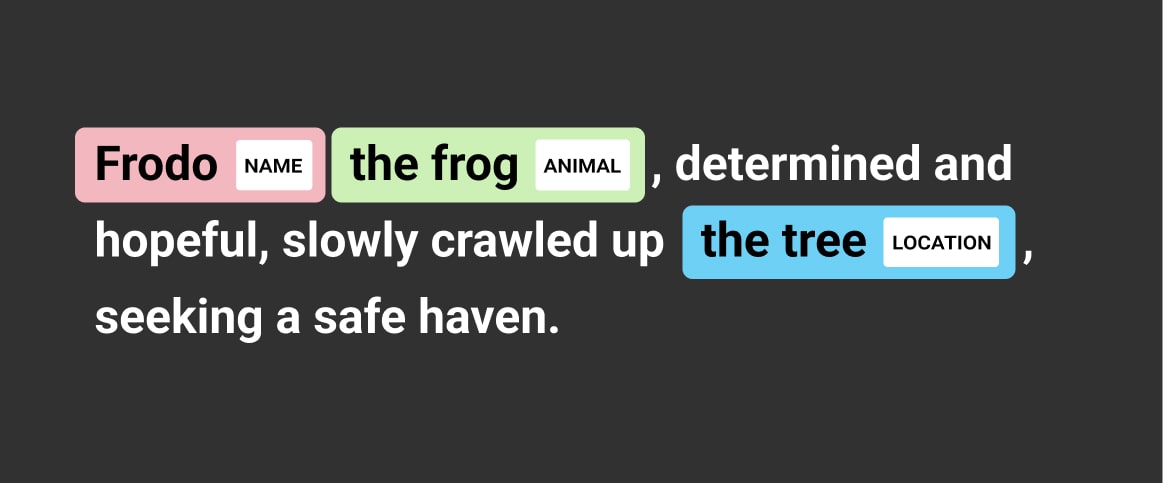

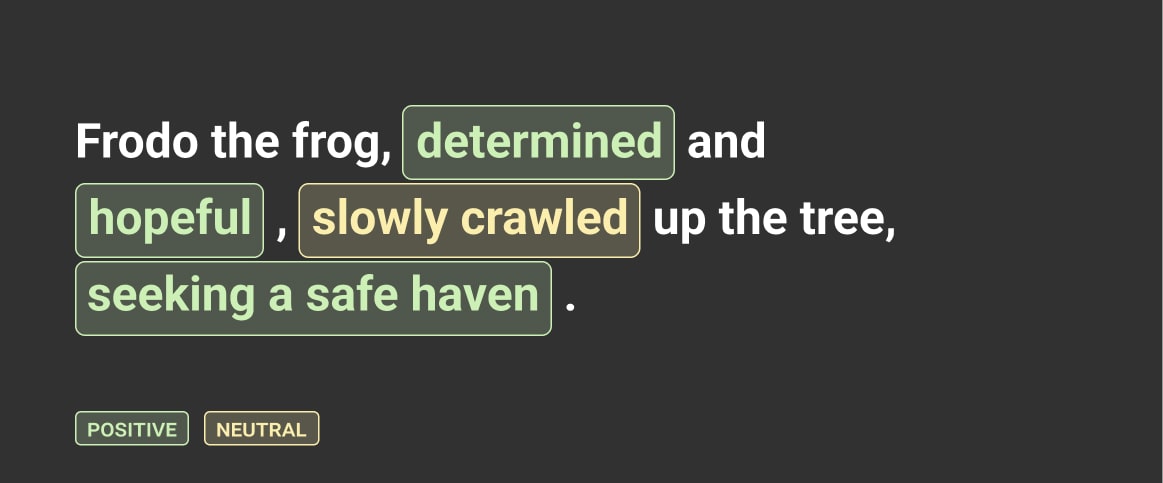

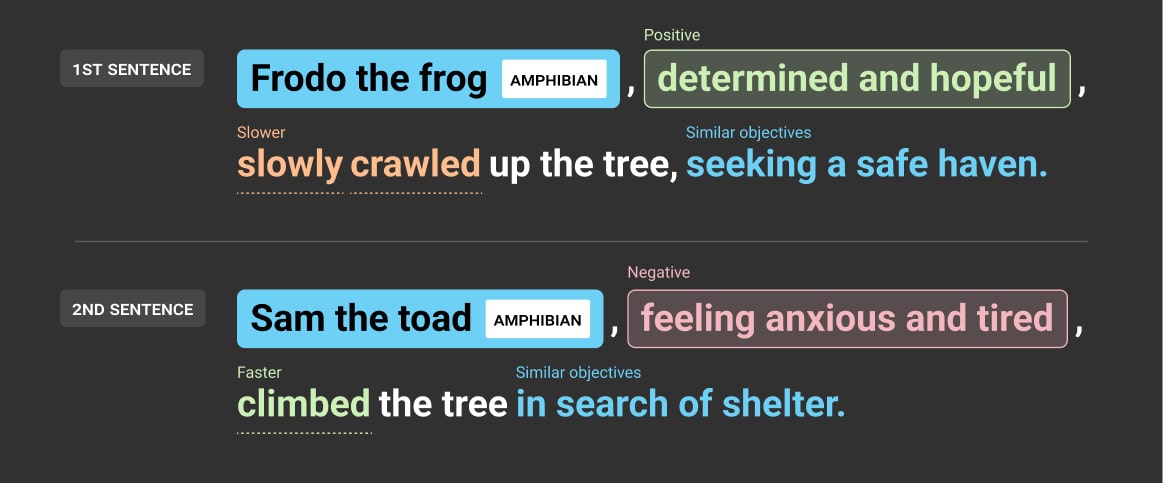

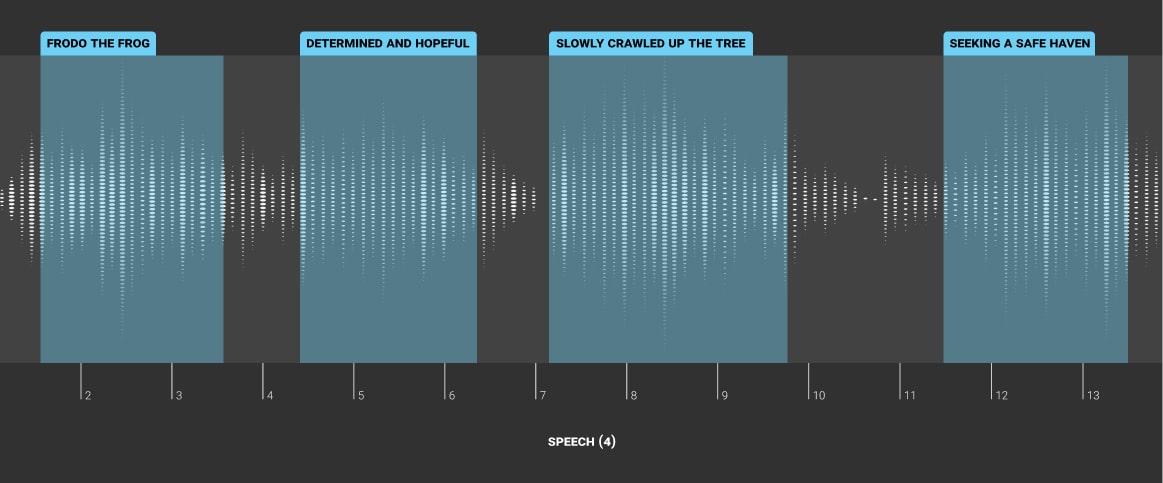

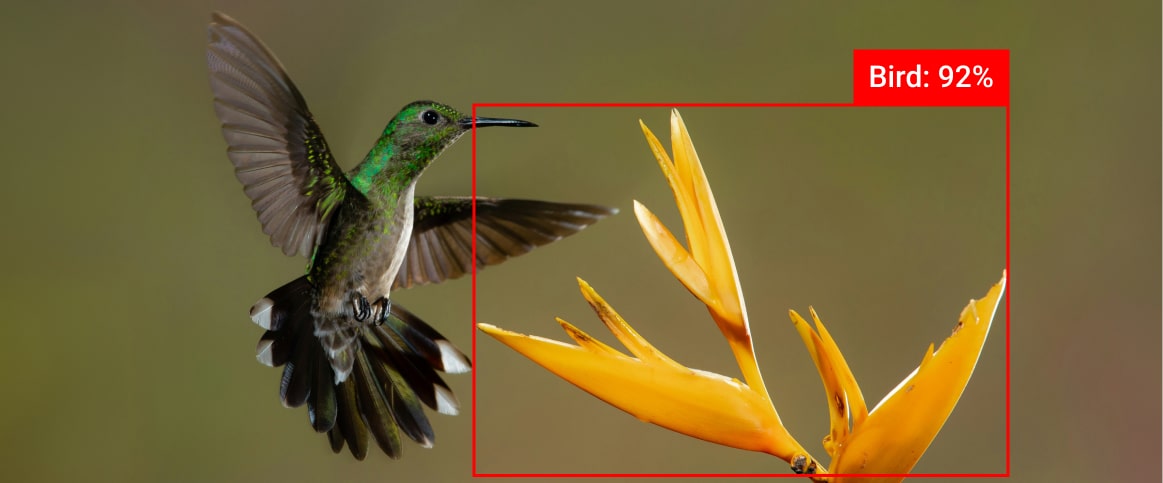

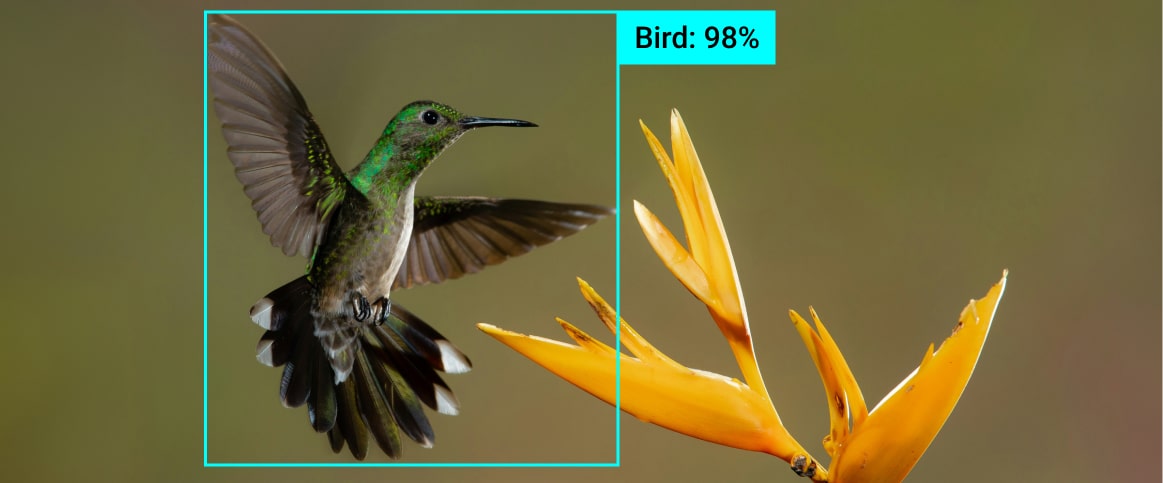

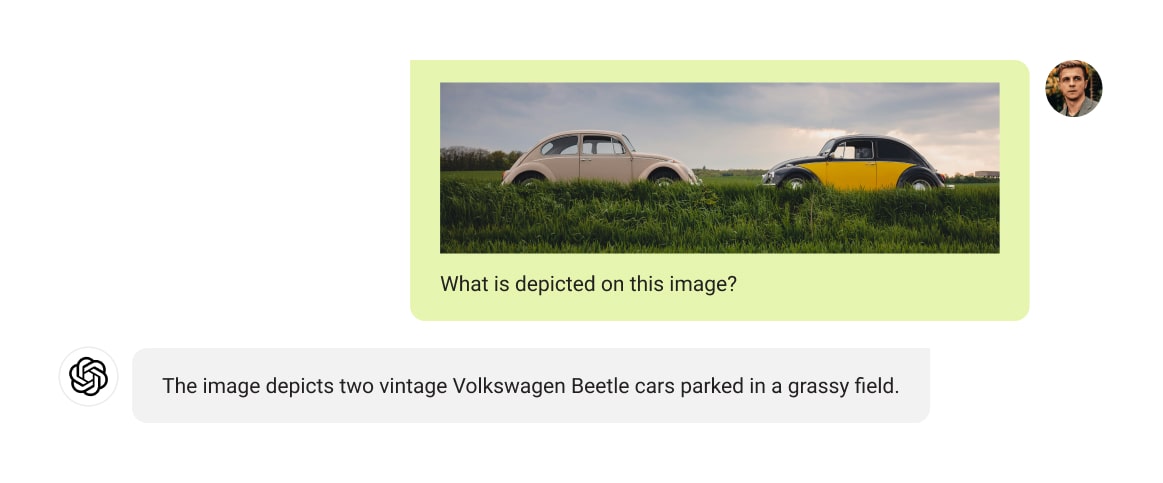

What is a data annotation example?

An example of data annotation is image labeling, where objects in an image are marked with overlays such as bounding boxes or segmentation masks to identify features like cars, people, or animals. See the data services section for more examples of how Label Your Data annotates different data types for various use cases.