LLM Agents: Enhancing Automation and Efficiency

Table of Contents

- TL;DR

- LLM Agents: The Next Step in Workflow Automation

- Advanced Capabilities of LLM Agents

- Practical Applications of LLM Agents for Automating ML Workflows

- Integrating LLM Agents into ML Pipelines

- Key Benefits of LLM Agents for ML Teams

- Overcoming Deployment Challenges

- Security and Ethical Considerations in LLM Agents

- Future Trends

- FAQ

TL;DR

LLM agents automate complex workflows in AI and ML engineering.

They reduce manual tasks in data labeling, model tuning, and deployment.

Advanced features include memory systems, task management, and external tool integration.

LLM agents streamline workflows and enhance scalability across teams.

Security and ethical considerations are essential for LLM agent deployment.

LLM Agents: The Next Step in Workflow Automation

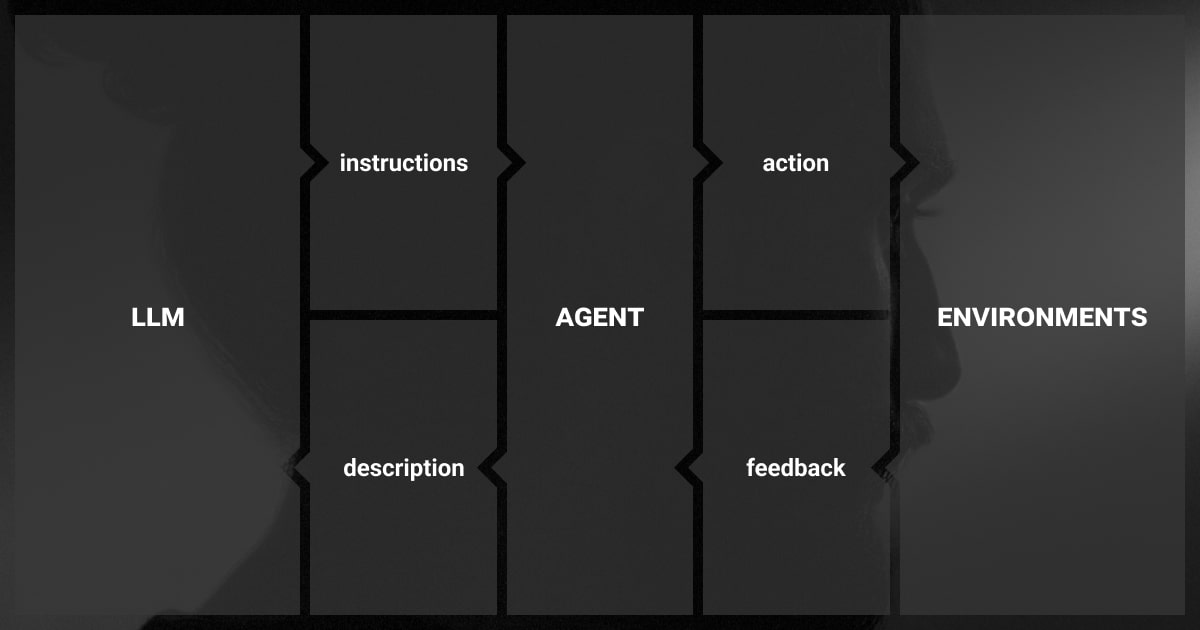

LLM agents are highly capable autonomous systems. They harness the power of large language models to automate various tasks in ML workflows. From data preprocessing to model tuning and real-time deployment, these agents help AI engineers and data scientists manage their projects.

Many companies, including Label Your Data, now offer LLM fine-tuning to help businesses customize these agents for specific tasks. These tailored models not only automate repetitive work but also improve the overall workflow. They do so by minimizing errors and optimizing performance. Such a level of automation is a game-changer for industries where speed and accuracy are pivotal.

Key Components of LLM Agents

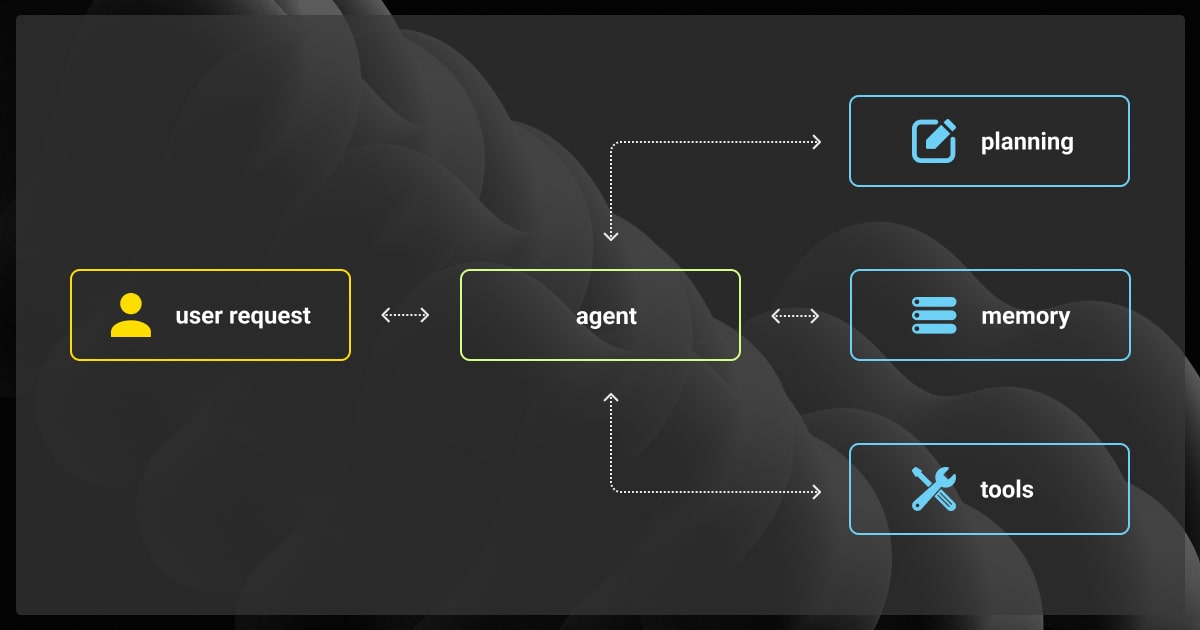

LLM agents rely on several core components to function effectively. These components work together to enable the agent to handle various tasks.

Component | Description |

Language Model Core | Powers language understanding and generation. |

Task-Specific Modules | Handles specialized tasks like retrieval or decision-making. |

Input/Output Interfaces | Links the agent to external data sources or APIs. |

Control Logic & Workflow | Manages task flow, prioritization, and error handling. |

Essential Tools for LLM Agents

These systems are enhanced by various tools that boost their functionality, from search optimization to model fine-tuning.

FAISS: Provides fast, scalable search capabilities, essential for retrieval tasks.

Hugging Face Transformers: Enables fine-tuning and deployment of models in specific domains.

OpenAI API: Allows real-time interaction with LLMs for dynamic task handling.

LLM Agent Frameworks

LLM agent frameworks simplify the process of building and deploying agents, providing structured workflows and integrations.

Framework | Key Features |

LangChain | Prompt chaining, API integration, task orchestration. |

Haystack | Modular design, retrieval-based workflows, external data handling. |

Workflow Tools | Enable multi-step task automation and error handling. |

Top LLM Agents in 2024

Several advanced LLM-based agents lead the way in automation and task management across industries:

ChatGPT (OpenAI): Widely used for customer support, content generation, and programming assistance.

Google Gemini (previously Bard): Specializes in real-time data processing and research-based tasks.

Claude (Anthropic): Focuses on AI safety and ethical considerations in automation.

Looking to implement cutting-edge LLM agents? Contact our team today to get started with tailored automation solutions!

Advanced Capabilities of LLM Agents

The capabilities of LLM agents go beyond simple automation. Let’s explore some of the most cutting-edge features transforming ML workflows across industries:

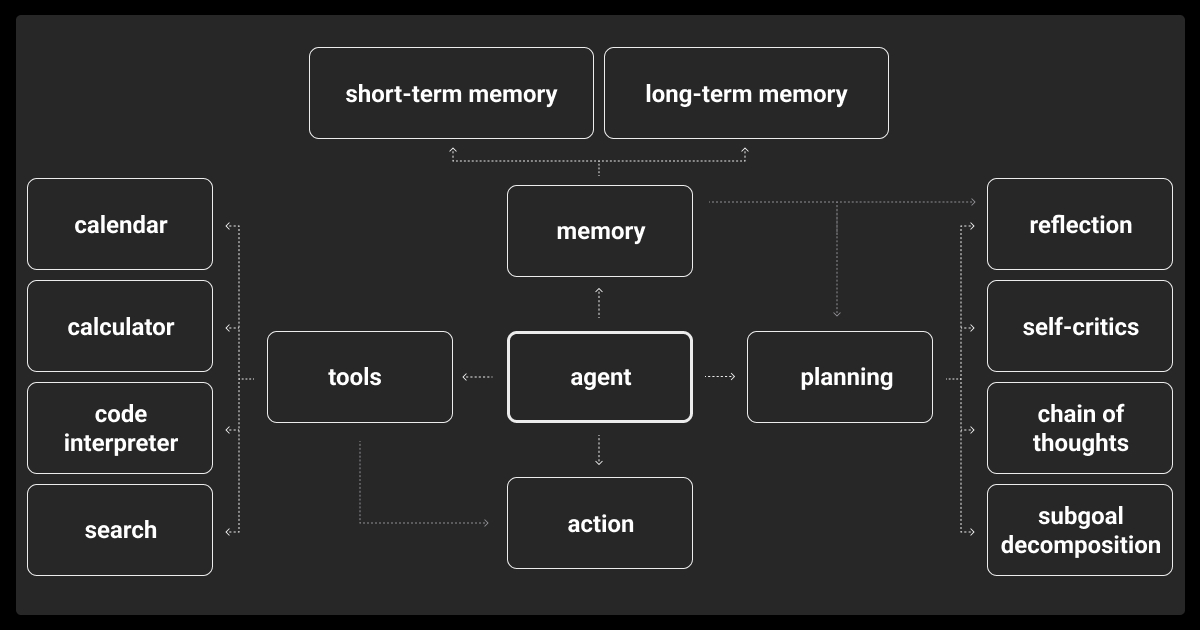

Memory Systems and Continuous Learning

One of the standout features of modern agents LLM is their ability to incorporate memory systems and continuous learning mechanisms. These agents can retain information from past tasks and integrate new knowledge as they encounter fresh data.

This continuous learning capability allows LLM agents to adapt to changing workflows and environments without requiring manual retraining. For example, LLM agents designed for data annotation can remember patterns from previous tasks, improving accuracy and reducing the time needed for labeling.

Self-Adaptive Task Management

LLM-based agents have evolved to dynamically adjust task priorities based on real-time data. This feature allows them to manage workflows autonomously, even as conditions change. For instance, if data characteristics evolve during preprocessing, the LLM agent can reprioritize tasks like data cleaning or augmentation to ensure high-quality inputs for model training.

A case in point is the use of agents LLM in model tuning. They can manage multiple experiments simultaneously, adjusting hyperparameters in real-time based on ongoing results. This self-adaptive capability significantly reduces the workload for ML engineers, who would otherwise have to intervene in each iteration manually.

Interoperability with External Tools

Integration is paramount for any modern AI system, and LLM agents are no exception. These agents can seamlessly connect with external APIs, databases, and other tools to automate complex workflows.

LLM agents can use external tools to handle tasks like data transformation, reporting, and model deployment with minimal human input. For instance, they can pull data from APIs, process it in real-time, and feed it into an ML pipeline. This capability is ideal for large-scale, real-time applications like financial markets or autonomous vehicles.

Practical Applications of LLM Agents for Automating ML Workflows

LLM agents are already being used to automate several stages of machine learning workflows, improving both speed and accuracy in various industries.

Data Preprocessing and Labeling Automation

One of the most time-consuming tasks in machine learning is data preprocessing. Agents LLM are now automating much of this process, including data cleaning, augmentation, and labeling. These agents can identify and correct errors in data, reduce noise, and even label large datasets in real-time. This not only speeds up the process but also improves the quality of the data, leading to more accurate model predictions.

Task | Before Automation | After Automation (LLM Agent) |

Data Cleaning | Manual correction | Automated detection & correction |

Data Labeling | Manual annotation | Real-time automated labeling |

Data Augmentation | Manual processing | Automated with dynamic updates |

Model Tuning and Experiment Management

LLM-based agents are playing a critical role in automating model tuning and experiment management. Through real-time monitoring and dynamic adjustments, LLM agents can fine-tune models faster and more efficiently than manual methods. They can also run multiple experiments in parallel, optimizing hyperparameters and architectures without the need for human oversight.

Real-Time Model Deployment

Deploying machine learning models in real-time is a complex task that requires constant monitoring and adjustments. LLM powered autonomous agents simplify this process by automating model deployment pipelines. These agents can manage model updates, retraining, and monitoring, ensuring that models perform optimally in real-world environments.

Integrating LLM Agents into ML Pipelines

To fully leverage the power of LLM agents, it’s crucial to understand how they integrate into machine learning pipelines. From data preprocessing to model deployment, these agents streamline processes, allowing ML engineers to focus on higher-level tasks.

Automation of Data Labeling and Preprocessing

In the context of machine learning, agents in LLM can automate both data labeling and preprocessing tasks. This is especially beneficial for teams working with large-scale datasets, where manual labeling would be both time-consuming and error-prone. LLM agents can dynamically adjust to changes in data, ensuring high-quality inputs for model training.

Model Tuning and Experimentation with LLM Agents

Another area where LLM agents shine is model tuning and experimentation. By automating hyperparameter optimization and architecture search, these agents can dramatically speed up the model development cycle. Additionally, they can identify performance bottlenecks and make adjustments in real-time, reducing the need for manual debugging.

Key Benefits of LLM Agents for ML Teams

LLM agents not only automate individual tasks but also enhance the overall efficiency and scalability of AI teams. Let’s explore some of their main benefits.

Scaling LLM Agents Across Teams and Departments

One of the advantages of LLM agents is their ability to scale across multiple teams and departments. These agents can handle large volumes of data and tasks simultaneously, allowing teams to work in parallel. Whether it’s managing data preprocessing or model tuning, agents LLM ensure that different departments can collaborate more effectively without the bottlenecks of manual processes.

Streamlining Cross-Team Collaboration

LLM-based agents act as intelligent middle layers between different teams. They automate shared tasks, such as data transformation and report generation, facilitating smoother collaboration across departments. This is particularly beneficial for large organizations where multiple teams need to work together on the same project.

Improving Operational Efficiency

LLM agents reduce the overhead associated with repetitive tasks, allowing teams to focus on higher-value activities. For example, ML engineers can dedicate more time to model development and experimentation, while the agents handle data preprocessing, labeling, and monitoring.

Ready to enhance your workflows with LLM agents? Discover how our fine-tuning services can customize solutions for your business with a free pilot.

Overcoming Deployment Challenges

Deploying LLM agents at scale is not without its challenges. However, these can be mitigated with the right strategies and technologies.

Managing Model Drift and Performance Degradation

One of the primary challenges in deploying LLM agents is managing model drift. As the data in production environments evolves, models may start to perform poorly. LLM agents help mitigate this by using self-adjusting algorithms that monitor data and retrain models automatically, ensuring that performance remains consistent over time.

Latency and Resource Management in Large-Scale Deployments

Large-scale deployments often face issues related to latency and resource management. LLM agents offer solutions by optimizing workflows, distributing tasks across multiple systems, and managing resource allocation more efficiently. This ensures that latency is minimized even as the scale of operations grows.

Security and Ethical Considerations in LLM Agents

As with any AI system, security and ethics are critical considerations when deploying LLM agents.

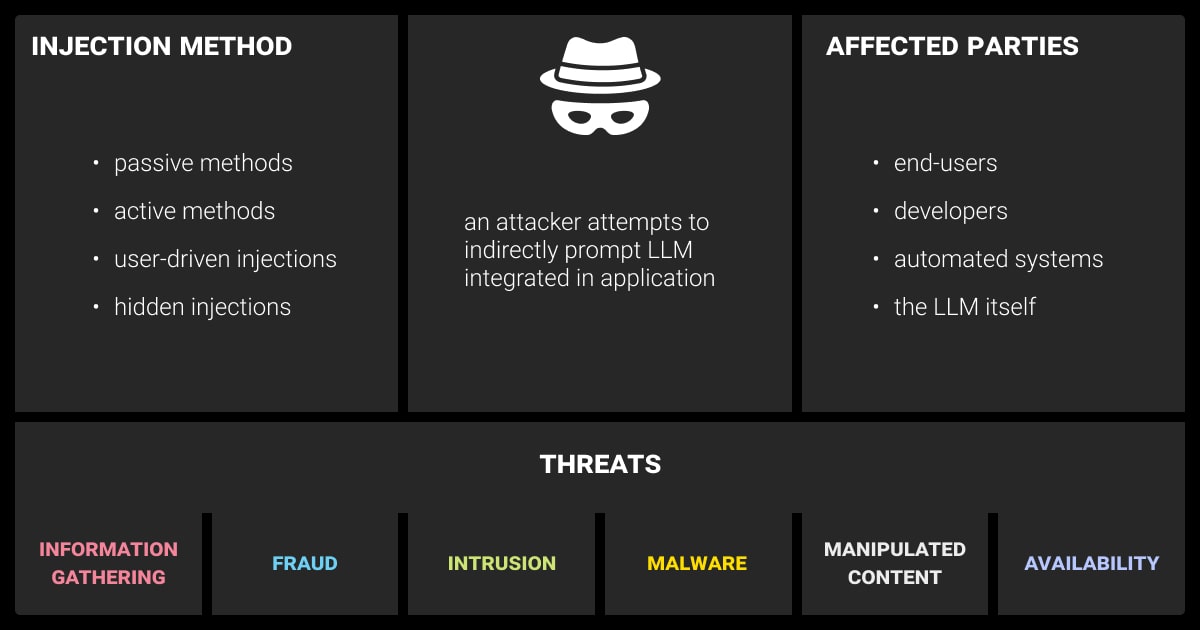

Addressing Security Vulnerabilities

LLM agents must be designed to withstand various security threats, including prompt injection attacks and adversarial inputs. Robust encryption and secure APIs are essential for preventing unauthorized data access. Additionally, adversarial training techniques can help improve the robustness of LLM agents against malicious attacks.

Ethics in Autonomous Systems

As agents in LLM become more autonomous, ethical considerations such as bias, accountability, and transparency must be addressed. Organizations should implement frameworks that ensure these agents make decisions in line with company policies and societal norms. This includes regular audits of the agents' decision-making processes to prevent biases from being reinforced over time.

Future Trends

The future of LLM agents promises even greater advancements in automation, adaptability, and task optimization. This will further revolutionize how ML teams function.

Autonomous Learning and Task Optimization

Emerging trends indicate that LLM agents will increasingly rely on fully autonomous learning mechanisms, allowing them to optimize task performance without requiring manual adjustments. These agents will be capable of self-improving over time, learning from successes and failures to enhance their accuracy and efficiency.

As one of the LLM agents examples, autonomous learning agents will be able to fine-tune their models based on feedback loops from production environments, continuously adapting to new data and tasks. This will significantly reduce the need for human intervention, making AI systems more self-reliant and capable of scaling in complex, dynamic settings.

Impacts on Workforce and Roles in AI Companies

As LLM agents become more advanced, the roles of ML engineers, data scientists, and AI teams will inevitably shift. With more tasks becoming automated, engineers will focus on higher-level strategic work. This can be designing new LLM agents architectures, improving system-level integration, or fine-tuning LLMs for specialized tasks.

This shift in workflow dynamics means that professionals in the AI industry will need to adapt by honing their skills in AI governance, ethical deployment, and system optimization. While LLM agents will handle much of the routine work, the human workforce will still be essential for overseeing AI operations. Humans will ensure compliance with regulations and optimize agents for specific tasks.

Adopting LLM agents helps businesses scale, cut costs, and boost accuracy. With our fine-tuning services, you can customize these agents and fully leverage the power of LLM automation. Run free pilot!

FAQ

What is an agent in LLM?

So, what are LLM agents? An LLM agent is an autonomous system powered by large language models that automates tasks like data preprocessing, task management, and model tuning. It reduces the need for human intervention in machine learning workflows.

What is the difference between an LLM and an AI agent?

An LLM is a machine learning model focused on processing and generating language. An AI agent, which may use LLMs, is a broader system that automates tasks, makes decisions, and interacts with external tools and environments.

What are tools and agents in LLM?

Tools in LLM refer to software or APIs that LLM agents use for tasks like data processing and model deployment. Agents are autonomous entities that manage tasks by interacting with these tools to automate workflows.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.