Satellite vs. Drone Imagery: Do They Share the Same Functions?

With the ongoing development of computer vision technology, can we say that we now have more access to what we see around us, and how we see it? Comparing satellite and drone imagery shows that the two can compete in some projects and complement each other in others.

Since satellites first appeared, they have enabled new discoveries about land, water, and climate. Satellite imagery has not only helped improve maps but also made it possible to analyze human impact on the environment. Drones, in contrast, shifted our focus to smaller-scale problems. The popularity of drone imagery spread from the military to construction, agriculture, infrastructure, and even entertainment.

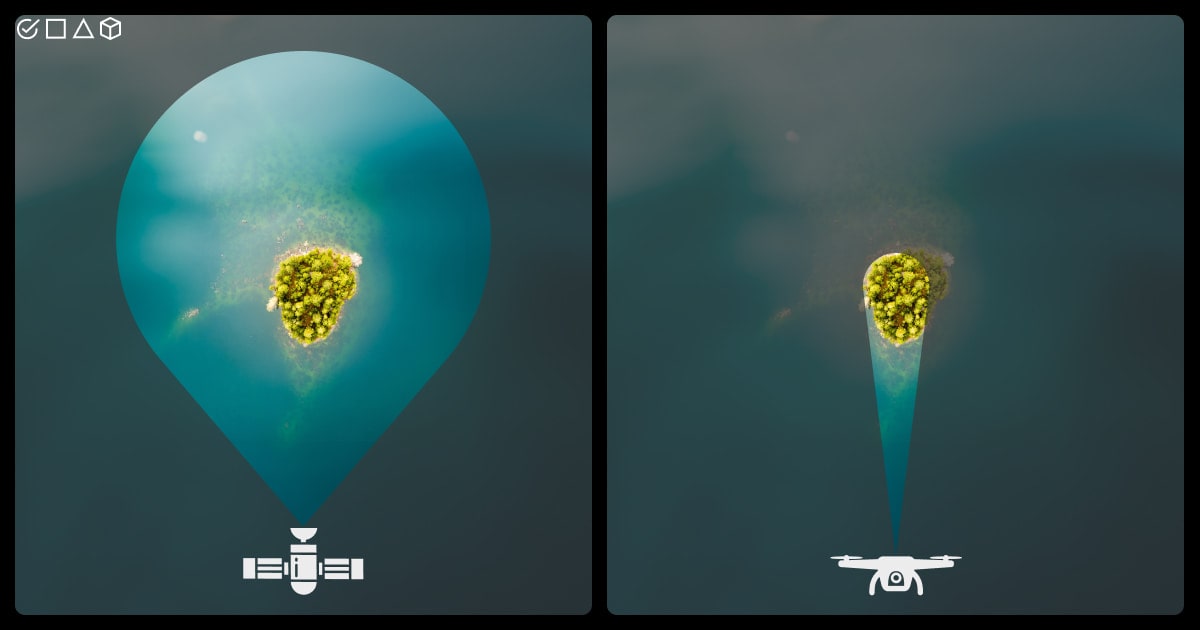

The main difference between drones and satellites lies in the final image we get. Let us show you how such data makes aerial technology work to our advantage.

Is There Any Difference between Drone and Satellite Imagery in AI?

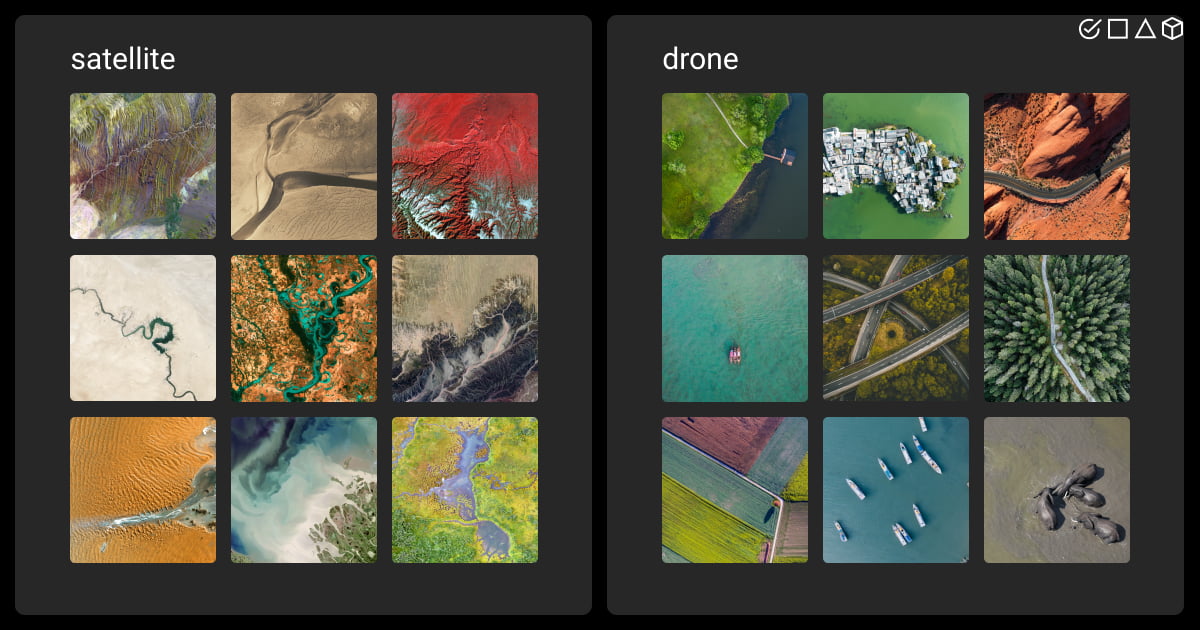

At first glance, you might not be able to describe exactly what you see in satellite or drone imagery. But as cameras and sensors capture and process the data, we observe the Earth from different angles. Satellite imagery has become a popular input for machine learning and deep learning techniques: well-trained models recognize objects and track surface changes as new images arrive.
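A trained model typically turns each image into a classified mask, and surface change is then found by comparing masks of the same area from two dates. A minimal sketch of that comparison step only (not the model), assuming two hypothetical co-registered masks where 1 = forest and 0 = cleared, and a hypothetical 10 m pixel size:

```python
def changed_area(before, after, from_class, to_class, pixel_size_m=10.0):
    """Count pixels that switched from one class to another and convert the count to hectares."""
    if len(before) != len(after) or any(len(b) != len(a) for b, a in zip(before, after)):
        raise ValueError("masks must be co-registered and have the same shape")
    changed = sum(
        1
        for row_b, row_a in zip(before, after)
        for b, a in zip(row_b, row_a)
        if b == from_class and a == to_class
    )
    return changed, changed * pixel_size_m ** 2 / 10_000  # 1 ha = 10,000 m^2


FOREST, CLEARED = 1, 0  # hypothetical class IDs
before = [[1, 1, 1], [1, 1, 0], [0, 1, 1]]
after = [[1, 0, 0], [1, 1, 0], [0, 0, 1]]
print(changed_area(before, after, FOREST, CLEARED))  # (pixel count, hectares)
```

Real workflows also have to handle misregistration between dates and seasonal differences, which this sketch ignores.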

ML algorithms have helped us take a big step forward in environmental analysis and agriculture. GPS navigation systems have improved as never before, wildlife conservation has become a more achievable goal, and precise climate forecasts no longer come as a surprise.

Drones, also known as unmanned aerial vehicles (UAVs), made it possible to explore the surface in more detail. Although limited in coverage, altitude, and flight time, drones capture high-resolution imagery, and their data comes with more attributes and in more forms. The logic behind using AI algorithms for analysis is the same as for satellite imagery, but the scale and focus differ.

Both drone and satellite data are accessible through commercial, governmental, or open-source channels. The cost and availability depend on the data provider. However, open resources such as USGS Earth Explorer (a public satellite data portal) or OpenDroneMap (open-source drone image processing software) can be a good start for creating 3D interactive maps, models, and other projects. Let’s now look at how you can collect and process such data if you want to step up your game and create custom datasets.

Drone and Satellite Data Collection

Scale and resolution are not the only features that set drones and satellites apart. When comparing drone and satellite images, you’ll also notice differences in operational flexibility, which favors drones, and coverage area, which favors satellites.

Operational drones collect data through various built-in cameras and sensors. While RGB cameras and zoom lenses are a must, the availability of thermal cameras, electro-optical (EO) systems, LiDAR, and multispectral sensors depends on the drone type.

Satellite and drone photos go through the same stages of automated data collection: data capture by sensing instruments, data transmission, processing, and analysis.

Satellite sensors capture data by measuring electromagnetic radiation reflected or emitted from the Earth’s surface. To record it, satellites are equipped with radiometers, multispectral sensors, and synthetic aperture radars (SARs).
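SAR instruments measure how much of their own radar signal is scattered back to the sensor, and calibrated backscatter (sigma-nought) is commonly converted to decibels before annotation or modeling: sigma0_dB = 10 * log10(sigma0). A minimal sketch; the function name `to_db`, the floor value, and the sample pixel values are hypothetical:

```python
import math


def to_db(sigma0_linear: float, floor: float = 1e-10) -> float:
    """Convert linear SAR backscatter (sigma-nought) to decibels.

    The floor avoids log10(0) for no-data or fully dark pixels.
    """
    return 10.0 * math.log10(max(sigma0_linear, floor))


# Hypothetical calibrated backscatter values for three pixels
pixels = [0.25, 0.01, 1.0]
print([round(to_db(v), 2) for v in pixels])
```

Working in decibels compresses the large dynamic range of radar returns, which makes the values easier to visualize and label.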

Depending on the sensor and camera used, satellite and UAV aerial imagery comes as raw data or in formats such as JPEG, TIFF, PNG, ENVI, or HDF, which serve as the source for further data annotation.

To get the most valuable data for your geospatial project, get help from our experts at Label Your Data.

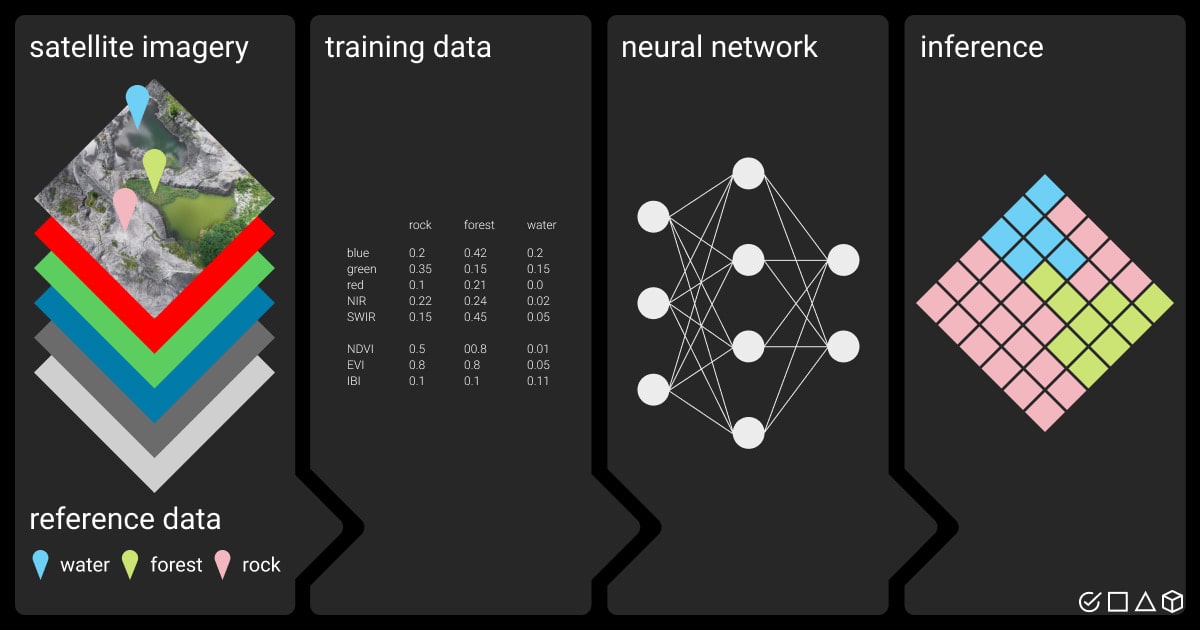

Drones vs. Satellites Data Annotation

Before building a new application, you need to collect, label, and process the raw data. Data tagging services provide annotated data that can later be used to train ML models. Drone or satellite data annotation is essential for object detection, monitoring, segmentation, or image classification across major industries.

Drone and satellite imagery is the starting point, whether your future app will handle infrastructure inspection or track the biggest farmlands in the country. No matter the scale of your project, drone data annotation services or geospatial annotation services will process your raw data as needed. Drone and satellite data power various computer vision applications through these types of data labeling:

Semantic segmentation. This type of annotation divides satellite and UAV images into segments by assigning a class label to each pixel, which splits the image into areas belonging to different classes.

Image categorization. Tagging whole frames or specific image regions indicates which objects appear in drone photos.

Object detection. Objects of interest are marked, usually with bounding boxes, and given corresponding labels. This type of annotation makes satellite images more specific and ready for AI applications.

LiDAR annotation. Drones’ LiDAR sensors produce point clouds with 3D spatial information. Annotating these point clouds with 3D cuboids captures each object’s position, size, and depth, which is often needed in geology or construction.
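The label types above are usually stored as simple data structures. A minimal sketch of three common representations and one check for each, under hypothetical values and conventions: a per-pixel class mask (class IDs 0 = background, 1 = building, 2 = road), bounding boxes as `(x_min, y_min, x_max, y_max)` compared by intersection over union (IoU), and an axis-aligned 3D cuboid (real tools usually also store a yaw angle, omitted here) that counts enclosed LiDAR points:

```python
from collections import Counter
from dataclasses import dataclass

# Semantic segmentation: one class ID per pixel (hypothetical IDs)
CLASSES = {0: "background", 1: "building", 2: "road"}
mask = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 2, 2],
    [0, 0, 0, 0],
]


def class_fractions(mask):
    """Share of pixels assigned to each class."""
    counts = Counter(pixel for row in mask for pixel in row)
    total = sum(counts.values())
    return {CLASSES[cid]: n / total for cid, n in sorted(counts.items())}


# Object detection: boxes as (x_min, y_min, x_max, y_max) in pixels
def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# LiDAR: axis-aligned cuboid given by center and size in meters (no yaw)
@dataclass
class Cuboid:
    cx: float
    cy: float
    cz: float
    length: float
    width: float
    height: float

    def contains(self, p):
        x, y, z = p
        return (abs(x - self.cx) <= self.length / 2
                and abs(y - self.cy) <= self.width / 2
                and abs(z - self.cz) <= self.height / 2)


print(class_fractions(mask))  # pixel share per class
print(round(iou((10, 10, 50, 50), (30, 30, 70, 70)), 3))  # annotated vs. predicted box
points = [(1.0, 1.0, 0.5), (1.4, 0.8, 1.2), (5.0, 5.0, 0.0), (0.2, 1.9, 0.1)]
box = Cuboid(1.0, 1.0, 0.75, 2.0, 2.0, 1.5)
print(sum(box.contains(p) for p in points))  # LiDAR points inside the cuboid
```

IoU is the usual way to decide whether a predicted box matches an annotated one, so consistent box conventions matter as much as the labels themselves.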

These annotation types are not an exhaustive list. To get a full and detailed view of an image, you’ll also refer to parameters such as spatial resolution and georeferencing. And with metadata, lighting conditions, and spectral bands, you’ll be better equipped for further data usage.
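Georeferencing links each pixel to map coordinates. A common way to store this for rasters is the six-coefficient affine geotransform used by GDAL. A minimal sketch of the pixel-to-map conversion in plain Python (not a call into the GDAL API); the geotransform values are hypothetical, describing a north-up image with 0.5 m pixels in a projected coordinate system:

```python
def pixel_to_map(col, row, gt):
    """Map pixel coordinates to map coordinates with a GDAL-style geotransform.

    gt = (x_origin, pixel_width, row_rotation, y_origin, col_rotation, pixel_height).
    (col, row) refer to a pixel's upper-left corner; add 0.5 to each for its center.
    For north-up images the rotation terms are 0 and pixel_height is negative.
    """
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


# Hypothetical north-up geotransform: upper-left corner at (500000, 4100000), 0.5 m pixels
geotransform = (500000.0, 0.5, 0.0, 4100000.0, 0.0, -0.5)
print(pixel_to_map(100, 200, geotransform))
```

Storing annotations in pixel coordinates and converting them with the image’s geotransform keeps labels usable across maps and other imagery of the same area.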

Satellite vs. Drone Imagery: Technical Features That Set Them Apart

Besides data processing techniques, the difference between satellite and drone imagery lies in their technical parameters. Because satellites orbit the Earth at much higher altitudes than drones, they cannot provide the same level of detail. Drones, on the other hand, can show you data in real time, without the need to store it in ground stations first.

Below are the main technical features for drones vs. satellites. They influence the final image we get and the image parameters that are further used in data annotation.

| Technical features | Satellites | Drones |
|---|---|---|
| Ground sample distance (GSD) / spatial resolution | Lower (from about 30 cm to 30 m or more per pixel, depending on the satellite) | High (from cm to mm per pixel) |
| Map scale | Low (1:10,000-1:100,000) | High (1:500-1:1,000) |
| Timeline of recording | Near-continuous observation | Depends on flight duration and … |
| Colors | Visible spectrum (RGB) | Visible spectrum (RGB) |
| Data products | Thematic maps, orthorectified … | Standard imagery, LiDAR point … |
| Sensors | Optical sensors | Visible spectrum cameras |
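The GSD row follows from camera geometry: for a nadir (straight-down) image, the ground footprint is altitude × sensor width / focal length, and dividing it by the image width in pixels gives the GSD. The same geometry explains why flying lower trades coverage for detail. A minimal sketch; the camera parameters are illustrative values, not the specification of a particular drone:

```python
def footprint_m(altitude_m, sensor_mm, focal_mm):
    """Ground distance (m) covered by one sensor dimension in a nadir image."""
    return altitude_m * sensor_mm / focal_mm


def gsd_cm_per_px(altitude_m, sensor_width_mm, focal_mm, image_width_px):
    """Ground sample distance in cm/pixel for a nadir image."""
    return footprint_m(altitude_m, sensor_width_mm, focal_mm) * 100 / image_width_px


# Illustrative camera: 13.2 mm sensor width, 8.8 mm focal length, 5472 px image width
for altitude in (30, 60, 120):
    width = footprint_m(altitude, 13.2, 8.8)
    gsd = gsd_cm_per_px(altitude, 13.2, 8.8, 5472)
    print(f"{altitude} m: footprint {width:.0f} m wide, GSD {gsd:.2f} cm/px")
```

Both the footprint and the GSD grow linearly with altitude, so halving the altitude halves the pixel size but also halves the width of ground covered per image.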

Beyond sensors and technical characteristics, the difference between drone and satellite imagery also depends on the day of recording, weather conditions, and other details. Let’s compare.

Comparing Images from Drones with Satellite Images: Final Data Output

Several conditions influence the final image you get. Consider them while defining how you plan to use the imagery.

Altitude. Since satellites operate at much higher altitudes, they have a wider field of view and capture a larger area. Drones, in turn, fly lower and therefore provide higher resolution, which gives you more detail in the final image.

Weather conditions. Satellites are largely unaffected by weather, but clouds block their optical sensors (radar sensors such as SAR can image through cloud cover). Drones are restricted by precipitation and wind.

Control and flexibility. Because drones are controlled by operators, their altitude and flight paths can be adjusted to capture targeted data. Satellites, by contrast, depend on their orbits and revisit times, leaving little to no room for adjustment.
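Revisit time and cloud cover together decide when usable optical satellite imagery of a site becomes available. A minimal sketch, assuming a fixed, hypothetical revisit interval and hypothetical per-pass cloud-cover estimates (real revisit schedules vary with orbit, latitude, and tasking); it returns the first pass clear enough to use:

```python
from datetime import date, timedelta


def pass_dates(last_pass, revisit_days, count):
    """Next `count` acquisition dates after `last_pass`, assuming a fixed revisit cycle."""
    return [last_pass + timedelta(days=revisit_days * k) for k in range(1, count + 1)]


def first_usable(passes, cloud_cover, max_cloud=20.0):
    """First pass with cloud cover (percent) at or below `max_cloud`, or None."""
    for d in passes:
        # Passes without an estimate are treated as fully cloudy
        if cloud_cover.get(d, 100.0) <= max_cloud:
            return d
    return None


# Hypothetical: last image on 1 June, 5-day revisit
passes = pass_dates(date(2024, 6, 1), 5, 4)  # 6, 11, 16, 21 June
clouds = {date(2024, 6, 6): 80.0, date(2024, 6, 11): 45.0, date(2024, 6, 16): 10.0}
print(first_usable(passes, clouds))
```

A drone, by contrast, could be flown on any day with suitable weather, which is the flexibility described above.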

Training models on drone or satellite images makes it possible to use them for automation, decision-making, and various kinds of analysis across businesses.

Application of Drone and Satellite Images Across Industries

The valuable data gathered by drones and satellites helps us prevent issues, uncover problematic areas, and proactively work on improvements. With the help of AI, aerial imagery is actively used in the following industries:

Agriculture. Farming has become more precise: with data annotation services in agriculture, you can prevent pest infestations, evaluate soil, and take weather conditions into account.

Infrastructure and urban planning. AI now helps assess land for construction, categorize urban areas for planning, and inspect the condition of buildings and roads.

Environmental studies. AI algorithms make it possible to detect changes in the environment, evaluate recovery after damage, and help prevent disasters such as deforestation or wildlife extinction.

Security. The main uses are surveillance and monitoring, widely applied in border control, construction, and military activities.

Transportation. Monitoring allows us to analyze traffic, improve transportation systems, and contribute to map updates.
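In agriculture, much of this analysis starts from spectral indices. The best known is NDVI (Normalized Difference Vegetation Index), computed per pixel from the near-infrared (NIR) and red bands recorded by multispectral sensors on satellites and drones: NDVI = (NIR - Red) / (NIR + Red). Healthy vegetation reflects strongly in NIR and absorbs red light, so it scores high. A minimal sketch; the reflectance values and the 0.3 threshold are hypothetical:

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel; ranges from -1 to 1."""
    total = nir + red
    return (nir - red) / total if total else 0.0


# Hypothetical surface reflectance (NIR, Red) for a few field pixels
pixels = {"healthy": (0.50, 0.08), "stressed": (0.30, 0.18), "bare_soil": (0.25, 0.20)}

STRESS_THRESHOLD = 0.3  # illustrative cut-off; real thresholds depend on crop and season
for name, (nir, red) in pixels.items():
    value = ndvi(nir, red)
    flag = "check" if value < STRESS_THRESHOLD else "ok"
    print(f"{name}: NDVI={value:.2f} ({flag})")
```

Flagged areas are candidates for closer inspection, for example with a low-altitude drone flight, rather than a diagnosis on their own.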

Summary

Now that we have taken a deep dive into drone and satellite data, you should have a better sense of which imagery best suits your needs. Although drones and satellites share many parameters, the right choice depends on the requirements and demands of your project.

When large-scale coverage or archival data is needed, satellites do the job. For detailed, targeted tasks, drones take the lead. Quite often, drones and satellites complement each other, providing more accurate and precise data.

To process huge amounts of raw aerial data and prepare it for AI applications, get help from our professionals. Send your quote request to Label Your Data, and we’ll take the utmost care of your drone or satellite data.

FAQ

What is one benefit of satellite images over drone images?

Satellite data is often ready for processing and application. Analyzing drone imagery can be more time-consuming, as it often requires on-site data processing or uploading data to cloud platforms. Satellite imagery, in contrast, can be readily accessed and analyzed through specialized software platforms, reducing the time and effort required for data processing.

What are the disadvantages of using a drone instead of a satellite?

Drones are often considered the more advanced option. However, their downsides include difficulty operating in bad weather, limited battery life, and environmental noise, which can interfere with accurate monitoring and control.

How are satellite images so accurate?

Several factors influence satellite image accuracy: orbit positioning, sensor calibration, and atmospheric correction, among others. Georeferencing, for example, assigns real-world coordinates to image pixels, which relies on knowing the satellite’s position in orbit. Positional accuracy, in turn, is the distance between an object’s actual geographic location and its position in the image. It depends on several factors, such as satellite positioning technology, terrain relief, and sensor viewing angle.
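Positional accuracy is commonly summarized as a root-mean-square error (RMSE) over check points whose true coordinates are known from a ground survey. A minimal sketch, assuming hypothetical check points with surveyed and image-derived coordinates in meters, both in the same projected coordinate system:

```python
import math


def horizontal_rmse(true_xy, image_xy):
    """Horizontal RMSE (m) between surveyed and image-derived point coordinates."""
    if len(true_xy) != len(image_xy) or not true_xy:
        raise ValueError("need matching, non-empty point lists")
    squared = [(tx - ix) ** 2 + (ty - iy) ** 2
               for (tx, ty), (ix, iy) in zip(true_xy, image_xy)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical check points: surveyed position vs. position measured in the image
surveyed = [(100.0, 200.0), (150.0, 250.0), (300.0, 120.0)]
from_image = [(103.0, 204.0), (150.0, 250.0), (300.0, 125.0)]
print(round(horizontal_rmse(surveyed, from_image), 2))
```

Because RMSE squares each offset, a single badly placed point raises the score noticeably, which helps surface local distortions such as those caused by terrain relief.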

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.