Image Recognition: How to Train AI to Recognize Images

TL;DR

- Image recognition is a key technology driving advancements in computer vision across industries.

- Leverage CNNs for accurate and efficient image recognition in AI projects.

- Simplify images for machines by reducing noise and focusing on essential features.

- Use labeled data for training AI models to enhance recognition accuracy.

- Address hardware limitations by compressing images and optimizing storage.

- Explore use cases like facial recognition, e-commerce, and automation to integrate AI image recognition into your business.

Can AI Read Images? Understanding Artificial Intelligence Image Recognition

Image recognition is everywhere, even if you never give it a second thought. It's there when you unlock a phone with your face or when you look for photos of your pet in Google Photos. It can power life-saving applications like self-driving cars and diagnostic healthcare. But it can also be small and fun, like an app that identifies a wine from a photo of its label.

AI-based image recognition is an essential computer vision technology that can be either a building block of a bigger project (e.g., when paired with object tracking or instance segmentation) or a stand-alone task. Computer vision services are crucial for teaching machines to look at the world as humans do, and for helping them reach the level of generalization and precision that we possess.

How Does Image Recognition Work in AI?

A machine needs hundreds or even thousands of examples before it is properly trained to recognize objects, faces, or text characters. That's because the task of image recognition is not as simple as it seems. So, if you're looking to leverage AI recognition technology for your business, it might be time to hire AI engineers who can develop and fine-tune these sophisticated models.

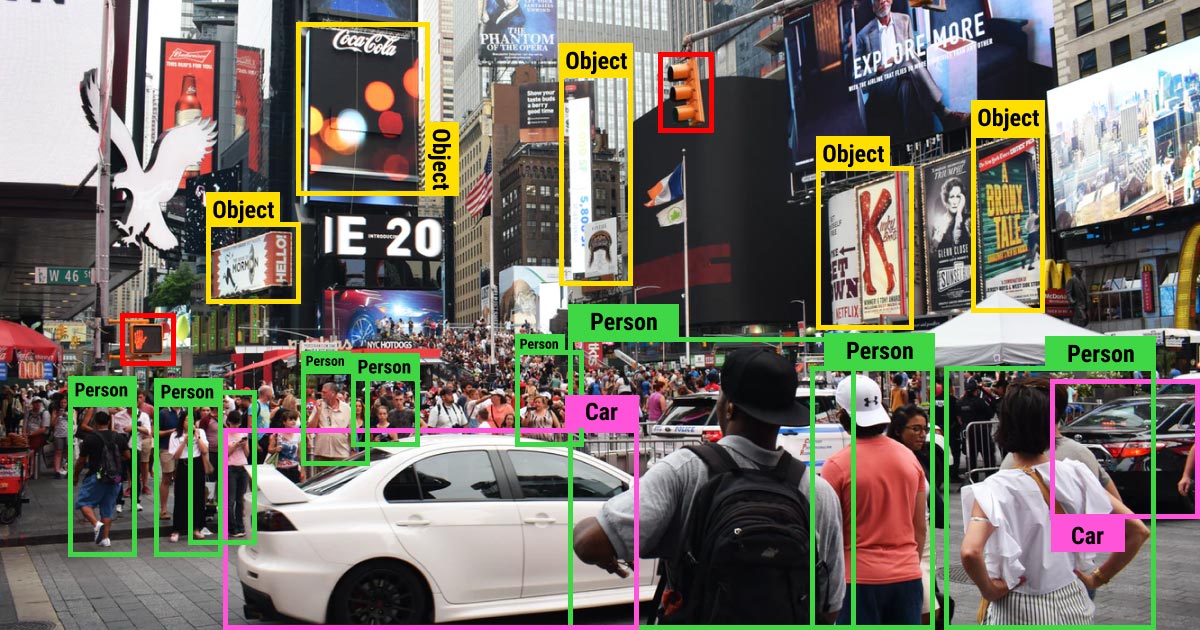

Image recognition in AI consists of several different tasks (like classification, labeling, prediction, and pattern recognition) that human brains are able to perform in an instant. Neural networks work well for AI image identification because they chain many simple computations together in layers, so that the output of one layer becomes the input for the next.

Given enough training, AI image recognition algorithms can offer predictions precise enough to seem like magic to those who don't work with AI or ML. Digital giants such as Google and Facebook have reported face recognition accuracy close to 98% on benchmark datasets, which is about as good as people are at telling faces apart. This level of precision is mostly due to the tedious work that goes into training the ML models. This is where data processing and data annotation come in. Without labeled data, all that intricate model-building would be for naught. But let's not get ahead of ourselves. First, let's see: how does AI image recognition work?

How to Train AI to Interpret Images

Let's say you're looking at the image of a dog. You can tell that it is, in fact, a dog; but an image recognition algorithm works differently. It will most likely say the image is 77% dog, 21% cat, and 2% donut. These percentages are referred to as confidence scores.
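To make the idea of a confidence score more concrete, here is a minimal sketch of one common way such percentages are produced: a softmax function that turns a model's raw scores into shares that sum to 100%. The raw scores below are made up for illustration, and a real model may compute its scores differently.

```python
import math

def softmax(scores):
    """Convert raw model scores into probabilities that sum to 1."""
    # Subtract the largest score before exponentiating for numerical stability.
    peak = max(scores.values())
    exps = {label: math.exp(s - peak) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

# Hypothetical raw scores for a single image (illustrative values only).
raw_scores = {"dog": 3.2, "cat": 1.9, "donut": -0.4}

confidence = softmax(raw_scores)
prediction = max(confidence, key=confidence.get)

for label, share in confidence.items():
    print(f"{label}: {share:.0%}")
print("prediction:", prediction)
```

With these made-up scores, the printed shares come out close to the 77% / 21% / 2% split from the dog example, and the label with the highest share becomes the prediction.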

In order to make this prediction, the machine has to first understand what it sees, then compare its image analysis to the knowledge obtained from previous training and, finally, make the prediction. As you can see, the image recognition process consists of a set of tasks, each of which should be addressed when building the ML model.

Neural Networks in Image Matching AI

Unlike humans, machines see images as raster images (a grid of pixels) or vector images (geometric shapes such as polygons). This means that machines analyze visual content differently from humans, and so they need labeled examples to learn what is going on in an image. Convolutional neural networks (CNNs) are a good choice for such image recognition tasks since they learn from those examples which visual patterns matter. Due to their multilayered architecture, they can detect and extract complex features from the data.

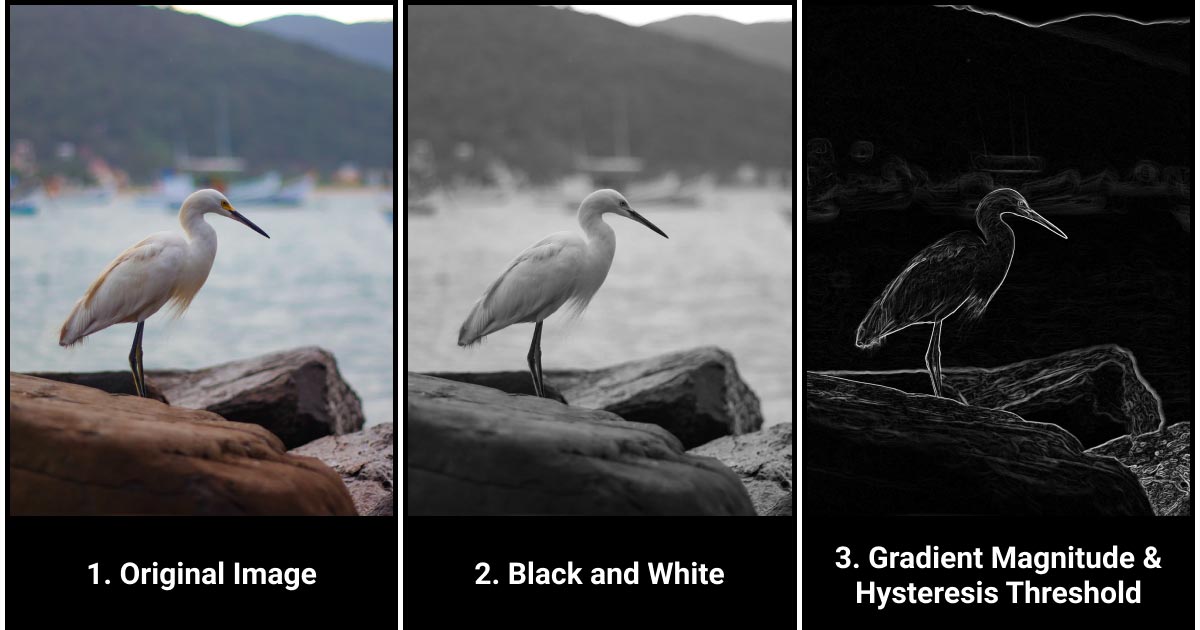

We've compiled a shortlist of steps that an image goes through to become readable for the machines:

- Simplification. For a start, you have your original picture. You convert it to grayscale and apply a slight blur. This prepares the image for feature extraction, which is the process of defining the general shape of your object and ruling out the detection of smaller or irrelevant artifacts without losing the crucial information.

- Detection of meaningful edges. Then, you compute the gradient magnitude, which measures how sharply brightness changes between adjacent pixels. It gives you the general edges of the object you are trying to detect. As an output, you will get a rough silhouette of your primary object.

- Defining the outline. Next, you need to define the edges, which can be done with the help of non-maximum suppression and hysteresis thresholding. These methods reduce the edges of the object to single, most probable lines, and leave you with a simple clean-cut outline. The output geometric lines allow the algorithm to classify and recognize your object.
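The steps above can be sketched in plain Python on a tiny toy image. This is only an illustration under stated assumptions: the 6x6 image, the 3x3 box blur, the Sobel filters, and the 0.75 threshold factor are all choices made for this example, and a plain threshold stands in for non-maximum suppression and hysteresis thresholding to keep the sketch short.

```python
import math

def to_grayscale(rgb_image):
    """Collapse each (R, G, B) pixel into a single brightness value."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]

def box_blur(gray):
    """Average each pixel with its 3x3 neighbourhood (borders are clamped)."""
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [gray[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            out[y][x] = sum(window) / 9.0
    return out

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def gradient_magnitude(gray):
    """Sobel gradient magnitude; border pixels are left at 0."""
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(SOBEL_X[j][i] * gray[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(SOBEL_Y[j][i] * gray[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            out[y][x] = math.hypot(gx, gy)
    return out

# Toy 6x6 image: dark left half, bright right half (one vertical edge).
image = [[(0, 0, 0)] * 3 + [(255, 255, 255)] * 3 for _ in range(6)]

gray = to_grayscale(image)              # step 1a: grayscale
blurred = box_blur(gray)                # step 1b: blur
magnitude = gradient_magnitude(blurred) # step 2: gradient magnitude

# Step 3 (simplified): a plain threshold instead of non-maximum
# suppression and hysteresis thresholding.
threshold = 0.75 * max(max(row) for row in magnitude)
edges = [[1 if m >= threshold else 0 for m in row] for row in magnitude]

for row in edges:
    print("".join("#" if e else "." for e in row))
```

On this toy input, the printed map marks the two middle columns where the dark and bright halves meet, while the top and bottom border rows stay empty because the sketch skips them.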

This is a simplified description, adopted for the sake of clarity for readers who do not possess the domain expertise. There are other ways to design an AI-based image recognition algorithm. However, CNNs currently represent the go-to way of building such models. In a CNN, filters comparable to the edge detectors above are learned from the training data rather than coded by hand. That is why CNNs require very little pre-processing and essentially answer the question of how to program self-learning for AI image identification.

Annotate the Data for Image Recognition Models

In a typical ML project, the bulk of human work and time is spent on assigning tags and labels to the data. This produces labeled data, which is the resource that your ML algorithm will use to learn a human-like vision of the world. Naturally, models that perform image recognition without labeled data exist, too. They rely on unsupervised machine learning, but these models have a lot of limitations. If you want a properly trained image recognition algorithm capable of complex predictions, you need to get help from experts offering image annotation services.

In practice, data annotation in AI means that you take your dataset of several thousand images and add meaningful labels or assign a specific class to each image. Usually, enterprises that develop the software and build the ML models have neither the resources nor the time to perform this tedious and voluminous work. Outsourcing is a great way to get the job done while paying only a small fraction of the cost of training an in-house labeling team.
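As a rough illustration of what labeled data looks like once annotation is done, the sketch below pairs each image with a class and turns the class names into the integer targets a model is typically trained on. The `LabeledImage` type, the file paths, and the classes are all hypothetical; real annotation formats vary by tool and task.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class LabeledImage:
    path: str   # where the image file lives (hypothetical paths below)
    label: str  # class assigned by a human annotator

# A tiny made-up dataset; real projects contain thousands of such records.
dataset = [
    LabeledImage("images/0001.jpg", "dog"),
    LabeledImage("images/0002.jpg", "cat"),
    LabeledImage("images/0003.jpg", "dog"),
    LabeledImage("images/0004.jpg", "donut"),
]

# Map each class name to an integer id for training.
classes = sorted({item.label for item in dataset})
class_to_id = {name: idx for idx, name in enumerate(classes)}
targets = [class_to_id[item.label] for item in dataset]

# Checking class balance early helps spot under-represented classes.
counts = Counter(item.label for item in dataset)

print(class_to_id)
print(targets)
print(counts.most_common())
```

Keeping the class-to-id mapping alongside the dataset matters in practice, since the same mapping is needed later to translate the model's numeric predictions back into readable labels.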

Hardware Problems of AI Photo Identification

After getting your network architecture ready and carefully labeling your data, you can train the AI image recognition algorithm. This step is full of pitfalls that you can read about in our article on AI project stages. A separate issue that we would like to share with you deals with the computational power and storage constraints that can drag out your schedule.

Hardware limitations often represent a significant problem for the development of an image recognition algorithm. Computational resources are not limitless, and images are a heavy type of content that requires a lot of processing power. Besides, there's another question: how does an AI image recognition model store data?

To overcome the limitations of computational power and storage, you can work on your data to make it more lightweight. Compressing the images lets you train the image recognition model with less computational power without losing much training data quality. It also mirrors the simplification step described earlier. Converting images to grayscale has a similar effect: it saves storage space and computational resources without losing much of the visual data. Naturally, these are not exhaustive measures, and they need to be applied with your goal in mind. High-quality data is still required for building an accurate algorithm. However, you might find enough leeway to keep the schedule and cost of your image recognition project in check.
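A quick back-of-the-envelope calculation shows how much these measures can save. The numbers below are assumptions picked for illustration (10,000 images, 1024x1024 pixels, 8 bits per channel), downscaling is used here as a simple stand-in for compression, and the sizes are for raw, uncompressed pixel data rather than for files in a format such as JPEG or PNG.

```python
def raw_size_bytes(width, height, channels, bytes_per_channel=1):
    """Uncompressed size of one image in bytes."""
    return width * height * channels * bytes_per_channel

# Assumed dataset: 10,000 images with 8 bits (1 byte) per channel.
n_images = 10_000

rgb = raw_size_bytes(1024, 1024, channels=3)         # full-color original
gray = raw_size_bytes(1024, 1024, channels=1)        # converted to grayscale
gray_small = raw_size_bytes(256, 256, channels=1)    # grayscale and downscaled

variants = [
    ("RGB 1024x1024", rgb),
    ("grayscale 1024x1024", gray),
    ("grayscale 256x256", gray_small),
]
for name, size in variants:
    per_image_mib = size / 2**20
    total_gib = size * n_images / 2**30
    print(f"{name}: {per_image_mib:.2f} MiB per image, {total_gib:.2f} GiB total")
```

Under these assumed numbers, dropping color cuts the raw size to a third, and shrinking each side by a factor of four cuts it a further sixteenfold. Whether the model still sees enough detail at the smaller size depends on the task.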

What Does Image Recognition Bring to the Business Table?

Now that we've talked about the "how", let's look at the "why". Why is image recognition useful for your business? What are some use cases, and what is the future of this form of artificial intelligence?

The most obvious AI image recognition examples are Google Photos and Facebook. These powerful engines are capable of analyzing just a couple of photos to recognize a person (or even a pet). Facebook, for instance, has used this capability to suggest whom to tag in photos. There are also some curious e-commerce uses for this technology.

For example, with the AI image recognition algorithm developed by the online retailer Boohoo, you can snap a photo of an object you like and then find a similar object on their site. But, if you want more options, you can also use AI reverse image search to explore similar products across multiple platforms by simply uploading an image instead of browsing manually. This relieves the customers of the pain of looking through the myriads of options to find the thing that they want.

Facial recognition is another obvious example of image recognition in AI that needs no introduction. There are, of course, certain risks connected to the ability of our devices to recognize the faces of their owners. Image recognition also promotes brand recognition as the models learn to identify logos. A single photo allows searching without typing, which is a growing trend. Detecting text is yet another side to this technology, as it opens up quite a few opportunities (thanks to expertly handled NLP services) for those who look into the future.

Here's one more fascinating AI image recognition example: let's say you are in a restaurant with your colleagues. The bill arrives, and you start to type in numbers to split it fairly. That can be quite frustrating after a fine meal; instead, you could download an app that would read every line item and let you split the bill automatically. Isn't AI great? And while we're talking about machines reading text, we should not forget about automation. Actually, we've dedicated a whole two-parter to the topic of automated data collection and OCR, so don't forget to visit to learn more!

Advancements and Trends in Image Recognition

AI image recognition technology has seen remarkable progress, fueled by advancements in deep learning algorithms and the availability of massive datasets. The current landscape is shaped by several key trends and factors.

Key Trends

- Convolutional Neural Networks (CNNs): CNNs are pivotal for image classification and object detection. They excel at identifying patterns and features in images, which is crucial for applications like facial recognition and autonomous driving. For example, Tesla’s autonomous vehicles use CNNs to interpret visual data for navigation and obstacle detection.

- Generative Adversarial Networks (GANs): GANs are used to generate realistic images and enhance image quality. They consist of two neural networks that compete to produce high-quality synthetic data, which has applications in entertainment for creating special effects and animations.

- Integration with AR and VR: Image recognition is increasingly integrated with augmented reality (AR) and virtual reality (VR) to create immersive experiences. In retail, AR applications such as virtual try-ons for clothing and makeup provide personalized shopping experiences.

- Transfer Learning: Transfer learning involves applying pre-trained models to new datasets, improving efficiency and accuracy while reducing the need for extensive training data. Healthcare applications, for example, use transfer learning to adapt pre-trained models for diagnosing diseases from medical images like X-rays and MRIs.

Contributing Factors

- Availability of Image Data: The proliferation of smartphones and social media has led to an exponential increase in image data, providing a rich resource for training models. Social media platforms like Instagram and Facebook use image recognition to automatically tag and organize photos.

- Advancements in Computing Power: The rapid development of GPUs and cloud computing has significantly accelerated the processing capabilities required for deep learning models, making complex image recognition tasks feasible. Cloud services like Amazon Web Services (AWS) and Google Cloud offer powerful resources for training and deploying these models.

- Demand for Automation and Intelligent Systems: Industries are increasingly adopting automation and intelligent systems to enhance efficiency and productivity. Automated checkout systems in retail use image recognition to identify and tally products without human intervention.

- Edge Computing: Edge computing is enhancing real-time processing and decision-making by deploying image recognition models on edge devices. This is crucial for applications requiring low latency and high responsiveness. Security cameras with built-in image recognition capabilities can detect and alert about suspicious activities in real time.

- Importance in Security and Surveillance: Image recognition is becoming increasingly important in security and surveillance to monitor and analyze video feeds for potential threats and incidents. Airports and public spaces use this technology to enhance security by identifying individuals on watchlists and detecting unattended objects.

These advancements and trends underscore the transformative impact of AI image recognition across various industries, driven by continuous technological progress and increasing adoption rates.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance with a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, even your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

Is there an AI that can identify images?

Yes, several AI models can identify images, including Google Lens, Apple Visual Look Up, OpenAI's CLIP, Amazon Rekognition, and Microsoft Azure Computer Vision. These tools analyze and categorize images based on extensive datasets.

How does AI recognize images?

AI recognizes images by analyzing patterns, shapes, colors, and textures using deep learning models, particularly convolutional neural networks (CNNs). These models extract features from images and classify them based on trained datasets.

Which AI technique is used for image recognition?

Convolutional Neural Networks (CNNs) are the most common AI technique for image recognition. They process visual data by applying filters that detect edges, textures, and object structures, enabling accurate classification.

How do I use Google image recognition?

You can use Google Lens for image recognition by uploading an image to Google Images or using the Google Lens app on mobile devices. It analyzes the image and provides relevant information, such as object identification, similar images, or shopping links.

What is image recognition?

Image recognition is an AI-driven process that enables machines to identify and classify objects, people, or patterns in images. It is used in applications like facial recognition, medical diagnostics, and autonomous vehicles.

How do I use Apple image recognition?

Apple’s Visual Look Up and Live Text features allow image recognition on iPhones and iPads. You can use the Photos app to identify objects, landmarks, plants, and animals or extract text from images.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.