Top Data Labeling Companies in the UK (2025 Buyer’s Guide)

Table of Contents

- TL;DR

- Essential Checks Before Hiring a Team for Data Annotation UK

- Label Your Data

- CloudFactory

- Aya Data

- DataEQ

- Wolfestone

- DataBee

- AttaLab

- Appen

- TELUS International

- Mindy Support

- Side-by-Side Comparison of AI Data Labeling Companies in the UK

- About Label Your Data

- FAQ

- How do I measure annotation quality before signing a contract?

- Which security credentials matter for sensitive projects?

- Which vendors offer hybrid annotation teams to balance cost-efficiency with domain expertise?

- How quickly can a vendor scale if my data spikes?

- Which integration features save the most engineering time?

TL;DR

- Most UK vendors support CV, NLP, audio, and 3D tasks, but differ in QA methods, tooling, and turnaround speed.

- ISO 27001 certification and GDPR and HIPAA compliance are common, but some vendors go further with office-only work and audit logs.

- Pricing models vary: some offer per-object rates, others use hourly or subscription setups.

- Tooling support ranges from full API access and custom pipelines to managed-service models with no platform.

- Use the side-by-side comparison and Buyer’s Guide to shortlist the right vendor for your project.

Essential Checks Before Hiring a Team for Data Annotation UK

Choosing the right data annotation company can make or break your project, especially if you're training a machine learning model with little margin for error. Use this checklist to evaluate whether a vendor is equipped to deliver high-quality, secure, and scalable data annotation.

- Data Security: GDPR compliance, ISO 27001 or SOC 2, secure storage, NDAs.

- Quality & Accuracy: Measurable QA process, verified benchmarks, SLAs.

- Domain Experience: Relevant use cases, trained teams, task-specific samples.

- Transparent Pricing: Per-unit rates, no surprise fees, volume discounts.

- Project Handling: Dedicated PM, clear updates, responsive communication.
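To turn the checklist into a comparable number, one option is a simple weighted score. The sketch below is illustrative only: the weights, the 1-5 rating scale, and the placeholder vendors are assumptions, not figures from this guide, so adjust them to your own priorities.

```python
# Hypothetical weights for the five checklist criteria (they sum to 1.0).
WEIGHTS = {
    "data_security": 0.30,
    "quality_accuracy": 0.30,
    "domain_experience": 0.20,
    "transparent_pricing": 0.10,
    "project_handling": 0.10,
}


def weighted_score(ratings: dict) -> float:
    """Combine 1-5 ratings for each criterion into one weighted score."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


def rank_vendors(all_ratings: dict) -> list:
    """Return (vendor, score) pairs sorted from best to worst."""
    scored = {vendor: round(weighted_score(r), 2) for vendor, r in all_ratings.items()}
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)


# Made-up ratings for two placeholder vendors.
example_ratings = {
    "Vendor A": {"data_security": 5, "quality_accuracy": 4, "domain_experience": 3,
                 "transparent_pricing": 4, "project_handling": 4},
    "Vendor B": {"data_security": 4, "quality_accuracy": 5, "domain_experience": 5,
                 "transparent_pricing": 3, "project_handling": 4},
}

for vendor, score in rank_vendors(example_ratings):
    print(vendor, score)
```

Putting more weight on security and quality reflects a regulated, accuracy-sensitive project; a budget-driven pilot would shift weight toward pricing.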

Want a full scoring framework to compare vendors head-to-head? Get the Buyer’s Guide to Data Labeling Vendors to evaluate your shortlist with confidence.

When selecting vendors, prioritize domain knowledge over generic labeling capabilities. Our biggest ROI came from working with specialists who understood CRE terminology and could accurately identify financial metrics across inconsistently formatted documents.

Label Your Data

Fully managed data annotation services for ML teams who need training data without the drag of doing it in-house. Our data annotation platform supports computer vision workflows and delivers 98%+ accuracy through layered QA. You get tool-agnostic output, secure workflows, and real support from teams who’ve labeled everything from surgical videos to financial documents.

We also support LLM fine-tuning services and offer data collection services to help you build custom datasets from scratch.

Pros of Label Your Data

- Supports image, video, text, audio, and 3D point cloud annotation

- Output works with any tool or ML pipeline

- 98%+ accuracy with human review baked into workflows

- Certified for PCI DSS, ISO 27001, GDPR, CCPA, and HIPAA

- Industry use cases include automotive, healthcare, geospatial, and e-commerce

- Flexible billing: pay per object or per hour

- Platform includes uploads, a price calculator, API access, and a free pilot option

Check our cost calculator to get rough data annotation pricing for your project.

Cons of Label Your Data

- CV workflows are platform-first; NLP and audio labeling require managed services

- Not built for fully automated, hands-off labeling

Want more detail? Read the full Label Your Data company review.

Label Your Data really stood out because of how adaptable their technology is. They offer a variety of tools and labeling options that easily adjust as project needs change... Working with a vendor that can keep up with those changes means less downtime and a smoother process overall.

CloudFactory

CloudFactory combines a managed workforce with annotation tooling for ML projects, including computer vision and NLP. They support image, video, and LiDAR annotation, and offer NLP annotation via a trained human-in-the-loop workforce. Their model emphasizes dedicated teams, structured onboarding, and a QA process that includes gold checks, consensus review, and pre-labeling using ML models. CloudFactory works best for clients who need scale, process control, and tight integration into their MLOps cycle.

Pros of CloudFactory

- Strong in computer vision: bounding boxes, segmentation, keypoints, 3D cuboids

- Built-in QA layers: gold standards, consensus, senior review, and model-vs-human checks

- Scalable teams: 7,000+ trained annotators across global hubs (UK, Nepal, Kenya)

- Platform flexibility: use their AI Data Platform or integrate with tools like Labelbox

- Enterprise-ready: ISO 27001, HIPAA, GDPR; used by clients in AV, geospatial, healthcare

Cons of CloudFactory

- NLP capabilities are available, but multilingual and domain-specific support is less documented

- 2-week onboarding may slow urgent projects

- No free pilot: offers a 10-hour test analysis, but not a hands-on trial

- Pricing can climb with QA and PM overhead; better suited for long-term projects

- Platform may require ramp-up (reviewers note a learning curve with the AI tooling)

Read the full CloudFactory review to learn more about the vendor.

One UK-based data labeling company that stands out is CloudFactory. What's impressive is their ability to blend human intelligence with tech-driven workflows, delivering consistently high-quality labeled data even in complex AI training scenarios... The right vendor doesn't just annotate, they become a strategic extension of the model training process.

Aya Data

Aya Data is a UK-headquartered provider with operations in West Africa, offering annotation services alongside AI consulting and model development. They focus on quality labeling for text, image, audio, and geospatial data, often in complex or domain-specific use cases like financial document parsing or crop monitoring. With a growing team of trained annotators and data scientists, Aya Data appeals to buyers who need hands-on support and context-aware annotation.

Pros of Aya Data

- Full-service capability: from annotation to model deployment and evaluation

- Skilled workforce: domain-trained annotators in finance, agriculture, and healthcare

- Strong in low-resource and African languages; supports multilingual labeling

- Secure operations: ISO 27001, GDPR, HIPAA, and SOC 2 compliant

- Flexible for custom workflows, with founders often involved in early-stage projects

- Relies on trained in-house staff rather than anonymous gig workers

Cons of Aya Data

- Smaller scale: ~50 full-time staff; very large projects may require longer ramp-up

- Limited visibility: few public case studies or reviews outside of Africa-based work

- Still scaling: product and services teams operate in parallel, which may stretch capacity

- May be too premium for simple bulk tasks; best for nuanced or evolving datasets

DataEQ

DataEQ (formerly BrandsEye) focuses on human-in-the-loop sentiment and intent labeling for customer-facing text data (think social media, reviews, and support tickets). Their platform filters out low-signal content using automation and sends the rest to trained human analysts for fine-grained tagging. It's designed for CX, marketing, and compliance teams who need structured insights from unstructured text, not just raw labels.

Pros of DataEQ

- Great for sentiment, sarcasm, and intent classification

- Combines AI filtering with expert crowd validation for high accuracy

- Real-time dashboards and alerts for fast decision-making

- Custom taxonomies available for industry-specific tagging (e.g., ESG, compliance)

- Enterprise users include financial institutions and telecoms

- GDPR-compliant platform with crowd performance tracking and QA layers

Cons of DataEQ

- Narrow focus: only covers short-form text in CX and compliance contexts

- Not a fit for CV, audio, or general-purpose NLP labeling

- Platform-first: less flexibility for buyers who just want labeled data

- No clear pricing model for per-label or one-off projects

- Exact annotation throughput or crowd metrics are not publicly disclosed

Wolfestone

Wolfestone is a UK-based language services provider that brings its translation expertise into data annotation, with a focus on multilingual text and audio labeling. Their core strength lies in linguistic accuracy, native-language understanding, and ISO-certified workflows. While not a tech-first platform, Wolfestone appeals to ML teams working on multilingual NLP, sentiment tagging, or transcription projects that demand high precision and cultural nuance.

Pros of Wolfestone

- Native-linguist workforce covering 220+ language pairs

- ISO 27001, ISO 9001, and ISO 17100 certified — strong on data security and process quality

- Accurate labeling workflows modeled after translation QA: multiple passes, expert reviewers

- Ideal for tasks requiring cultural context or regulatory compliance (finance, healthcare)

- Dedicated PMs and documented delivery process help manage scale without losing quality

Cons of Wolfestone

- No annotation platform or API; clients rely on human-led project delivery

- Slower ramp-up and turnaround for large-volume projects

- Higher pricing aligned with expert labor, not crowd-sourced scale

- Limited visibility in ML communities; best known for localization, not data labeling

- Less suited for CV or advanced ML workflows (e.g. point cloud, LiDAR)

DataBee

DataBee is a UK-based vendor specializing in document-heavy annotation and structured data extraction. They focus on building long-term annotation teams that integrate into the client’s workflow, often working directly in client-provided tools. With a background in finance and legal data, DataBee fits teams working on complex forms, PDFs, or tabular data that require precision and context.

Pros of DataBee

- Trained annotators with domain knowledge (e.g. finance, legal)

- Flexible setup: works in your tools and adapts to your guidelines

- Consultative approach: analysts give feedback to improve labeling workflows

- Scales gradually with stable core teams, reducing retraining overhead

- Based in Bulgaria with cost-effective European labor and strong English fluency

Cons of DataBee

- Small team (~30 core staff); large projects may need more lead time

- Manual-only workflows: no automation, model-assisted labeling, or pre-labeling tools

- Low online visibility: few public reviews or case studies

- Not designed for fast, low-cost CV tasks; better for high-accuracy document work

- Limited language diversity outside European regions

AttaLab

AttaLab runs a crowd-powered annotation platform built on top of its large survey app user base, offering rapid-turnaround data labeling across vision, text, and audio tasks. With 2.5 million annotators in 43 countries, it’s designed for scale, speed, and cost control. Clients can adjust quality settings via consensus thresholds, making it a flexible choice for early-stage ML projects or high-volume labeling on a tight budget.
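To make the idea of a consensus threshold concrete, here is a minimal sketch of majority voting with an agreement cutoff. It is a generic illustration, not AttaLab's actual implementation; the function name and the default threshold are assumptions.

```python
from collections import Counter


def consensus_label(votes: list, threshold: float = 0.6):
    """Return the majority label if its vote share meets the threshold, else None.

    A None result means annotators disagreed too much and the item
    should go to more annotators or to manual review.
    """
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) >= threshold else None


votes = ["cat", "cat", "dog"]  # two of three annotators agree
print(consensus_label(votes, threshold=0.6))  # accepted: share is about 0.67
print(consensus_label(votes, threshold=0.8))  # rejected: share is below 0.8
```

Raising the threshold trades speed and cost for quality: fewer items pass automatically, so more of them need extra votes or review.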

Pros of AttaLab

- Large-scale crowd: 2.5M contributors available across 11 languages

- Fast delivery: average 24-hour turnaround on standard tasks

- Adjustable consensus settings to balance quality, speed, and cost

- Supports a wide range of annotation types: CV, NLP, audio, OCR

- Simple setup and pricing: marketed as cheaper than AWS Ground Truth

Cons of AttaLab

- Gig-based workforce may struggle with complex or specialized tasks

- Quality control depends on client-side review; no dedicated PM or QA team

- No support for highly sensitive data; crowd access poses compliance risks

- Lacks hands-on service or annotation consulting

- New in the enterprise space; minimal documentation or tooling support beyond core UI

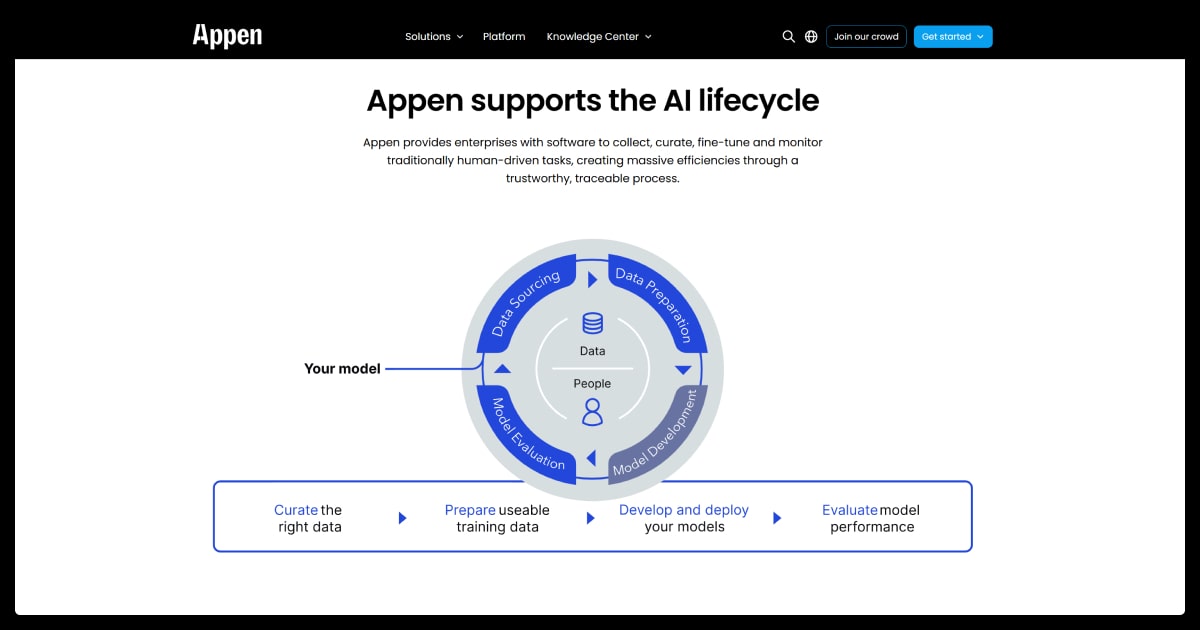

Appen

Appen is one of the largest data labeling service companies globally, offering both managed services and a self-serve platform. With over 1 million crowd workers and a legacy in search relevance and voice data, Appen handles complex, multimodal annotation projects at scale. Their platform includes tools for 2D, 3D, and 4D data, ML-assisted pre-labeling, and detailed QA workflows. Ideal for enterprise teams with large budgets and in-house project management capacity.

Pros of Appen

- Massive scale: 1M+ annotators across 170+ countries and 235+ languages

- Advanced tooling: supports 3D point clouds, temporal video, and smart pre-labeling

- Enterprise-grade compliance: HIPAA, ISO 26262, GDPR, and CCPA ready

- Flexible model: fully managed service or self-serve platform

- Long track record with Big Tech, government, and regulated sectors

Cons of Appen

- High cost: pricing often out of reach for startups or academic teams

- Quality varies: crowd-based work requires close oversight and clear QA rules

- Steep learning curve on the platform; onboarding can be time-consuming

- Less suited for fast-moving, smaller projects due to heavier process overhead

- Mixed crowd feedback on pay and support, which may impact engagement

Read the full Appen company review.

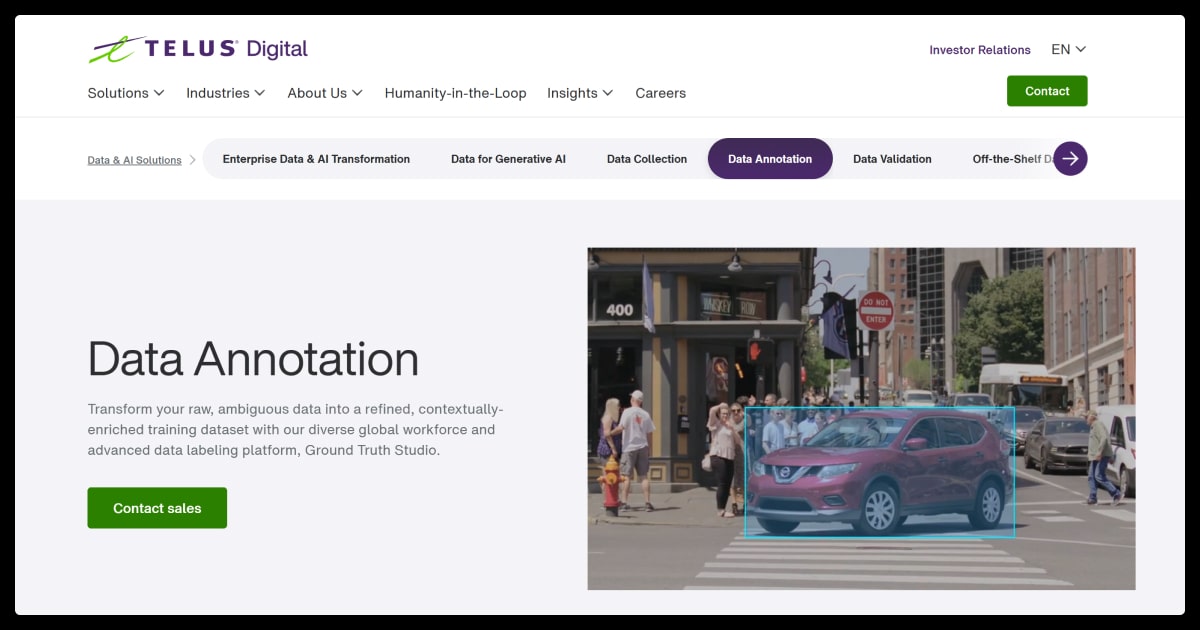

TELUS International

TELUS International offers large-scale annotation services as part of its AI Data Solutions division, built from the acquisitions of Lionbridge AI and Playment. The company supports text, audio, image, and LiDAR data annotation, with managed teams and platform tooling for enterprise-grade projects. With a global workforce and a strong compliance track record, TELUS suits buyers in regulated industries who need security, scale, and multi-language support.

Pros of TELUS International

- Enterprise-ready: GDPR, HIPAA, ISO 27001 compliant; supports secure data workflows

- Global reach: 1M+ annotators in 300+ languages and regional dialects

- Proven tools: LiDAR and image annotation powered by the Playment platform

- Strong project management: dedicated PMs and structured delivery across time zones

- End-to-end services: data collection, annotation, RLHF, and post-processing available

Cons of TELUS International

- Primarily a managed service — limited self-serve flexibility

- Slower turnaround on large-scale projects due to QA-heavy processes

- Less visibility into platform capabilities compared to standalone SaaS vendors

- May require large contract minimums; not optimized for small or one-off jobs

- Mixed crowd feedback may affect consistency on long-term projects

Mindy Support

Mindy Support is a managed data annotation provider based in Cyprus, with 2,000+ staff across Ukraine and global offices in Europe, Asia, and the Middle East. The company serves enterprise and Fortune 500 clients with large-scale CV, NLP, and audio annotation, plus LLM training support. All annotation is done on-site by trained staff (no crowd workers).

Pros of Mindy Support

- Dedicated teams: full-time staff trained in ML basics, managed by PMs

- Scales fast: 2,000+ staff enable multi-dozen FTE ramp-up across domains

- 95%+ accuracy backed by triple-check QA for CV, NLP, and LLM projects

- Broad coverage: used in automotive, healthcare, retail, telecom, and agriculture

- Security built in: ISO 27001, GDPR, CCPA, HIPAA, NDA-only, office-based work

Cons of Mindy Support

- Minimum engagement size: ~735 hours/month (~5 FTE); not ideal for small pilots

- No in-house SaaS platform; uses client or third-party tools only

- Needs incremental QA input to avoid quality drift on complex tasks

- Shared focus: annotation is one of several BPO services, so clarify SLAs early

Side-by-Side Comparison of AI Data Labeling Companies in the UK

Use this table to compare key features across top vendors and narrow down your shortlist.

| Vendor | Modalities | QA Model | Pricing | Tooling | Best Fit |
| --- | --- | --- | --- | --- | --- |
| Label Your Data | CV, NLP, Audio, 3D point cloud | Human-reviewed | Per object/hour | Tool-agnostic + API | Hands-on teams, flexible setup, sensitive data |
| CloudFactory | CV-first, some NLP | Gold data + PM-led | Object/hourly | Own or client tools | High-volume vision tasks |
| Aya Data | CV, NLP, Geo, Audio | Domain-trained QA | Custom | Client-led | Industry-specific data needs |
| DataEQ | Sentiment, CX text | AI + human filter | Subscription | EQ Engine platform | Real-time feedback analysis |
| Wolfestone | Multilingual NLP | Native + reviewer | Hourly/item | No platform | Regulated, multilingual tasks |
| DataBee | Forms, docs, tables | Manual + feedback | Custom | Uses your tools | Document-heavy pipelines |
| AttaLab | CV, NLP, Audio | Crowd consensus | Per task | Crowd platform | Budget/high-speed annotation |
| Appen | All modalities | Configurable (platform) | Managed/SaaS | Figure Eight | Enterprise-scale workloads |
| TELUS International | CV, NLP, LiDAR | Structured QA | Managed | Playment + internal | Compliance-heavy CV projects |
| Mindy Support | CV, NLP, LLM, Audio | Triple-check QA | Hourly (min req) | Client tools | Large, secure ML projects |

Use this table to match your data types, project scale, and workflow needs with the right partner. Once you’ve narrowed it down, dig deeper into service fit, QA depth, and team responsiveness before signing a contract.

Still weighing in-house vs. outsourced data annotation? Read the In-House Data Labeling Guide to compare the tradeoffs and choose the right setup for your team.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance with a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, even your custom tools

- Work with a certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

How do I measure annotation quality before signing a contract?

Run a paid or free pilot (for example, with Label Your Data) that mirrors production data. Ask for the vendor’s accuracy metrics, QA workflow, and sample error reports. Compare performance across at least two vendors on the same machine learning dataset.
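A pilot comparison like this can be scored with a few lines of code. The sketch below assumes you hold a small gold-standard set labeled in-house and that each vendor returns one label per item in the same order; the class names and vendor results are made up for illustration.

```python
from collections import Counter


def accuracy(gold: list, predicted: list) -> float:
    """Share of items where the vendor label matches the gold label."""
    if not gold or len(gold) != len(predicted):
        raise ValueError("gold and predicted must be non-empty and equal in length")
    return sum(g == p for g, p in zip(gold, predicted)) / len(gold)


def error_report(gold: list, predicted: list) -> Counter:
    """Count each (gold, predicted) pair where the vendor got it wrong."""
    return Counter((g, p) for g, p in zip(gold, predicted) if g != p)


# Made-up pilot results on the same ten items.
gold = ["car", "car", "truck", "bus", "car", "truck", "bus", "car", "truck", "car"]
vendor_a = ["car", "car", "truck", "bus", "car", "car", "bus", "car", "truck", "car"]
vendor_b = ["car", "truck", "truck", "bus", "car", "car", "bus", "car", "bus", "car"]

for name, labels in [("Vendor A", vendor_a), ("Vendor B", vendor_b)]:
    print(name, accuracy(gold, labels), dict(error_report(gold, labels)))
```

The error report matters as much as the headline accuracy: it shows which classes a vendor confuses, which tells you whether the guidelines or the annotators need work.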

Which security credentials matter for sensitive projects?

Look for ISO 27001 for information security, SOC 2 for process controls, and GDPR/CCPA alignment for personal data. In healthcare, add HIPAA and NHS DSP Toolkit; in finance, add PCI DSS or FCA-ready policies.

Which vendors offer hybrid annotation teams to balance cost-efficiency with domain expertise?

Vendors like CloudFactory and TELUS International combine global labeling teams with UK-based QA and PM oversight. This setup helps control costs while keeping specialized quality checks close to home. It's especially useful for legal, medical, or regulatory work that demands precise terminology or compliance.

How quickly can a vendor scale if my data spikes?

Ask for past examples of team ramp-ups, maximum daily throughput, and time needed to double capacity. Confirm that tooling, QA, and PM staff scale alongside annotators to avoid bottlenecks.
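Once a vendor gives you those numbers, a rough back-of-the-envelope simulation shows how long a spike would take to clear. This sketch assumes capacity doubles once, in a single step, after the stated ramp-up period; real ramp-ups are usually gradual, and all figures below are invented.

```python
def days_to_clear(backlog: int, daily_throughput: int, days_to_double: int) -> int:
    """Days needed to label `backlog` items if capacity doubles once after `days_to_double` days."""
    if daily_throughput <= 0:
        raise ValueError("daily_throughput must be positive")
    remaining = backlog
    day = 0
    while remaining > 0:
        # Capacity is the base rate until the ramp-up completes, then twice that.
        capacity = daily_throughput * (2 if day >= days_to_double else 1)
        remaining -= capacity
        day += 1
    return day


# Invented example: a 100,000-item spike, 5,000 items/day, 10 days to double.
print(days_to_clear(100_000, 5_000, days_to_double=10))
# Same spike if the vendor cannot scale within the window.
print(days_to_clear(100_000, 5_000, days_to_double=10_000))
```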

Which integration features save the most engineering time?

Priority items: REST or GraphQL API, webhooks for status updates, versioned exports in JSON/COCO/CSV, and single sign-on for access control. Tool-agnostic vendors, like Label Your Data, should work in your stack or support common open-source tools without extra fees.
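Whatever export format you agree on, a small validation step on your side catches broken deliveries early. The sketch below checks a COCO-style export, where each bounding box is `[x, y, width, height]`; the function name and the sample data are hypothetical, and a real pipeline would likely check more fields.

```python
def validate_coco(coco: dict) -> list:
    """Return a list of human-readable problems found in a COCO-style export."""
    problems = [f"missing top-level key: {key}"
                for key in ("images", "annotations", "categories") if key not in coco]
    if problems:
        return problems

    image_ids = {image["id"] for image in coco["images"]}
    category_ids = {category["id"] for category in coco["categories"]}

    for ann in coco["annotations"]:
        ann_id = ann.get("id")
        if ann.get("image_id") not in image_ids:
            problems.append(f"annotation {ann_id}: unknown image_id")
        if ann.get("category_id") not in category_ids:
            problems.append(f"annotation {ann_id}: unknown category_id")
        bbox = ann.get("bbox")
        # COCO boxes are [x, y, width, height]; width and height must be positive.
        if bbox is not None and (len(bbox) != 4 or bbox[2] <= 0 or bbox[3] <= 0):
            problems.append(f"annotation {ann_id}: invalid bbox {bbox}")
    return problems


# Hypothetical export with one valid and one broken annotation.
sample_export = {
    "images": [{"id": 1, "file_name": "frame_0001.jpg", "width": 640, "height": 480}],
    "categories": [{"id": 1, "name": "car"}],
    "annotations": [
        {"id": 1, "image_id": 1, "category_id": 1, "bbox": [10, 20, 100, 50]},
        {"id": 2, "image_id": 99, "category_id": 1, "bbox": [5, 5, 0, 40]},
    ],
}

for problem in validate_coco(sample_export):
    print(problem)
```

Running a check like this on every versioned export, ideally triggered by the vendor's status webhook, keeps malformed batches out of training.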

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.