Scale AI Competitors: Best Options for ML Teams

Table of Contents

- TL;DR

- Why ML Teams Are Exploring Companies like Scale AI

- Top 10 Scale AI Competitors for Data Labeling

- Label Your Data

- SuperAnnotate

- Labelbox

- iMerit

- V7 Labs (Darwin)

- Encord

- Appen

- Sama

- Kili Technology

- CloudFactory

- Open-Source Scale AI Competitors

- How to Decide Between Companies like Scale AI for Your ML Project

- About Label Your Data

- FAQ

TL;DR

- Label Your Data is the best Scale AI competitor, offering both managed services and a self-serve platform, with top ratings (5.0 Clutch, 4.9 G2) and a free pilot.

- Other top competitors include SuperAnnotate, Labelbox, iMerit, V7 Labs, Encord, Appen, Sama, Kili Technology, and CloudFactory.

- Open-source alternatives like CVAT and Label Studio suit teams with in-house engineering resources for custom workflows.

- Since Meta’s partial acquisition of Scale AI, many ML teams have been diversifying vendors to improve data control and compliance and to reduce dependency on a single provider.

- Comparing vendors on quality, speed, pricing, and support, ideally via a small pilot, can help identify the best fit for your ML project.

Why ML Teams Are Exploring Companies like Scale AI

Meta’s partial acquisition of Scale AI in 2025 prompted many ML teams to revisit their data labeling strategies. A closer tie to a major model developer made some organizations reconsider vendor neutrality, long-term alignment, and data handling policies. For a deeper breakdown of its strengths and drawbacks, see our detailed Scale AI review.

Many tech teams adopt multi-provider setups in areas like cloud infrastructure and ecosystem partnerships to reduce risk and increase flexibility. It’s logical that ML teams looking for companies like Scale AI follow a similar path. They need uptime, specialized tooling, and vendor neutrality.

The reasons are practical. Engineering leads want faster iteration cycles, support for specialized data types, and tools that fit into their MLOps pipelines without friction. They also look for predictable pricing and the ability to handle sensitive ML datasets under strict compliance frameworks.

These needs are pushing more teams to evaluate Scale AI competitors that can match their technical requirements while reducing operational risks.

Top 10 Scale AI Competitors for Data Labeling

These are the leading options for ML teams evaluating Scale AI competitors in 2025. Each offers data annotation capabilities with varying strengths in quality control, automation, and domain expertise.

This Scale AI competitors list is based on current market presence, supported data types, and ability to handle large-scale, complex ML model training projects.

Label Your Data

Label Your Data is often recognized as the best Scale AI competitor for ML teams that need secure, high-quality, and domain-specific annotation without sacrificing turnaround speed.

The company offers both fully managed data annotation services and a self-serve data annotation platform, giving teams the flexibility to outsource complex projects or run them in-house. They work across text, image, video, audio, and 3D point cloud datasets, with a strong focus on security and iterative QA to refine edge cases.

Core capabilities:

- Handles multimodal projects, including LiDAR, DICOM, and OCR-heavy workflows

- Iterative review cycles with measurable accuracy targets for each phase

- Flexible setup: works inside client tools or provides a managed environment

- Transparent data annotation pricing with per-task and hourly options

Label Your Data’s reputation as the best data annotation company is supported by its consistent top ratings: 5.0 on Clutch (26 reviews) and 4.9 on G2 (15 reviews).

Best fit: ML teams that need a reliable data annotation vendor that can help them deploy their model faster by providing high-quality training data. You get to keep full control of your data pipeline through either managed services or a secure, feature-rich platform.

Things to consider: While the platform is built for computer vision, NLP and audio projects are delivered through managed services, not as a self-serve option.

“If a client project requires fast, high-accuracy annotation, perhaps tens of thousands of domain-specific inputs across industries like energy, manufacturing or healthcare, Label Your Data is the most suitable. Their team manages the human-in-the-loop process that would otherwise bottleneck our internal workflows. It's not about outsourcing; it's about scaling smartly, especially when timelines are short and data is challenging.”

SuperAnnotate

SuperAnnotate is one of Scale AI’s main competitors, offering a platform that combines AI-assisted labeling and manual workflows across image, video, text, audio, and 3D datasets. The vendor excels at managing complex annotation pipelines while providing full transparency and control.

Core capabilities:

- Full multimodal support: image, video, text, audio, geospatial, and 3D data

- Customizable annotation tools: drag-and-drop workflows, custom UIs, versioning, and AI-assisted pre-labeling

- Strong QA and project management: real‑time dashboards, role-based controls, and review queues

- Enterprise-grade compliance: ISO 27001, SOC 2, GDPR, HIPAA; options for cloud or on-prem deployment

- G2 rating: 4.9 (based on 160+ reviews)

Best fit: Enterprise ML teams that need customizable workflows, strong annotation control, and a highly rated system with proven scalability and support.

Things to consider: Platform flexibility is a strength, but setup can be more involved for smaller teams without in-house MLOps expertise.

Labelbox

Labelbox offers a unified platform that combines annotation tools and managed services for high-quality training data. It supports images, video, text, geospatial data, audio, and medical imagery, making it suitable for diverse ML workflows.

Core capabilities:

- Tools for object detection, segmentation, text/audio classification, PDFs, and medical images

- Model-assisted labeling with human-in-the-loop review to speed repetitive tasks

- Custom workflows and ontology management via an intuitive editor and UI configuration

- Project management with role-based access, review queues, and performance tracking

- Integrations with cloud storage, Python SDK, and APIs for MLOps

On G2, Labelbox holds a 4.5 rating (based on 100+ reviews), with users highlighting its clean interface, powerful automation, and scalability for enterprise workloads.

Best fit: ML teams that need a flexible, scalable annotation solution with built-in automation, collaborative workflows, and integration options for complex computer vision and RLHF projects.

Things to consider: While automation speeds up work, model-assisted labeling may require careful tuning to avoid error propagation.
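One common way to limit error propagation is to auto-accept only high-confidence pre-labels and route everything else to human review. The sketch below is generic Python, not Labelbox's API: the `PreLabel` type, the `route_prelabels` helper, and the 0.9 threshold are illustrative assumptions you would tune on your own data.

```python
from dataclasses import dataclass


@dataclass
class PreLabel:
    """A model-generated label awaiting acceptance (hypothetical structure)."""
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_prelabels(prelabels, threshold=0.9):
    """Split pre-labels into an auto-accept queue and a human-review queue."""
    accepted, needs_review = [], []
    for p in prelabels:
        # Anything below the threshold goes to a person instead of the dataset.
        if p.confidence >= threshold:
            accepted.append(p)
        else:
            needs_review.append(p)
    return accepted, needs_review


if __name__ == "__main__":
    batch = [
        PreLabel("img_001", "car", 0.97),
        PreLabel("img_002", "truck", 0.62),
        PreLabel("img_003", "car", 0.91),
    ]
    accepted, needs_review = route_prelabels(batch)
    print([p.item_id for p in accepted])      # ['img_001', 'img_003']
    print([p.item_id for p in needs_review])  # ['img_002']
```

Spot-checking a sample of the auto-accepted queue as well helps confirm that the threshold is not letting systematic model errors through.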

“After switching to Labelbox, we achieved 40% reduction in labeling cycle times and increased model performance. What makes Labelbox unique is its deep expertise in human-in-the-loop… It’s more than a tool; it’s your partner from idea to execution.”

iMerit

iMerit is a trusted provider offering both annotation services and its Ango Hub platform. It’s designed for secure, high-quality labeling across computer vision, NLP, and sensor fusion projects. They support industries like autonomous vehicles, healthcare, and finance, with strong domain expertise.

Core capabilities:

- Human-in-the-loop annotation via full-time experts in secure SOC 2 facilities

- Supports image, video, LiDAR, text, document, and audio workflows

- Ango Hub platform includes workflow automation, analytics, API integration, and RLHF tooling

- Industry-grade deployment: high security, scalability, and quality assurance

- A G2 review highlights easy import of large media files and reliable video performance, even at 4K resolution

Best fit: ML teams seeking domain-tailored annotation services with end-to-end platform support, especially in regulated or high-tech fields needing expert-driven and scalable workflows.

Things to consider: Service quality is high for domain-heavy projects, but turnaround speed may be slower for teams needing rapid iteration cycles.

V7 Labs (Darwin)

The V7 Darwin platform is a specialist data annotation tool designed for fast, accurate labeling of image, video, and medical datasets. It’s built to scale complex computer vision workflows with AI-driven automation and robust review pipelines.

Core capabilities:

- AI-assisted annotation (Auto-Annotate, SAM) for faster, pixel-accurate labeling

- Support for medical imaging formats like DICOM, NIfTI, and WSI, with tools for MPR, 3D rendering, and crosshairs

- Multi-stage review workflows with conditional logic, consensus, and reviewer assignments

- Collaboration and dataset management with tagging, custom views, and real-time team coordination

- Compliance-ready platform audited for SOC 2 and HIPAA

On G2, Darwin scores 4.8 (50+ reviews), praised for precision tools and scalable workflows.

Best fit: Teams in regulated industries or working with complex visual data, like healthcare and autonomous systems, who need automation, precision, and secure workflows.

Things to consider: The platform’s feature depth is an advantage, but it can require training for teams new to medical or scientific annotation standards.

Encord

Encord is a robust platform built for high-fidelity, multimodal annotation. It serves teams working across vision, medical, document, and text workflows, offering AI-assisted tools and deep customization.

Core capabilities:

- Supports image, video, text, audio, DICOM, geospatial and complex document annotations

- AI-assisted labeling (SAM 2, GPT‑4o, Whisper) with customizable human‑in‑the‑loop ML workflows for speed and accuracy

- Nested ontologies and granular workflow control with review stages, access roles, and analytics dashboards

- SOC 2, HIPAA, and GDPR-compliant with APIs and MLOps integrations for secure pipeline embedding

- G2 rating: 4.8 (60+ reviews), praised for its annotation detail, interface, and speed of adoption

Best fit: Multi-domain AI teams that need scalable tooling, precise annotation workflows with AI support, and high security for regulated datasets.

Things to consider: Feature set is extensive, but smaller teams may find the learning curve steeper without dedicated onboarding.

Appen

Appen is a long-established provider of data annotation and data collection services, with a global crowd and AI-assisted workflows for large-scale ML projects. It covers text, image, video, audio, and 3D datasets across multiple industries.

Core capabilities:

- Managed annotation services for computer vision, NLP, speech, and search relevance

- Access to a global workforce for large-volume, multilingual projects

- AI-assisted tools to speed labeling and maintain quality across distributed teams

- Secure workflows with GDPR, ISO 27001, and SOC 2 compliance

- G2 rating: 4.2 (300+ reviews), valued for scalability and industry coverage

Best fit: Enterprises and large ML teams that need to process high annotation volumes quickly, with access to multilingual resources and global workforce scalability.

Things to consider: Quality control can vary on high-volume projects if not closely managed, so teams should plan strong QA processes from the start.
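One way to make QA on a distributed workforce measurable is to have two annotators label the same sample and track their agreement. The sketch below computes Cohen's kappa in plain Python; it is a generic illustration rather than any vendor's QA method, and the label lists are made-up data.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators match.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement by chance, from each annotator's class frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical class throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    annotator_1 = ["cat", "cat", "dog", "dog"]
    annotator_2 = ["cat", "dog", "dog", "dog"]
    print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

A kappa that drops as volume ramps up is an early signal to tighten guidelines or add review stages before the errors reach training data.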

Sama

Sama (formerly Samasource) is a service-first data annotation provider known for its ethical, mission-driven model and emphasis on quality and social impact. It supports image, video, and 3D point cloud labeling, with adoption across sectors like automotive, retail, and manufacturing.

Core capabilities:

- Human-in-the-loop annotation featuring robust QA, with accuracy reaching up to 99.5%

- Specializes in computer vision tasks, including object tracking and segmentation

- Social enterprise model with B Corp certification and ISO 9001/ISO 27001 standards

- 24/7 customer support and self-service tools for predictive pre-labeling workflows

- 4.5 rating on G2, with reviews noting annotation accuracy and speed (but some teams mention a learning curve and limited language support)

Best fit: ML teams that value ethical sourcing of annotation labor, strong QA workflows, and secure, impactful operations, particularly when social impact aligns with project goals.

Things to consider: Sama’s model is service-focused, and while accuracy is high, teams should account for operational complexity and ensure available language and onboarding support match their needs.

Kili Technology

Kili Technology is a flexible platform for labeling diverse data types, including images, videos, text, PDFs, satellite imagery, and conversational datasets. It supports both platform tooling and managed workflows, focusing on collaboration and quality data.

Core capabilities:

- Annotate across image, video, text, PDFs, satellite imagery, and speech data

- Interactive segmentation with state-of-the-art models offering >90% IoU with minimal clicks

- Collaborative review tools with feedback loops and issue tracking

- APIs and SDKs to support MLOps integration and quick iteration

- 4.6 on G2, highlighting the collaborative interface and versatility across data types
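For readers unfamiliar with the IoU figure quoted above: intersection over union measures how much a predicted mask overlaps the ground-truth mask, from 0 (no overlap) to 1 (identical). Below is a minimal sketch in plain Python, assuming masks are given as equally sized 2D lists of 0/1; the `mask_iou` helper and sample masks are illustrative, not part of Kili's tooling.

```python
def mask_iou(pred, truth):
    """IoU of two binary masks given as equally sized 2D lists of 0/1."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1  # pixel is in both masks
            if p or t:
                union += 1  # pixel is in at least one mask
    # Two empty masks are treated as a perfect match.
    return intersection / union if union else 1.0


if __name__ == "__main__":
    predicted = [[1, 1, 0],
                 [1, 1, 0]]
    ground_truth = [[0, 1, 1],
                    [0, 1, 1]]
    print(round(mask_iou(predicted, ground_truth), 3))  # 0.333
```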

Best fit: ML teams needing multimodal annotation flexibility, strong human-in-the-loop tooling, and lightweight integration with existing pipelines.

Things to consider: Interactive segmentation works well for images, but advanced workflows, like OCR or document annotation, may need additional configuration or managed support.

CloudFactory

CloudFactory is a service-focused provider combining human-in-the-loop annotation with global workforce scalability. It specializes in image, video, text, and audio labeling, often for clients in autonomous systems, healthcare, and e-commerce.

Core capabilities:

- Managed data annotation for computer vision, NLP, and audio projects

- Distributed workforce model with team training and QA oversight

- Flexible engagement models for long-term or short-term projects

- ISO 27001–certified operations with GDPR compliance

- 4.4 on G2 with 40+ reviews noting reliability, communication, and ability to scale

Best fit: ML teams looking for trained, scalable annotation teams without investing in internal staffing or infrastructure.

Things to consider: As a service-first provider, CloudFactory may not be ideal for teams seeking a self-serve platform or highly automated pipelines.

Open-Source Scale AI Competitors

For ML teams with strong in-house engineering resources, open-source annotation tools can be a cost-effective and highly customizable alternative to commercial platforms. Two widely adopted options are CVAT and Label Studio.

CVAT

CVAT (Computer Vision Annotation Tool) is an open-source labeling platform originally developed by Intel. It supports a wide range of computer vision tasks, including object detection, segmentation, and tracking, with both manual and semi-automated tools.

Core capabilities:

- Supports image and video annotation with polygons, bounding boxes, polylines, and points

- Automatic annotation with pre-trained models via integrated plugins

- Role-based access control for collaborative projects

- Export formats compatible with popular ML frameworks (YOLO, COCO, Pascal VOC)
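The export formats differ mainly in how they encode bounding boxes. As a small illustration, COCO stores a box as `[x_min, y_min, width, height]` in pixels, while YOLO label files use a center point plus width and height, all normalized by image size. CVAT handles these exports itself; the `coco_to_yolo` helper below is only a sketch of what the conversion involves.

```python
def coco_to_yolo(bbox, img_width, img_height):
    """Convert a COCO box [x_min, y_min, w, h] in pixels to a YOLO box
    (x_center, y_center, w, h) normalized to the 0..1 range."""
    x_min, y_min, w, h = bbox
    return (
        (x_min + w / 2) / img_width,   # normalized box center, x
        (y_min + h / 2) / img_height,  # normalized box center, y
        w / img_width,                 # normalized width
        h / img_height,                # normalized height
    )


if __name__ == "__main__":
    # A 200x100 px box whose top-left corner is at (100, 50) in a 400x200 image.
    print(coco_to_yolo([100, 50, 200, 100], 400, 200))  # (0.5, 0.5, 0.5, 0.5)
```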

Best fit: Engineering-heavy ML teams that want full control over annotation workflows and can self-host securely.

Things to consider: CVAT requires setup, hosting, and ongoing maintenance, so it is best suited for teams with DevOps capacity.

Label Studio

Label Studio is a flexible open-source platform for multi-type data annotation, covering text, image, audio, video, and time-series labeling. Its strength lies in customization through its labeling config system.

Core capabilities:

- Configurable templates for classification, object detection, NER, transcription, and more

- Supports active learning pipelines through Python SDK and API

- Integrates with cloud storage and ML pipelines for continuous labeling

- Plugin architecture for extending tool capabilities
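The core of an active learning loop is choosing which unlabeled items to send to annotators next. The sketch below shows entropy-based uncertainty sampling in plain Python. It deliberately avoids Label Studio SDK calls, since how you fetch predictions and create tasks depends on your setup; the `select_for_labeling` helper and the sample probabilities are illustrative assumptions.

```python
import math


def entropy(probs):
    """Shannon entropy of a class-probability list (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, k):
    """Return the k item ids whose model predictions are most uncertain.

    predictions: dict mapping item id -> list of class probabilities.
    """
    ranked = sorted(predictions, key=lambda i: entropy(predictions[i]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    model_output = {
        "doc_a": [0.50, 0.50],  # model is undecided
        "doc_b": [0.90, 0.10],
        "doc_c": [0.99, 0.01],  # model is confident
    }
    print(select_for_labeling(model_output, 2))  # ['doc_a', 'doc_b']
```

The selected ids would then be pushed to the labeling tool as the next batch, and the model retrained once those labels come back.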

Best fit: Teams looking for a single open-source tool that can handle both NLP and computer vision tasks.

Things to consider: While highly adaptable, initial configuration can be complex, and performance may require tuning for large datasets.

“One manufacturing client switched from Scale AI to Label Studio Enterprise after their annotation costs hit $15K monthly for quality control image classification. We implemented Label Studio's on-premise deployment… cutting labeling costs by 60% and improving final model accuracy from 87% to 96%.”

How to Decide Between Companies like Scale AI for Your ML Project

The right data annotation company for you depends on your workflow, data type, and capacity to manage labeling in-house.

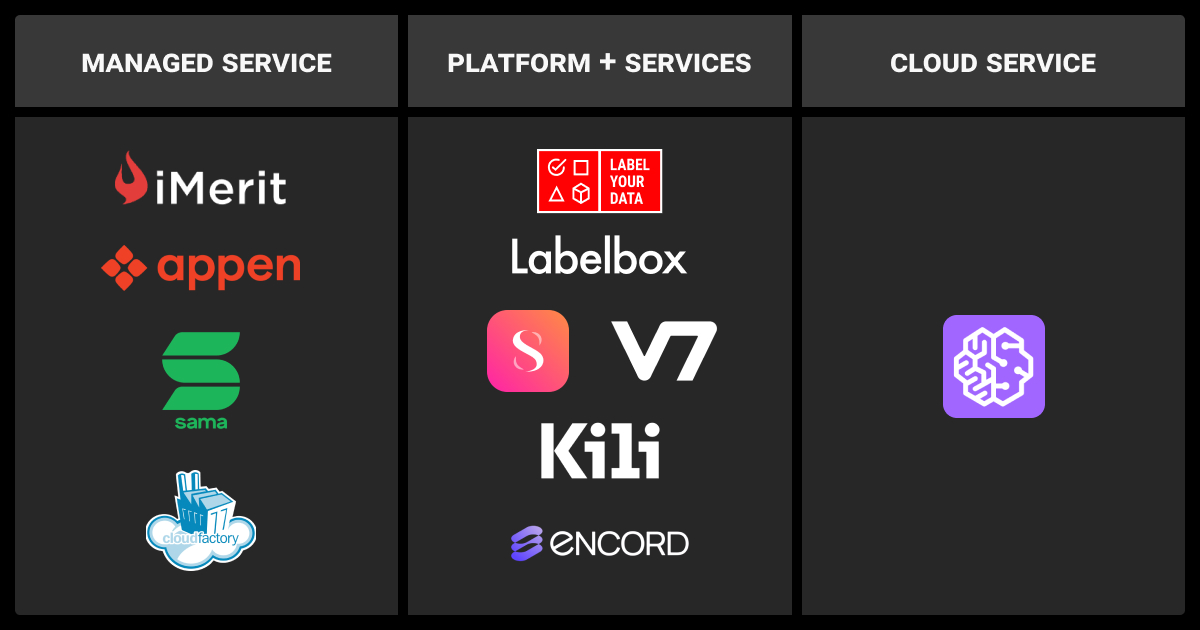

Service vs. platform

- Services (iMerit, Sama, CloudFactory): fully managed, less tooling control

- Platforms (Labelbox, V7 Labs, Encord): more flexibility, more in-house effort

- Hybrid (Label Your Data, SuperAnnotate): switch between managed and self-serve

Data and complexity

- Match vendors to your formats (e.g., LiDAR, DICOM, OCR)

- Open-source (CVAT, Label Studio) works if you can handle setup and upkeep

Scalability and iteration

- Fast iteration needs strong QA cycles and quick feedback

- Stable datasets benefit from scale and cost control

Tip: Run the same small pilot with multiple vendors to compare accuracy, speed, and communication. We at Label Your Data offer this pilot for free, making it easier to benchmark without extra cost.
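To keep a multi-vendor pilot comparable, score every vendor against the same gold-labeled sample. The sketch below is one minimal way to do that, assuming you hold a small set of trusted labels and record how long each vendor took; the `score_pilot` helper, the vendor names, and the numbers are hypothetical.

```python
def score_pilot(gold, vendor_labels, hours_taken):
    """Score one vendor's pilot against a gold set.

    gold, vendor_labels: dicts mapping item id -> label.
    Items the vendor did not return count as wrong for accuracy.
    """
    if not gold or hours_taken <= 0:
        raise ValueError("need a non-empty gold set and positive hours")
    returned = [item for item in gold if item in vendor_labels]
    correct = sum(vendor_labels[item] == gold[item] for item in returned)
    return {
        "accuracy": correct / len(gold),
        "coverage": len(returned) / len(gold),
        "items_per_hour": len(returned) / hours_taken,
    }


if __name__ == "__main__":
    gold = {"1": "cat", "2": "dog", "3": "cat", "4": "dog"}
    vendor_a = {"1": "cat", "2": "dog", "3": "cat", "4": "cat"}
    print(score_pilot(gold, vendor_a, hours_taken=2))
    # {'accuracy': 0.75, 'coverage': 1.0, 'items_per_hour': 2.0}
```

Communication quality does not reduce to a number, so it is worth logging response times and the clarity of edge-case questions alongside these metrics.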

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance with a free trial

- Pay per labeled object or per annotation hour

- Work with any annotation tool, including your custom tools

- Work with a security-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

What happened with Scale AI?

In 2025, Meta acquired a 49% stake in Scale AI, leading some enterprise and government clients to explore alternative vendors due to data governance concerns and shifts in market competition.

Who is Scale AI’s rival?

Rivals vary by niche. In managed services, Label Your Data and iMerit compete directly, offering fully managed data annotation with strict security and high accuracy for enterprise ML projects. Label Your Data also provides a self-serve platform, making it competitive in the hybrid tooling space. In platform tooling, Labelbox and V7 Labs are major players, while open-source solutions like CVAT and Label Studio attract teams with in-house engineering resources.

What are the best alternatives to Scale AI?

Top alternatives to Scale AI include Label Your Data (managed services for all data types, plus a self-serve platform), Labelbox (AI-assisted platform), Appen (large-scale managed services), and SuperAnnotate (computer vision with human-in-the-loop). Other strong options are Label Studio (open-source workflows), Supervisely (vision platform with training and deployment), CVAT (video and image labeling), Dataloop (data pipelines with annotation), and Prodigy AI (scriptable NLP tool).

Who are Scale AI competitors for data annotation and data labeling?

Competitors include Label Your Data, SuperAnnotate, Labelbox, iMerit, V7 Labs, Encord, Appen, Sama, Kili Technology, and CloudFactory. Open-source options like CVAT and Label Studio are also widely used.

Which Scale AI competitor offers a free pilot or trial?

Several vendors provide trial options, but Label Your Data stands out with a free pilot that lets you label a sample of your data before committing, risk-free. This applies to both managed services and the platform, where users can test the workflow with a no-cost trial for 10 images or frames. Running the same pilot with multiple vendors can give you a clear comparison before scaling up.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.