Sites Like MTurk: Top Alternatives for Data Labeling


TL;DR

- MTurk often lacks the quality control, domain expertise, and security that AI/ML teams need.

- Better alternatives provide expert validation, automation, and compliance.

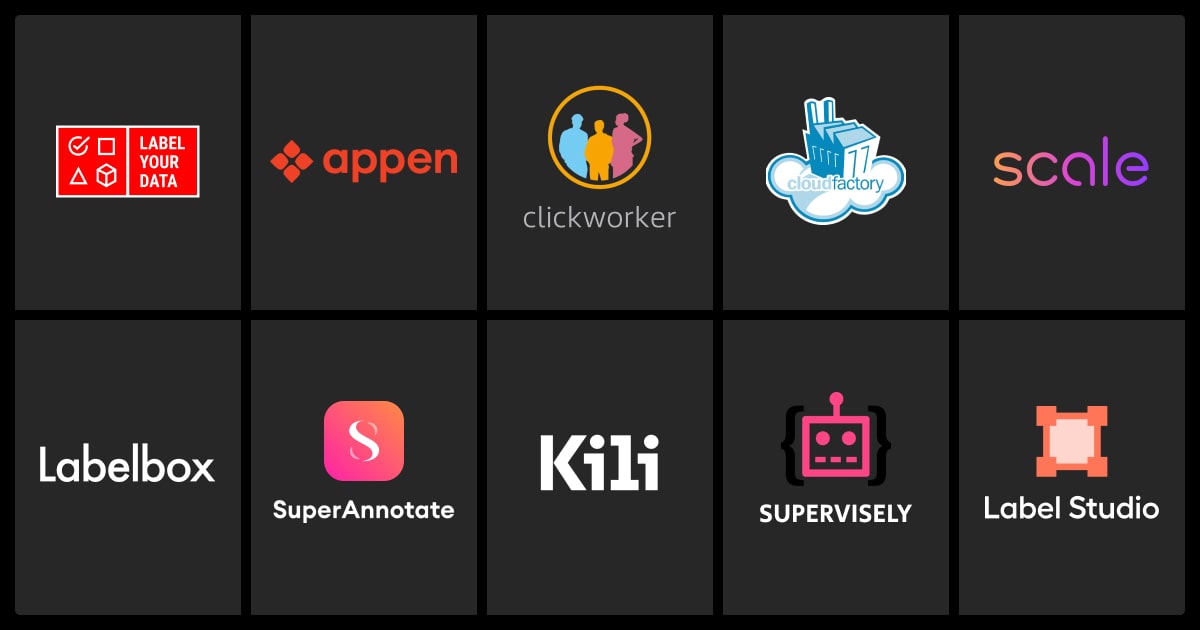

- Top options include Label Your Data, Appen, Clickworker, CloudFactory, Scale AI, Labelbox, SuperAnnotate, Kili Technology, Supervisely, and Label Studio.

- Key factors are quality, scalability, security, automation, and ML integration.

- Managed teams deliver higher accuracy, while crowdsourcing scales faster but needs extra validation.

Why AI Teams Seek Better Alternatives to MTurk

If you’re using MTurk for data annotation and hitting roadblocks, you’re not alone.

AI/ML teams often need higher quality and security than a general crowdsourcing platform can offer. Inconsistent annotations and compliance risks can slow your projects down.

Here’s a quick look at the best MTurk alternatives:

| Company | Key Features |
|---|---|
| Label Your Data | Expert in-house team for custom, high-quality annotations and a computer vision platform with API and collaboration features. |
| Appen | Provides a large workforce for various data types, with customizable workflows for big projects. |
| Clickworker | Offers scalable, AI-powered micro-task annotation, ideal for cost-effective solutions. |
| CloudFactory | Delivers managed teams for high-accuracy labeling, perfect for continuous enterprise needs. |
| Scale AI (via Remotasks) | Specializes in AI-assisted annotation with human oversight, great for advanced projects. |
| Labelbox | An end-to-end platform with automation and collaboration tools for ML pipelines. |
| SuperAnnotate | Feature-rich for images, videos, and text, with AI-assisted labeling and dataset management. |
| Kili Technology | Optimizes workflows with AI and active learning, focusing on efficiency. |
| Supervisely | Cloud-based, with collaborative features and ML tools for visualization. |
| Label Studio (by HumanSignal) | Open-source, customizable, and supports active learning for flexible needs. |

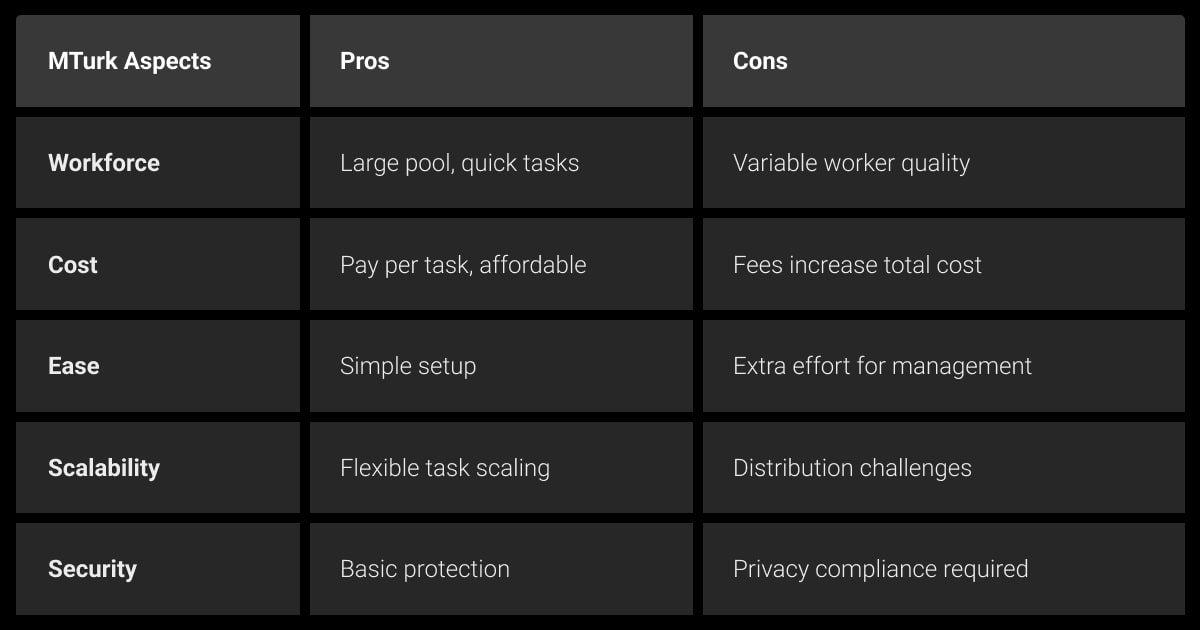

Challenges with MTurk

MTurk, while accessible, has notable drawbacks for labeling raw, unlabeled data:

- Quality Issues: Workers vary in skill, producing inconsistent labels that harm model accuracy.

- Expertise Gaps: Many workers lack domain-specific knowledge, which risks mislabeled data.

- Security Risks: Open access increases the chance of sensitive data breaches.

- Integration Limits: It often doesn't fit well with ML workflows, adding complexity.

MTurk offers little built-in AI-assisted labeling or automation, which makes it inefficient for modern ML workflows. The result is slower turnaround and lower-quality data than AI-driven alternatives built for advanced tasks deliver.

These challenges push AI teams toward MTurk alternatives: expert data annotation services and platforms that offer better quality, security, and workflow integration.

We’ve checked out the options and put together this simple guide. Let’s take a look.

Label Your Data

As a data annotation company, we focus on custom solutions. Our industry certifications make us well suited to sensitive projects, and user reviews highlight our flexibility and transparency.

- Labeling Quality: We implement rigorous QA processes tailored to each project.

- Cost: Check our data annotation pricing options (on-demand, long-term, short-term).

- Scalability: The team scales with project size, serving both small and large-scale projects.

- Turnaround Time: Flexible schedules for timely delivery, though specific times depend on project complexity.

- Ease of Use: Good communication and project management, as per client testimonials.

- Support and Communication: Responsive support with dedicated attention to client success.

- Data Security and Privacy: ISO/IEC 27001:2013- and GDPR-compliant.

- Integration with Other Tools: We work with all data formats and labeling tools and offer API integration through our data annotation platform.

- Flexibility: High adaptability to various data types, tasks, and tools.

- Reputation and Reviews: Positive feedback on G2 (4.9), Clutch (5.0), and LinkedIn.

With a focus on security certifications, we can support projects that require strict data protection, such as healthcare, financial data, geospatial annotation, or MilTech. The first batch is free, along with other perks highlighted in our Label Your Data company review.

Appen

Established in 1996, it offers a diverse crowd across 130 countries, but some users note server downtime and low contributor pay, which could affect quality.

- Labeling Quality: Quality can vary across more than 1M contributors, and some users report server issues affecting output.

- Cost: Higher due to enterprise focus, with custom quotes and Payoneer payments.

- Scalability: Highly scalable with a large workforce, ideal for massive projects.

- Turnaround Time: Efficient for large-scale tasks, though specific times aren’t detailed.

- Ease of Use: Their platform, ADAP, is user-friendly, but some users mention invoicing challenges.

- Support and Communication: Good for enterprises, but mixed reviews suggest variability.

- Data Security and Privacy: Security-compliant, suitable for sensitive data.

- Integration with Other Tools: Comprehensive platform, though public integration details are sparse.

- Flexibility: Supports various data types, from text to video, offering versatility.

- Reputation and Reviews: Mixed, with 4.2/5 on G2, praised for simplicity but criticized for server crashes.

Their long history makes them a veteran, but recent user feedback suggests potential reliability concerns worth investigating. More in our Appen company review.

"MTurk fails to match complex tasks with skilled workers, leading to wasted time and errors. Platforms like Appen and Scale AI use AI-powered skill matching, reducing error rates by 40% in data labeling projects."

Clickworker

With a crowd of over 6 million workers, it’s cost-effective for micro-tasks. Quality control may require additional effort, though users praise its mobile and PC support.

- Labeling Quality: Quality varies by worker, though built-in QA processes help maintain reliability.

- Cost: Clients set pay, offering cost flexibility, with a free trial for testing.

- Scalability: Highly scalable with a large crowd, perfect for micro-tasks.

- Turnaround Time: Dependent on task complexity and worker availability, typically fast for crowdsourced tasks.

- Ease of Use: Accessible, user-friendly platform, praised for its mobile and PC support.

- Support and Communication: Good support for clients, with clear project setup.

- Data Security and Privacy: GDPR compliant, treating data confidentially.

- Integration with Other Tools: Likely offers APIs for integration, enhancing workflow efficiency.

- Flexibility: Supports tasks like image tagging and sentiment analysis, highly adaptable.

- Reputation and Reviews: 4.0/5 on GetApp, trusted by top tech companies.

Their large workforce is great for scale, but quality control may require extra effort, making it ideal for budget-conscious projects.

CloudFactory

It offers Accelerated Annotation, claiming up to 30x faster labeling with 100% QA, and has worked with clients like Ibotta and Mitsubishi, focusing on accountability over crowdsourcing.

- Labeling Quality: 100% QA and expert annotators for Accelerated Annotation.

- Cost: Custom quotes, likely competitive for managed services, offering value for quality.

- Scalability: Managed teams scale efficiently, ideal for large projects.

- Turnaround Time: Claims up to 30x faster labeling, which suits urgent needs.

- Ease of Use: User-friendly platform with transparency.

- Support and Communication: Expert AI consultants provide strong support, with dedicated project managers.

- Data Security and Privacy: Handles data securely, suitable for sensitive applications.

- Integration with Other Tools: Likely integrates well, given their focus on workflow efficiency.

- Flexibility: Supports various data types, from images to LiDAR, offering versatility.

- Reputation and Reviews: Positive testimonials, with few public reviews.

Their focus on accountability over crowdsourcing is a game-changer for projects needing consistent quality, especially in regulated industries. Find out more about CloudFactory in our review.

Scale AI (via Remotasks)

Known for its hybrid AI-human approach, it faced controversy in 2024 for dropping workers in countries like Kenya without notice, citing security protocols, which may affect user trust.

- Labeling Quality: Combines AI and human oversight, with domain experts ensuring accuracy.

- Cost: Premium pricing with a high minimum spend.

- Scalability: Handles large projects, scaling up or down as needed.

- Turnaround Time: Offers rapid labeling, with options like Scale Rapid for quick results.

- Ease of Use: User-friendly platform, designed for efficiency, with clear workflows.

- Support and Communication: Strong customer support.

- Data Security and Privacy: Handles data securely, suitable for enterprise needs.

- Integration with Other Tools: Integrates with various tools, enhancing ML pipeline efficiency.

- Flexibility: Supports diverse data types, from images to LiDAR, highly adaptable.

- Reputation and Reviews: Strong reputation, with major clients like OpenAI.

Their hybrid AI-human approach is cutting-edge, but the 2024 controversies around worker treatment may affect trust and are worth weighing for projects with strict ethical requirements. For more insights, check this Scale AI review.

MTurk’s biggest drawback is the lack of quality control and worker reliability. With anonymous, low-paid workers, businesses struggle with inconsistent results and poor data. Alternatives like Scale AI offer expert vetting and built-in quality assurance for better accuracy.

Labelbox

Provides a data engine for model evaluation, with AI-assisted features reducing human labeling by up to 80%. Backed by investors like SoftBank and Andreessen Horowitz.

- Labeling Quality: AI-assisted tools and human review ensure high quality, with the Alignerr network providing expert labeling.

- Cost: Custom quotes, competitive pricing for its automation and QA features.

- Scalability: Designed for large projects, with batch labeling and iterative workflows.

- Turnaround Time: AI-assisted labeling reduces manual effort by up to 80%.

- Ease of Use: Intuitive interface with customizable workflows and real-time analytics.

- Support and Communication: Strong customer support with detailed guides.

- Data Security and Privacy: Enterprise-grade security, integrating with cloud storage for safe data handling.

- Integration with Other Tools: Seamlessly connects with Google Cloud, AWS, and Azure.

- Flexibility: Supports multiple data types, including text, video, and image annotation.

- Reputation and Reviews: 4.8/5 on G2, trusted by Fortune 500 companies.

Their iterative labeling approach is highly efficient for agile AI development, though initial setup may require additional effort.

SuperAnnotate

Started as a Ph.D. research project in 2018, it raised $36M in 2024 and offers tools for auto-annotation and tracking. However, it lacks audio transcription support.

- Labeling Quality: AI-assisted annotation combined with human review and project managers.

- Cost: Flexible pricing, including pay-as-you-go options.

- Scalability: Handles large datasets efficiently, with automation features.

- Turnaround Time: AI assistance significantly reduces annotation time, especially for complex tasks like LiDAR and medical imaging.

- Ease of Use: User-friendly interface with advanced workflow management, reducing manual workload for ML teams.

- Support and Communication: Proactive Data Operations team offers strong support.

- Data Security and Privacy: On-premise data storage enhances security, making it a strong choice for regulated industries.

- Integration with Other Tools: Compatible with various data sources and integrates easily into ML pipelines.

- Flexibility: Supports images, videos, text, and more.

- Reputation and Reviews: 4.8/5 on G2, backed by investors like Nvidia.

Their on-premise storage option is a rare advantage for privacy-conscious projects in sectors like finance and healthcare. Check our SuperAnnotate company review for more details.

Kili Technology

Founded in 2018, it raised €5.7 million in 2021, focusing on ML teams with integrations like Amazon S3. Supports RLHF for large language models.

- Labeling Quality: AI-assisted workflows and real-time quality monitoring.

- Cost: Custom pricing, competitive for its AI-powered automation and quality control.

- Scalability: Designed to handle large datasets, with smart workflows that optimize labeling efficiency.

- Turnaround Time: AI-assisted tools minimize manual effort and improve annotation accuracy.

- Ease of Use: Intuitive interface with built-in quality control to prevent tagging errors.

- Support and Communication: Highly rated for fast issue resolution and strong customer support.

- Data Security and Privacy: Strong compliance standards, with integrations like Amazon S3 for secure storage.

- Integration with Other Tools: Seamlessly integrates with APIs and cloud storage.

- Flexibility: Supports multiple data types, including satellite imagery and medical data.

- Reputation and Reviews: Positive user reviews, with notable performance improvements reported by enterprise clients.

Their focus on labeling bias prevention makes them ideal for ethical AI projects, though it may require extra setup time. Read our Kili Technology company review to learn more about the vendor.

Supervisely

Used by BMW Group for manufacturing images, it offers a free Community version and a 30-day Enterprise trial, though some users find the UI complex at first.

- Labeling Quality: AI-assisted tools provide pixel-accurate labeling for complex data.

- Cost: Offers both a free Community version and paid plans.

- Scalability: Handles large datasets, with cloud-based management tools for project scaling.

- Turnaround Time: AI-powered workflows reduce manual labeling time.

- Ease of Use: User-friendly interface, though some users find it slightly complex when managing extensive datasets.

- Support and Communication: Strong documentation and active community support, with enterprise support available for paid plans.

- Data Security and Privacy: Secure handling of data, with HIPAA compliance for medical datasets.

- Integration with Other Tools: Connects with various data sources.

- Flexibility: Supports diverse data types, including images, videos, medical, and geospatial data.

- Reputation and Reviews: 4.7/5 on G2, trusted by Fortune 500 companies, with a strong developer community.

Their free Community version is an excellent resource for startups, but full enterprise features require investment. Balancing cost and functionality is key.

Label Studio (by HumanSignal)

Label Studio, developed by HumanSignal, is a data labeling tool that supports various data types and active learning workflows. Unlike Amazon MTurk, it has been open-source since its GitHub launch.

The platform itself is free and customizable (ideal for researchers), and HumanSignal also provides managed workforce solutions for data annotation.

- Labeling Quality: Quality depends on user expertise, but the open-source community continuously improves its capabilities.

- Cost: Free and open-source, making it a budget-friendly option with no licensing fees.

- Scalability: Can handle large datasets but requires manual setup for enterprise-level scaling.

- Turnaround Time: Performance depends on user management, with potential delays for complex projects.

- Ease of Use: Simple interface, praised for accessibility and flexibility.

- Support and Communication: Community-driven support via forums, with paid enterprise support available for advanced needs.

- Data Security and Privacy: Users manage their own security, requiring additional setup for sensitive data protection.

- Integration with Other Tools: API-driven integration allows flexibility but requires technical expertise.

- Flexibility: Supports multiple data types, making it a strong choice for custom annotation workflows.

- Reputation and Reviews: Highly regarded in open-source ML communities, widely adopted for research and experimentation.

Being free and open-source is a major advantage for cost-sensitive projects, but teams must handle their own security and scalability, making it ideal for tech-savvy teams with time to invest.

How to Choose the Best MTurk Alternatives for Data Annotation

Choosing among these Mechanical Turk alternatives depends on several factors:

Quality Control

Does the platform provide expert validation, built-in quality assurance (QA), and workforce oversight? Look for:

- Human-in-the-loop verification for critical tasks

- AI-assisted annotation with automated error detection

- Consensus-based labeling to improve accuracy
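To make consensus-based labeling concrete, here is a minimal Python sketch, assuming each item is labeled independently by several annotators. It takes a simple majority vote, and any item whose agreement falls below a threshold (0.6 here, an illustrative value rather than a recommendation) is flagged for expert review, which is where human-in-the-loop verification fits in. `ConsensusResult` and `consensus_label` are hypothetical names, not part of any platform's API.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class ConsensusResult:
    label: str | None   # accepted label, or None if the item needs review
    agreement: float    # share of annotators who chose the top label
    needs_review: bool  # True -> send to an expert (human-in-the-loop)


def consensus_label(votes: list[str], min_agreement: float = 0.6) -> ConsensusResult:
    """Majority-vote consensus over the labels several annotators gave one item."""
    if not votes:
        # No annotations at all: nothing to agree on, so escalate.
        return ConsensusResult(label=None, agreement=0.0, needs_review=True)

    # Most frequent label and how many annotators chose it.
    top_label, top_count = Counter(votes).most_common(1)[0]
    agreement = top_count / len(votes)

    # Low agreement (including ties, for any threshold above 0.5) suggests an
    # ambiguous item or unclear guidelines, so the label is not auto-accepted.
    needs_review = agreement < min_agreement
    return ConsensusResult(
        label=None if needs_review else top_label,
        agreement=agreement,
        needs_review=needs_review,
    )


if __name__ == "__main__":
    batch = {
        "img_001": ["cat", "cat", "cat"],   # unanimous -> accepted
        "img_002": ["cat", "dog", "cat"],   # 2 of 3 agree -> accepted at 0.6
        "img_003": ["cat", "dog", "bird"],  # no majority -> expert review
    }
    for item_id, votes in batch.items():
        print(item_id, consensus_label(votes))
```

Majority voting is the simplest consensus rule; in practice, teams may weight votes by each annotator's track record or tune the threshold per task.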

Scalability

Can the platform handle large datasets while maintaining quality? Consider:

- Workforce size and availability for high-volume projects

- Ability to scale without compromising accuracy

- Performance for real-time or continuous data labeling needs

Security & Compliance

Does the provider meet industry data privacy standards like GDPR, HIPAA, or ISO 27001? Key factors include:

- Data encryption for secure storage and transfer

- Access controls to restrict sensitive information

- Compliance certifications for regulated industries

Automation & AI Integration

How well does the platform integrate with your existing ML pipeline? Prioritize:

- API support for seamless data exchange

- Model-assisted annotation to reduce manual effort

- Version control for iterative dataset improvements
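Model-assisted annotation and dataset versioning can be sketched in a few lines of Python. This is a minimal illustration under assumed conditions: a model that outputs one label and a confidence score per item, and labels stored as a simple item-to-label mapping. `Prediction`, `route_prelabels`, and `dataset_fingerprint` are hypothetical names, not any vendor's API; real platforms expose their own SDKs for this.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_prelabels(
    preds: list[Prediction], threshold: float = 0.9
) -> tuple[list[Prediction], list[Prediction]]:
    """Split model pre-labels into a quick-confirm queue and a full manual queue."""
    confirm_queue: list[Prediction] = []  # annotator verifies or corrects the pre-label
    manual_queue: list[Prediction] = []   # annotator labels from scratch
    for p in preds:
        if not 0.0 <= p.confidence <= 1.0:
            raise ValueError(f"confidence out of range for {p.item_id}: {p.confidence}")
        (confirm_queue if p.confidence >= threshold else manual_queue).append(p)
    return confirm_queue, manual_queue


def dataset_fingerprint(labels: dict[str, str]) -> str:
    """Short content hash of an item_id -> label mapping, usable as a version tag."""
    # sort_keys makes the hash independent of insertion order.
    canonical = json.dumps(labels, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


if __name__ == "__main__":
    preds = [
        Prediction("a", "car", 0.95),
        Prediction("b", "truck", 0.40),
        Prediction("c", "car", 0.90),
    ]
    confirm, manual = route_prelabels(preds)
    print("confirm:", [p.item_id for p in confirm])
    print("manual:", [p.item_id for p in manual])
    print("version:", dataset_fingerprint({p.item_id: p.label for p in preds}))
```

Every item still passes through a human; the threshold only decides whether the annotator confirms a draft or starts from scratch, and the 0.9 default would need tuning against held-out data. The fingerprint changes whenever any label changes, so each corrected batch gets a distinct version tag.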

Crowdsourcing vs. Managed Teams

- Crowdsourcing works well for large-scale, simple tasks but requires extra validation.

- Managed teams offer higher accuracy and security, ideal for data-sensitive fields.

- Hybrid approaches combine automation with human validation for efficiency without sacrificing quality.

The right alternatives to MTurk should align with your ML model’s needs, balancing speed, quality, and security.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance with a free trial

- Pay per labeled object or per annotation hour

- Work with any annotation tool, including your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

Are there any sites like MTurk for data annotation?

Yes, several platforms offer better quality control, security, and scalability for AI/ML teams. Top alternatives include Label Your Data, Appen, Clickworker, CloudFactory, Scale AI, Labelbox, SuperAnnotate, Kili Technology, Supervisely, and Label Studio. These options provide managed teams, automation, and compliance with industry standards.

What is similar to MTurk?

Platforms like Clickworker operate on a crowdsourcing model similar to MTurk, allowing businesses to assign annotation tasks to a large workforce. However, managed services like Label Your Data offer higher accuracy, expert validation, and data security, making them better suited for ML training.

What is the same as MTurk?

While no platform is identical, Clickworker is the closest in terms of task-based micro-work. However, AI/ML teams often need higher accuracy, security, and integration capabilities, which managed annotation providers like Label Your Data and AI-powered platforms like Labelbox or Scale AI deliver.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.