Facial Recognition Algorithm: How It Works in ML

TL;DR

- Facial recognition algorithms identify individuals based on their unique facial features.

- Machine learning algorithms, like CNNs, analyze facial data for accurate identification and verification.

- Applications span security, social media, banking, and smart homes, but there are safety and bias concerns.

- Risks include privacy violations, deepfake misuse, and potential bias related to gender and ethnicity.

- High-quality data and secure labeling are essential to ensure reliability and compliance with privacy laws.

Defining Facial Recognition AI Before Diving Into Algorithms

Face recognition using machine learning is one of the most rapidly developing AI technologies and also one of the most controversial. In some places today, people can use their face to authorize food purchases or get into their apartment, while in others the use of facial recognition technology is banned altogether. How can facial recognition technology be both beneficial and harmful? We’ll try to answer this question in detail throughout this article.

By definition, facial recognition is a technology capable of recognizing a person based on their face. It relies on AI and machine learning algorithms that capture, store, and analyze facial features in order to match them against images of individuals in a pre-existing database and, often, against other information about them stored there. Facial recognition falls under the umbrella term biometrics, which also includes fingerprint, palm print, eye scanning, gait, voice, and signature recognition.

One widely used approach is image-based neural network face recognition, which can automatically find and extract faces from a whole image. This group of approaches, referred to as deep face recognition, is built on deep convolutional neural networks (CNNs) and is generally more accurate than older feature-based methods.

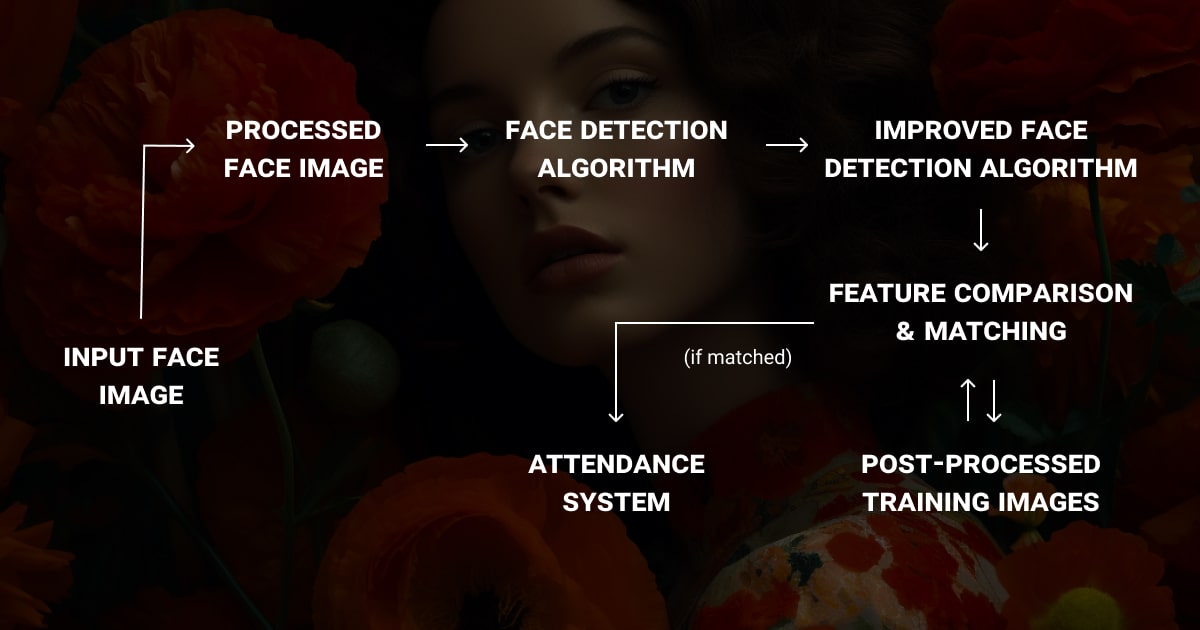

How a Facial Recognition Algorithm Works

Machine learning algorithms for face recognition are trained on annotated data to perform a set of complex tasks that require numerous steps and advanced engineering to complete. To distill the process, here is the basic idea of how a facial recognition algorithm usually works.

Step 1: Detecting and Capturing the Face

Your face is detected, and a picture is captured from a photo or video.

Step 2: Analyzing Facial Features

The software reads the geometry of your face, for example the distance between the eyes or the shape of the jawline.

Step 3: Mapping Techniques for Facial Detection

The key factors in this step depend on the mapping technique used, typically feature vectors or points of interest (landmarks). Both 2D and 3D masks are often used here.
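
To make the "points of interest" idea concrete, here is a minimal sketch of how a handful of landmarks could be turned into a feature vector. The landmark names and coordinates are made up for illustration, and dividing by the distance between the eyes is just one simple normalization choice, not the method of any particular product.

```python
import math
from itertools import combinations

# Hypothetical 2D landmarks (pixel coordinates), for illustration only.
landmarks = {
    "left_eye": (30.0, 40.0),
    "right_eye": (70.0, 40.0),
    "nose_tip": (50.0, 60.0),
    "mouth_center": (50.0, 80.0),
}


def landmark_features(points):
    """Return pairwise landmark distances scaled by the inter-eye distance."""
    eye_dist = math.dist(points["left_eye"], points["right_eye"])
    if eye_dist == 0:
        raise ValueError("eye landmarks coincide; cannot normalize")
    names = sorted(points)  # fixed order so vectors from two faces line up
    return [
        math.dist(points[a], points[b]) / eye_dist
        for a, b in combinations(names, 2)
    ]


features = landmark_features(landmarks)

# Doubling every coordinate (the person stands closer to the camera)
# leaves the normalized features unchanged.
scaled = {name: (2 * x, 2 * y) for name, (x, y) in landmarks.items()}
scaled_features = landmark_features(scaled)
```

Because every distance is divided by the same reference length, the vector describes the proportions of the face rather than its size in the picture.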

Step 4: Encoding and Matching the Facial Signature

The algorithm encodes your face into a unique signature and compares it with a database, often using sequences of images to improve accuracy.
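
The encoding and matching step can be sketched roughly as follows. We assume that some model has already produced a numeric signature (embedding) for each video frame. The tiny 4-dimensional vectors, the names in the database, and the choice of cosine similarity are all illustrative assumptions.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(v * v for v in vec))
    if norm == 0:
        raise ValueError("zero vector cannot be normalized")
    return [v / norm for v in vec]


def cosine_similarity(a, b):
    """Similarity in [-1, 1]; higher means the signatures are closer."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))


def average_signature(frames):
    """Average per-frame embeddings into one signature for the sequence."""
    n = len(frames)
    return [sum(column) / n for column in zip(*frames)]


def best_match(signature, database):
    """Return (name, similarity) for the closest enrolled signature."""
    return max(
        ((name, cosine_similarity(signature, ref)) for name, ref in database.items()),
        key=lambda pair: pair[1],
    )


# Hypothetical enrolled signatures; real embeddings have far more dimensions.
database = {
    "alice": [0.9, 0.1, 0.0, 0.2],
    "bob": [0.1, 0.8, 0.3, 0.0],
}

# Three noisy frames of the same person, averaged before matching.
frames = [
    [0.8, 0.2, 0.1, 0.2],
    [1.0, 0.0, 0.0, 0.3],
    [0.9, 0.1, -0.1, 0.1],
]
probe = average_signature(frames)
name, score = best_match(probe, database)
```

Averaging several frames smooths out per-frame noise, which is one simple way a sequence of images can improve accuracy.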

Step 5: Verifying and Taking Action

If a match is found, the system may initiate further actions depending on the algorithm’s purpose.
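
A hedged sketch of this last step: the similarity score is compared with a threshold, and the result is mapped to an action that depends on the purpose of the system. The threshold value of 0.6, the purposes, and the action names are hypothetical and would be tuned or defined per application.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    accepted: bool
    action: str


def verify(similarity, threshold=0.6, purpose="door_access"):
    """Map a similarity score to an action (threshold and actions are illustrative)."""
    actions = {
        "door_access": ("unlock_door", "keep_locked"),
        "payment": ("authorize_payment", "request_pin"),
    }
    if purpose not in actions:
        raise ValueError(f"unknown purpose: {purpose}")
    on_match, on_reject = actions[purpose]
    accepted = similarity >= threshold
    return Decision(accepted, on_match if accepted else on_reject)


granted = verify(0.82)                     # above the threshold
denied = verify(0.41, purpose="payment")   # below the threshold
```

Raising the threshold makes false accepts less likely but rejects more genuine users, so the right value depends on how costly each kind of error is.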

There are many ready-made face recognition algorithms written in Python, R, Lisp, or Java. Still, depending on the time and budget available, many engineers choose to build custom ones to fit specific research or business purposes.

For an advanced face recognition system (or image recognition in general), consider using expert computer vision services to optimize the performance and accuracy of your model. NLP services, by contrast, are best suited for tasks involving text or audio data.

The Main Uses of a Facial Recognition Algorithm (and Potential Risks)

A face recognition system built on machine learning algorithms has many fields of application. The most common ones are related to:

- Security and surveillance (law enforcement agencies or airports),

- Social media (selling data, personalization),

- Banking and payments,

- Smart homes,

- Personalized marketing experiences.

However, this is not the whole picture. Face recognition algorithms are also changing our everyday lives in more subtle ways, and some of these cases show that the technology is still far from infallible.

Deepfake software, a technology related to face recognition and based on deep learning, swaps the faces of individuals in videos. It has already been used by a politician from India's ruling party to gain favor in elections. In China, a facial recognition system mistook a famous businesswoman's face printed on a bus for a jaywalker and automatically issued her a fine. In addition, numerous studies in the USA and the UK have found that facial recognition AI has significant trouble recognizing non-white faces, is often biased by gender, and in some deployments returns “false positives” the majority of the time, increasing the probability of grievous consequences.
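
It can seem surprising that a system could be wrong in most of its alerts. The sketch below uses invented numbers (they are not taken from any of the studies mentioned) to show how this can happen when very few of the scanned faces are actually on a watchlist, and how false positive rates could be compared across demographic groups.

```python
def alert_breakdown(crowd_size, watchlist_present, true_positive_rate, false_positive_rate):
    """Return (true_alerts, false_alerts, share_of_alerts_that_are_false)."""
    true_alerts = watchlist_present * true_positive_rate
    false_alerts = (crowd_size - watchlist_present) * false_positive_rate
    total = true_alerts + false_alerts
    share_false = false_alerts / total if total else 0.0
    return true_alerts, false_alerts, share_false


def false_positive_rate_by_group(records):
    """records: iterable of (group, predicted_match, actual_match) tuples."""
    counts = {}
    for group, predicted, actual in records:
        if actual:
            continue  # only non-matching pairs can produce false positives
        false_pos, total = counts.get(group, (0, 0))
        counts[group] = (false_pos + int(predicted), total + 1)
    return {group: fp / total for group, (fp, total) in counts.items()}


# Hypothetical scenario: 100,000 faces scanned, 10 of them on a watchlist,
# 90% of watchlisted faces flagged, 0.1% of everyone else flagged by mistake.
true_alerts, false_alerts, share_false = alert_breakdown(100_000, 10, 0.90, 0.001)

# Hypothetical evaluation records for two demographic groups.
records = [
    ("group_a", True, False), ("group_a", False, False),
    ("group_a", False, False), ("group_a", False, False),
    ("group_b", True, False), ("group_b", True, False),
    ("group_b", False, False), ("group_b", False, False),
    ("group_a", True, True),
]
rates = false_positive_rate_by_group(records)
```

In this made-up scenario, roughly 9 alerts are correct and about 100 are false, so more than 90% of alerts point at the wrong person even though the per-face error rate looks small. The per-group breakdown is the kind of check that can reveal whether one group is misidentified more often than another.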

This raises the question: is facial recognition safe for us?

Why Quality Data Gathering and Data Labeling Matter

What are the possible solutions for potentially unsafe facial authentication in AI? How can we make sure that facial recognition software is safe to develop and use? One thing we know for sure: two processes matter the most in the development of an AI system. These are data collection and data labeling.

Both high-quality data and expert data annotation have a dramatic impact on AI technology development. When annotations in an image or video dataset are low quality, not diverse enough, or contain too many errors, even the best technology falls short. Additionally, when dealing with large amounts of sensitive data, its usage, access, and the possibility of a breach are all serious issues that must be accounted for.
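
One common way to put a number on annotation quality is to have two annotators label the same items and measure how much they agree beyond chance. Below is a minimal sketch using Cohen's kappa. The labels are invented, and this is only one of several possible quality checks.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators for the same eight image crops.
annotator_1 = ["face", "face", "no_face", "face", "no_face", "face", "no_face", "face"]
annotator_2 = ["face", "face", "no_face", "no_face", "no_face", "face", "face", "face"]

kappa = cohens_kappa(annotator_1, annotator_2)
```

Here the annotators agree on 6 of 8 items, but kappa comes out at about 0.47 because some of that agreement would be expected by chance. A low value is a signal to revisit the labeling guidelines before training on the data.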

It gets even more complicated with GDPR or CCPA. Data privacy and security legislation does protect individuals and expand their rights. It is also quite restrictive about the types of biometric data that can be collected or analyzed, so ensuring compliance for projects that involve images of faces can be tricky. The three most important tips for avoiding legal trouble in biometric facial recognition development are:

- Get the user's consent;

- Do a thorough risk assessment;

- Use anonymization techniques for big data.
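
As a small illustration of the first and third tips, the sketch below filters out records without consent and replaces the real identifier with a keyed hash before the images go to labeling. The record fields, the key, and the 16-character token length are assumptions made for the example.

```python
import hashlib
import hmac


def pseudonymize(subject_id, secret_key):
    """Replace a real identifier with a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(secret_key, subject_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


# Hypothetical key; in practice it would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-randomly-generated-key"

# Hypothetical dataset records.
records = [
    {"subject": "jane.doe@example.com", "image": "img_0001.png", "consent": True},
    {"subject": "john.roe@example.com", "image": "img_0002.png", "consent": False},
    {"subject": "jane.doe@example.com", "image": "img_0003.png", "consent": True},
]

# Keep only consented records and drop the raw identifier before labeling.
labeling_queue = [
    {"subject_token": pseudonymize(r["subject"], SECRET_KEY), "image": r["image"]}
    for r in records
    if r["consent"]
]
```

Note that this is pseudonymization rather than full anonymization: the tokens hide names, but the face images themselves can still identify people, so consent and risk assessment remain necessary.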

To enhance the labeling process, integrating LLM fine-tuning can streamline and improve data annotation. A fine-tuned model can help identify and classify complex visual features within datasets. This approach supports higher-quality annotations and can also make it easier to meet privacy standards.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance based on a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, even your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

Which algorithm is used in face recognition?

Face recognition often uses models based on convolutional neural networks (CNNs), such as FaceNet, DeepFace, VGG-Face, and ArcFace. Older methods include Eigenfaces, Fisherfaces, and LBPH, but deep learning models are generally more accurate.
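
To give a feel for one of the older methods, here is a minimal sketch of the local binary pattern that LBPH is built on. Each pixel is compared with its eight neighbors and the results are packed into one byte. The neighbor order and the 3x3 patch values are arbitrary choices for this example.

```python
def lbp_code(patch):
    """Compute the 8-bit local binary pattern for the center of a 3x3 patch."""
    center = patch[1][1]
    # Neighbors visited clockwise, starting from the top-left corner.
    neighbors = [
        patch[0][0], patch[0][1], patch[0][2],
        patch[1][2], patch[2][2], patch[2][1],
        patch[2][0], patch[1][0],
    ]
    code = 0
    for bit, value in enumerate(neighbors):
        if value >= center:
            code |= 1 << bit  # set the bit when the neighbor is at least as bright
    return code


# Hypothetical grayscale values.
patch = [
    [6, 5, 2],
    [7, 6, 1],
    [9, 8, 7],
]
code = lbp_code(patch)

# Making the whole patch brighter does not change the pattern.
brighter = [[value + 10 for value in row] for row in patch]
brighter_code = lbp_code(brighter)
```

LBPH then builds histograms of these codes over regions of the face and compares the histograms, which makes it fairly tolerant of uniform lighting changes.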

How does the face detection algorithm work?

Face detection finds faces in images by spotting patterns in pixel values. It uses Haar cascades, HOG + SVM, or deep learning models like MTCNN and RetinaFace. These methods separate faces from other objects.
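
The "patterns in pixel values" behind Haar cascades can be sketched with a toy example. A Haar-like feature is the difference between the pixel sums of two adjacent rectangles, and an integral image lets each sum be read with four lookups. The 4x4 image below is invented, and a real detector combines thousands of such features in a trained cascade.

```python
def integral_image(img):
    """Summed-area table with an extra zero row and column."""
    height, width = len(img), len(img[0])
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += img[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def rect_sum(table, top, left, height, width):
    """Sum of the pixels in a rectangle using four table lookups."""
    bottom, right = top + height, left + width
    return table[bottom][right] - table[top][right] - table[bottom][left] + table[top][left]


def two_rect_feature(table, top, left, height, width):
    """Haar-like feature: lower half of a window minus its upper half."""
    half = height // 2
    upper = rect_sum(table, top, left, half, width)
    lower = rect_sum(table, top + half, left, half, width)
    return lower - upper


# Toy 4x4 grayscale image: a dark band (eye region) above a bright band (cheeks).
image = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
table = integral_image(image)
response = two_rect_feature(table, 0, 0, 4, 4)
```

A large response means the window has the dark-over-bright structure the feature looks for. A flat window would give a response near zero.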

What is the CNN algorithm in face recognition?

CNNs help computers recognize faces by learning edges, textures, and facial features step by step. Models like FaceNet and VGG-Face turn faces into numeric vectors (embeddings) so they can be compared and matched.
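
The first of those steps, picking up edges, can be illustrated with a single convolution. In this sketch the kernel is a hand-written Sobel filter, whereas a CNN would learn its kernels from training data. The toy image is invented for the example.

```python
def conv2d_valid(image, kernel):
    """Slide a kernel over an image without padding (cross-correlation, as in CNN layers)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[y + i][x + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0, value) for value in row] for row in feature_map]


# Hand-written Sobel kernel that responds to dark-to-bright vertical edges.
sobel_x = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Toy image: dark left half, bright right half.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]
edges = relu(conv2d_valid(image, sobel_x))
```

The output is non-zero only in the columns where the window straddles the dark-to-bright boundary. Deeper layers combine many such responses into textures and, eventually, facial features.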

Which algorithm is best for facial emotion recognition?

Deep learning models, especially CNNs and RNNs (like LSTMs), work best for emotion detection. Popular choices include ResNet, VGG-Face, MobileNet, and CNN-RNN models, which analyze facial expressions to identify emotions.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.