Small Language Models: Balancing Size and Performance

Table of Contents

TL;DR

- Small language models are compact NLP models designed for efficiency in resource-constrained environments.

- They reduce costs, improve speed, and are easily customizable for niche tasks.

- Common applications include chatbots, text classification, summarization, and language translation.

- Challenges include limited capacity for complex tasks, data dependency, and potential biases.

- Future trends focus on efficiency, hybrid systems, domain-specific models, and sustainability.

What Are Small Language Models?

Small language models are compact neural networks designed to handle natural language processing (NLP) tasks efficiently. Unlike their larger counterparts, they use fewer computational resources while still achieving reasonable performance on specific tasks.

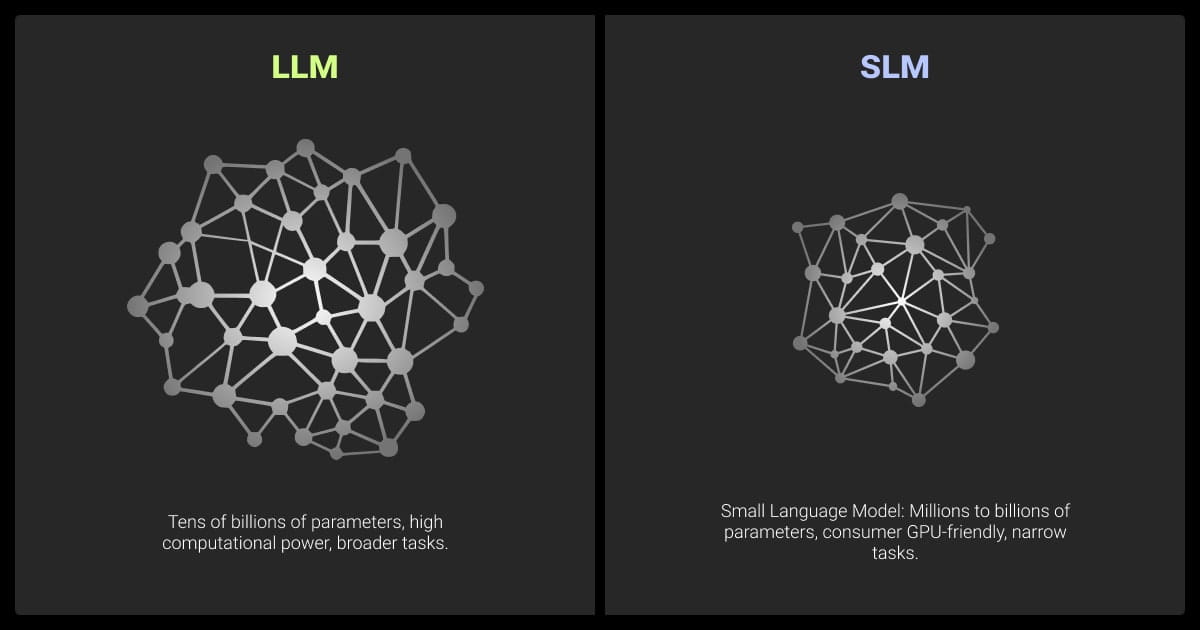

How are they different from large language models? Small language models focus on doing more with less. While LLMs handle diverse and complex queries, SLMs excel in specialized tasks and low-resource deployments. For example, Phi-3.5 is among the best small language models that deliver strong results in reasoning tasks, even outperforming some LLMs in efficiency.

While large language models rely on massive datasets and billions of parameters, SLMs typically operate with a few million to a few billion parameters, offering a balance between performance and resource efficiency.

Key Features of Small Language Models

Compact Size

Small language models typically have parameter sizes ranging from a few million to several billion, compared to the hundreds of billions in LLMs.

Efficiency

They require less computational power and memory, making them ideal for resource-constrained environments like mobile devices and edge computing.

Customization-Friendly

SLMs can be easily fine-tuned for specific tasks, enabling higher precision in niche domains.

Faster Processing

Their smaller size leads to faster response times, crucial for real-time applications like chatbots or voice assistants.

Small Language Models Examples

| Model | Parameters | Key features | Best for |

| DistilBERT | ~66M | Lightweight version of BERT, faster and smaller | Sentiment analysis, text classification |

| Phi-3.5 | ~3.8B | Long context window (128K tokens), multilingual | Document summarization, logical reasoning |

| TinyLlama | ~1.1B | Optimized for mobile and edge devices | Commonsense reasoning, mobile AI |

| MobileBERT | ~4.4M-29M | Designed for mobile devices | On-device NLP |

| Cerebras-GPT | 111M-2.7B | Compute-efficient and optimized for small devices | Lightweight NLP tasks |

Why Choose Small Language Models?

Small language models are the right choice when:

- Resource constraints are a concern: They run efficiently on low-power hardware like Raspberry Pi or smartphones.

- Real-time performance is critical: Their faster inference times make them suitable for latency-sensitive tasks.

- Niche applications are needed: They perform better in domain-specific use cases with fine-tuned training on specialized datasets.

For example, DistilBERT is 40% smaller than BERT but retains 97% of its accuracy on standard benchmarks, making it a common choice in applications where speed and size matter.

Why Small Language Models Matter in Machine Learning

Small language models (SLMs) are transforming machine learning workflows by providing efficient, cost-effective solutions for natural language processing (NLP) tasks.

Their ability to balance performance with resource constraints makes them invaluable in real-world machine learning applications, from training pipelines to deployment.

Key Advantages of SLMs in Machine Learning

Efficiency in Training

SLMs require fewer computational resources during training, making them accessible even for teams with limited hardware or budgets. Techniques like knowledge distillation and pruning reduce training time while maintaining acceptable levels of accuracy. They can be quickly fine-tuned with smaller datasets, which is ideal for domain-specific ML tasks.

Streamlined Deployment

Due to their smaller size, SLMs integrate seamlessly into machine learning pipelines, particularly in edge computing and mobile applications. They enable on-device machine learning, reducing reliance on cloud infrastructure and enhancing privacy.

Real-Time Inference for ML Applications

SLMs process data faster, delivering real-time predictions and responses. This makes them ideal for latency-sensitive machine learning tasks such as chatbot deployment, recommendation systems, or predictive text input.

Cost-Efficient Machine Learning

These models reduce costs not just in training, but also in inference and maintenance. They lower the barrier to entry for startups and smaller organizations, enabling machine learning projects without significant infrastructure investments.

Adaptability to Specialized Tasks

Small language models are easier to fine-tune for specific ML applications like medical NLP, legal document classification, or customer support automation. Their reduced complexity ensures quicker adaptation to niche requirements.

Top Use Cases for Small Models in ML Workflows

Small language models enhance machine learning processes in scenarios like:

- On-Device Learning: Running lightweight models on devices like smartphones or IoT systems without requiring external servers.

- Low-Resource Applications: Training and deploying models in environments with limited computational power.

- Privacy-First ML: Processing sensitive data locally to meet privacy and compliance standards.

- Quick Experimentation: Using smaller models to test hypotheses rapidly before scaling to larger models.

While small language models are often used for NLP tasks, they also complement AI workflows in areas like image recognition by processing associated metadata or descriptions.

Small Language Models vs Large Language Models

Small language models vs large language models are designed to tackle natural language processing (NLP) tasks, but they differ significantly in size, capabilities, and use cases. Understanding their differences helps you choose the right model among different types of LLMs.

| Feature | SLMs | LLMs |

| Size | Millions to a few billion parameters | Hundreds of billions or trillions of parameters |

| Hardware Needs | Can run on standard hardware, even mobile | Requires GPUs or cloud-based infrastructure |

| Training Time | Shorter training time | Long and resource-intensive training |

| Cost | Cost-effective to train and deploy | Expensive to develop, train, and deploy |

| Inference Speed | Low latency, ideal for real-time tasks | Higher latency due to size and complexity |

| Task Complexity | Best for simple or niche tasks | Handles complex, multi-domain applications |

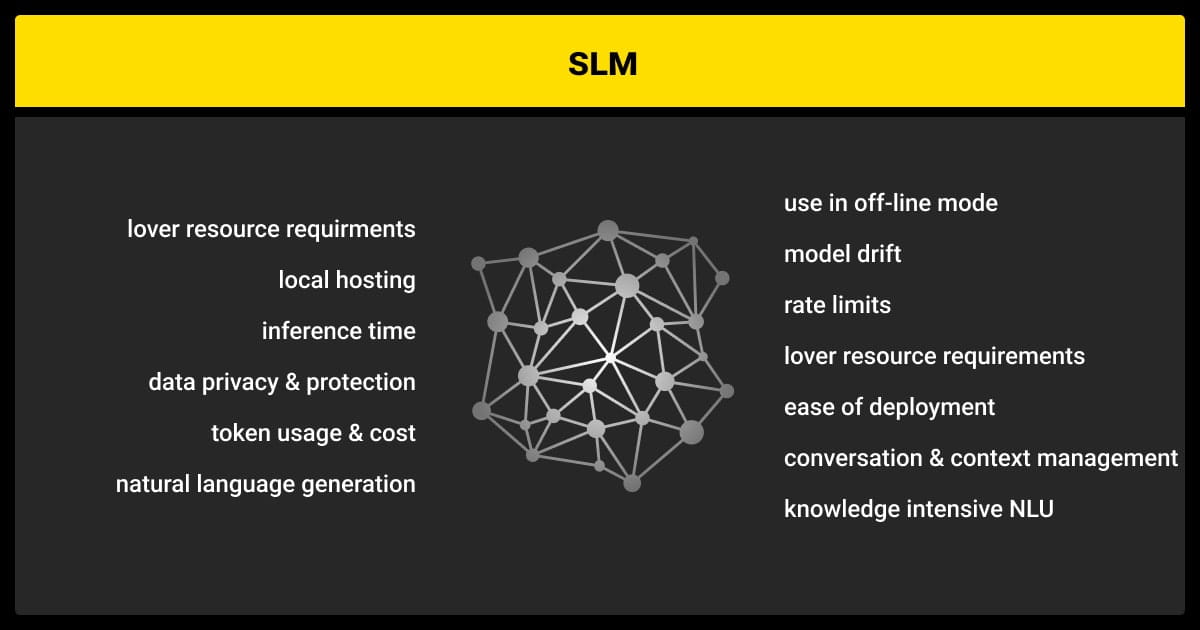

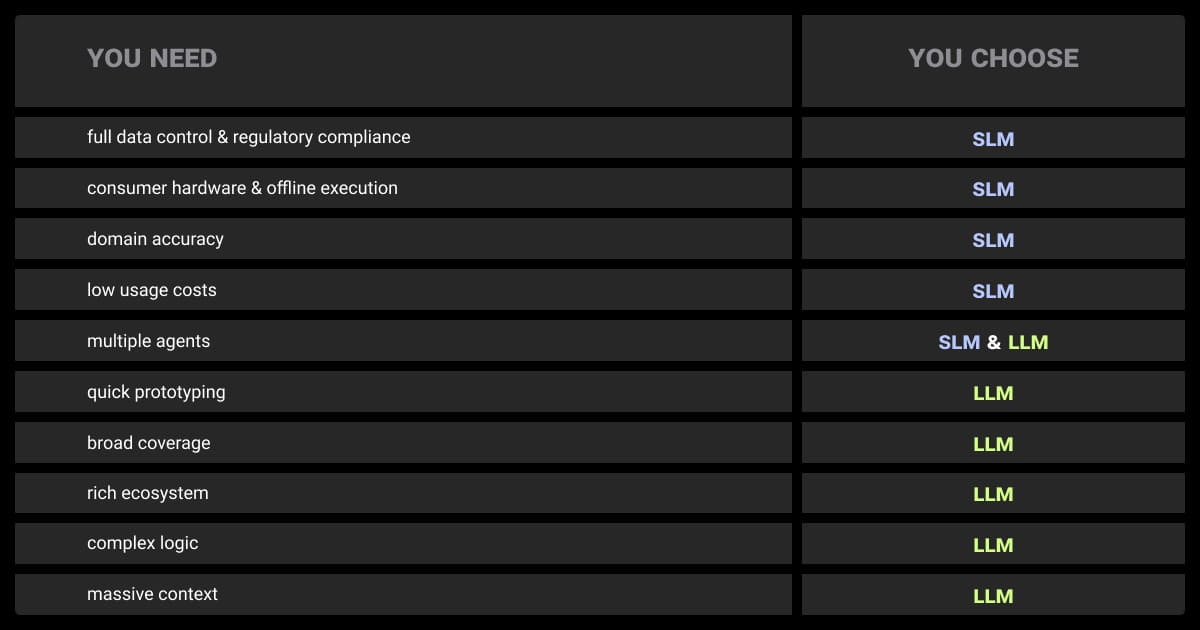

When to Use Small Language Models

Small language models excel in scenarios where efficiency and cost matter more than general-purpose capabilities. They are ideal for:

- Low-Resource Environments: Deploying models on mobile or IoT devices with limited computational power.

- Privacy-Sensitive Applications: Performing on-device inference to keep data secure.

- Niche Use Cases: Handling specialized tasks like medical text analysis or sentiment classification.

- Real-Time Applications: Chatbots, predictive text, or other latency-sensitive tasks.

When to Use Large Language Models

Large models are better suited for complex, multi-faceted tasks requiring a wide range of knowledge. They are ideal for:

- General-Purpose Applications: Answering diverse queries across multiple domains.

- Creative Content Generation: Writing essays, creating stories, or generating detailed reports.

- Advanced NLP Tasks: Summarizing long texts, understanding nuanced language, or handling multilingual content.

- Research and Development: Exploring state-of-the-art NLP capabilities.

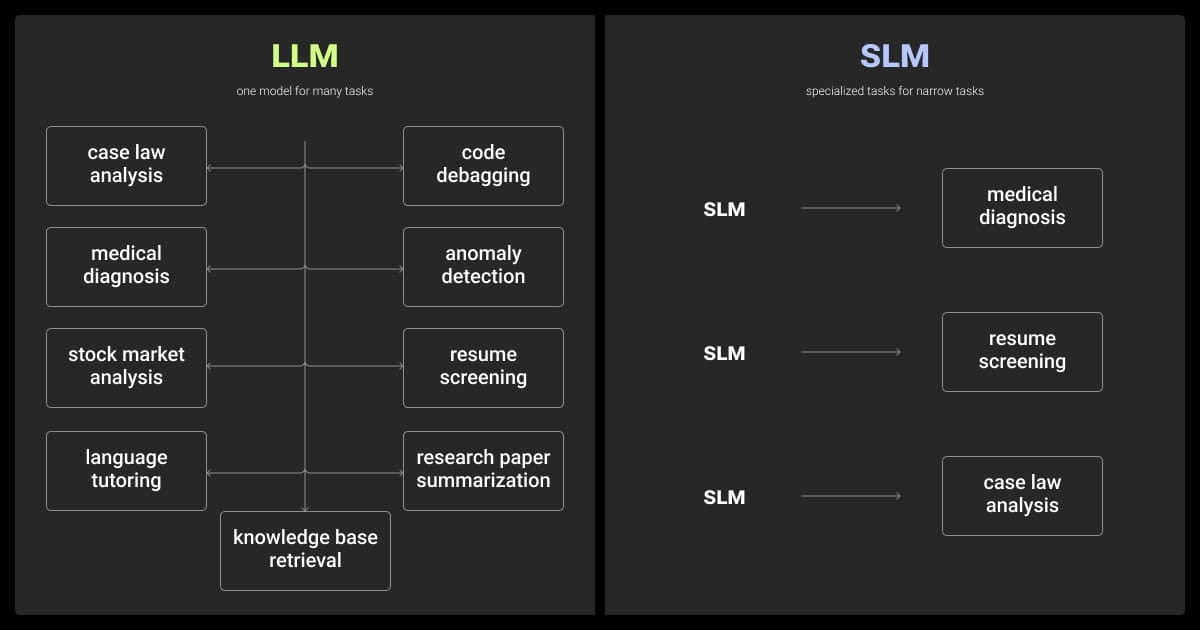

Hybrid Use Cases: Combining Small and Large Models

In some workflows, small and large language models can complement each other:

- Task Routing: Use a small model to handle simple queries and forward complex ones to a large model.

- Layered Systems: Deploy small models for front-end applications while using large models for backend processing.

- Pre-Processing with Small Models: Use SLMs to clean or pre-process data before feeding it into larger models.

The best small language models prioritize efficiency, cost, and speed, making them accessible and practical for focused tasks. Large language models, on the other hand, excel in versatility and complexity but demand more resources. Choosing the right model depends on your goals, available resources, and the nature of your application.

How Small Language Models Work

Small language models rely on a streamlined architecture and advanced optimization techniques to perform natural language processing (NLP) tasks efficiently. Their design focuses on reducing size and computational requirements while maintaining practical performance.

Core Components of Small Language Models

Transformer Architecture

Like their larger counterparts, SLMs are built using transformer models, which enable efficient handling of sequences like text.

Key components include:

- Self-attention mechanism: Helps the model focus on the most relevant words in a sentence.

- Feedforward layers: Process the context created by self-attention to generate predictions.

- Layer normalization: Keeps outputs stable and consistent across layers.

Parameter Optimization

SLMs have fewer parameters than large models, typically ranging from millions to a few billion. This reduction minimizes memory usage and computational costs.

Training Approach

SLMs are trained on similar datasets as large models but rely on techniques to compress knowledge without losing performance.

Techniques that Enable Small Models

| Technique | How It Works | Benefit |

| Knowledge Distillation | A smaller model (student) learns from a larger, pre-trained model (teacher). | Retains performance while reducing size |

| Pruning | Removes unimportant parameters or connections to reduce model complexity. | Speeds up inference and reduces storage needs |

| Quantization | Converts data precision from higher (e.g., 32-bit) to lower (e.g., 8-bit). | Reduces memory usage and computational load |

Knowledge distillation trains a smaller ‘student’ model to mimic a larger 'teacher' model, preserving accuracy while reducing size. This is particularly useful in resource-constrained environments like mobile or edge devices.

How Inference Works in Small Language Models

Inference refers to the process where the trained model generates predictions or outputs based on input data. Here’s how it works in SLMs:

- Input Encoding: The model converts text input into numerical representations (tokens).

- Contextual Understanding: Using self-attention, the model identifies relationships between words to understand context.

- Prediction: The model generates the next word, sentence, or classification output based on learned patterns.

How to Build and Fine-Tune Small Language Models

Building and fine-tuning small language models (SLMs) involves adapting pre-trained models or training from scratch using optimized techniques. Their smaller size makes them easier to customize for specific tasks while reducing costs and resource demands.

Steps to Build a Small Language Model

Start With Pre-Trained Models

Use existing pre-trained models like DistilBERT or TinyBERT as a starting point. Pre-trained models already have general language knowledge, saving time and computational resources.

Prepare High-Quality Data

Collect domain-specific data tailored to the target application (e.g., medical, legal, or customer service). Preprocess data by tokenizing, cleaning, and formatting it to match the input requirements of the model.

Apply Compression Techniques

- Knowledge Distillation: Train a smaller “student” model by learning from a larger “teacher” model.

- Pruning: Remove unnecessary parameters or connections to reduce size and speed up processing.

- Quantization: Reduce data precision (e.g., converting from 32-bit to 8-bit) to optimize memory usage.

Train the Model

Use frameworks like Hugging Face, PyTorch, or TensorFlow for training. Adjust hyperparameters such as batch size, learning rate, and training epochs for optimal performance.

How to Fine-Tune Small Language Models

Fine-tuning allows SLMs to adapt to specific tasks by retraining them on task-specific datasets. This customization improves accuracy and relevance.

Select the Right Model

Choose a pre-trained model suited for the task. Among some small language models examples are:

- DistilBERT: For text classification and sentiment analysis.

- GPT-Neo: For content generation or summarization.

Prepare a Task-Specific Dataset

Collect labeled data specific to the task (e.g., customer sentiment labels, medical terminology). Using LLM data labeling ensures precise and high-quality annotations, improving model performance.

Leveraging data annotation services is crucial for preparing high-quality datasets that improve the accuracy of small language models during fine-tuning.

Customize the Model

Freeze early layers of the model to preserve general language knowledge. Train only the task-specific layers on your dataset.

Evaluate and Refine

Test the fine-tuned model on a validation dataset to assess accuracy and relevance. Adjust hyperparameters or expand the dataset if performance falls short.

Pruning removes less important parameters, while quantization reduces precision, helping decrease size without drastically compromising performance. Fine-tuning smaller models on specific tasks further enhances their efficiency for narrow domains.

Best Practices for Building and Fine-Tuning

- Start Small: Use smaller datasets or freeze layers initially to save time and resources.

- Avoid Overfitting: Use techniques like regularization or early stopping to ensure generalizability.

- Leverage Transfer Learning: Adapt pre-trained models to similar tasks to boost performance with minimal training.

Tools for Building and Fine-Tuning

| Tool/Framework | Features | Use Case |

| Hugging Face | Pre-trained models and fine-tuning APIs | Quick customization and deployment |

| PyTorch | Flexible deep learning framework | Training and optimizing models |

| TensorFlow | Scalable machine learning library | Large-scale model training |

Pro tip: Accurate data annotation is the backbone of training effective small language models, ensuring precise outcomes for tasks like classification or sentiment analysis. Partnering with a reliable data annotation company can simplify the process of collecting and labeling domain-specific datasets for training SLMs.

By following these steps and leveraging best practices, you can efficiently build and fine-tune small language models tailored to your specific needs.

Efficient coding practices and optimized algorithms can significantly improve performance without inflating size. Tailoring small models to specific needs ensures they remain robust within constrained environments.

Challenges in Using Small Language Models

- Limited Capacity for Complex Tasks: SLMs struggle with multi-domain tasks and nuanced problem-solving due to fewer parameters.

- Dependency on High-Quality Data: Poor-quality or unbalanced datasets can lead to inaccurate outputs and biases.

- Reduced Generalization: SLMs often require re-training when applied to tasks outside their fine-tuned scope.

- Risk of Hallucinations: They can produce factually incorrect or misleading outputs, reducing reliability.

- Performance Trade-Offs: Smaller size compromises precision, context length, and overall accuracy.

- Ethical and Bias Concerns: SLMs may inherit biases from training data, affecting fairness in results.

How to Solve These Challenges

- Focus on task suitability: Use SLMs for targeted, resource-efficient tasks rather than complex, multi-domain applications.

- Use high-quality data: Train models on curated, domain-specific datasets to improve accuracy and reduce bias.

- Combine with LLMs: Use hybrid systems where SLMs handle simpler tasks and LLMs process more complex ones.

- Regular monitoring: Continuously evaluate outputs to detect errors or biases and refine models accordingly.

For businesses aiming to train small language models effectively, understanding data annotation pricing helps in budgeting for high-quality labeled datasets that enhance model performance.

About Label Your Data

If you choose to delegate data annotation, run a free pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

Check our performance based on a free trial

Pay per labeled object or per annotation hour

Working with every annotation tool, even your custom tools

Work with a data-certified vendor: PCI DSS Level 1, ISO:2700, GDPR, CCPA

FAQ

What is an example of a small language model?

DistilBERT is a popular example of a small language model. It’s a compressed version of BERT, designed to be faster and smaller while retaining most of its performance.

What is the difference between LLM and SLM?

LLMs (large language models) are powerful but resource-heavy, designed for complex tasks requiring vast knowledge. SLMs (small language models) are compact, efficient, and optimized for specific or resource-constrained applications.

What is an example of a SLMs?

TinyBERT, MobileBERT, and GPT-Neo are notable small language models examples. These models are lightweight and commonly used in real-time or on-device applications.

What are the top small language models?

Top small language models include DistilBERT, TinyLlama, MobileBERT, GPT-Neo, and Cerebras-GPT. Each offers a balance of performance and efficiency, catering to various tasks like text classification, summarization, and translation.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.