LLM Fine-Tuning Tools: Best Picks for ML Tasks

Table of Contents

- TL;DR

- Why LLM Fine-Tuning Tools Are Essential in 2025

- BasicAI

- Label Studio

- Kili Technology

- Labelbox

- Hugging Face

- SuperAnnotate

- Databricks Lakehouse

- Llama Factory

- Windows AI Studio

- OneLLM

- Oobabooga

- Label Your Data

- Cloud and Open-Source LLM Fine-Tuning Tools

- Why Do You Need LLM Fine-Tuning Tools

- How to Use LLM Tools for Fine-Tuning

- About Label Your Data

- FAQ

TL;DR

Why LLM Fine-Tuning Tools Are Essential in 2025

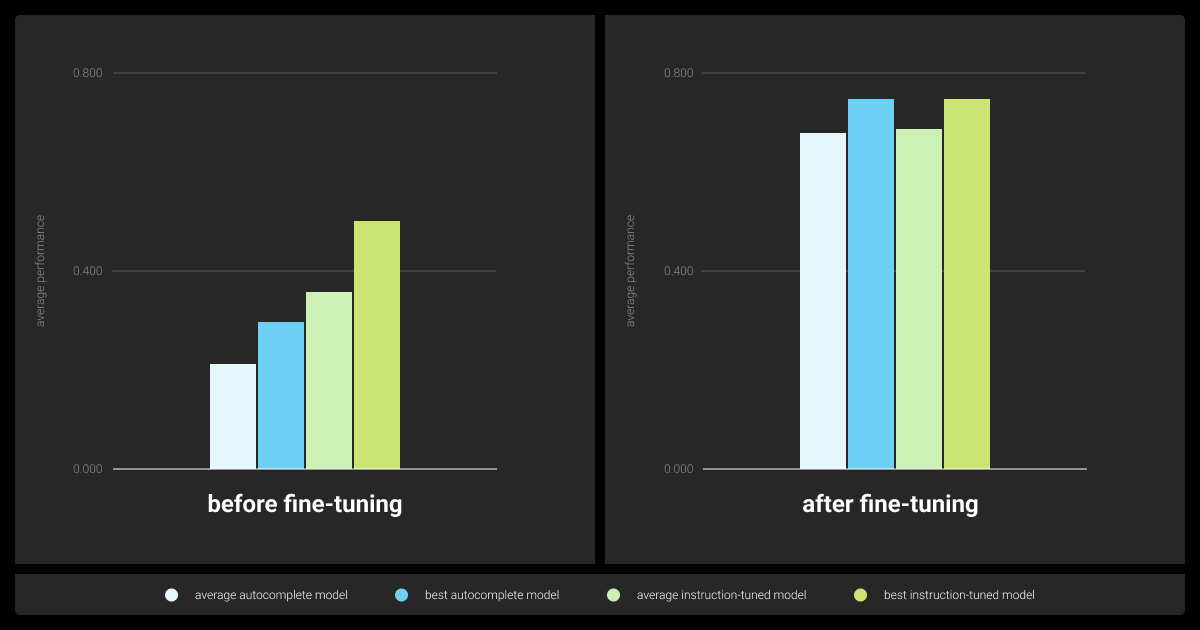

Large language models (LLMs) continued to evolve rapidly through 2024, and fine-tuning has emerged as a critical technique for improving their performance across diverse applications. One recent study reports that fine-tuning with LLM tools can raise model accuracy by up to 20%, significantly boosting effectiveness on specialized tasks.

Here’s a summary of the top LLM fine-tuning tools we’ll discuss. If any of these catch your interest, keep reading to dive deeper into what makes each tool stand out and how it can help optimize your models.

We will also explore some of the top cloud and open-source LLM fine-tuning tools later in this article.

BasicAI

BasicAI is a data annotation platform designed to support the development and fine-tuning of Large Language Models (LLMs) and generative AI by providing an advanced toolset for creating high-quality training datasets.

Key Features:

Versatile Annotation Capabilities: Supports text, image, video, and audio data types for comprehensive AI data preparation.

Collaboration and Workflow Management: Includes project management tools for seamless team collaboration, role-based access, task assignment, and progress tracking.

AI-Powered Automation: Integrates machine learning models to automate repetitive tasks, reducing manual effort and increasing efficiency.

Quality Assurance: Offers quality control mechanisms like consensus scoring and validation workflows to ensure annotation accuracy and consistency.

Customizable Toolset: Allows users to tailor annotation tools to specific project needs.

Scalability: Handles large-scale annotation projects, suitable for enterprises with extensive data requirements.
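
Consensus scoring usually means several annotators label the same item and the platform measures how strongly they agree. The sketch below is a minimal, tool-agnostic illustration that assumes a simple majority vote; it is not BasicAI's actual scoring method, and the function name `consensus` is hypothetical.

```python
from collections import Counter


def consensus(labels: list[str]) -> tuple[str, float]:
    """Return the majority label and the share of annotators who chose it (hypothetical helper)."""
    if not labels:
        raise ValueError("need at least one label")
    # most_common(1) returns [(label, count)]; ties go to the label seen first.
    label, count = Counter(labels).most_common(1)[0]
    return label, count / len(labels)


# Three annotators labeled the same support ticket.
label, score = consensus(["billing", "billing", "technical"])
print(label, round(score, 2))  # billing 0.67
```

Items whose score falls below a chosen threshold (for example, 0.75) could then be routed to a reviewer as part of a validation workflow.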

Use Cases:

BasicAI is ideal for LLM fine-tuning and generative AI training with its detailed text annotation capabilities. It also supports computer vision tasks like object detection and image segmentation, offers precise audio annotation for speech recognition, and aids in annotating medical images and records for AI diagnostics. Additionally, it is perfect for scalable enterprise AI development projects.

Label Studio

Label Studio is an open-source data labeling platform for creating customized annotation tasks, including tasks tailored to fine-tuning LLMs. It supports a wide range of data types, making it highly adaptable.

Key Features:

Custom Data Annotation: Enables precise labeling of data specific to various requirements, such as text generation, content classification, and style adaptation. This feature ensures the creation of high-quality, task-specific datasets necessary for effective fine-tuning.

Collaborative Annotation: Facilitates teamwork by allowing multiple annotators to work on the same dataset, ensuring consistent and accurate annotations. Features like annotation history and disagreement analysis further enhance annotation quality.

Integration with ML Models: Supports active learning workflows where LLMs pre-annotate data, which is then corrected by humans, streamlining the annotation process and improving efficiency.

Label Studio 1.8.0 enhances LLM fine-tuning with a new ranker interface, generative AI templates for supervised fine-tuning, and human preference collection. It also includes an improved labeling experience with stackable side panels, annotation tab carousel, and better request handling. These features streamline the process and improve data quality for fine-tuning models.
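
Disagreement analysis is often summarized with an inter-annotator agreement statistic. As an illustrative sketch (not Label Studio's internal implementation), Cohen's kappa for two annotators compares their observed agreement with the agreement expected by chance; the label values below are made up.

```python
from collections import Counter


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(a) != len(b) or not a:
        raise ValueError("annotations must be non-empty and aligned")
    n = len(a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Expected agreement: probability that two independent annotators with these
    # label frequencies would pick the same label by chance.
    freq_a, freq_b = Counter(a), Counter(b)
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (p_o - p_e) / (1 - p_e)


annotator_1 = ["pos", "pos", "neg", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

A kappa near 1 indicates strong agreement, while values near 0 mean the annotators agree about as often as chance would predict, which signals unclear guidelines or ambiguous items.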

Use Cases:

Label Studio is best suited for enterprises that require high-quality, task-specific datasets and efficient annotation processes to enhance the fine-tuning of LLMs.

Kili Technology

Kili Technology is a comprehensive platform for fine-tuning large language models. It focuses on optimizing model performance through high-quality data annotation and integration with ML workflows, providing a robust environment for building high-quality, domain-specific LLMs.

Key Features:

Collaborative Annotation: Enables multiple users to work together, ensuring data accuracy and consistency.

Multi-Format Support: Handles text, images, video, and audio annotations.

Active Learning: Incorporates machine learning models for pre-annotation and iterative learning.

Quality Control: Features for managing annotation quality, including validation and review processes.
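
Active learning loops commonly send the items the current model is least sure about to human annotators first. The snippet below sketches one common strategy, least-confidence sampling; the class probabilities are made-up placeholders, and nothing here reflects Kili's API.

```python
def least_confident(predictions: dict[str, list[float]], k: int) -> list[str]:
    """Return the ids of the k items whose top predicted class probability is lowest."""
    # Confidence = probability of the most likely class; low confidence = uncertain item.
    ranked = sorted(predictions, key=lambda item_id: max(predictions[item_id]))
    return ranked[:k]


# Hypothetical class probabilities from a pre-annotation model.
preds = {
    "doc-1": [0.95, 0.03, 0.02],
    "doc-2": [0.40, 0.35, 0.25],
    "doc-3": [0.55, 0.40, 0.05],
}
print(least_confident(preds, k=2))  # ['doc-2', 'doc-3']
```

After annotators correct the selected items, the model is retrained and the selection repeats, which is what makes the process iterative.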

Use Cases:

Kili Technology supports customer service automation by fine-tuning models to provide accurate and relevant responses. In healthcare, it improves medical text processing and diagnostics by customizing LLMs to handle specialized medical data. In the legal industry, it helps optimize models to analyze and interpret complex legal documents, streamlining legal research and documentation.

Labelbox

Labelbox provides a comprehensive framework for fine-tuning LLMs, offering tools for project creation, annotation, and iterative model runs. Its cloud-agnostic platform ensures compatibility with various training environments.

Key Features:

Iterative Model Runs: Supports continuous improvement through iterative fine-tuning based on performance diagnostics, helping to identify high-impact data and optimize the model iteratively.

Google Colab Integration: Works with Google Colab, so you can install the required packages and run the fine-tuning workflow directly within a notebook.

Reinforcement Learning from Human Preferences (RLHP, more commonly called RLHF): Improves model performance by iteratively incorporating human feedback, helping the model adapt to real-world requirements.
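
Human preference data is usually collected as pairs of responses in which an annotator marks one as better. A reward model trained on such pairs is often optimized with a pairwise (Bradley-Terry style) loss, sketched below with plain numbers standing in for reward-model scores. This illustrates the general technique, not Labelbox's pipeline.

```python
import math


def pairwise_preference_loss(r_chosen: float, r_rejected: float) -> float:
    """Return -log(sigmoid(r_chosen - r_rejected)).

    The loss is small when the human-preferred response gets the higher reward
    and grows roughly linearly when the ranking is reversed.
    """
    margin = r_chosen - r_rejected
    # Numerically stable softplus(-margin): avoids overflow in exp() for large |margin|.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical reward-model scores for one annotated pair.
print(round(pairwise_preference_loss(3.0, 0.0), 4))  # 0.0486
print(round(pairwise_preference_loss(0.0, 3.0), 4))  # 3.0486
```

Minimizing this loss over many annotated pairs teaches the reward model to score preferred answers higher; that reward signal is then used to fine-tune the LLM itself.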

Use Cases:

Labelbox is ideal for organizations needing a flexible, scalable solution for iterative model improvement and integration with existing cloud services.

Hugging Face

Hugging Face is a leading provider of LLM fine-tuning tools. Its platform is known for its flexibility and broad support for models such as CodeLlama, Mistral, and Falcon.

Key Features:

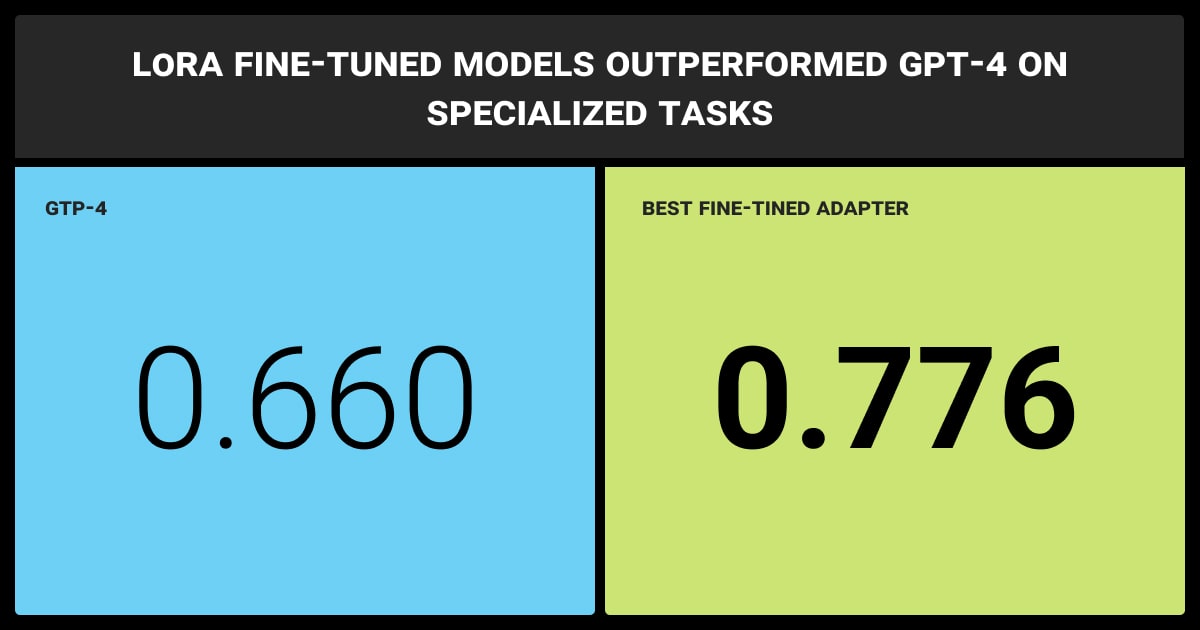

Parameter-Efficient Fine-Tuning (PEFT): Techniques such as LoRA (Low-Rank Adaptation) and QLoRA (Quantized LoRA) significantly reduce memory and compute requirements, making fine-tuning more accessible. Instead of updating every weight, LoRA freezes the pre-trained weights and trains small low-rank matrices added to selected layers; QLoRA additionally loads the frozen base model in 4-bit precision. Both cut resource usage while maintaining comparable performance.

Extensive Library: The TRL library and other Hugging Face tools simplify the integration and setup of fine-tuning tasks. This includes adding special tokens for chat models, resizing embedding layers, and setting up chat templates, which are crucial for training conversational models.
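
To make the LoRA idea concrete: the frozen weight matrix W is left untouched, and the layer output gains a low-rank update (alpha / r) * B * A * x, where A has shape r x d_in and B has shape d_out x r. The pure-Python sketch below shows only that arithmetic with toy 3 x 3 values; in practice Hugging Face's `peft` library applies this to selected layers of a PyTorch model, and the helper names here are hypothetical.

```python
Matrix = list[list[float]]


def matvec(m: Matrix, v: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: list[float], alpha: float) -> list[float]:
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    r = len(A)                         # LoRA rank = number of rows in A
    base = matvec(W, x)                # frozen pre-trained path
    update = matvec(B, matvec(A, x))   # trainable low-rank path
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


# d_in = d_out = 3, rank r = 1.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
A = [[1.0, 1.0, 1.0]]        # shape r x d_in
B = [[0.0], [0.0], [0.0]]    # shape d_out x r, zero-initialized as in LoRA
x = [1.0, 2.0, 3.0]
print(lora_forward(W, A, B, x, alpha=2.0))  # [1.0, 2.0, 3.0]
```

Because B starts at zero, the adapted layer initially reproduces the base model exactly; training then updates only A and B, which is where the memory savings come from.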

Use Cases:

Hugging Face is ideal for businesses and researchers who need a flexible and scalable solution for fine-tuning various LLMs and who want to leverage state-of-the-art techniques like PEFT.

SuperAnnotate

SuperAnnotate focuses on parameter-efficient fine-tuning (PEFT), making it ideal for hardware-limited environments by reducing memory and computational requirements.

Key Features:

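PEFT Techniques: LoRA and QLoRA can reduce the number of trainable parameters by orders of magnitude (the original LoRA paper reports a reduction of up to 10,000 times for GPT-3 175B). Because the base weights stay frozen, these methods also help mitigate catastrophic forgetting and make efficient use of resources.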

Task-Specific Fine-Tuning: Allows precise adjustment of models to excel in particular tasks or domains.
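
The parameter savings come from replacing a full d_out x d_in weight update with two rank-r factors. Here is a quick back-of-the-envelope calculation, assuming a single 4096 x 4096 projection matrix and rank 8 (illustrative values, not SuperAnnotate defaults):

```python
def lora_trainable_params(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight: A (r x d_in) + B (d_out x r)."""
    return r * d_in + d_out * r


full = 4096 * 4096                            # parameters updated by full fine-tuning
lora = lora_trainable_params(4096, 4096, r=8)
print(full, lora, full // lora)  # 16777216 65536 256
```

Across a whole model the ratio grows further, because adapters are typically attached to only some weight matrices while the rest of the network stays frozen.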

Use Cases:

SuperAnnotate is suitable for businesses with limited hardware resources that need efficient fine-tuning methods to maintain model performance across multiple tasks.

Databricks Lakehouse

Databricks Lakehouse is tailored for distributed training and real-time serving of generative AI models, making it a powerhouse for large-scale applications.

Key Features:

Distributed Training: Spreads training across multiple GPUs or nodes, reducing the time needed to fine-tune large models.

Real-Time Serving: Facilitates real-time deployment and serving of fine-tuned models, ensuring high efficiency and performance.
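
The core idea behind synchronous data-parallel training, one common form of distributed training, is that each worker computes gradients on its own shard of a batch, and the gradients are averaged before the weight update. The toy sketch below simulates this on a one-parameter linear model in plain Python; it illustrates the principle only and does not use any Databricks API.

```python
def grad_mse(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient of mean squared error for the model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_grad(w: float, xs: list[float], ys: list[float], num_workers: int) -> float:
    """Split the batch into equal shards, compute per-shard gradients, then average them."""
    if len(xs) % num_workers:
        raise ValueError("batch size must be divisible by num_workers")
    shard = len(xs) // num_workers
    grads = [
        grad_mse(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)
    ]
    return sum(grads) / num_workers  # stands in for an all-reduce average


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2x
w = 0.5
print(grad_mse(w, xs, ys), data_parallel_grad(w, xs, ys, num_workers=2))  # -22.5 -22.5
```

With equal shard sizes, the averaged shard gradients match the full-batch gradient, so adding workers speeds up training without changing the update.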

Use Cases:

Perfect for enterprises looking for robust, scalable solutions for fine-tuning and deploying generative AI models in real-time.

Llama Factory

Llama Factory is an open-source UI platform for fine-tuning large language models. It simplifies the fine-tuning process through an intuitive interface and advanced optimization tools like Unsloth, making it accessible for both beginners and experts in the field.

Key Features:

User-Friendly Interface: Provides an easy-to-navigate GUI for managing the fine-tuning workflow.

Integrated Unsloth Optimization: Reduces memory usage by 62% and speeds up fine-tuning by 2.2x.

Click-to-Optimize: A dedicated "Unsloth" button for hassle-free model optimization.

Open Source: Fully transparent and free to modify, ensuring adaptability to various projects.

Use Cases:

Llama Factory accelerates LLM fine-tuning for natural language processing tasks, such as sentiment analysis and chatbots. It's ideal for researchers optimizing domain-specific models with limited resources or small teams looking for an intuitive yet powerful fine-tuning solution.

Windows AI Studio

Windows AI Studio is a VS Code plugin for fine-tuning LLMs directly within the IDE. It combines ease of access with advanced capabilities, enabling developers to fine-tune models on modest hardware setups.

Key Features:

VS Code Integration: Works seamlessly within the popular IDE.

Hardware Efficiency: Optimized for laptops with limited VRAM and RAM.

Python Code Compatibility: Allows users to write and customize their fine-tuning workflows.

Open Source: Freely available for individual developers.
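
Whether a model fits on a laptop GPU is largely a matter of arithmetic. A rough rule of thumb is that the weights alone need parameters x bytes per parameter. The sketch below applies it to a 7-billion-parameter model (an illustrative size) and ignores activations, optimizer state, and adapter weights, so real requirements are higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


for bits in (32, 16, 4):
    print(f"{bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
# 32-bit: 28.0 GB
# 16-bit: 14.0 GB
# 4-bit: 3.5 GB
```

This is one reason 4-bit quantization, as used in QLoRA, brings 7B-class models within reach of hardware with limited VRAM.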

Use Cases:

Windows AI Studio empowers hobbyists and individual developers to fine-tune smaller LLMs for projects such as custom chatbots, educational tools, and lightweight NLP applications.

OneLLM

OneLLM is a web-based platform designed for end-to-end fine-tuning and deployment of large language models. Its features enable streamlined dataset creation, training, and real-time testing of models.

Key Features:

Dataset Builder: Provides templates for dataset creation, automatically converting data to valid JSON formats.

Real-Time Testing: Enables testing of models directly in the browser.

Comparative Analysis: Includes heatmap reports and scoring tools for evaluating model iterations.

API Key Integration: Tracks model usage and monitors real-world performance.

Use Cases:

OneLLM is tailored for startups and individual developers optimizing LLMs for dynamic use cases, such as customer support systems, content generation, and application-specific NLP tasks.

Oobabooga

Oobabooga (the text-generation-webui project) is a versatile tool commonly used for running and fine-tuning large language models. Although it is best known for inference, it also supports fine-tuning workflows, such as training LoRA adapters, for various LLM tasks.

Key Features:

Multi-Functionality: Combines fine-tuning and inference capabilities.

Community Support: Backed by an active user community.

Flexibility: Compatible with various model architectures and use cases.

Use Cases:

Oobabooga is ideal for experimental projects where both fine-tuning and inference are needed, such as real-time chatbot applications, language translation systems, and educational NLP tools.

Label Your Data

We at Label Your Data optimize your LLMs for better performance and accuracy. Our LLM fine-tuning services address issues like data gaps, hallucinations, and deployment complexities.

Key Features:

Inference Calibration: Adjusting LLM outputs for accuracy and tone.

Content Moderation: Filtering undesirable content in social networks.

Data Enrichment: Enhancing LLMs with business-specific data.

Data Extraction: Training models to extract information from texts and images.

LLM Comparison, Prompts Generation, Image Captioning: Customizing models for specific tasks.

Use Cases:

Our services are ideal for AI corporations and API users needing accurate inference calibration, social networks requiring content moderation, customer support chatbots needing enriched data, and enterprises seeking efficient data extraction.

Run a free pilot with us to experience our LLM services firsthand!

Cloud and Open-Source LLM Fine-Tuning Tools

We should mention that the list of LLM companies and platforms provided above is not exhaustive, though it covers some of the most prominent and widely used solutions for LLM fine-tuning in 2024. Here are a few more tools and platforms that are also noteworthy in this domain:

OpenAI’s API

OpenAI offers fine-tuning for several of its models, including GPT-4-class models such as GPT-4o. The API lets users fine-tune models on their own datasets to improve performance for specific applications, and OpenAI provides comprehensive documentation and support for various fine-tuning tasks.
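
OpenAI's fine-tuning for chat models expects training data as JSON Lines: one conversation per line, each with a `messages` list of `system`, `user`, and `assistant` turns. The sketch below converts simple question/answer pairs into that shape using only the standard library. The input keys `question` and `answer` and the system prompt are hypothetical placeholders; check OpenAI's current documentation before uploading, since accepted formats and fine-tunable models change over time.

```python
import json


def to_chat_jsonl(pairs: list[dict[str, str]], system_prompt: str) -> str:
    """Convert hypothetical {'question', 'answer'} records into chat-format JSON Lines."""
    lines = []
    for pair in pairs:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": pair["question"]},
                {"role": "assistant", "content": pair["answer"]},
            ]
        }
        # ensure_ascii=False keeps non-English text readable in the output.
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines) + "\n"


data = [{"question": "How do I reset my password?", "answer": "Use the 'Forgot password' link."}]
jsonl = to_chat_jsonl(data, system_prompt="You are a helpful support agent.")
print(jsonl, end="")
```

The resulting string can be written to a `.jsonl` file and uploaded as the training file for a fine-tuning job.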

Azure Machine Learning

Microsoft Azure Machine Learning provides robust support for training and fine-tuning large models. It includes features like automated machine learning (AutoML), distributed training, and integration with various ML frameworks, making it a powerful tool for fine-tuning LLMs.

Google Cloud AI Platform

Google Cloud AI Platform (now part of Vertex AI) offers a managed service for training and deploying machine learning models. It supports TensorFlow, PyTorch, and other ML frameworks, providing tools for data preparation, training, and fine-tuning large language models.

Amazon SageMaker

Amazon SageMaker is a fully managed service that provides every developer and data scientist with the ability to build, train, and deploy machine learning models quickly. SageMaker supports fine-tuning of models with custom datasets and offers features like automatic model tuning (hyperparameter optimization).
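
Automatic model tuning searches a hyperparameter space and keeps the configuration with the best objective metric. SageMaker's tuner offers several strategies, including Bayesian and random search; the sketch below shows the simplest one, random search, in plain Python. The objective `validation_score` is made up to stand in for a real validation metric, and none of the names are SageMaker APIs.

```python
import math
import random


def validation_score(learning_rate: float, lora_rank: int) -> float:
    """Hypothetical objective: pretends scores peak near lr=2e-4 and rank=16."""
    return -abs(math.log10(learning_rate) - math.log10(2e-4)) - abs(lora_rank - 16) / 16


def random_search(num_trials: int, seed: int = 0) -> tuple[dict, float]:
    """Sample random configurations and keep the best-scoring one."""
    rng = random.Random(seed)  # fixed seed makes the search reproducible
    best_params, best_score = None, -math.inf
    for _ in range(num_trials):
        params = {
            # Sample the learning rate log-uniformly, as is common for this parameter.
            "learning_rate": 10 ** rng.uniform(-5, -3),
            "lora_rank": rng.choice([4, 8, 16, 32, 64]),
        }
        score = validation_score(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


params, score = random_search(num_trials=20)
print(params, round(score, 3))
```

In a real tuning job, each call to the objective would be a full fine-tuning run, which is why managed services parallelize trials and use smarter strategies than pure random sampling.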

EleutherAI's GPT-Neo and GPT-J

EleutherAI offers open-source alternatives to GPT-3, such as GPT-Neo and GPT-J. These models can be fine-tuned using standard machine learning libraries like Hugging Face Transformers, providing flexibility and control over the fine-tuning process.

Weights & Biases (W&B)

W&B provides a suite of tools for experiment tracking, model management, and collaboration. It integrates well with popular ML frameworks and supports fine-tuning workflows, making it easier to manage and optimize large-scale model training projects.

Axolotl

Axolotl is an open-source tool designed for fine-tuning large language models with ease. It allows users to configure and deploy fine-tuning workflows in cloud environments, supporting large-scale and distributed setups. Axolotl’s flexibility and resource-efficient configuration make it a popular choice for developers working on custom LLM projects.

H2O LLM Studio

H2O LLM Studio is an open-source, no-code platform for fine-tuning large language models. Designed for adaptability, it can be integrated into cloud workflows, offering an intuitive interface for managing the entire fine-tuning process. H2O LLM Studio is ideal for users seeking a balance between GUI simplicity and cloud compatibility.

These tools and platforms, combined with those listed previously, provide a comprehensive suite of options for fine-tuning large language models. They cater to a variety of needs and technical environments.

Why Do You Need LLM Fine-Tuning Tools

Large language models (LLMs) are powerful but general-purpose. Fine-tuning tools tailor these models to specific tasks, improving performance and accuracy. Even the top LLMs benefit from advanced fine-tuning tools that make the process efficient, accurate, and adaptable.

Here’s why LLM fine-tuning tools are essential:

Task Customization

Fine-tuning tools adapt LLMs to domain-specific needs, such as contract analysis in legal services or customer service automation in retail, ensuring relevance and accuracy.

Enhanced Performance

Training on task-specific data improves the model’s predictions, making outputs more accurate and reliable.

Resource Efficiency

Fine-tuning uses pre-existing models, saving time and computational resources compared to training from scratch.

Adaptability

LLM fine-tuning techniques keep models relevant as data evolves: a model can be re-tuned on new information and scaled to growing datasets.

Multilingual and Multimodal Support

These tools extend LLMs to handle multiple languages and data types, making AI solutions more inclusive and versatile.

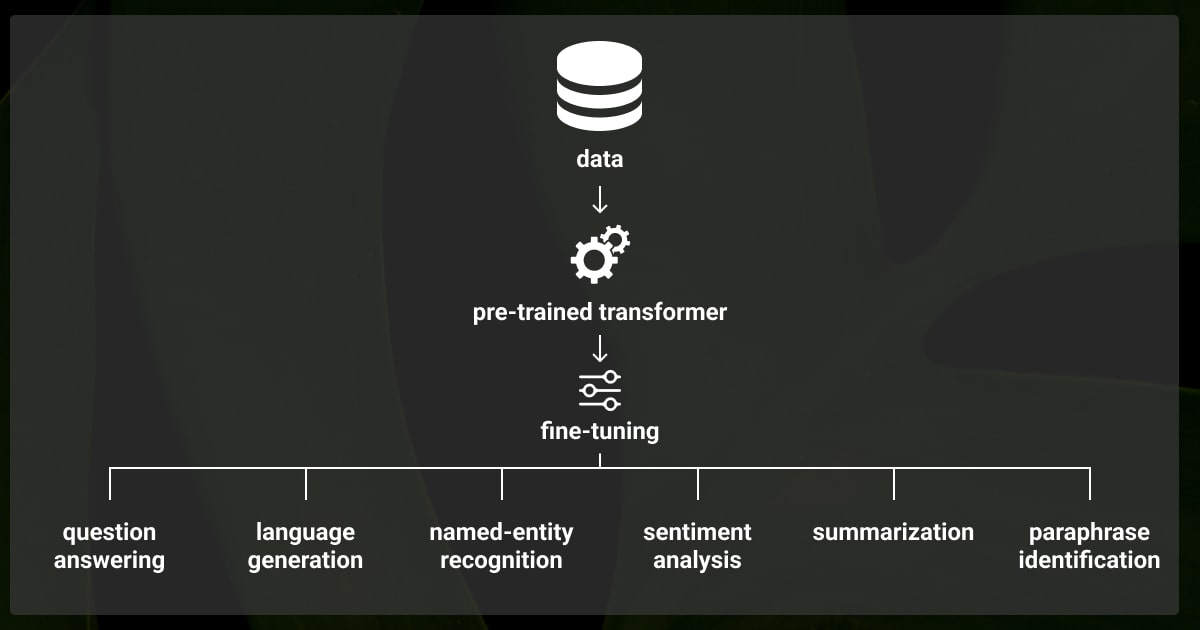

How to Use LLM Tools for Fine-Tuning

Here’s a quick guide to using LLM tools for the fine-tuning step:

Data Preparation

Collect and preprocess a relevant dataset to ensure quality and format consistency. Or let our expert team handle the task for you with our additional data services.
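
Here is a minimal example of the kind of cleanup this step involves, assuming records are simple prompt/response dictionaries (a hypothetical schema): normalize whitespace, drop empty or duplicate examples, and hold out a validation split.

```python
import random


def prepare(records: list[dict[str, str]], val_fraction: float = 0.1, seed: int = 42):
    """Clean hypothetical prompt/response records and split them into train and validation sets."""
    seen = set()
    cleaned = []
    for rec in records:
        # Collapse runs of whitespace so near-identical texts compare equal.
        prompt = " ".join(rec.get("prompt", "").split())
        response = " ".join(rec.get("response", "").split())
        if not prompt or not response:
            continue  # skip incomplete examples
        key = (prompt.lower(), response.lower())
        if key in seen:
            continue  # skip duplicates (case-insensitive)
        seen.add(key)
        cleaned.append({"prompt": prompt, "response": response})
    random.Random(seed).shuffle(cleaned)
    n_val = max(1, int(len(cleaned) * val_fraction)) if cleaned else 0
    return cleaned[n_val:], cleaned[:n_val]


raw = [
    {"prompt": "What is LoRA? ", "response": "A PEFT method."},
    {"prompt": "what is  LoRA?", "response": "a PEFT method."},  # duplicate after normalizing
    {"prompt": "", "response": "orphan answer"},                 # missing prompt
    {"prompt": "Define QLoRA.", "response": "LoRA on a 4-bit quantized base model."},
]
train, val = prepare(raw, val_fraction=0.5)
print(len(train), len(val))  # 1 1
```

Real pipelines usually add task-specific checks (length limits, label validation, PII filtering) on top of this, but the shape of the step is the same.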

Model Selection

Choose a suitable pre-trained model, considering size, architecture, and training data.

Environment Setup

Configure hardware and software for efficient training, using libraries like TensorFlow or PyTorch.

Training

Adjust hyperparameters and train the model on the specific dataset, monitoring progress with evaluation tools.
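
The learning rate is usually the most sensitive of these hyperparameters. A schedule widely used for fine-tuning is linear warmup followed by linear decay, the same shape as `get_linear_schedule_with_warmup` in Hugging Face Transformers. Below is a dependency-free sketch of that formula; the peak rate and step counts are illustrative.

```python
def linear_warmup_decay(step: int, peak_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate at `step`: ramps 0 -> peak_lr during warmup, then decays linearly to 0."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = total_steps - step
    return peak_lr * max(0.0, remaining / max(1, total_steps - warmup_steps))


for step in (0, 50, 100, 550, 1000):
    print(step, linear_warmup_decay(step, peak_lr=2e-4, warmup_steps=100, total_steps=1000))
```

Warmup avoids large, destabilizing updates while optimizer statistics are still uninitialized, and the decay lets training settle as it approaches the final step.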

Evaluation and Refinement

Assess the model’s performance, iterating on training to refine and enhance accuracy.
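
One common quantitative check for a fine-tuned language model is perplexity on a held-out set: the exponential of the average negative log-likelihood per token, PPL = exp(-(1/N) * sum of log p(token_i)). Lower is better. The sketch assumes you already have natural-log per-token probabilities from your model; the values below are illustrative.

```python
import math


def perplexity(token_logprobs: list[float]) -> float:
    """Perplexity from natural-log probabilities of the reference tokens."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    avg_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(avg_nll)


base = [math.log(0.25)] * 4        # model assigns 0.25 to each correct token
fine_tuned = [math.log(0.5)] * 4   # fine-tuned model is more confident
print(round(perplexity(base), 2), round(perplexity(fine_tuned), 2))  # 4.0 2.0
```

Perplexity only measures how well the model fits reference text, so it is usually paired with task-specific metrics and human review when refining a model.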

Deployment

Integrate the fine-tuned LLM model into your application environment for real-world use.

As such, fine-tuning tools are crucial for customizing LLMs to specific tasks, improving their efficiency, adaptability, and performance in diverse applications.

Experience the benefits of fine-tuning your LLMs with our expert NLP services. Optimize your models for better performance and accuracy.

About Label Your Data

If you choose to delegate LLM fine-tuning, run a free pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

No Commitment

Check our performance based on a free trial

Flexible Pricing

Pay per labeled object or per annotation hour

Tool-Agnostic

Work with any annotation tool, including your custom tools

Data Compliance

Work with a data-certified vendor: PCI DSS Level 1, ISO:2700, GDPR, CCPA

FAQ

What are the best tools to fine-tune an LLM?

What are LLM tools?

LLM tools are platforms, frameworks, or services designed to work with large language models. They support various tasks like training, fine-tuning, data annotation, and deployment. These tools enhance the capabilities of LLMs by making them more accurate, efficient, and tailored to specific tasks or industries.

What are LLM agent tools?

What are the key considerations for choosing between LLM service providers for fine-tuning?

When selecting between top LLM providers of fine-tuning services, consider their expertise in handling domain-specific tasks, the robustness of their data annotation and quality control processes, and their ability to integrate with your existing infrastructure. Providers like Labelbox, SuperAnnotate, and Label Your Data support iterative model runs, seamless integration with machine learning workflows, and flexible, cloud-agnostic deployment options.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.