Panoptic Segmentation: How It Works

Table of Contents

- TL;DR

- What Is Panoptic Segmentation?

- How Panoptic Segmentation Works

- Key Concepts: Stuff vs. Things

- Applications of Panoptic Segmentation

- Challenges and Limitations

- Popular Tools and Datasets for Panoptic Segmentation

- Evaluation Metrics

- Future Trends in Panoptic Segmentation

- About Label Your Data

- FAQ

TL;DR

- Panoptic segmentation combines semantic and instance segmentation to label every pixel and assign unique IDs to objects.

- Uses models to label broad areas (stuff) and identify objects (things), merging outputs into one segmentation.

- Applications include autonomous vehicles, medical imaging, robotics, AR/VR, and environmental monitoring.

- Challenges include high computational needs, dataset limitations, and difficulty adapting to new environments.

- Future trends focus on real-time use, multimodal data, and domain adaptation.

What Is Panoptic Segmentation?

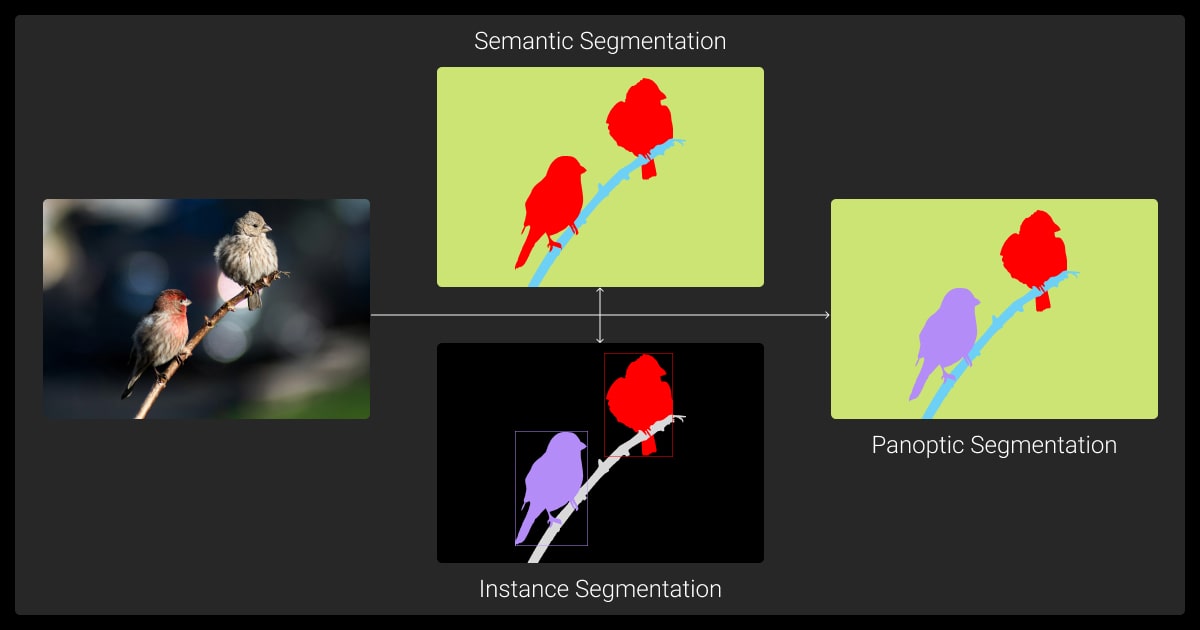

Panoptic segmentation is a way to analyze images by combining two tasks: semantic segmentation and instance segmentation. It labels every pixel in an image with a category and gives each object a unique ID when needed.

By labeling every pixel and separating objects, this technique provides a complete view of any scene. Whether it’s helping self-driving cars detect obstacles or assisting doctors with precise medical imaging, panoptic segmentation is a cornerstone of modern computer vision.

Solving Overlaps in Scene Analysis

Traditional image segmentation methods either labeled broad areas, like roads, or separated objects, like cars, but couldn’t do both at once. This left gaps in understanding complex scenes.

Panoptic segmentation was created to combine these approaches, giving every pixel both a category and, when needed, a unique object ID. Older methods handle parts of the problem separately:

| Type | What It Does | Problem |
| --- | --- | --- |
| Semantic Segmentation | Labels areas like "sky" or "road." | Can’t tell apart objects of the same type. |
| Instance Segmentation | Finds and separates objects like cars or people. | Ignores broad areas like the sky. |
| Panoptic Segmentation | Combines both, labeling everything in the scene. | Needs more computing power. |

Panoptic segmentation combines the best parts of two methods to give a clear and complete view of any scene.

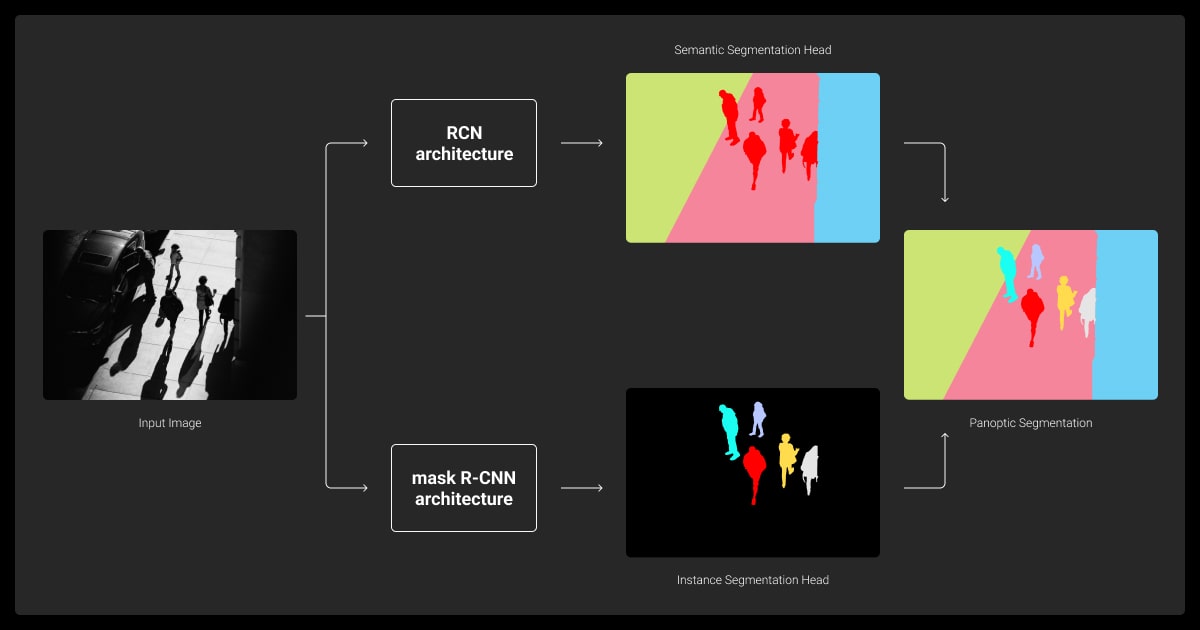

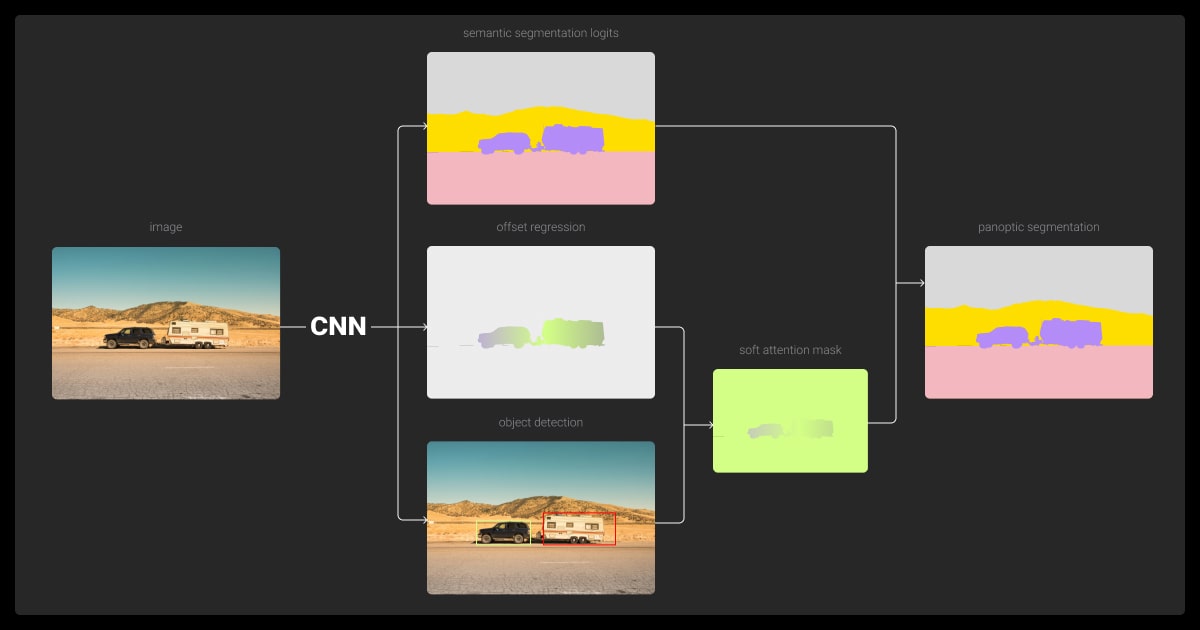

How Panoptic Segmentation Works

Panoptic segmentation combines two data annotation tasks—semantic segmentation and instance segmentation—into one process. It identifies the category of every pixel in an image, while also separating individual objects when needed.

The difference between panoptic segmentation and instance segmentation lies in the former’s ability to not only identify individual objects but also label entire image regions for complete scene understanding.

Steps in Panoptic Segmentation

- Input: An image is uploaded for analysis.

- Processing: Semantic segmentation labels broad areas, and instance segmentation identifies and separates objects.

- Fusion: Results from both processes are combined into one output.

- Output: Each pixel is assigned a class label and, for objects, a unique instance ID.

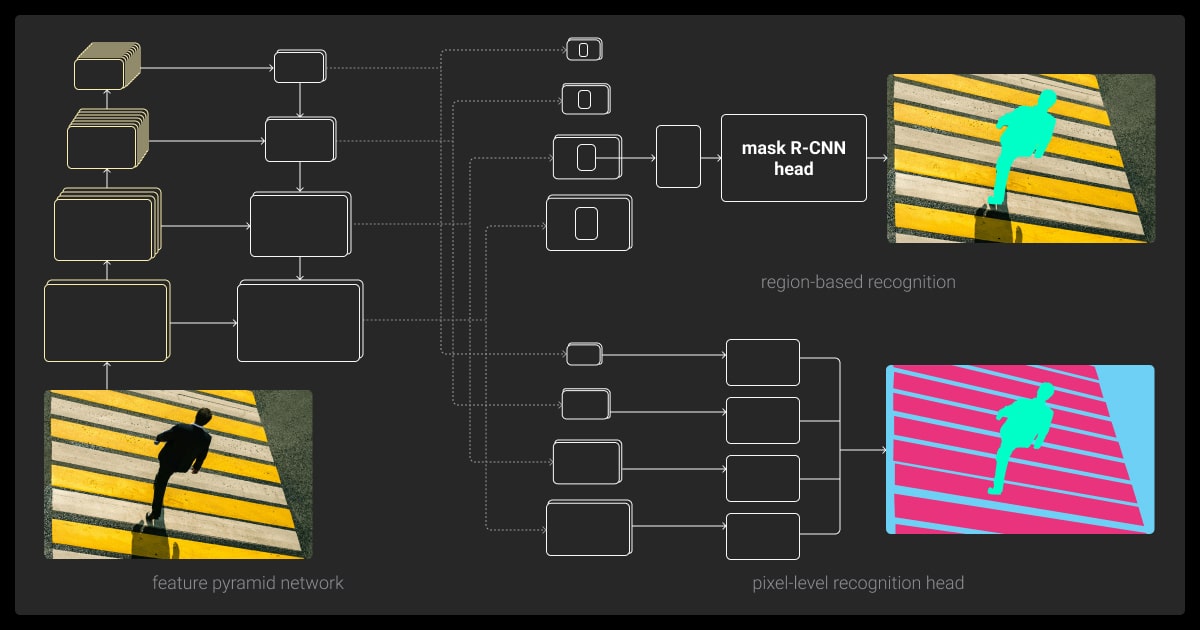

What Makes Panoptic Segmentation Work?

It relies on two key components:

- Semantic Model: Labels areas like roads, sky, or water.

- Instance Model: Finds and separates countable objects like cars or people.

These systems use deep learning techniques to analyze the image and produce accurate segmentation.

The Role of Fusion

Fusion combines semantic and instance outputs into a single image. For example: a car’s roof might overlap with the road below it. Fusion ensures the pixels belong to the correct category, such as “car” or “road,” without confusion.
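One simple way to picture fusion is the sketch below. It assumes the semantic model returns a 2D array of class IDs and the instance model returns a list of `(mask, class_id, score)` tuples. The function name `fuse`, the `label_divisor` parameter, and the `class_id * 1000 + instance_number` encoding are illustrative assumptions, not the API of any particular library. Higher-scoring instances claim contested pixels first, and stuff labels fill whatever is left.

```python
import numpy as np

def fuse(semantic, instances, stuff_ids, label_divisor=1000):
    """Merge a semantic map and instance masks into one panoptic map.

    semantic  : (H, W) int array of class IDs
    instances : list of (mask, class_id, score); mask is an (H, W) bool array
    stuff_ids : set of class IDs treated as stuff
    Each output pixel holds class_id * label_divisor + instance_number.
    Stuff pixels use instance_number 0; unassigned pixels stay at -1.
    """
    panoptic = np.full(semantic.shape, -1, dtype=np.int64)
    # Paste higher-scoring instances first so they win contested pixels.
    ordered = sorted(instances, key=lambda inst: inst[2], reverse=True)
    for number, (mask, class_id, _score) in enumerate(ordered, start=1):
        free = mask & (panoptic == -1)
        panoptic[free] = class_id * label_divisor + number
    # Fill the remaining pixels with stuff labels from the semantic map.
    for class_id in stuff_ids:
        free = (semantic == class_id) & (panoptic == -1)
        panoptic[free] = class_id * label_divisor
    return panoptic

# Toy 2x4 scene: two overlapping cars on a road (hypothetical class IDs).
ROAD, CAR = 0, 1
semantic = np.array([[ROAD, CAR, CAR, ROAD],
                     [ROAD, ROAD, ROAD, ROAD]])
car_a = np.zeros((2, 4), dtype=bool)
car_a[0, 1:3] = True
car_b = np.zeros((2, 4), dtype=bool)
car_b[0, 2:4] = True

panoptic_map = fuse(semantic, [(car_b, CAR, 0.6), (car_a, CAR, 0.9)], stuff_ids={ROAD})
print(panoptic_map.tolist())  # [[0, 1001, 1001, 1002], [0, 0, 0, 0]]
```

In this toy scene the two cars overlap on one pixel. The higher-scoring car keeps it, so every pixel ends up with exactly one label. Production systems typically use more careful rules, such as overlap thresholds and minimum segment areas.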

Why it’s effective:

- Provides a full view of scenes, covering categories and objects.

- Used in transportation, robotics, and medical imaging.

Panoptic segmentation works by combining two tasks into one clear output. This makes it a reliable tool for understanding complex images in real-world scenarios.

The real bottleneck emerges in what we call 'Contextual Ambiguity Zones'—those complex visual scenarios where object boundaries blur, overlap, and challenge traditional machine perception paradigms. Our models now handle segmentation scenarios with over 92.7% accuracy across highly complex, multi-object environments.

Key Concepts: Stuff vs. Things

Unlike image classification or object detection, which label whole images or draw boxes around objects, panoptic segmentation evaluates both the regions and the individual objects within a scene at the pixel level.

Panoptic segmentation labels everything in an image, putting pixels into two groups:

- Things: Countable objects like cars or people.

- Stuff: Areas like the sky or a field.

This distinction is key to how the method provides detailed and complete scene understanding. It helps with:

- Simplified Analysis: Differentiates objects and areas for clearer scene understanding.

- Improved Accuracy: Treats objects and regions separately for better precision.

- Dual Strategy: Combines object-level focus with area-wide labeling.

- Comprehensive View: Balances the big picture and detailed object detection.

This dual approach is what makes the method effective in many industries.
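In practice, the stuff/things split is usually recorded as a flag on each category in the label set. The sketch below is a minimal illustration with a hypothetical street-scene label set. The `Category` class, its field names, and the IDs are assumptions made for this example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Category:
    id: int
    name: str
    is_thing: bool  # True: countable, gets instance IDs; False: stuff

# Hypothetical label set for a street scene; the IDs are arbitrary.
CATEGORIES = [
    Category(0, "road", False),
    Category(1, "sky", False),
    Category(2, "car", True),
    Category(3, "person", True),
]

def split_categories(categories):
    """Return the names of thing classes and stuff classes separately."""
    things = [c.name for c in categories if c.is_thing]
    stuff = [c.name for c in categories if not c.is_thing]
    return things, stuff

things, stuff = split_categories(CATEGORIES)
print(things)  # ['car', 'person']
print(stuff)   # ['road', 'sky']
```

Keeping the flag on the category, rather than hard-coding it in the model, lets the same pipeline handle label sets that draw the line between things and stuff differently.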

Applications of Panoptic Segmentation

Panoptic segmentation is used in industries that require detailed image recognition. By labeling every pixel and distinguishing objects, it solves complex real-world challenges.

Self-Driving Cars

Identifies objects like vehicles and pedestrians (things) and labels areas like roads and sidewalks (stuff) to ensure safe navigation and real-time decision-making.

Medical Imaging

Segments tumor cells individually and labels surrounding tissues, improving precision in diagnostics and treatment planning.

Smart Cities

Monitors traffic, improves road safety, and supports waste management by sorting recyclables from trash.

Augmented and Virtual Reality

Identifies objects for interaction and labels environments for realistic simulations, benefiting training, gaming, and design.

Robotics

Enables robots to recognize and manipulate objects while understanding spatial contexts like tables or floors.

Environmental Monitoring

Geospatial annotation benefits from panoptic segmentation, enabling detailed labeling of satellite or aerial imagery for environmental monitoring.

One of the hardest parts of panoptic segmentation is handling instances with extreme occlusion or class ambiguity—like distinguishing between two overlapping objects of the same type or separating objects from complex backgrounds. Incorporating auxiliary depth information alongside RGB data has dramatically improved precision.

Panoptic segmentation’s ability to label every pixel with a category or instance makes it ideal for applications requiring both high detail and context.

Challenges and Limitations

Panoptic segmentation delivers detailed scene analysis but faces several challenges due to its complexity and resource demands.

High Computational Costs

Requires large datasets, detailed annotations, and powerful hardware, making real-time applications costly.

Dataset Limitations

Models trained on existing datasets like COCO and Cityscapes often don’t generalize well to unique environments like agriculture or underwater scenes.

Accuracy Issues

Overlapping objects and distinguishing “things” from “stuff” in complex scenes can reduce precision.

Real-Time Constraints

Balancing speed and accuracy remains difficult, especially for robotics and autonomous vehicles.

Generalization Problems

Models trained on specific domains struggle in new environments, and domain adaptation remains underdeveloped.

Manual Annotation Burden

Annotating every pixel with class and instance IDs is time-intensive and labor-intensive.

Efficient models like EfficientPS, synthetic datasets, transfer learning, and improved domain adaptation methods are helping mitigate these limitations. Collaborating with a data annotation company can simplify the process of creating labeled datasets for segmentation tasks.

One of the toughest challenges in panoptic segmentation is getting the balance right between labeling every pixel in an image (semantic segmentation) and separating individual objects of the same class (instance segmentation). Overlapping objects or blurry edges often lead to mislabeling, which throws off the results.

Popular Tools and Datasets for Panoptic Segmentation

Panoptic segmentation requires advanced tools and high-quality datasets to label every pixel accurately. The right combination ensures reliable performance across different tasks.

| Tool/Framework | Description | Key Features |
| --- | --- | --- |
| Detectron2 | Facebook AI’s library for detection and segmentation. | Pretrained models, modular design. |
| TensorFlow | Popular framework for custom segmentation models. | Flexible, strong community support. |
| PyTorch | Framework for developing and training segmentation models. | Dynamic computation graph, ease of use. |
| EfficientPS | Optimized architecture for panoptic segmentation. | Unified instance and semantic segmentation. |
| DeepLab | Semantic segmentation model adapted for panoptic tasks. | Atrous convolution for dense predictions. |

Key Datasets

| Dataset | Use Case | Details |
| --- | --- | --- |
| COCO | Diverse, everyday scenes. | 1.5M object instances; panoptic annotations cover 80 thing and 53 stuff categories. |
| Cityscapes | Urban street environments. | High-res images with 30 classes. |
| Mapillary Vistas | Street-level imagery. | Multiple resolutions, 65 annotated classes. |
| ADE20K | General-purpose scenes. | Dense annotations across 150 categories. |
| KITTI | Driving data. | Outdoor scenes from vehicle-mounted cameras. |
| PASTIS | Agriculture-specific tasks. | Satellite image time series with crop-type annotations for agricultural parcels. |

When working with large datasets, understanding image annotation pricing can help teams plan their labeling workflows efficiently.

Choosing the Right Resources

- Beginners: Start with Detectron2 and COCO for ease of use.

- Specialized Applications: Use PASTIS (agriculture) or Mapillary Vistas (street imagery).

- Advanced Customization: Opt for PyTorch or TensorFlow for more flexibility.

Combining robust tools with high-quality datasets ensures successful panoptic segmentation. Choose resources that align with your project’s goals and domain.

Evaluation Metrics

Each machine learning task needs metrics tailored to what the model predicts, and segmentation is no exception.

Panoptic segmentation uses metrics to evaluate how well models label every pixel in an image. These metrics measure both semantic segmentation (broad region labeling) and instance segmentation (object identification).

Panoptic Quality (PQ)

Panoptic quality is the primary metric for panoptic segmentation, combining two components:

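- Segmentation Quality (SQ): The average intersection over union (IoU) of correctly matched segments, measuring how closely each one fits the ground truth.

- Recognition Quality (RQ): An F1-style score that measures how many segments were correctly found, penalizing both extra and missing segments.

Key measurements:

- True Positives: Predicted segments matched to a ground-truth segment of the same class, typically with IoU above 0.5.

- False Positives: Extra predicted segments with no ground-truth match.

- False Negatives: Ground-truth segments with no matching prediction.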
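The pieces above combine as PQ = SQ × RQ, where SQ is the mean IoU over true positives and RQ = TP / (TP + 0.5·FP + 0.5·FN). The sketch below computes all three for a single class, assuming each segment is given as a boolean mask and that a prediction matches a ground-truth segment when their IoU exceeds 0.5. The function names and the mask representation are illustrative choices, and benchmark implementations also handle void regions and average over classes.

```python
import numpy as np

def iou(a, b):
    """Intersection over union of two boolean masks."""
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 0.0

def panoptic_quality(pred_masks, gt_masks, threshold=0.5):
    """Return (PQ, SQ, RQ) for one class, given lists of boolean masks."""
    matched_ious = []
    matched_gt = set()
    for pred in pred_masks:
        for gt_index, gt in enumerate(gt_masks):
            if gt_index in matched_gt:
                continue
            overlap = iou(pred, gt)
            if overlap > threshold:  # counts as a true positive
                matched_ious.append(overlap)
                matched_gt.add(gt_index)
                break
    tp = len(matched_ious)
    fp = len(pred_masks) - tp  # predictions with no match
    fn = len(gt_masks) - tp    # ground-truth segments never matched
    if tp == 0:
        return 0.0, 0.0, 0.0
    sq = sum(matched_ious) / tp
    rq = tp / (tp + 0.5 * fp + 0.5 * fn)
    return sq * rq, sq, rq

def segment(start, stop, size=10):
    """Helper for the toy example: a 1D mask covering [start, stop)."""
    mask = np.zeros(size, dtype=bool)
    mask[start:stop] = True
    return mask

gt_masks = [segment(0, 4), segment(6, 10)]
pred_masks = [segment(0, 3), segment(4, 6)]  # one good match, one spurious
pq, sq, rq = panoptic_quality(pred_masks, gt_masks)
print(pq, sq, rq)  # 0.375 0.75 0.5
```

Here one prediction matches with IoU 0.75, one prediction is spurious, and one ground-truth segment is missed, so SQ is 0.75, RQ is 0.5, and PQ is 0.375.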

Other Metrics

Additional metrics are used for more specific evaluations:

- IoU (Intersection over Union): Measures overlap between predicted and ground truth regions; common in semantic segmentation.

- Average Precision (AP): Focuses on object detection accuracy; popular in instance segmentation.

- Mean IoU (mIoU): Averages IoU scores across all classes, helpful for large-scale datasets.
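As a rough illustration of IoU and mIoU, the sketch below compares a predicted label map with a ground-truth map, assuming both are integer arrays of class IDs with the same shape. The function name `mean_iou` and the choice to skip classes absent from both maps are assumptions of this example, and evaluation toolkits differ on such details.

```python
import numpy as np

def mean_iou(pred, target, num_classes):
    """Return per-class IoU and their mean for two label maps."""
    per_class = {}
    for class_id in range(num_classes):
        pred_c = pred == class_id
        target_c = target == class_id
        union = np.logical_or(pred_c, target_c).sum()
        if union == 0:
            continue  # class absent from both maps; leave it out of the mean
        intersection = np.logical_and(pred_c, target_c).sum()
        per_class[class_id] = float(intersection / union)
    miou = sum(per_class.values()) / len(per_class) if per_class else 0.0
    return per_class, miou

# Toy example: class 0 on the left, class 1 on the right, one pixel wrong.
target = np.array([[0, 0, 1, 1],
                   [0, 0, 1, 1]])
pred = np.array([[0, 0, 0, 1],
                 [0, 0, 1, 1]])

per_class, miou = mean_iou(pred, target, num_classes=2)
print(per_class)       # {0: 0.8, 1: 0.75}
print(round(miou, 3))  # 0.775
```

A single mislabeled pixel lowers the IoU of both classes, since it adds to the union of one class and removes from the intersection of the other.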

Challenges in Evaluation

- Imbalanced Data: Some datasets have more "stuff" than "things," skewing results.

- Small Object Detection: Smaller objects often lower RQ due to detection difficulty.

- Overlapping Classes: Consistent labeling becomes harder when objects overlap.

Models trained on labeled datasets often struggle to generalize when applied to unlabeled data, highlighting the importance of comprehensive training data.

When to Use Each Metric

| Metric | Use case |
| --- | --- |
| PQ | For a comprehensive evaluation of both semantic and instance segmentation. |
| IoU/mIoU | When focusing on large, continuous regions (e.g., sky, roads). |
| AP | For applications emphasizing object detection, like autonomous vehicles. |

Metrics like PQ simplify panoptic segmentation evaluation by combining segmentation quality and recognition quality into a unified score. Complementary metrics like IoU and AP add deeper insights for specific use cases.

Future Trends in Panoptic Segmentation

Panoptic segmentation is evolving with advancements aimed at improving efficiency, real-time performance, and adaptability.

Unified Models

End-to-end architectures like EfficientPS combine semantic and instance segmentation seamlessly.

Real-Time Use

Optimized for fast decisions in applications like autonomous vehicles and robotics.

Multimodal Integration

Incorporating data from LiDAR, depth maps, and thermal imaging for better accuracy in complex environments.

Domain Adaptation

Training models to perform well in new settings without extensive data.

Video Segmentation

Extending capabilities to action recognition and object tracking in video data.

Future improvements in efficiency and adaptability will expand panoptic segmentation’s use across industries, enabling more precise and versatile applications. Many companies now outsource panoptic segmentation tasks to specialized data annotation services for efficiency and accuracy.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance based on a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, even your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

What is the difference between semantic and panoptic segmentation?

Semantic segmentation labels each pixel with a category, while panoptic segmentation adds an instance ID to distinguish objects within the same category, providing a more detailed scene understanding.

What are the classes of panoptic segmentation?

Panoptic segmentation divides pixels into two types: "things" (countable objects like cars or people) and "stuff" (amorphous regions like sky or grass).

What is a panoptic model?

A panoptic model is a machine learning model designed to perform panoptic segmentation, combining semantic and instance segmentation into one unified output.

What is the format for panoptic segmentation?

Panoptic segmentation outputs an image where each pixel has a class label (e.g., “road” or “car”) and, for objects, a unique instance ID to identify individual items.
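One widely used on-disk layout is the COCO panoptic format. It stores each pixel's segment ID in the three channels of a PNG (ID = R + 256·G + 256²·B) and keeps a separate list that maps segment IDs to categories. The sketch below shows that encoding on a tiny array. The helper names and the example IDs are assumptions for illustration, and the `segments_info` entries are reduced to two fields.

```python
import numpy as np

def id_to_rgb(segment_ids):
    """Encode an (H, W) array of segment IDs as an (H, W, 3) uint8 image."""
    ids = np.asarray(segment_ids, dtype=np.int64)
    rgb = np.zeros(ids.shape + (3,), dtype=np.uint8)
    for channel in range(3):
        rgb[..., channel] = (ids % 256).astype(np.uint8)
        ids = ids // 256
    return rgb

def rgb_to_id(rgb):
    """Decode an (H, W, 3) image back into an (H, W) array of segment IDs."""
    rgb = np.asarray(rgb, dtype=np.int64)
    return rgb[..., 0] + 256 * rgb[..., 1] + 256 * 256 * rgb[..., 2]

# Hypothetical 2x2 panoptic map and its per-segment metadata.
segment_ids = np.array([[1001, 1001],
                        [0, 70000]])
segments_info = [
    {"id": 0, "category_id": 0},      # e.g. road (stuff)
    {"id": 1001, "category_id": 1},   # e.g. first car (thing)
    {"id": 70000, "category_id": 1},  # e.g. second car (thing)
]

rgb = id_to_rgb(segment_ids)
print(rgb[0, 0].tolist())  # [233, 3, 0]
print(np.array_equal(rgb_to_id(rgb), segment_ids))  # True
```

Storing IDs in an image keeps the per-pixel data compact, while the class of each segment lives in the accompanying metadata rather than in the pixel values themselves.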

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.