Data Collection Tools: Best Options for AI Teams

Table of Contents

- TL;DR

- Essential Features in Data Collection Tools for AI

- Types of Data Collection Tools

- What to Look For in Data Collection Tools

- Top Data Collection Tools for AI Teams

- Selecting the Right Data Collection Tool for Your AI Project

- Enhancing Data Collection with Automation

- When to Consider Outsourcing Data Collection

- About Label Your Data

- FAQ

TL;DR

- Modern data collection tools must support a wide range of data types, from text and images to video and audio.

- Look for options that balance scalability and performance to manage growing datasets efficiently.

- Carefully integrate these with your existing ML pipeline for frictionless development.

- Tools like SurveyCTO, Zapier, and Airtable streamline collection, organization, and ingestion.

- Automation and outsourcing are critical strategies for scaling high-quality data acquisition.

Essential Features in Data Collection Tools for AI

Data is the foundation of any successful machine learning system, and you need lots of it. But quality matters more than quantity, which means you need to up your game when it comes to collecting your dataset.

Data collection tools make this much simpler. They streamline the process and are a big upgrade over manual scraping and messy spreadsheets. Whether you’re looking for data collection tools in healthcare or aggregating video feeds for object detection, you need to choose the right type.

Here’s what to look for.

Support for Diverse Data Types

AI teams rarely work with just one kind of data. You might have to label images with image annotation tools for a vision model one day and collect user behavior logs for a recommendation engine the next.

That’s why the best data collection tools can handle a range of formats, including text, images, video, audio, and structured or unstructured tabular data.

The more flexible AI data collection tools are, the more use cases they support. You should look for:

- Support for multi-modal inputs

- Compatibility with JSON, CSV, or Parquet outputs

- Native previewers for media files
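
To make the output-format point concrete, here is a minimal sketch that serializes the same collected records as JSON Lines and as CSV using only the Python standard library. The record fields (`modality`, `content`, `media_path`) are hypothetical and not taken from any particular tool. Parquet is left out because it needs a third-party library such as pyarrow.

```python
import csv
import io
import json

# Hypothetical multi-modal records: text fields plus a reference to a media file.
records = [
    {"id": 1, "modality": "text", "content": "How do I reset my password?", "media_path": ""},
    {"id": 2, "modality": "image", "content": "", "media_path": "uploads/cat_001.jpg"},
]

def to_jsonl(rows):
    """Serialize rows as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(row, ensure_ascii=False) for row in rows)

def to_csv(rows):
    """Serialize rows as CSV with a header taken from the first row's keys."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

jsonl_text = to_jsonl(records)
csv_text = to_csv(records)
```

Keeping media as a path or URL reference rather than embedding it is one common way to let a single tabular export cover several modalities.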

Scalability and Performance

Once you set up a promising proof of concept, you need large amounts of data, fast. If your survey data collection tool can handle 500 responses but you get 50,000, it’s likely to slow down or fail.

You need to make sure that your data collection platform supports:

- Large volumes

- High-speed ingestion

- Concurrent submissions

You might want to look into cloud-native tools that incorporate an auto-scaling infrastructure. You should also consider digital data collection tools that offer offline data collection and later sync capabilities if your sources are distributed across low-connectivity environments.
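
Offline collection with later sync usually comes down to a local queue that is drained once a connection is available. The sketch below illustrates that pattern with SQLite. `OfflineQueue` and the `upload` callback are hypothetical names, and the code assumes a failed upload raises `OSError` (which covers `ConnectionError`). Real tools will differ in the details.

```python
import json
import sqlite3

class OfflineQueue:
    """Stores submissions locally and uploads them when a connection is available."""

    def __init__(self, path=":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS pending (id INTEGER PRIMARY KEY, payload TEXT NOT NULL)"
        )

    def save(self, submission):
        """Persist one submission locally, regardless of connectivity."""
        self.db.execute("INSERT INTO pending (payload) VALUES (?)", (json.dumps(submission),))
        self.db.commit()

    def pending_count(self):
        """Number of submissions still waiting to be uploaded."""
        return self.db.execute("SELECT COUNT(*) FROM pending").fetchone()[0]

    def sync(self, upload):
        """Upload pending rows in order; delete only the rows that uploaded successfully."""
        synced = 0
        rows = self.db.execute("SELECT id, payload FROM pending ORDER BY id").fetchall()
        for row_id, payload in rows:
            try:
                upload(json.loads(payload))
            except OSError:
                break  # still offline: keep the remaining rows for the next attempt
            self.db.execute("DELETE FROM pending WHERE id = ?", (row_id,))
            self.db.commit()
            synced += 1
        return synced
```

Deleting a row only after its upload succeeds means a dropped connection never loses data, at the cost of a possible duplicate if the upload succeeded but the confirmation was lost.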

Integration Capabilities

Most companies cobble together various AI workflows because they work with so many different tools. Any AI data collection system you choose should slot into your stack, not fight it.

Look for tools with REST APIs, webhook support, and native connectors for platforms like AWS, Google Cloud, or data warehouses like Snowflake. The fewer manual transfers you do, the lower the chance of errors creeping in.

Also consider support for event-driven architectures and stream processing frameworks like Kafka if you're working in real-time environments.

BigQuery centralizes data from multiple sources and scales automatically, eliminating infrastructure limits. Its built-in ML tools cut down on ETL steps and speed up model deployment.

Types of Data Collection Tools

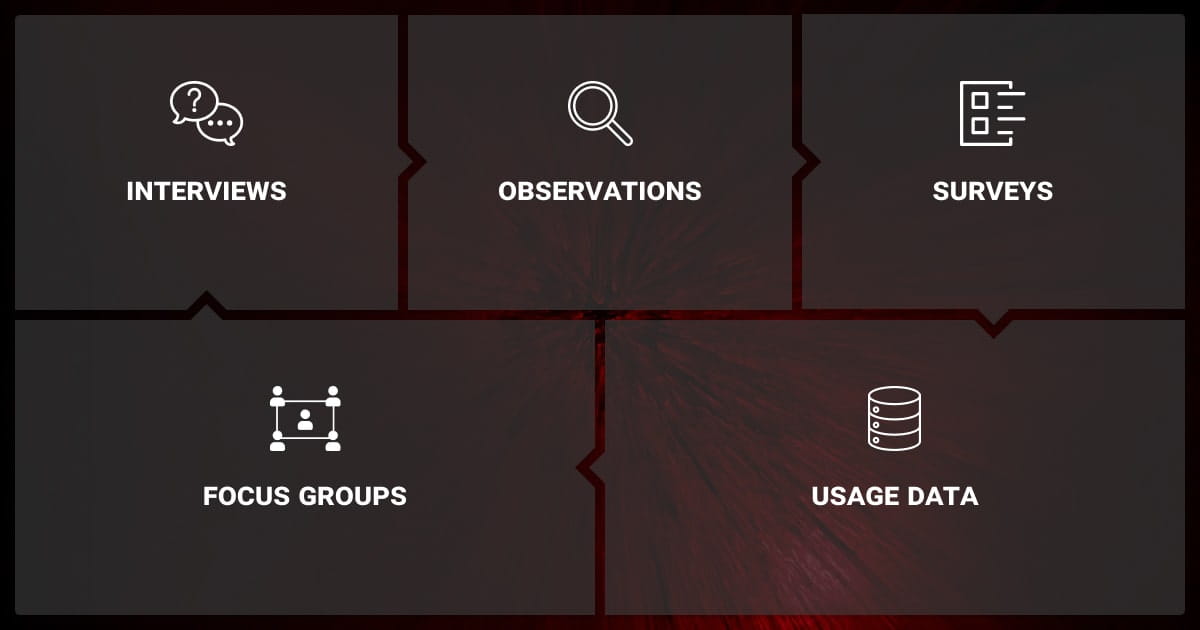

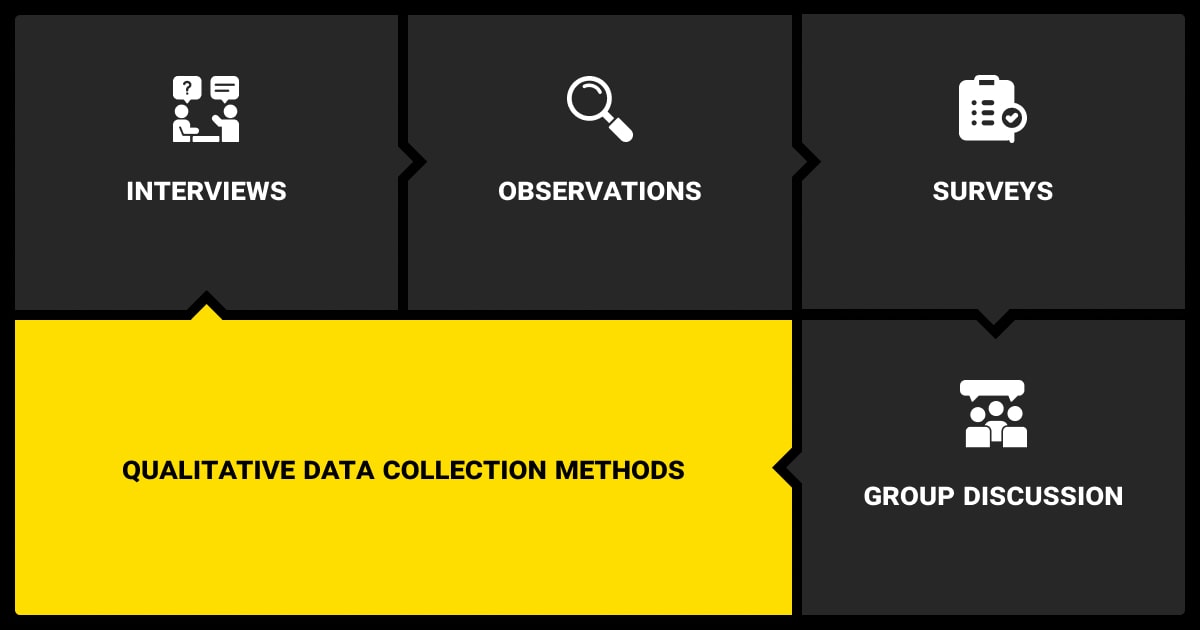

Before jumping into specific tools, we should consider the different types of collection methodologies. They generally fall into two camps: qualitative and quantitative.

Quantitative data collection tools measure tangible metrics, for example, how many teenagers use a particular product.

Qualitative data collection tools, on the other hand, capture less tangible things like the reasons behind the numbers, for example, why teenagers choose those products. That’s why they’re sometimes called behavioral data collection tools as well.

For the purposes of this article, we’ll cover techniques from both camps, including:

Interviews

Useful for gathering in-depth feedback or subjective input, especially in user research or annotation quality studies.

Observation

A great fit for behavioral data, often gathered via screen recording tools, field notes, or video.

Focus Groups

Ideal for early-stage dataset validation or interpreting model output with multiple stakeholders.

Usage Data

Logged through product analytics, backend event tracking, or embedded scripts.

Surveys

The go-to for gathering structured responses at scale; highly customizable and easily automated.

You’ll need to choose the best method or combination depending on your project and the data you need. You’ll also have to consider how it slots into your downstream tasks, and whether bringing in data collection services is a smarter option than managing everything in-house, especially when speed or scale becomes a bottleneck.

What to Look For in Data Collection Tools

These tools must ensure your data stays clean, traceable, and legally safe throughout the ML lifecycle.

Build in Quality from the Start

Look for schema validation, input rules, and QA checks. Features like uncertainty sampling or auto-flagging help focus annotation on the most useful data.
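
As a rough illustration of schema validation and input rules, the sketch below checks each incoming record against a small hand-written schema before it enters the dataset. The field names, the allowed labels, and the `validate` helper are all hypothetical. In practice you would mirror whatever schema your own pipeline expects.

```python
# Hypothetical schema: field name -> (expected type, required?)
SCHEMA = {
    "image_url": (str, True),
    "label": (str, True),
    "confidence": (float, False),
}
ALLOWED_LABELS = {"cat", "dog", "other"}

def validate(record):
    """Return a list of human-readable problems; an empty list means the record passes."""
    problems = []
    # Schema check: required fields are present and every present field has the right type.
    for name, (expected_type, required) in SCHEMA.items():
        if name not in record:
            if required:
                problems.append(f"missing required field: {name}")
            continue
        if not isinstance(record[name], expected_type):
            problems.append(f"{name} should be {expected_type.__name__}")
    # Input rules that go beyond types.
    if isinstance(record.get("label"), str) and record["label"] not in ALLOWED_LABELS:
        problems.append(f"unknown label: {record['label']}")
    confidence = record.get("confidence")
    if isinstance(confidence, float) and not 0.0 <= confidence <= 1.0:
        problems.append("confidence must be between 0 and 1")
    return problems
```

Returning every problem at once, rather than failing on the first, tends to make rejected submissions easier to fix in one pass.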

Track Changes and Versions

Choose tools that log who collected what and when. Versioning support or integrations with DVC/MLflow make retraining and audits easier.
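
If your tool doesn’t log this for you, a lightweight audit trail is easy to sketch. The example below records who collected which content, from where, and when, and identifies the content by its SHA-256 hash. The function names and the log format are assumptions for illustration, and this is independent of how DVC or MLflow track versions.

```python
import hashlib
import json
from datetime import datetime, timezone

def provenance_entry(payload: bytes, collector: str, source: str, collected_at=None):
    """Build an audit record: who collected which content, from where, and when."""
    collected_at = collected_at or datetime.now(timezone.utc)
    return {
        "sha256": hashlib.sha256(payload).hexdigest(),  # identifies the exact bytes collected
        "collector": collector,
        "source": source,
        "collected_at": collected_at.isoformat(),
    }

def append_to_log(log_path, entry):
    """Append one entry as a JSON line so the log stays append-only and easy to diff."""
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(entry, sort_keys=True) + "\n")
```

Because the hash depends only on the content, the same file collected twice produces the same identifier, which helps spot duplicates during audits.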

Stay Compliant by Design

If handling personal data, ensure encryption, access control, and anonymization are built in. GDPR, HIPAA, or SOC 2 compliance is critical when outsourcing or using external annotation tools.
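
One building block here is replacing direct identifiers with keyed hashes before data leaves the collection system. The sketch below uses HMAC-SHA256 from the standard library. The `IDENTIFIER_FIELDS` set and the `pseudonymize` helper are hypothetical. Note that this is pseudonymization, not full anonymization: anyone holding the key can re-link records, and it does not by itself make a dataset compliant.

```python
import hashlib
import hmac

# Hypothetical set of fields treated as direct identifiers.
IDENTIFIER_FIELDS = {"email", "phone"}

def pseudonymize(record, secret_key: bytes):
    """Replace identifier fields with keyed hashes; leave other fields unchanged."""
    cleaned = {}
    for name, value in record.items():
        if name in IDENTIFIER_FIELDS and value is not None:
            digest = hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256)
            cleaned[name] = digest.hexdigest()
        else:
            cleaned[name] = value
    return cleaned
```

Using a keyed hash rather than a plain one keeps the mapping stable across records, so joins still work, while making it harder to recover the original value by hashing guesses.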

Top Data Collection Tools for AI Teams

There are a lot of data collection tools in research, but we’ve zeroed in on the following because of their:

- Versatility

- Ease of use

- Adoption in AI workflows

SurveyCTO

This comprehensive platform is among the top mobile data collection tools. It’s secure, stable, and optimized for fieldwork. Organizations like The World Bank and Oxfam use it.

It may work for you if you need to gather feedback from a lot of people across different geographical areas. You can:

- Collect data online or offline

- Enforce input validation

- Pipe everything into cloud storage or data annotation tools

Zapier

Zapier isn’t one of your typical tools for data collection. But it does play a powerful role in automating collection and transfer workflows. It connects thousands of apps, allowing you to auto-ingest:

- Form responses

- Webhook payloads

- API outputs

You can use it to automate responses from Typeform to Airtable, pipe events into a storage bucket, or log structured data to Google Sheets in real time.

That said, Zapier is best suited for automating low-frequency, structured data transfers. It’s not designed for real-time ingestion at ML scale.

Google Forms

This is one of the easiest free data collection tools to use. It allows you to create surveys and collect structured data. You can then drop the results seamlessly into Google Sheets.

It doesn’t offer advanced conditional logic or media uploads at scale, but it’s good for quick data gathering. Say, for example, an LLM fine-tuning service needs to train a model on questions a customer might ask. It could run a quick survey on FAQs.

It’s a great tool for:

- Internal data annotation

- Gathering machine learning and training data

- Feedback loops

- Survey distribution

What’s even better is that it’s so simple to use.

Microsoft Forms

This online data collection tool is similar to Google Forms but plugs into the Microsoft Office ecosystem. If you’re already using Outlook, SharePoint, or Excel, it makes sense to use Microsoft Forms.

You simply pull the results into Power BI, sync with Excel macros, or automate the flows via Power Automate.

Microsoft Forms runs on Microsoft’s cloud security, which is a plus if your organization already works within that ecosystem.

*Neither Google Forms nor Microsoft Forms is suitable for collecting sensitive data that requires GDPR or HIPAA compliance, or for large-scale automation.

Airtable

While not a native data ingestion tool, Airtable excels at organizing datasets, assigning labeling tasks, and tracking data QA progress.

Airtable is the ideal mix of spreadsheets and databases. It has a clean, collaborative interface that is easy to use. You can:

- Create dynamic data schemas

- Use formulas

- Assign fields to teammates

- View submissions as grids, galleries, or kanban boards

LLM fine-tuning and data annotation services use it for organizing training data, tagging content, or building custom dashboards. It’s also very extensible.

Dataloop’s active learning helped us cut annotation time by 50% by focusing only on uncertain samples. This made labeling faster and more targeted for rare-event detection.
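
Focusing labeling effort on uncertain samples is often done with uncertainty sampling. The sketch below shows one common variant, ranking samples by the entropy of the model’s predicted class probabilities. It is a generic illustration rather than any vendor’s implementation, and the sample ids and probabilities are made up.

```python
import math

def entropy(probabilities):
    """Shannon entropy of a predicted class distribution; higher means less certain."""
    return -sum(p * math.log(p) for p in probabilities if p > 0)

def most_uncertain(predictions, k):
    """Return the ids of the k samples whose predictions have the highest entropy."""
    ranked = sorted(predictions, key=lambda item: entropy(item[1]), reverse=True)
    return [sample_id for sample_id, _ in ranked[:k]]

# Hypothetical model outputs: (sample id, class probabilities)
predictions = [
    ("img_001", [0.98, 0.01, 0.01]),
    ("img_002", [0.40, 0.35, 0.25]),
    ("img_003", [0.70, 0.20, 0.10]),
]
```

Here `most_uncertain(predictions, 2)` would send `img_002` and `img_003` to annotators first, since the model is already confident about `img_001`.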

Selecting the Right Data Collection Tool for Your AI Project

Choosing the right data collection tool isn’t just about checking boxes. You need to look at your data type, team size, and operational environment.

Assessing Project Requirements

Start with the basics:

- What kind of data are you collecting?

- Are you surveying users, logging software events, or scraping product images?

- Will this be a one-time project or an ongoing stream?

If you need multiple input types, like collecting photos and metadata from mobile users for image recognition software, you’ll need more advanced tools like SurveyCTO or Airtable.

Evaluating Tool Features

Look at:

- Data type support for your machine learning datasets

- How easily the tool integrates with your existing stack

- Whether it offers API or webhook access

- How easy it will be for your team to use

If you have a team of machine learning engineers creating LLM fine-tuning tools, you can choose a more technical tool. If your people have no programming experience, you’ll want something that’s more intuitive.

Either way, you want a polished UI to avoid frustration.

Considering Budget Constraints

You need to balance the features you need with your budget. Google Forms and Airtable have free tiers, whereas SurveyCTO and Qualtrics are more expensive.

The trade-off must be worth it for your company. Are you getting more security, uptime, or better analytics? If not, keep looking.

Enhancing Data Collection with Automation

Manual collection can stall your project. To scale efficiently, you should automate where you can.

Leveraging APIs for Data Ingestion

APIs are the backbone of modern data collection. Whether you’re capturing sensor logs, syncing responses from a chatbot, or feeding app usage data into a model, you need robust API support.

Tools like SurveyCTO, Airtable, and even Microsoft Forms (via Power Automate) allow you to hook data directly into:

- Training pipelines

- CI/CD flows

- Cloud storage
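
As a minimal sketch of API-based ingestion, the code below pulls every record from a paginated REST endpoint using only the standard library. The response shape (a `records` list plus a `next` URL) is an assumption for illustration and does not describe any specific tool’s API. Check your tool’s documentation for its real pagination scheme and authentication.

```python
import json
import urllib.request

def fetch_json(url):
    """Fetch one page of results over HTTP and decode it as JSON."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)

def collect_all(first_url, fetch=fetch_json):
    """Follow 'next' links until the API reports no further pages, yielding each record."""
    url = first_url
    while url:
        page = fetch(url)
        yield from page["records"]
        url = page.get("next")  # hypothetical pagination field
```

Passing the fetch function as a parameter keeps the pagination logic testable without a network connection, and lets you swap in a client that adds API keys or retries.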

Implementing Webhooks for Real-Time Data

You can use webhook integrations to enable real-time data collection. A webhook makes it possible to react to events instantaneously, for example, by triggering a retraining job when there’s enough new data.

They’re essential for use cases that require real-time updates, such as:

- Fraud detection

- Customer support automation

- Streaming analytics

Webhooks enable push-based updates, but aren’t ideal for high-frequency or latency-sensitive systems. In such cases, consider pub/sub or streaming pipelines.
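
The retraining example mentioned earlier can be sketched as a small webhook receiver that counts incoming records and fires a callback once a threshold is reached. The payload field `new_records`, the class names, and the port are hypothetical, and the sketch omits things a production endpoint would need, such as signature verification and authentication.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

class RetrainTrigger:
    """Counts newly collected records and fires a callback once a threshold is reached."""

    def __init__(self, threshold, on_ready):
        self.threshold = threshold
        self.on_ready = on_ready
        self.pending = 0

    def record_event(self, event):
        """Add the event's record count; return True if the callback was fired."""
        self.pending += int(event.get("new_records", 0))
        if self.pending >= self.threshold:
            batch_size, self.pending = self.pending, 0
            self.on_ready(batch_size)
            return True
        return False

def make_handler(trigger):
    """Build a request handler that passes each webhook payload to the trigger."""
    class WebhookHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            try:
                event = json.loads(self.rfile.read(length) or b"{}")
                trigger.record_event(event)
            except (ValueError, AttributeError, TypeError):
                self.send_response(400)  # malformed or unexpected payload
            else:
                self.send_response(204)  # accepted, nothing to return
            self.end_headers()
    return WebhookHandler

def serve(trigger, port=8000):
    """Start the receiver; blocks until interrupted."""
    HTTPServer(("", port), make_handler(trigger)).serve_forever()
```

Keeping the threshold logic in `RetrainTrigger`, separate from the HTTP handler, means the same logic could later sit behind a pub/sub consumer if webhook volume grows.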

Automated event tracking reduced our data processing time by 75% and improved dataset quality. Reliable automation is key to scaling ML data pipelines efficiently.

When to Consider Outsourcing Data Collection

Even with the best tools, internal teams hit limits—bandwidth, budget, or know-how. That’s when it’s time to outsource.

Resource Limitations

If in-house capabilities are insufficient, outsourcing can provide access to specialized expertise and infrastructure.

If your engineers are spending more time managing data collection than modeling, you may need external help. Dedicated data vendors can:

- Deploy field teams

- Handle edge-case collection

- Build custom pipelines

This allows your team to stay focused.

Quality Assurance

External providers often have specialized, hard-to-replicate QA processes like:

- Double annotation

- Consensus scoring

- Anomaly detection

This improves the reliability of your datasets and reduces downstream debugging.
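
To show what consensus scoring can look like in its simplest form, the sketch below takes the labels several annotators gave the same item, picks the majority label, and flags the item for review when agreement is low. The `consensus` function and the 0.66 threshold are illustrative assumptions. Providers typically use more elaborate schemes.

```python
from collections import Counter

def consensus(labels, min_agreement=0.66):
    """Return (majority label, agreement ratio, needs_review) for one item's annotations.

    `labels` must be a non-empty list with one label per annotator.
    """
    counts = Counter(labels)
    top_label, top_count = counts.most_common(1)[0]
    agreement = top_count / len(labels)
    return top_label, agreement, agreement < min_agreement
```

With two annotators who disagree, agreement is 0.5 and the item is flagged, which is the double-annotation case where a third opinion is usually requested.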

Scalability Needs

Some use cases—like collecting annotated video from multiple geographies—are just too big to handle alone. You can outsource to effectively scale large, diverse datasets while maintaining quality and compliance.

A professional data annotation company will also use reliable data anonymization tools, helping you protect your respondents’ data.

Outsourcing also reduces risks tied to compliance and localization, especially when working across jurisdictions with data sovereignty laws.

If you’re evaluating options for scaling data collection or labeling, speak to us to get tailored data annotation pricing that matches your project scope and data types.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance based on a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, even your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

What are data collection tools?

Data collection tools are platforms or software that help you gather, organize, and store data for analysis or model training. The best tools support various data types, integrate smoothly with your ML pipeline, and scale as your needs grow.

What are the 5 ways of collecting data?

The main methods include interviews, observations, focus groups, usage logs, and surveys. Each one serves a different purpose depending on whether you need structured responses, behavioral insights, or user feedback.

What are the 4 types of data collection?

You’ll typically work with quantitative, qualitative, primary, and secondary data. Some projects need original data from scratch, while others rely on pre-existing sources or a combination of both.

What is a data collector tool?

It’s a tool built to capture raw information automatically from different sources—like user input, sensors, software events, or online platforms. It simplifies the process so you can collect structured data without manual work.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.