How to Train an LLM: Complete Workflow Guide

Table of Contents

- TL;DR

- Before You Begin: Planning the LLM Training Pipeline

- How to Train an LLM: Data Strategy

- Unsupervised Pre-Training

- How to Train an LLM with Supervised Fine-Tuning

- Reinforcement Learning from Human Feedback (RLHF)

- How to Train an LLM Efficiently: Key Techniques

- Deployment and Ongoing Monitoring

- Common Pitfalls and How to Avoid Them

- What’s Next in How to Train an LLM

- About Label Your Data

- FAQ

TL;DR

- When training a language model, you need a well-structured pipeline that includes data collection, preprocessing, model selection, and optimization techniques.

- Improve your model’s performance by starting with unsupervised pre-training and then fine-tuning the pretrained model.

- Reinforcement learning from human feedback (RLHF) helps align the model’s answers with human preferences.

- You’ll need a highly efficient system and careful monitoring to keep the costs under control.

Before You Begin: Planning the LLM Training Pipeline

You can’t start training without a clear plan—or you’ll burn through time and budget fast. Set clear objectives, define the scope, and understand the trade-offs you need to make before you even start preparing your dataset.

So, let’s look at this before we consider how to build an LLM.

Start by Defining Objectives

Before you start, decide what tasks or domains your LLM will serve. Different types of LLMs have different needs.

General-purpose models like GPT-4 and LLaMA need massive training datasets and extensive compute. In return, they can handle a much wider range of tasks.

With specialized models like medical or legal LLMs, you can get away with narrower, domain-specific data and tailored training strategies. This costs less overall, but the model’s capabilities are limited to its domain.

Consider Scope, Budget, and Constraints

The next step is balancing your performance goals with the available resources:

- Compute and memory limitations: Do you have access to high-end GPUs (e.g., A100, H100) or TPUs?

- Time and human resource requirements: Training a large model can take weeks or months of compute time, plus the engineers and annotators needed to support it.

- Trade-offs between model size, data volume, and training time: A larger model tends to perform better, but it requires more data, storage, and computing power.

Multimodal workloads, such as image recognition or video understanding, push memory requirements even higher.
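To put rough numbers on these trade-offs, a widely used rule of thumb estimates training compute as about 6 × N × D floating-point operations, where N is the parameter count and D is the number of training tokens. The sketch below turns that into wall-clock days; the per-GPU peak throughput and utilization figures are illustrative assumptions, not measurements, and the function names are hypothetical.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute with the common ~6 * N * D rule of thumb
    (roughly 2 FLOPs per parameter per token forward, 4 backward)."""
    return 6.0 * n_params * n_tokens


def gpu_days(n_params: float, n_tokens: float, peak_flops_per_gpu: float,
             utilization: float, n_gpus: int) -> float:
    """Convert estimated training FLOPs into wall-clock days on a cluster.

    peak_flops_per_gpu and utilization are inputs you must supply; real
    utilization depends on model shape, parallelism, and interconnect.
    """
    achieved = peak_flops_per_gpu * utilization * n_gpus  # FLOP/s actually delivered
    seconds = training_flops(n_params, n_tokens) / achieved
    return seconds / 86_400  # seconds per day


if __name__ == "__main__":
    # Hypothetical run: 7B parameters, 1T tokens, 64 GPUs,
    # assumed 300 TFLOP/s peak per GPU and 40% utilization.
    days = gpu_days(7e9, 1e12, peak_flops_per_gpu=300e12, utilization=0.4, n_gpus=64)
    print(f"~{days:.1f} days")  # prints "~63.3 days"
```

Doubling either the parameter count or the token count doubles the estimate, which is why model size, data volume, and training time have to be traded off together.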

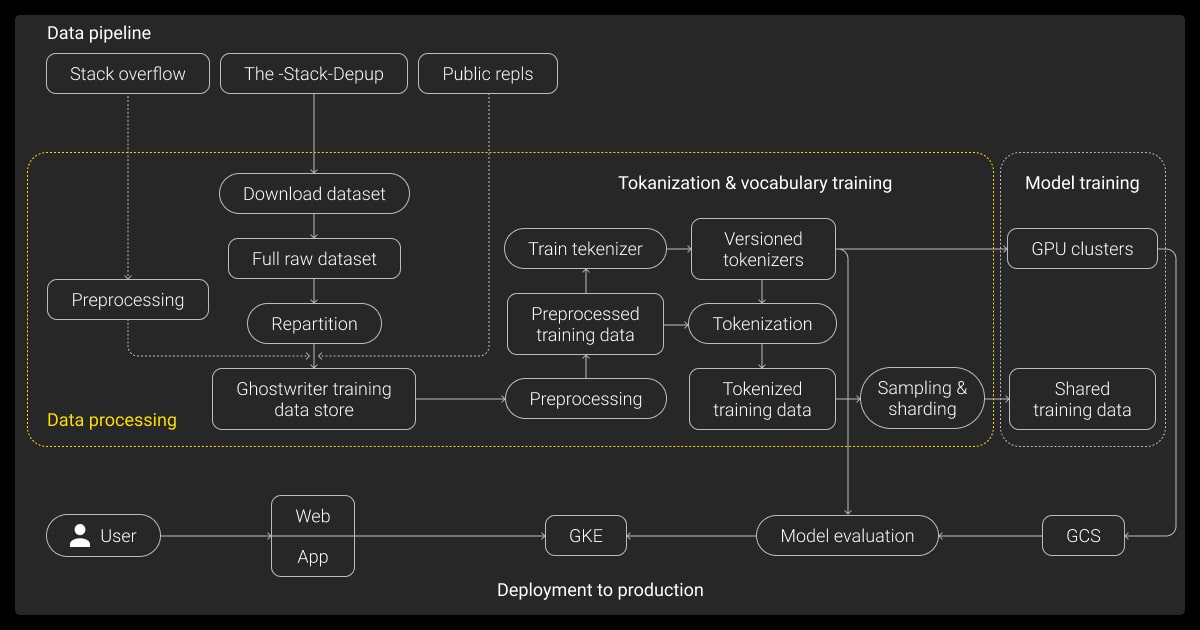

How to Train an LLM: Data Strategy

Data can make or break your LLM. If you use poor-quality or biased data, you’ll get suboptimal performance. So, the first step in how to train an LLM is curating and preprocessing the information you’ll train your model on.

If you don’t have the expertise or skills to get this right, it pays to hire data collection services and a reputable data annotation company.

Building the Right Dataset for Pretraining

Pretraining an LLM involves curating vast corpora that include:

Code, documents, and web text

Open-source code repositories, large web corpora like Common Crawl, and books expose your model to diverse language structures.

Broad vs. deep domain inclusion

Will your model be more general in nature? If so, you’ll want a broader dataset. Is it more specialized? Your dataset should match.

Reducing low-quality or duplicated content

It’s better to work with a smaller set of highly relevant, valuable examples than to have your model sort through rubbish.

Data Cleaning and Normalization

You must clean pretraining data to remove noise and standardize formats. Steps you can take include:

- Deduplication: Eliminate repeated text so the model doesn’t waste compute on it or memorize it verbatim.

- Normalization: Convert text into standardized formats to improve tokenizer efficiency.

- Removing low-quality data: Get rid of gibberish, spam or boilerplate-heavy scraped pages, and adversarial text for better outcomes.
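These three cleaning steps can be sketched with the Python standard library alone. This is a minimal sketch that assumes exact-match deduplication by hashing (production pipelines often add near-duplicate detection such as MinHash) and a made-up quality heuristic whose thresholds are purely illustrative.

```python
import hashlib
import re
import unicodedata


def normalize(text: str) -> str:
    """Unicode-normalize (NFKC, e.g. fullwidth letters -> ASCII) and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def looks_low_quality(text: str, min_words: int = 5, min_alpha_ratio: float = 0.6) -> bool:
    """Hypothetical heuristic: flag very short docs or docs that are mostly non-letters."""
    if len(text.split()) < min_words:
        return True
    non_space = [c for c in text if not c.isspace()]
    alpha = sum(c.isalpha() for c in non_space)
    return alpha / max(len(non_space), 1) < min_alpha_ratio


def clean_corpus(docs: list[str]) -> list[str]:
    """Normalize, drop low-quality docs, and remove exact duplicates by SHA-256 hash."""
    seen: set[str] = set()
    kept = []
    for doc in docs:
        doc = normalize(doc)
        if looks_low_quality(doc):
            continue
        digest = hashlib.sha256(doc.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization and lowercasing
        seen.add(digest)
        kept.append(doc)
    return kept


if __name__ == "__main__":
    docs = [
        "The  quick brown fox jumps over the lazy dog.",
        "The quick brown fox jumps over the lazy dog.",  # duplicate once whitespace is normalized
        "$$$ ### @@@ !!! %%% ^^^",                       # mostly symbols
        "Too short.",
    ]
    print(clean_corpus(docs))
```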

Tokenization Techniques

Tokenization breaks raw text into subword units. You need to choose the right technique to improve performance:

- Byte Pair Encoding (BPE): This technique balances efficiency and vocabulary size. GPT-4 uses this method.

- WordPiece: This is widely used in masked language models. BERT uses this method.

- SentencePiece: This is great for multilingual models because it allows you to process data without predefined word boundaries.

You also have to consider your vocabulary size. The larger it is, the more expressive your model can be, but the more memory it uses.
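To make BPE concrete, here is a toy version of its merge-learning loop: count adjacent symbol pairs, merge the most frequent one, and repeat. It is a minimal sketch that works on the characters of whitespace-split words; production tokenizers (including GPT-4’s byte-level BPE) operate on bytes, handle pre-tokenization and special tokens, and are far faster. The function names are hypothetical.

```python
from collections import Counter


def _merge(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Replace every occurrence of `pair` in `symbols` with one concatenated symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(corpus: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn BPE merge rules; each merge adds one new token to the vocabulary."""
    # Each word starts as a tuple of characters, weighted by how often it occurs.
    vocab = Counter(tuple(word) for text in corpus for word in text.split())
    merges = []
    for _ in range(num_merges):
        pairs: Counter = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # most frequent pair (first seen wins ties)
        merges.append(best)
        vocab = Counter({_merge(s, best): f for s, f in vocab.items()})
    return merges


def encode(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Tokenize a word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = _merge(symbols, pair)
    return list(symbols)


if __name__ == "__main__":
    merges = learn_bpe(["low lower lowest low low"], num_merges=3)
    print(merges)                    # [('l', 'o'), ('lo', 'w'), ('low', 'e')]
    print(encode("lowest", merges))  # ['lowe', 's', 't']
```

The number of merges is effectively the vocabulary-size knob: more merges give longer, more expressive tokens at the cost of a larger embedding table.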

Maintaining data quality through version control is a game-changer. It caught contamination issues in my last project that would’ve cost us months of debugging.

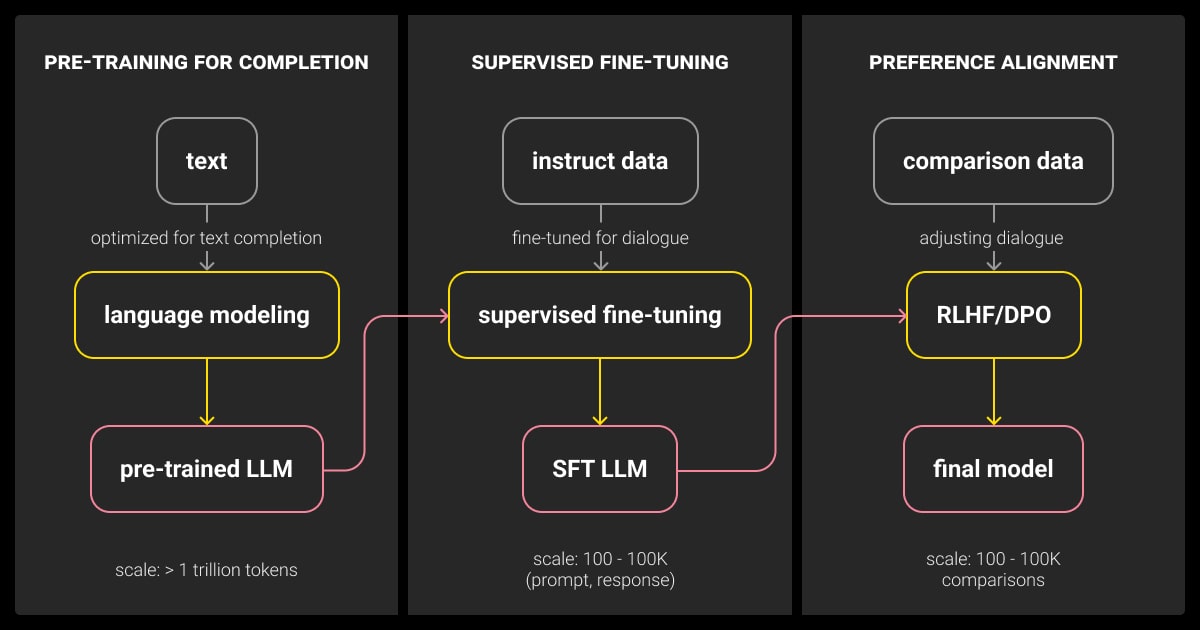

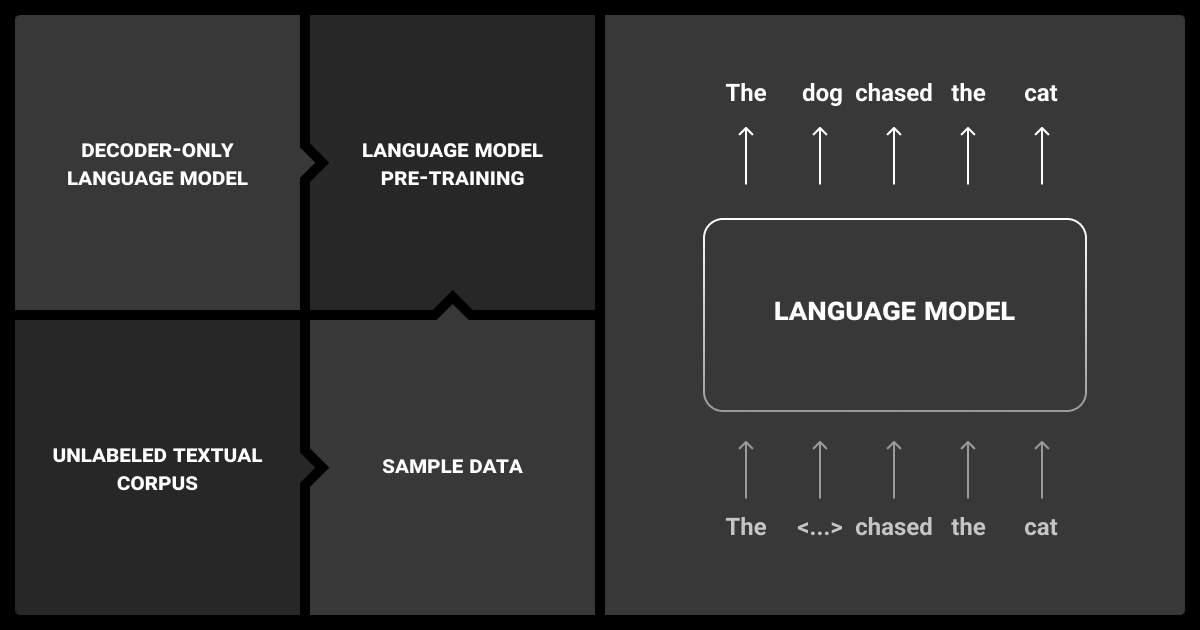

Unsupervised Pre-Training

When comparing pre-training vs fine-tuning, pre-training is the heavier lift. This is where you’ll need the most computing power. It requires vast amounts of unlabeled data and computation to help the model learn general language patterns from scratch.

Architecture and Frameworks

You’ll start by choosing a transformer architecture. Model families such as GPT, Llama, Falcon, and MPT are all good options, each with its own pros and cons.

The next step is to decide whether to train from scratch or start from existing checkpoints. The latter is more cost-efficient because you continue from pretrained weights instead of a random initialization.

Finally, consider your frameworks. PyTorch and Hugging Face offer scalable, efficient implementations. DeepSpeed optimizes training on large clusters.

Training Objectives and Implementation

Next, choose a training objective:

- Causal language modeling (CLM): Used in GPT-style models, predicts the next token in a sequence.

- Masked language modeling (MLM): Used in BERT-style models, predicts missing words in a sentence.

Whatever objective you use, you need to monitor training: training and validation loss curves show whether the model is still learning. A typical example is pretraining a Llama model with Hugging Face Transformers while logging both losses at regular intervals.
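The two objectives differ mainly in how labels are built from the same token sequence. A minimal sketch on plain lists of token ids, with hypothetical helper names; it uses -100 as the “ignore” label, which is the default `ignore_index` of PyTorch’s cross-entropy loss. BERT’s full recipe also replaces some selected tokens with random tokens or leaves them unchanged, which this sketch omits.

```python
import random
from typing import Optional

IGNORE = -100  # label value excluded from the loss (PyTorch CrossEntropyLoss default ignore_index)


def clm_example(token_ids: list[int]) -> tuple[list[int], list[int]]:
    """Causal LM: the label at position t is the token at position t + 1."""
    return token_ids[:-1], token_ids[1:]


def mlm_example(token_ids: list[int], mask_id: int, mask_prob: float = 0.15,
                rng: Optional[random.Random] = None) -> tuple[list[int], list[int]]:
    """Masked LM: replace a random subset of tokens with mask_id and predict only those."""
    rng = rng or random.Random(0)
    inputs, labels = [], []
    for tok in token_ids:
        if rng.random() < mask_prob:
            inputs.append(mask_id)
            labels.append(tok)     # loss is computed on masked positions
        else:
            inputs.append(tok)
            labels.append(IGNORE)  # unmasked positions are ignored by the loss
    return inputs, labels


if __name__ == "__main__":
    ids = [101, 7592, 2088, 2003, 2307, 102]  # arbitrary example token ids
    print(clm_example(ids))
    print(mlm_example(ids, mask_id=103, rng=random.Random(42)))
```

With Hugging Face causal LM classes you typically pass labels equal to the input ids and the model performs this one-position shift internally.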

Scaling Considerations

To train large models efficiently, rely on:

- Data parallelism vs. model parallelism: Data parallelism splits data across GPUs; model parallelism splits the model itself.

- Mixed-precision training (fp16/bf16): Reduces memory usage and speeds up computations.

- Checkpointing: Saves your progress at regular intervals so a crash or hardware failure doesn’t cost you the whole run.
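Much of the pressure behind mixed precision and parallelism is memory. The estimate below assumes mixed-precision training with the Adam optimizer and uses the per-parameter byte counts from the ZeRO paper (the technique behind DeepSpeed’s ZeRO stages). It covers only weights, gradients, and optimizer states; activations and buffers are left out, so treat the result as a lower bound. It models sharding across data-parallel workers, not tensor or pipeline model parallelism.

```python
def training_memory_gb(n_params: float, n_gpus: int = 1, zero_stage: int = 0) -> float:
    """Per-GPU memory (GB) for weights, gradients, and Adam states in mixed precision.

    Byte counts per parameter: 16-bit weights (2) + 16-bit gradients (2) +
    fp32 master weights, momentum, and variance (4 + 4 + 4) = 16 bytes.
    zero_stage 0 is plain data parallelism: every GPU holds a full copy.
    """
    weights, grads, optim = 2 * n_params, 2 * n_params, 12 * n_params
    if zero_stage >= 1:
        optim /= n_gpus    # stage 1: shard optimizer states across GPUs
    if zero_stage >= 2:
        grads /= n_gpus    # stage 2: also shard gradients
    if zero_stage >= 3:
        weights /= n_gpus  # stage 3: also shard the weights themselves
    return (weights + grads + optim) / 1e9


if __name__ == "__main__":
    for stage in (0, 1, 2, 3):
        gb = training_memory_gb(7e9, n_gpus=8, zero_stage=stage)
        print(f"7B params, 8 GPUs, ZeRO stage {stage}: {gb:.1f} GB per GPU")
```

For a 7B-parameter model this gives about 112 GB per GPU with plain data parallelism, more than a single 80 GB A100 or H100 holds, versus about 14 GB per GPU with full stage 3 sharding across 8 GPUs.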

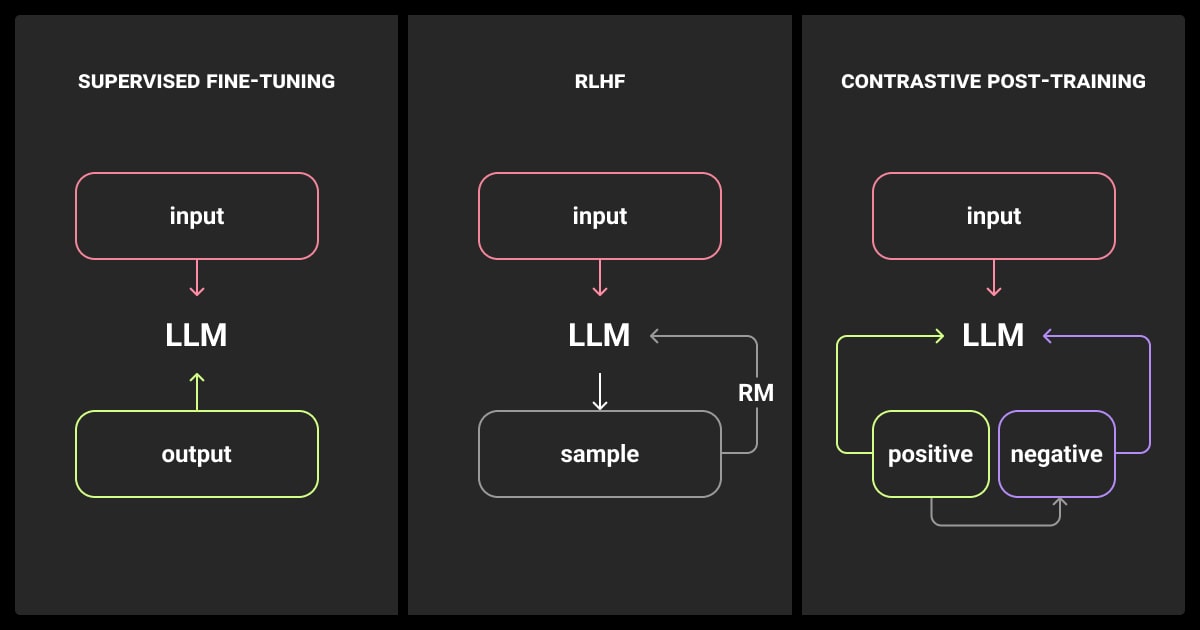

How to Train an LLM with Supervised Fine-Tuning

Now it’s time for LLM fine-tuning to make the model task-specific. Here, you’ll move from unlabeled data to human-annotated data.

LLM data labeling can be labor-intensive, which is why so many companies use data annotation services.

Instruction Tuning with Prompt-Response Pairs

To get good results while keeping data annotation and compute costs down, you should:

- Select high-quality, task-specific data.

- Run Supervised Fine-Tuning (SFT) on curated prompt-response pairs so the pretrained model adapts to your tasks.

- Use Low-Rank Adaptation (LoRA) methods to fine-tune more cost-effectively.
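In instruction tuning, each prompt-response pair is usually packed into one sequence, and the loss is computed only on the response tokens. The sketch below assumes the common convention of marking ignored positions with -100; the prompt template and the toy whitespace tokenizer are hypothetical stand-ins for a real model’s tokenizer and chat template.

```python
IGNORE = -100  # conventional "ignore" label (PyTorch CrossEntropyLoss default ignore_index)


def toy_tokenize(text: str, vocab: dict[str, int]) -> list[int]:
    """Hypothetical whitespace tokenizer that assigns new ids on the fly."""
    return [vocab.setdefault(word, len(vocab)) for word in text.split()]


def build_sft_example(prompt_ids: list[int], response_ids: list[int],
                      eos_id: int, max_len: int = 512) -> dict[str, list[int]]:
    """Join prompt + response + EOS, masking the prompt out of the loss.

    The model sees the whole sequence but is trained only to produce the
    response and the end-of-sequence token.
    """
    input_ids = prompt_ids + response_ids + [eos_id]
    labels = [IGNORE] * len(prompt_ids) + response_ids + [eos_id]
    return {"input_ids": input_ids[:max_len], "labels": labels[:max_len]}


if __name__ == "__main__":
    vocab = {"<eos>": 0}
    # Hypothetical prompt template; real models define their own chat format.
    prompt = toy_tokenize("### Instruction: Translate 'hola' to English. ### Response:", vocab)
    response = toy_tokenize("hello", vocab)
    example = build_sft_example(prompt, response, eos_id=vocab["<eos>"])
    print(example["labels"])  # prompt positions are -100; only "hello" and <eos> are trained
```

Masking the prompt keeps the model from spending capacity on reproducing instructions, which matters most when prompts are long relative to responses.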

Evaluation Metrics for Fine-Tuned Models

To assess performance, use automatic metrics such as accuracy (classification), BLEU (translation), and ROUGE (summarization). Human review adds what these metrics miss: it judges how well the model understands context and how coherent its answers are.
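To show how overlap metrics of this kind work, here is a stripped-down ROUGE-1 that compares unigram counts between a model output and a reference. It is a simplified sketch (lowercasing and whitespace tokenization only, no stemming or punctuation handling), so its scores will not match established ROUGE implementations exactly.

```python
from collections import Counter


def rouge1(candidate: str, reference: str) -> dict[str, float]:
    """ROUGE-1 precision, recall, and F1 from clipped unigram overlap."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # & keeps the minimum count of each shared word
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # 5 of 6 words overlap ("the" counted twice), so all three scores are 5/6.
    print(rouge1("the cat sat on the mat", "the cat is on the mat"))
```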

Effective fine-tuning anticipates challenges beyond the dataset. It means aligning training with workflows, user interactions, and compliance requirements. Without this context, even accurate models can fail in production.

Reinforcement Learning from Human Feedback (RLHF)

This stage of LLM reinforcement learning helps align your LLM with human expectations, reducing biases and improving the user experience. Basically, a human checks the answers the AI produces and gives feedback to the machine.

Reward Model Construction

- Collecting human feedback as pairwise rankings: annotators compare two responses to the same prompt and pick the better one.

- Training the reward model to distinguish between high- and low-quality outputs.
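A common way to train a reward model on pairwise rankings is a Bradley-Terry style loss, -log σ(r_chosen − r_rejected), which shrinks as the model scores the preferred response higher. A minimal scalar sketch, assuming the reward model has already produced one score per response; function names are hypothetical.

```python
import math


def neg_log_sigmoid(x: float) -> float:
    """Numerically stable -log(sigmoid(x)) = log(1 + exp(-x))."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def pairwise_reward_loss(r_chosen: list[float], r_rejected: list[float]) -> float:
    """Average ranking loss over a batch of preference pairs.

    r_chosen[i] and r_rejected[i] are the reward model's scores for the
    response annotators preferred and rejected on the same prompt.
    """
    losses = [neg_log_sigmoid(c - r) for c, r in zip(r_chosen, r_rejected)]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    print(pairwise_reward_loss([0.0], [0.0]))  # equal scores: log(2), about 0.693
    print(pairwise_reward_loss([2.0], [0.0]))  # preferred response ranked higher: lower loss
    print(pairwise_reward_loss([0.0], [2.0]))  # ranking is wrong: higher loss
```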

Optimizing with PPO or DPO

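- Proximal Policy Optimization (PPO): Updates the model to maximize the reward model’s scores, usually with a penalty that keeps it close to the original model.

- Direct Preference Optimization (DPO): An alternative to PPO that trains directly on preference pairs without a separate reward model or RL loop, which reduces computational costs.

- Mitigating reward hacking: Ensuring the model doesn’t exploit loopholes in the reward function, for example by penalizing divergence from the reference model.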
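DPO replaces the reward model and the RL loop with a single classification-style loss on preference pairs. A minimal per-pair sketch, assuming you already have summed sequence log-probabilities from the policy being trained and from a frozen reference model (usually the SFT checkpoint); the β = 0.1 default is a common choice, not a requirement.

```python
import math


def neg_log_sigmoid(x: float) -> float:
    """Numerically stable -log(sigmoid(x))."""
    return math.log1p(math.exp(-x)) if x >= 0 else -x + math.log1p(math.exp(x))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair, given sequence log-probabilities."""
    chosen_margin = policy_chosen - ref_chosen        # how much the policy upweights the chosen answer
    rejected_margin = policy_rejected - ref_rejected  # how much it upweights the rejected answer
    return neg_log_sigmoid(beta * (chosen_margin - rejected_margin))


if __name__ == "__main__":
    print(dpo_loss(-11.0, -11.0, -11.0, -11.0))  # policy == reference: log(2)
    print(dpo_loss(-10.0, -12.0, -11.0, -11.0))  # policy favors the chosen answer: lower loss
```

Because β scales how strongly the policy is pushed away from the frozen reference, it plays a role similar to the KL penalty that PPO-based RLHF uses to discourage reward hacking.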

How to Train an LLM Efficiently: Key Techniques

Now, let’s learn how to reduce costs without harming performance.

Data Efficiency: Do Less, Get More

Here are a few techniques to start with:

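- Perplexity filtering scores text with a reference language model and excludes documents with unusually high perplexity, which are often gibberish or garbled text.

- Loss-aware sampling prioritizes examples with high training loss, focusing the model on the data it can still learn the most from.

- Data deduplication gets rid of redundant information.

- Curriculum strategies gradually introduce more complex data so as not to overwhelm the model.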
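Perplexity filtering can be sketched with a toy reference model. Real pipelines typically score documents with a small n-gram or neural language model trained on trusted text; the add-one-smoothed unigram model, the class and function names, and the threshold below are illustrative assumptions only.

```python
import math
from collections import Counter


class UnigramLM:
    """Toy add-one-smoothed unigram model standing in for a real reference LM."""

    def __init__(self, reference_texts: list[str]):
        self.counts = Counter(w for t in reference_texts for w in t.lower().split())
        self.total = sum(self.counts.values())
        self.vocab_size = len(self.counts) + 1  # +1 reserves probability mass for unseen words

    def perplexity(self, text: str) -> float:
        """exp of the average negative log-probability per word."""
        words = text.lower().split()
        if not words:
            return float("inf")
        log_prob = sum(
            math.log((self.counts[w] + 1) / (self.total + self.vocab_size)) for w in words
        )
        return math.exp(-log_prob / len(words))


def perplexity_filter(docs: list[str], lm: UnigramLM, max_ppl: float) -> list[str]:
    """Keep documents the reference model does not find too surprising."""
    return [d for d in docs if lm.perplexity(d) <= max_ppl]


if __name__ == "__main__":
    lm = UnigramLM(["the model learns language patterns from text",
                    "the data should be clean text"])
    docs = ["the model learns from clean text", "zxq vbn qwrt plk"]
    print([round(lm.perplexity(d), 1) for d in docs])
    print(perplexity_filter(docs, lm, max_ppl=15.0))  # the gibberish document is dropped
```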

Compute Optimization

You can reduce the amount of computing power with:

- Model pruning to get rid of unnecessary LLM parameters for faster inference.

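- Quantization, where you convert model weights into lower-precision formats (such as int8) to cut memory use and speed up inference.

- Low-Rank Adaptation (LoRA), which trains small low-rank adapter matrices instead of all the weights, for cheaper and faster fine-tuning.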

- Spot-instance planning that allows you to use cloud-based GPU instances at lower costs.
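The core idea behind weight quantization fits in a few lines. This sketch shows symmetric absmax int8 quantization of a single tensor, stored as a plain list; real toolkits typically use per-channel or per-group scales, calibration data, and special handling of outlier values, none of which is modeled here. Function names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor absmax quantization to int8.

    The scale maps the largest-magnitude weight to 127, so each value is
    stored in 1 byte instead of 4 (fp32), plus one shared float scale.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 values and the scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 0.01]
    q, s = quantize_int8(w)
    print(q, s)
    print(dequantize_int8(q, s))  # each value is within scale / 2 of the original
```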

Deployment and Ongoing Monitoring

The next stage is to deploy the model and monitor its performance.

Exporting and Serving the Trained Model

You can use:

- ONNX and TorchScript: export formats that let you run the model in optimized inference runtimes.

- Serverless deployment: cloud-based solutions that reduce infrastructure overhead.

Monitoring Outputs in the Wild

Now, let’s look at how you optimize your model after you let it loose:

- Logging and drift detection allow you to identify and address performance degradation.

- Feedback loops collect user ratings and corrections that you can feed into later fine-tuning rounds.

- Retraining triggers tell you when to initiate fine-tuning based on observed inaccuracies.
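Drift detection often comes down to comparing the distribution of a logged signal (response length, a confidence score, a toxicity score) between a baseline window and recent traffic. A minimal sketch that uses the Population Stability Index (PSI) as the drift statistic; the choice of PSI, the equal-width binning, the function names, and the 0.2 threshold are all assumptions, and other statistics work too.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # equal-width bins over the baseline range

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        return [max(c / len(values), 1e-6) for c in counts]  # epsilon avoids log(0)

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(baseline: list[float], live: list[float], threshold: float = 0.2) -> bool:
    """Hypothetical retraining trigger: flag drift when PSI exceeds the threshold."""
    return psi(baseline, live) > threshold


if __name__ == "__main__":
    baseline = [float(i % 50) for i in range(1000)]  # e.g. response lengths last month
    shifted = [v + 30 for v in baseline]             # responses got much longer
    print(psi(baseline, baseline), should_retrain(baseline, shifted))
```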

Common Pitfalls and How to Avoid Them

You’re bound to face challenges, no matter how well you plan.

Training Instability

Diverging loss or NaN values usually indicate numerical instability (for example, a learning rate that is too high) or poor weight initialization. If this becomes an issue, you can adjust the learning rate schedule, clip gradients, and revisit your regularization techniques.
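Two common remedies for diverging loss are a learning-rate schedule with warmup and gradient clipping. The sketch below shows both on plain Python floats; frameworks ship their own versions (for example, PyTorch’s `torch.nn.utils.clip_grad_norm_`), and the function names and hyperparameters here are placeholders.

```python
import math


def lr_at(step: int, max_lr: float, warmup_steps: int, total_steps: int,
          min_lr: float = 0.0) -> float:
    """Linear warmup to max_lr, then cosine decay to min_lr by total_steps."""
    if step < warmup_steps:
        return max_lr * (step + 1) / warmup_steps
    progress = min((step - warmup_steps) / max(total_steps - warmup_steps, 1), 1.0)
    return min_lr + 0.5 * (max_lr - min_lr) * (1 + math.cos(math.pi * progress))


def clip_by_global_norm(grads: list[float], max_norm: float) -> list[float]:
    """Scale all gradients together if their combined L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if not math.isfinite(norm):
        # NaN/inf gradients: signal the caller to skip this update instead of applying it.
        raise FloatingPointError("non-finite gradient norm")
    if norm <= max_norm:
        return grads
    return [g * (max_norm / norm) for g in grads]


if __name__ == "__main__":
    for step in (0, 99, 100, 550, 1000):
        print(step, lr_at(step, max_lr=3e-4, warmup_steps=100, total_steps=1000))
    print(clip_by_global_norm([3.0, 4.0], max_norm=1.0))  # norm 5 scaled down to 1
```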

Overfitting and Underfitting

What is your validation loss really telling you?

- Overfitting: Your model memorizes the training data instead of generalizing. It performs well on familiar patterns but poorly on unseen inputs, and validation loss rises while training loss keeps falling.

- Underfitting: Your model fails to learn meaningful patterns, so both training and validation loss stay high.

You can use early stopping and cross-validation techniques to overcome these challenges.
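Early stopping amounts to watching the validation loss and halting (or rolling back to the best checkpoint) once it stops improving. A minimal sketch; the class name and the patience and min_delta values are placeholders.

```python
class EarlyStopping:
    """Signal a stop when validation loss hasn't improved for `patience` evaluations."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta  # smallest decrease that counts as an improvement
        self.best = float("inf")
        self.bad_evals = 0

    def step(self, val_loss: float) -> bool:
        """Record one validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_evals = 0  # improvement: a natural place to save the best checkpoint
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience


if __name__ == "__main__":
    stopper = EarlyStopping(patience=3)
    for epoch, loss in enumerate([2.0, 1.5, 1.4, 1.41, 1.42, 1.43]):
        if stopper.step(loss):
            print(f"stop at epoch {epoch}, best val loss {stopper.best}")
            break
```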

Hidden Biases

You’ll need to trace the bias back to the dataset. If you train on a biased dataset, the model will reinforce those biases. It’s easiest to prevent this during training by using diverse, balanced datasets, but you can also fix it afterward by fine-tuning the model again using de-biasing techniques.

One of the most overlooked aspects of training is exposing LLMs to adversarial attacks during fine-tuning—like prompt injection and jailbreak attempts. It builds resilience before deployment, not after it breaks.

What’s Next in How to Train an LLM

The future looks bright. We’re looking at:

- Sparse models and mixture of experts, which activate only part of the network for each token, increasing model capacity without a matching rise in compute.

- Retrieval-augmented training, which improves responses using external knowledge sources.

- More open-source collaboration will increase transparency and accessibility in AI development.

Most exciting of all? Techniques like Test-time Adaptive Optimization (TAO) use test-time compute to fine-tune models without labeled data.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance with a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, including your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO:2700, GDPR, CCPA

FAQ

Can I train my own LLM?

Yes, you can train your own LLM, but you’ll need significant computing power, large and high-quality datasets, and expertise in deep learning.

How are LLMs typically trained?

You usually start by pretraining the model on a large dataset without labels (self-supervised learning). Once it has learned general language patterns, you fine-tune it on labeled examples and then use reinforcement learning to further improve the results.

How long does it take to train an LLM?

It can take anywhere from a few weeks to several months, depending on the model size and the hardware you have.

How much does it cost to train an LLM?

Take a deep breath: it can be breathtakingly expensive. Estimates put the cost of training GPT-4, the model behind ChatGPT, at over $100 million. DeepSeek reported a far lower figure of about $5.5 million, though that covers only the GPU time for the final training run of DeepSeek-V3. Want to know more about why the costs are so different? Read our DeepSeek vs ChatGPT comparison.

Keep in mind that these are massive models. You can train smaller ones for tens of thousands of dollars.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.