Neural Network Architectures: Top Frameworks Explained

TL;DR

- Neural network architectures help with tasks like recognizing images, processing speech, and generating text.

- Common types include feedforward networks, CNNs, RNNs, LSTMs, and transformers—each built for different jobs.

- Top frameworks like TensorFlow, PyTorch, and Keras make it easier to build and use neural networks.

- Pick the right architecture based on your task, the kind of data you have, and how fast you need results.

- Use clean data, start simple, fine-tune settings, and use pretrained models to save time.

What Are Neural Network Architectures in Deep Learning?

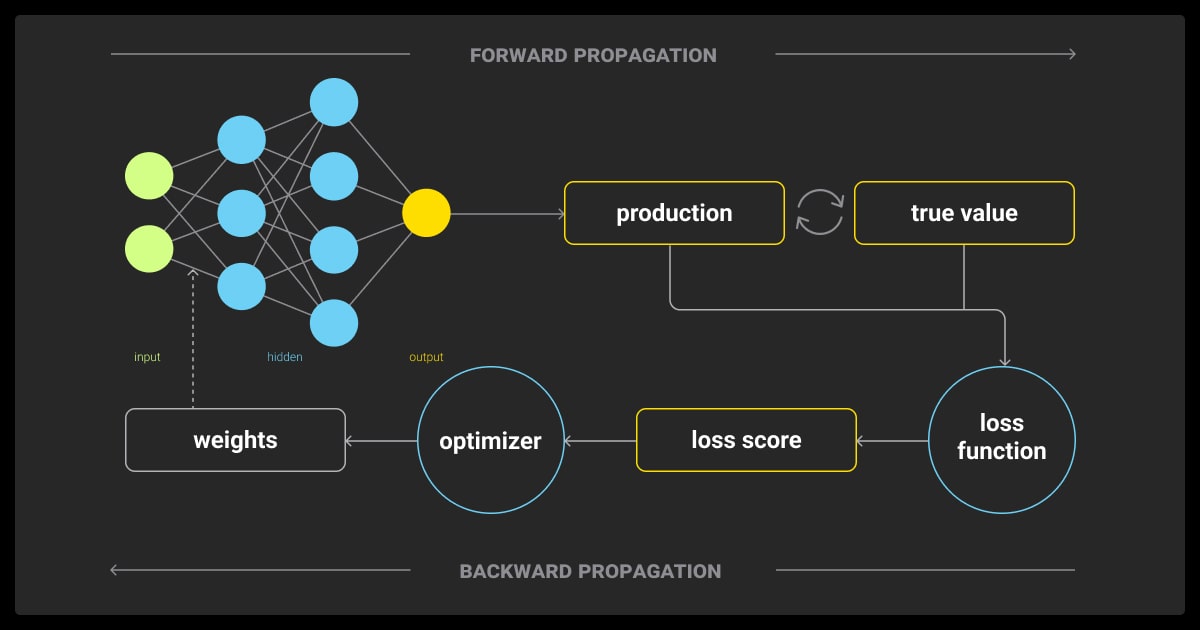

Neural network architectures are the building blocks of deep learning. They help computers learn from data and solve complex tasks, like recognizing images or understanding language.

These architectures are loosely inspired by the human brain: layers of connected nodes (called neurons) process and analyze information.

Every neural network serves a specific purpose. Some are great for pattern recognition in pictures, while others are built for tasks like predicting future events or automatic speech recognition. Picking the right architecture is key to building a successful machine learning algorithm.

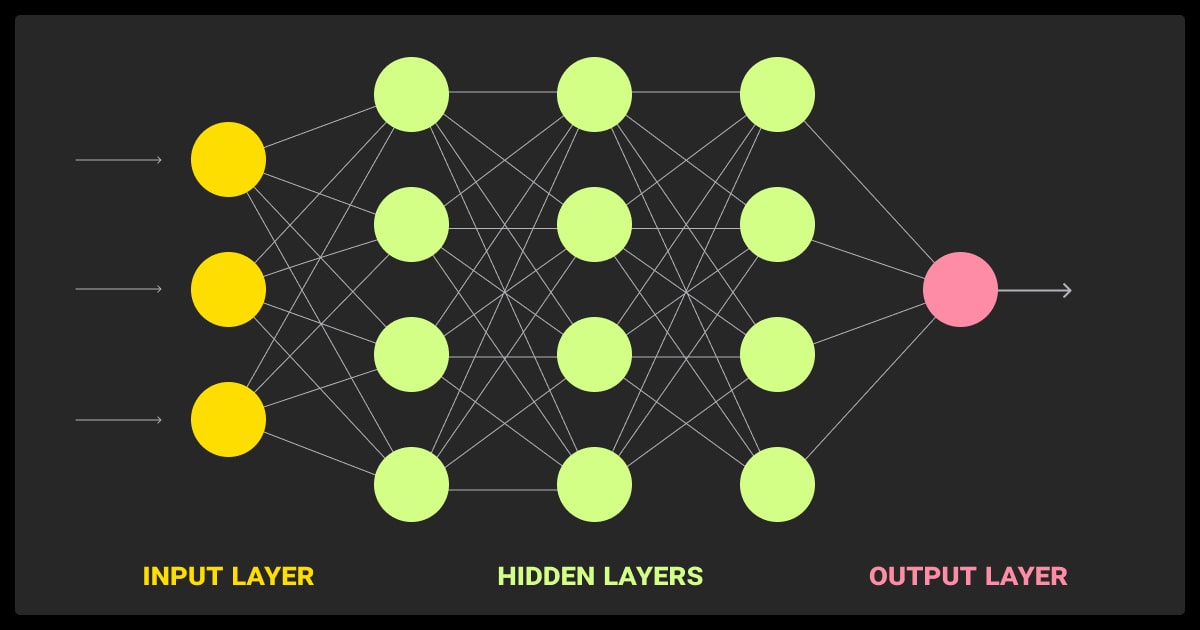

Here’s a quick look at the structure of a neural network architecture:

- Input Layer: Takes in the raw data (like images, text, or numbers).

- Hidden Layers: Process the data and find patterns.

- Output Layer: Gives the final result, such as a label or a prediction.
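
The three layers above can be sketched as a single forward pass in plain Python. This is a minimal illustration, not how a production framework implements it: the weights are made-up, untrained values, and the function names (`dense`, `forward`) are chosen here for the example.

```python
import math

def relu(values):
    """Zero out negative activations."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron plus a bias."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, hidden_w, hidden_b, output_w, output_b):
    hidden = relu(dense(inputs, hidden_w, hidden_b))   # hidden layer finds patterns
    return softmax(dense(hidden, output_w, output_b))  # output layer gives class probabilities

# Illustrative (untrained) parameters: 3 inputs -> 2 hidden neurons -> 2 classes
hidden_w = [[0.2, -0.5, 0.1], [0.7, 0.3, -0.2]]
hidden_b = [0.0, 0.1]
output_w = [[1.0, -1.0], [-1.0, 1.0]]
output_b = [0.0, 0.0]

probs = forward([1.0, 2.0, 3.0], hidden_w, hidden_b, output_w, output_b)
print(probs)
```

Training would adjust the weights and biases so that the probabilities match known labels; the sketch only shows how data moves through the layers.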

Benefits of Neural Network Architectures

- Flexible: Useful for tasks like price prediction, face recognition, and image generation.

- Scalable: Handle large datasets and complex features effectively.

- Accurate: Deliver reliable results in speech recognition and language translation.

Next, we’ll explore different neural network architectures and how they are used in the real world.

Types of Neural Network Architectures

Neural networks come in different types, each suited for specific tasks. Whether it’s analyzing images or financial datasets, or predicting the next word in a sentence, there’s an architecture for every need.

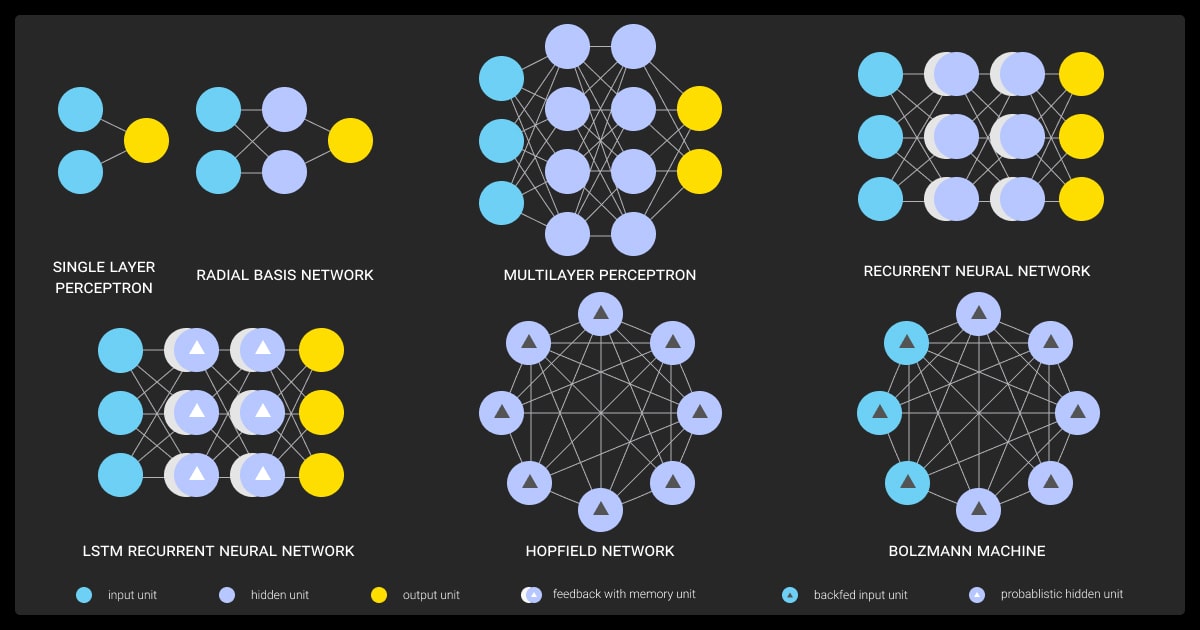

Feedforward Neural Networks (FNNs)

Feedforward networks process data in one direction, from input to output, without feedback loops. Data flows layer by layer, and each layer transforms the data before passing it on.

Best for:

- Simple prediction tasks

- Image classification

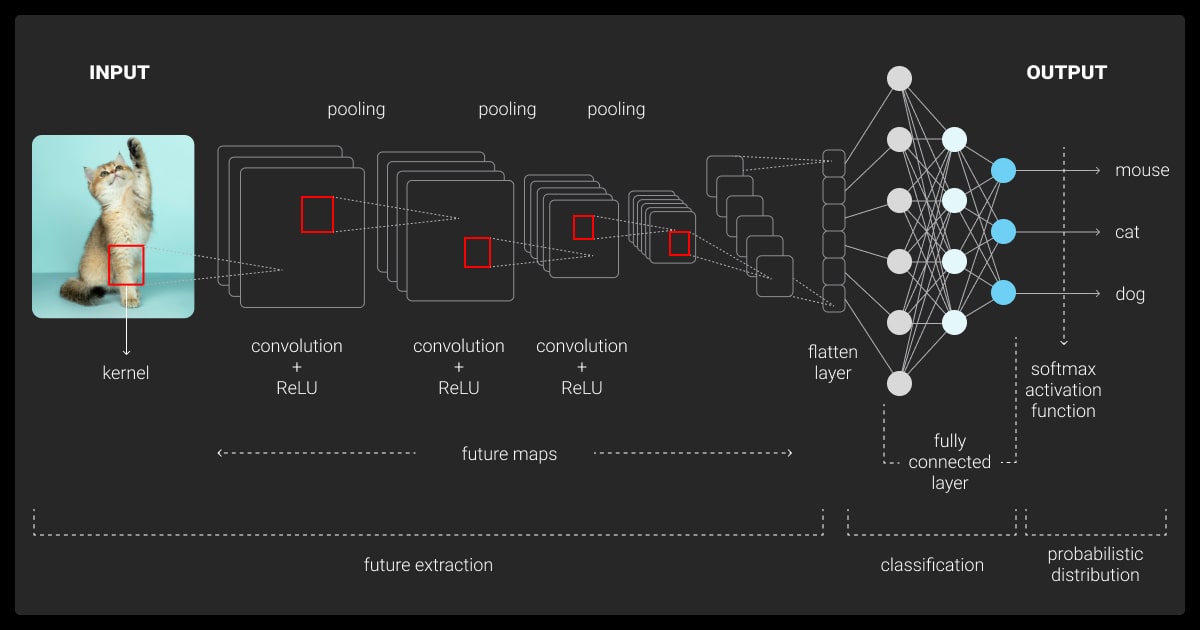

Convolutional Neural Networks (CNNs)

Convolutional neural network architectures use filters to detect features such as edges and textures in images, and pooling layers to reduce the size of the data while preserving key information.

Best for:

- Image recognition

- Object detection

- Medical imaging
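
To make filters and pooling concrete, here is a small sketch in plain Python. The 5x5 image and the hand-written edge filter are assumptions for illustration; in a real CNN the filter values are learned during training. As in most deep learning libraries, the "convolution" here slides the filter without flipping it.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(feature_map[r + i][c + j]
                for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]

# A 5x5 image with a vertical edge: dark (0) on the left, bright (1) on the right
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written filter that responds where brightness increases left to right
vertical_edge = [[-1, 1],
                 [-1, 1]]

features = conv2d(image, vertical_edge)  # 4x4 feature map, nonzero only at the edge
pooled = max_pool(features)              # 2x2 summary that still records the edge
print(features)
print(pooled)
```

The pooled map is a quarter of the size of the feature map, yet it still shows that an edge was found in the left half of the image.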

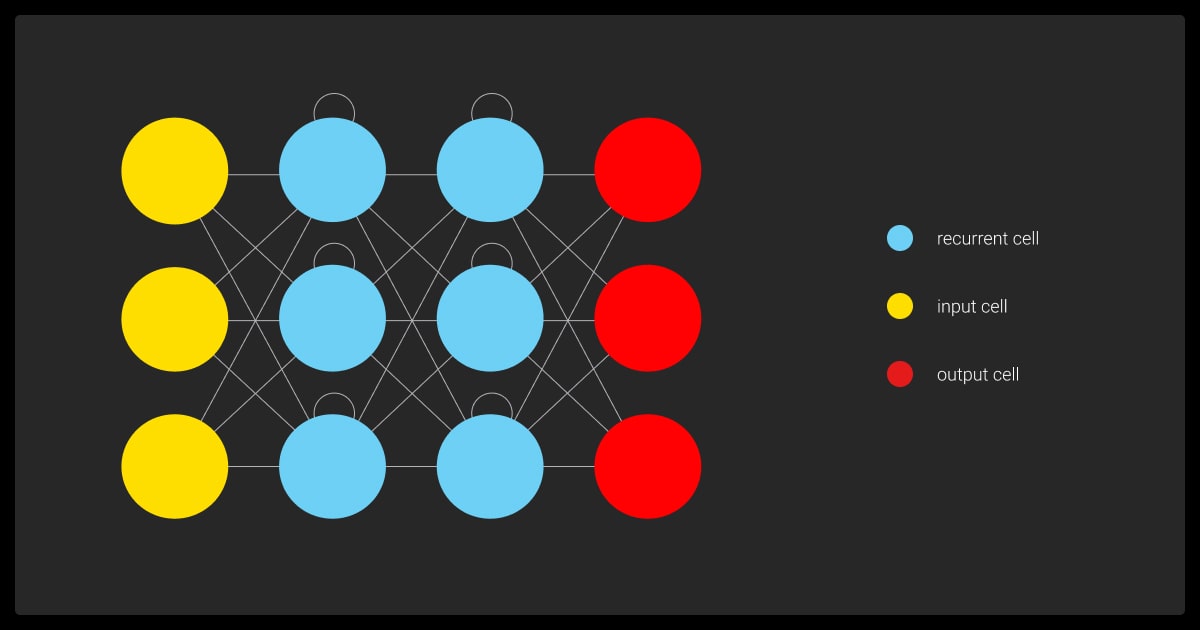

Recurrent Neural Networks (RNNs)

RNNs handle sequential data by carrying a memory of past inputs forward to inform later predictions. They process data step by step, passing information from one step to the next.

Best for:

- Time-series forecasting

- Speech recognition

- Language modeling
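
The step-by-step processing can be sketched with a single-neuron recurrent cell. The weights `w_x` and `w_h` below are arbitrary illustrative values rather than trained ones, and real RNNs use vectors and matrices instead of single numbers.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: mix the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    h = 0.0  # initial hidden state: nothing remembered yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)  # the same weights are reused at every step
        states.append(h)
    return states

# Only the first input is nonzero, yet later states still carry a trace of it
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

The hidden state stays positive after the input drops to zero, but it shrinks at every step. That fading memory is the limitation that LSTMs were designed to address.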

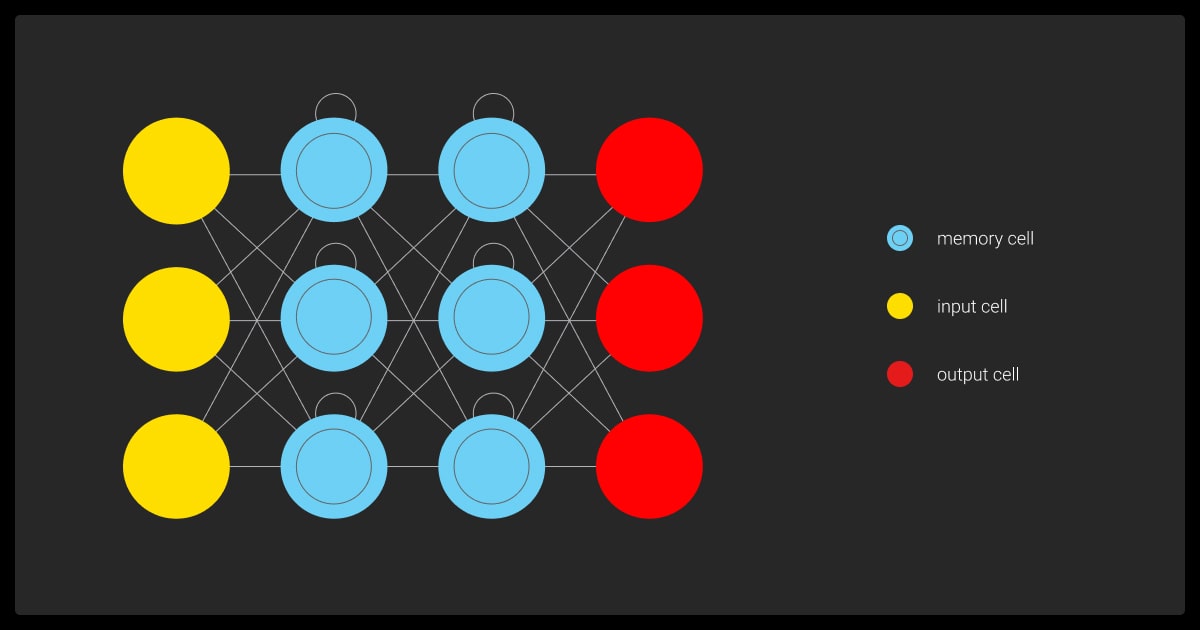

Long Short-Term Memory Networks (LSTMs)

LSTMs are a special type of RNN that uses gates to retain long-term patterns, addressing the “forgetting” (vanishing gradient) problem in standard RNNs.

Best for:

- Sentiment analysis

- Stock market prediction

- Machine translation
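
A rough sketch of one LSTM step with scalar state shows how the gates let information persist. The parameter dictionary `params` and its values are assumptions picked for this example (a forget gate held almost fully open and an input gate held almost closed), not trained weights.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One LSTM step with scalar state. p maps gate name -> (w_x, w_h, bias)."""
    def gate(name, activation):
        w_x, w_h, b = p[name]
        return activation(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    i = gate("input", sigmoid)         # how much new information to write
    g = gate("candidate", math.tanh)   # the new information itself
    o = gate("output", sigmoid)        # how much of the cell state to expose

    c = f * c_prev + i * g             # additive update lets information persist
    h = o * math.tanh(c)
    return h, c

# Illustrative parameters: keep almost everything, write almost nothing
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 10.0),
}

h, c = 0.0, 1.0  # start with something stored in the cell state
for _ in range(50):
    h, c = lstm_step(0.0, h, c, params)
print(c)
```

After 50 steps with no new input, the cell state is still close to its starting value, because the forget gate keeps nearly all of it at each step.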

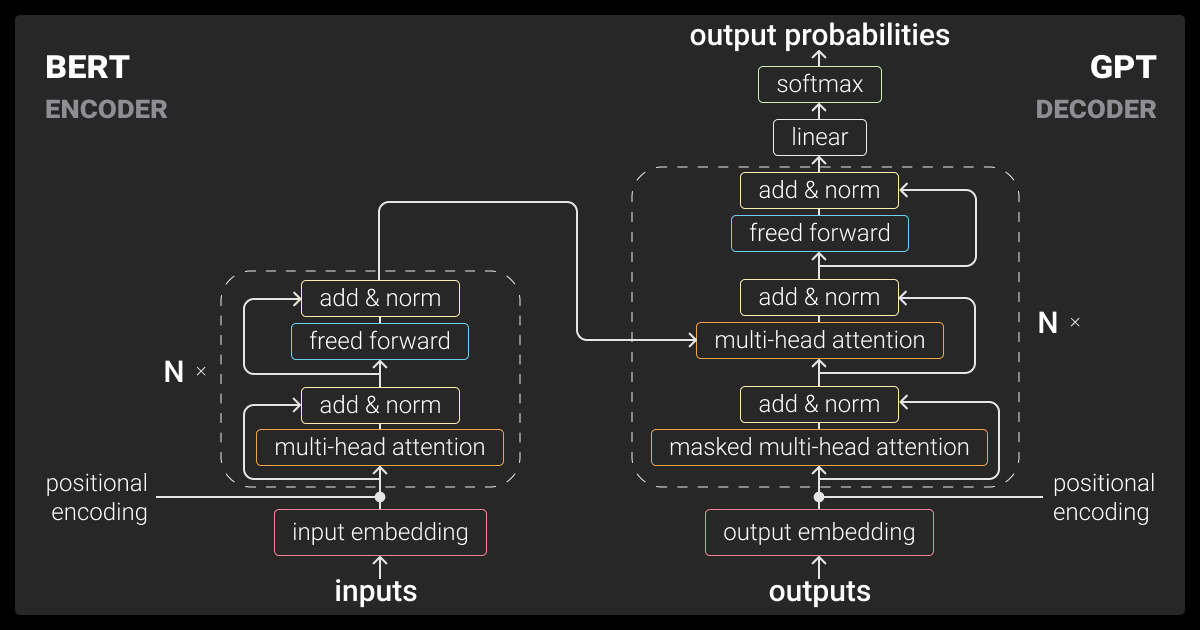

Transformer Networks

Transformers are popular neural network architectures for processing text. Unlike RNNs, they use attention to process entire sequences at once, which makes training faster and helps capture long-range context.

Best for:

- Machine translation

- Text summarization

- Question-answering systems

Different types of LLMs, like BERT and GPT, are built on transformer networks for advanced text processing.
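
The core operation behind transformers is scaled dot-product attention, sketched below in plain Python. To keep it short, the example uses the token vectors directly as queries, keys, and values; real transformers first apply learned projections and use multiple attention heads, which are omitted here. The token vectors are made up for illustration.

```python
import math

def softmax(values):
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Three illustrative 2-dimensional token vectors
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: every token attends to every token, with no step-by-step loop over time
contextual = attention(tokens, tokens, tokens)
print(contextual)
```

Each output row depends on the whole sequence at once, which is why the computation can run in parallel across positions.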

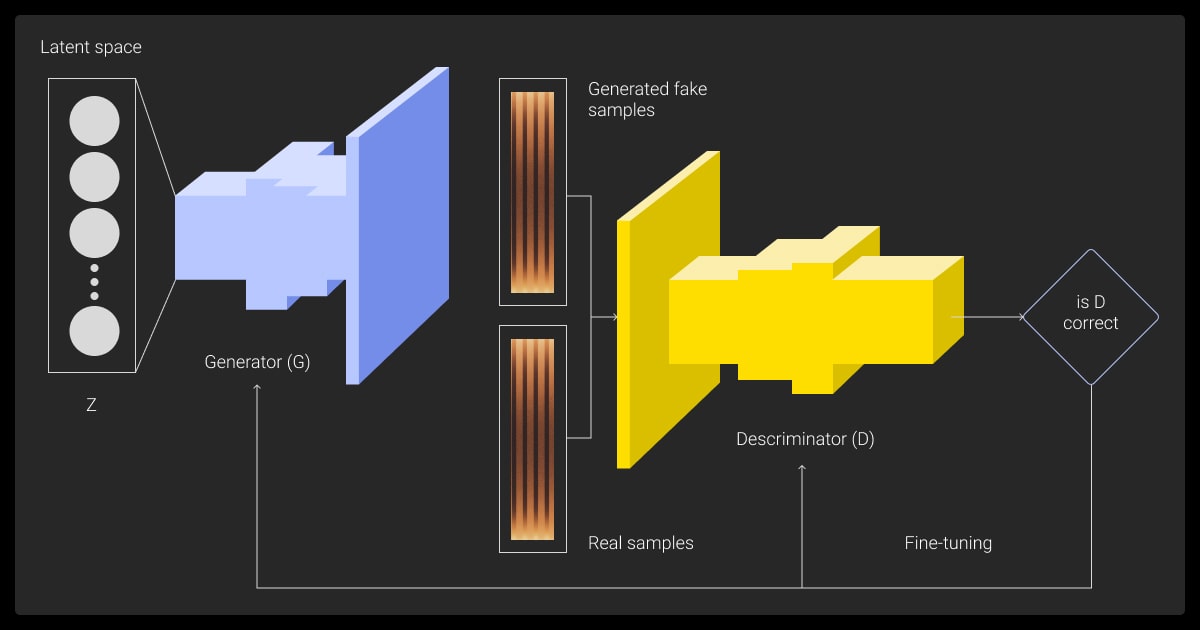

Generative Adversarial Networks (GANs)

GANs generate new data by pitting two neural networks—a generator and a discriminator—against each other. They are widely used for creating realistic images, videos, and even synthetic datasets.

Best for:

- Image generation

- Data augmentation

- Style transfer
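
The tug-of-war between generator and discriminator can be sketched through their loss functions. The example below assumes the commonly used binary cross-entropy losses (with the non-saturating generator loss) and uses made-up discriminator scores instead of real networks; `d_real` and `d_fake` are the probabilities the discriminator assigns to "this sample is real".

```python
import math

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples score near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Low when the discriminator scores generated samples near 1."""
    return -math.log(d_fake)

# Scores must lie strictly between 0 and 1 for the logarithms to be defined.

# Case 1: the discriminator easily spots the fakes
strong_d = discriminator_loss(d_real=0.9, d_fake=0.1)
weak_g = generator_loss(d_fake=0.1)

# Case 2: the generator has improved and the discriminator is guessing
fooled_d = discriminator_loss(d_real=0.5, d_fake=0.5)
better_g = generator_loss(d_fake=0.5)

print(strong_d, weak_g)
print(fooled_d, better_g)
```

As the generator improves, its loss falls while the discriminator's loss rises. Training alternates between updating the two networks so that each pushes the other to get better.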

Popular Frameworks for Building Neural Networks

Building neural networks from scratch is complex, but with powerful frameworks, it becomes much easier. These tools provide ready-to-use functions for designing, training, and deploying common neural network architectures.

Let’s explore the most popular options:

TensorFlow

TensorFlow is an open-source platform developed by Google, known for its scalability and support for both research and production.

Key features:

- Flexible architecture for building any neural network.

- Supports distributed training for large datasets.

- TensorBoard for visualizing training progress.

Best use case: Large-scale machine learning projects and production deployment.

PyTorch

PyTorch is a popular choice among researchers, developed by Meta AI (formerly Facebook AI). It is known for its simplicity and dynamic computation graph.

Key features:

- Easy debugging with Pythonic syntax and standard Python tools.

- Dynamic graphs allow flexible model adjustments.

- Strong community support.

Best use case: Research projects and fast prototyping.

Keras

A high-level API for building neural networks, often used with TensorFlow for rapid development.

Key features:

- Simple and user-friendly interface.

- Quick prototyping with minimal code.

- Compatible with multiple backends, including TensorFlow.

Best use case: Beginners and fast experiments.

Other Noteworthy Frameworks

- MXNet: Great for deep learning in the cloud.

- Caffe: Known for speed in image processing tasks.

- H2O.ai: Focuses on machine learning in business applications.

Choosing the right framework depends on your project’s goals, dataset size, deployment needs, and data annotation pricing.

When choosing a neural network framework, the key feature I prioritize is scalability. A framework needs to handle increasing data volumes and model complexity efficiently while maintaining performance. TensorFlow and PyTorch stand out because they support distributed training, GPU acceleration, and seamless deployment options.

How to Choose the Right Neural Network Architecture

Choosing the right neural network architecture is essential for achieving accurate results. The choice depends on your project’s goals, data type, and performance needs.

Here’s a step-by-step guide to help you make the best decision:

Define Your Task

Different architectures are built for specific tasks. Start by identifying what you need to accomplish:

- Image-related tasks: Use convolutional neural network architectures (CNNs).

- Sequential data: Recurrent neural networks (RNNs) or LSTMs work best.

- Text generation or translation: Transformer networks are ideal.

For example, OCR deep learning models rely on neural networks for recognizing text in scanned documents and images.

Consider Your Data Size and Type

- Large datasets: Deep neural network architectures offer better performance but require more training time and resources.

- Limited data: Simpler models like feedforward neural networks can still perform well.

Evaluate Training and Deployment Requirements

Think about your technical constraints and deployment needs:

- For fast research: Use PyTorch for flexibility and ease of debugging.

- For scalable production: TensorFlow is a reliable option.

- Cloud-based solutions: Frameworks like MXNet offer seamless cloud integration.

Compare Architectures Side by Side

| Architecture | Best For | Example Tasks |
| --- | --- | --- |
| Feedforward NN | Simple prediction tasks | Regression, binary classification |
| CNN | Image-related data | Object detection, facial recognition |
| RNN | Sequential data | Time-series forecasting, NLP |
| LSTM | Long sequences | Sentiment analysis, machine translation |
| Transformer | NLP, parallel processing | Machine translation, text summarization |

By carefully assessing your task, data, and requirements, you can confidently select the right neural network architecture for your project.

Each framework excels in specific tasks—whether it’s classification, regression, or generative tasks. A framework optimized for image processing may offer pre-built convolutional layers, while a natural language processing framework may focus on transformers and attention mechanisms.

Best Practices for Working with Neural Network Architectures

Successfully building and deploying neural networks requires more than just picking the right architecture. These best practices will help you improve performance, reduce errors, and optimize your workflow:

Prepare Your Data Properly

High-quality data is crucial for training neural networks.

- Clean the data: Remove errors and inconsistencies.

- Normalize features: Ensure all inputs are on the same scale.

- Augment data: Use techniques like flipping, rotating, or cropping for image data to reduce overfitting.
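
Two of these steps, normalization and augmentation, can be sketched in a few lines of plain Python. The sample values are invented for illustration, and z-score standardization is only one of several common ways to put features on the same scale.

```python
def standardize(column):
    """Rescale a feature to zero mean and unit variance (z-score)."""
    mean = sum(column) / len(column)
    variance = sum((v - mean) ** 2 for v in column) / len(column)
    std = variance ** 0.5 or 1.0  # fall back to 1.0 for constant features
    return [(v - mean) / std for v in column]

def flip_horizontal(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]

# Illustrative feature column with a large raw scale
ages = [20.0, 30.0, 40.0]
scaled_ages = standardize(ages)

# Illustrative 2x3 "image"; the flipped copy is an extra training sample
image = [[1, 2, 3],
         [4, 5, 6]]
augmented = flip_horizontal(image)

print(scaled_ages)
print(augmented)
```

In practice, the mean and standard deviation are computed on the training set only and then reused for validation and test data, so that no information leaks from the held-out sets.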

Data annotation is the foundation of training reliable neural network models for various machine learning tasks.

Start with a Simple Architecture

Complex architectures can be tempting, but it’s best to start simple and scale as needed.

- Test with a basic feedforward neural network first.

- Gradually add layers or switch to more advanced architectures (like CNNs or LSTMs) as needed.

Monitor and Tune Hyperparameters

Hyperparameters like learning rate, batch size, and number of hidden layers affect your model’s performance.

- Use techniques like grid search or Bayesian optimization for tuning.

- Regularly monitor validation accuracy to avoid overfitting or underfitting.
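
Grid search simply tries every combination of candidate values and keeps the one with the best validation score. In the sketch below, `validation_score` is a hypothetical stand-in for "train a model with these settings and measure validation accuracy"; its toy formula exists only to make the example runnable.

```python
from itertools import product

def validation_score(learning_rate, batch_size, hidden_layers):
    """Hypothetical placeholder: a real version would train and evaluate a model."""
    # Toy formula that peaks at learning_rate=0.01, batch_size=32, hidden_layers=2
    return (1.0
            - abs(learning_rate - 0.01) * 10
            - abs(batch_size - 32) / 1000
            - abs(hidden_layers - 2) * 0.05)

def grid_search(grid, score_fn):
    """Evaluate every combination in the grid and return the best one."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for combo in product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [16, 32, 64],
    "hidden_layers": [1, 2, 3],
}

best_params, best_score = grid_search(grid, validation_score)
print(best_params, best_score)
```

This grid has 27 combinations, and the count grows quickly as you add hyperparameters. That cost is the main reason to consider Bayesian optimization, which chooses the next settings to try based on earlier results.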

Leverage Pretrained Models

Save time and resources by starting with pretrained models.

- Use models like ResNet or BERT for image or text tasks.

- Fine-tune them to match your specific use case.

LLM data labeling plays a crucial role in fine-tuning transformer-based architectures for text generation and summarization tasks.

Optimize Training for Efficiency

Training deep neural network architectures can be resource-intensive. It starts with converting unlabeled data into labeled datasets.

Next, you can:

- Use GPU or TPU acceleration to speed up training.

- Implement regularization techniques like dropout to reduce overfitting.
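
Dropout can be sketched in a few lines. This version assumes the "inverted dropout" variant, which scales the surviving activations during training so that nothing needs to change at inference time; the activation values and the seed are arbitrary.

```python
import random

def dropout(activations, rate, training=True, rng=random):
    """Zero each activation with probability `rate` and rescale the survivors.

    `rate` must be below 1.0. At inference time (training=False) the
    activations pass through unchanged.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)  # fixed seed so the example is repeatable
hidden = [1.0] * 10

dropped = dropout(hidden, rate=0.5, rng=rng)        # roughly half become 0.0, the rest 2.0
unchanged = dropout(hidden, rate=0.5, training=False)
print(dropped)
print(unchanged)
```

Because a different random subset of neurons is silenced on every training step, the network cannot rely on any single neuron, which tends to reduce overfitting.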

You can also outsource labeling to a data annotation company: high-quality training data leads to better model performance.

And here’s a note on community support:

I now prioritize frameworks with active GitHub discussions, regular updates, and plenty of Stack Overflow answers—it’s saved us countless hours of debugging and implementation time.

Adopting these best practices will help you build robust and efficient neural network models that deliver high accuracy and performance. But it all starts with high-quality data annotation services.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance based on a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, even your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

What are the different types of neural network architectures?

The most common neural network architectures include Feedforward Neural Networks (FNNs) for simple tasks, Convolutional Neural Networks (CNNs) for images, Recurrent Neural Networks (RNNs) for sequences, Long Short-Term Memory Networks (LSTMs) for long-term patterns, and Transformer Networks for text processing.

What is the best neural network architecture?

It depends on the task. CNNs are best for images, RNNs or LSTMs for sequences, and Transformers for text.

Which is best, CNN or RNN?

CNNs are better for images, while RNNs handle sequences like speech or time-series data. Use both for mixed tasks like video analysis.

What is the basic architecture of a deep neural network?

A deep neural network has three main parts: the input layer for receiving data, hidden layers for processing, and the output layer for results like predictions or labels.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.