Top 10 SuperAnnotate Competitors for Vision and Multimodal Tasks

TL;DR

- SuperAnnotate is a tool-first platform strong in computer vision, auto-annotation, and workflow management.

- Teams look beyond it for broader modality support, workforce reliability, and enterprise-scale compliance.

- The best alternatives include Label Your Data, V7, Labelbox, Encord, Kili Technology, CVAT, Dataloop, Amazon SageMaker Ground Truth, Roboflow, and Vertex AI.

- Each competitor has a different focus: hybrid service models, cloud-native integration, or open-source flexibility.

- The right choice depends on your priorities: scalability, pricing transparency, compliance depth, and annotation workflows.

Why Look Beyond SuperAnnotate?

SuperAnnotate is a tool-first annotation platform. It works well for computer vision tasks such as image recognition, offers an AI-assisted editor, and includes auto-annotation features. The vendor also provides optional workforce support through its marketplace, which appeals to ML teams that want a mix of automation and managed data annotation.

Still, many ML teams evaluate SuperAnnotate alternatives. The main reasons are:

- Scaling: harder to extend across data types and larger pipelines

- Pricing: higher tiers can strain budgets on long-term projects

- Features: some vendors go further in auto-labeling, QA, or active learning

- Workforce: the marketplace model is less suited to teams that need fully managed labeling teams

Our review draws on in-depth research and on external rankings from G2 and SoftwareSuggest, where SuperAnnotate’s competitors consistently appear. Read on if you’re looking for stronger options with more flexibility, broader modality support, or closer integration with your ML stack.

How to Evaluate SuperAnnotate Competitors

When comparing SuperAnnotate with alternatives, focus on measurable factors that directly affect your workflow and cost:

Modalities supported

Does the platform handle only computer vision, or does it extend to text, audio, and multimodal data?

Workforce model

Is annotation handled by a managed workforce, a crowdsourced marketplace, or in-house teams supported by tooling?

Quality assurance (QA)

What built-in tools exist to review labels, catch errors, and maintain consistency over time?

Compliance and security

Can the vendor provide enterprise guarantees like SOC 2, HIPAA, or GDPR compliance for sensitive data?

Integration options

How well does the tool connect to your stack via APIs, SDKs, or cloud-native integrations (AWS, GCP, Azure)?

Pricing flexibility

Is the model per-label, per-hour, subscription-based, or hybrid? Clear data annotation pricing is critical when scaling to millions of items.
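To see how billing models diverge at scale, here is a minimal sketch that compares a per-label quote with a per-hour quote for the same job. Every rate, throughput figure, and volume in it is a hypothetical placeholder rather than any vendor’s actual price, and it assumes annotator throughput is measured in labels per hour and stays constant across the project.

```python
# Hypothetical comparison of per-label vs per-hour annotation pricing.
# All numbers are illustrative assumptions, not real vendor quotes.


def per_label_cost(num_labels: int, price_per_label: float) -> float:
    """Total cost when the vendor bills for every labeled object."""
    return num_labels * price_per_label


def per_hour_cost(num_labels: int, labels_per_hour: float, hourly_rate: float) -> float:
    """Total cost when the vendor bills annotator time (assumes constant throughput)."""
    hours = num_labels / labels_per_hour
    return hours * hourly_rate


def break_even_price(labels_per_hour: float, hourly_rate: float) -> float:
    """Per-label price at which both billing models cost the same."""
    return hourly_rate / labels_per_hour


if __name__ == "__main__":
    images = 1_000_000      # dataset size (hypothetical)
    boxes_per_image = 8     # average objects per image (hypothetical)
    num_labels = images * boxes_per_image

    price_per_label = 0.04  # USD per box (hypothetical)
    labels_per_hour = 400   # boxes one annotator draws per hour (hypothetical)
    hourly_rate = 12.0      # USD per annotation hour (hypothetical)

    print(f"per-label total: ${per_label_cost(num_labels, price_per_label):,.0f}")
    print(f"per-hour total:  ${per_hour_cost(num_labels, labels_per_hour, hourly_rate):,.0f}")
    print(f"break-even price: ${break_even_price(labels_per_hour, hourly_rate):.3f} per label")
```

With these placeholder numbers the hourly model comes out cheaper, because the quoted per-label price sits above the break-even price. Real throughput varies with task complexity, so it is worth measuring it during a pilot before committing to either model.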

Integrations should extend beyond labeling workflows and connect directly to your machine learning pipeline, so annotated data flows straight into training and testing. Let’s put this into practice by profiling the best SuperAnnotate alternatives for data labeling.

Teams often overlook the critical impact of customizable workflow automation and seamless human-in-the-loop (HITL) integration on dataset quality and workflow efficiency. The ability to automate complex preprocessing, intelligent task routing, and dynamic QA triggers is paramount.
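One common way to implement task routing and QA triggers is confidence-based routing of model pre-labels. The sketch below is a generic pattern, not any vendor’s API; the queue names, the 0.9 and 0.5 thresholds, and the tightening rule are hypothetical choices you would tune on your own data.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PreLabel:
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route(
    pre_labels: List[PreLabel],
    accept_threshold: float = 0.9,
    review_threshold: float = 0.5,
) -> Dict[str, List[PreLabel]]:
    """Split model pre-labels into hypothetical HITL queues by confidence."""
    queues: Dict[str, List[PreLabel]] = {"auto_accept": [], "human_review": [], "relabel": []}
    for p in pre_labels:
        if p.confidence >= accept_threshold:
            queues["auto_accept"].append(p)   # trust the model, spot-check later
        elif p.confidence >= review_threshold:
            queues["human_review"].append(p)  # annotator confirms or corrects
        else:
            queues["relabel"].append(p)       # annotator labels from scratch
    return queues


def tighten_threshold(
    accept_threshold: float,
    rejection_rate: float,
    max_rejection_rate: float = 0.05,
    step: float = 0.02,
    ceiling: float = 0.99,
) -> float:
    """Dynamic QA trigger: raise the auto-accept bar when spot checks reject too much."""
    if rejection_rate > max_rejection_rate:
        return min(accept_threshold + step, ceiling)
    return accept_threshold


if __name__ == "__main__":
    batch = [
        PreLabel("img_a", "car", 0.97),
        PreLabel("img_b", "truck", 0.72),
        PreLabel("img_c", "bus", 0.31),
    ]
    for queue, items in route(batch).items():
        print(queue, [p.item_id for p in items])
    # If reviewers rejected 8% of auto-accepted labels, require more confidence next batch.
    print(tighten_threshold(0.9, rejection_rate=0.08))
```

The three-way split keeps expensive human time focused on uncertain items, while the feedback rule lets spot-check results adjust how much the pipeline trusts the model.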

The profiles below show which vendor best aligns with your ML workflow, budget, and compliance requirements.

Top SuperAnnotate Alternatives in 2025

SuperAnnotate is only one option in a crowded space. Competing platforms differ in focus: some prioritize automated data labeling, others emphasize managed services, and a few stand out for open-source flexibility.

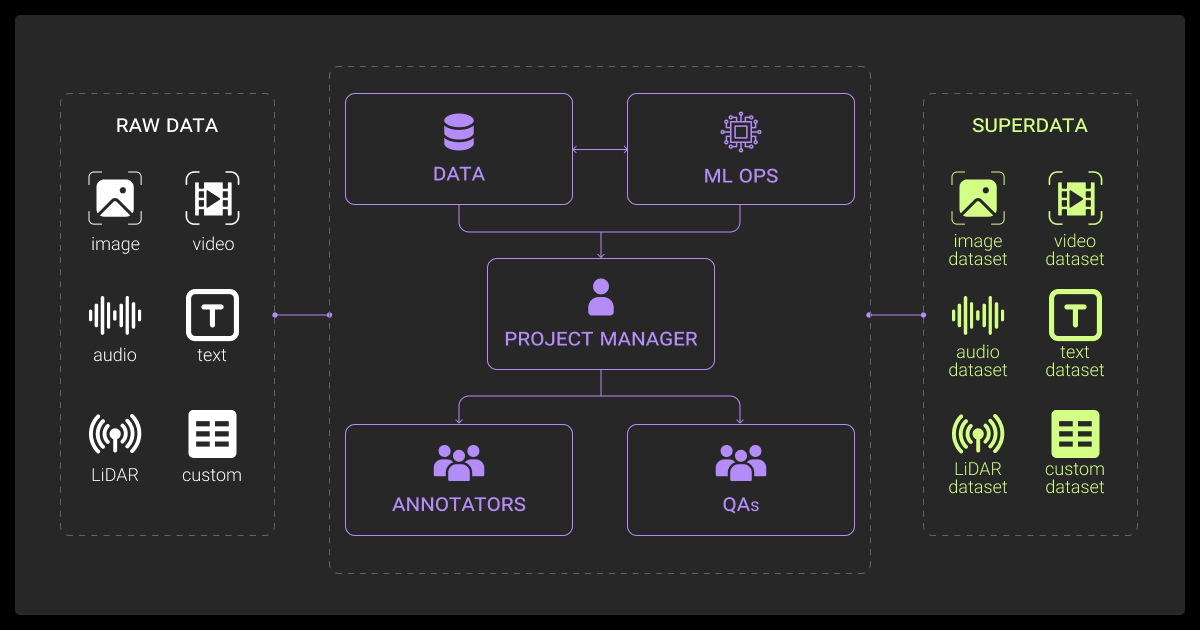

Label Your Data

Label Your Data combines a data annotation platform with a managed workforce. Unlike tool-first competitors, it emphasizes quality assurance and security alongside scalable services. The company supports all major data types (image, video, text, audio, 3D) and works across industries including healthcare, geospatial, academia, and autonomous systems. Here’s why Label Your Data is often considered the best SuperAnnotate competitor for labeling tasks:

Strengths:

- Hybrid model (platform + workforce) ensures consistency at scale

- Strong QA with review cycles and accuracy reporting

- Flexible engagement: pilot projects, enterprise contracts, or ongoing services

Limitations:

- Less self-serve tooling compared to tool-first vendors

- Not positioned as a low-cost marketplace solution

Best fit: Teams that need reliable annotation services with full QA support, especially in regulated industries or research environments.

V7 (Darwin)

V7 is a tool-first platform built around automation and computer vision. Its Auto-Annotate feature speeds up the creation of polygon masks and bounding boxes, and the platform supports frame interpolation for video. It also serves compliance-driven industries with DICOM handling for medical imaging.

Strengths:

- Strong automation for vision data

- Enterprise features: role-based QA, collaboration tools, and compliance support

- Good for large datasets with interpolation and object tracking

Limitations:

- Primarily focused on vision; less suited for multimodal projects

- Advanced features locked behind enterprise pricing

Best fit: Teams in healthcare, industrial vision, or robotics who need fast, accurate image/video labeling with compliance assurances.

Labelbox

Labelbox takes an API-first approach, targeting teams with engineering capacity and MLOps maturity. It provides a flexible SDK and API for integrating data labeling directly into ML workflows. Workforce support is optional, with the platform built mainly for teams that bring their own annotators.

Strengths:

- Deep API integration with a Python SDK for ML pipelines

- Customizable ontologies and strong data governance tools

- Suited for large teams that want to embed annotation into CI/CD pipelines

Limitations:

- Pricing can escalate quickly for high-volume labeling

- Requires in-house expertise to unlock full value

Best fit: Engineering-heavy teams with existing infrastructure that need maximum control and customization rather than managed services.

Encord

Encord is a data-centric platform designed for continuous learning and active learning workflows. It focuses on iterative feedback loops where model predictions improve labeling efficiency over time. It supports vision, video, and medical imaging, with tools to track data quality at scale.

Strengths:

- Built-in active learning and model-in-the-loop workflows

- Detailed data quality analytics for reducing drift

- Strong compliance support for healthcare and enterprise environments

Limitations:

- More complex setup compared to plug-and-play platforms

- Enterprise pricing models may be restrictive for smaller teams

Best fit: Teams experimenting with active learning pipelines and those who want to link annotation directly to model training and monitoring.
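Active learning is easiest to grasp through its selection step. The sketch below shows entropy-based uncertainty sampling, one common strategy: the model’s class probabilities for unlabeled items are scored, and the most uncertain items go to annotators first. It is a generic illustration, not Encord’s implementation or API, and the item IDs and probabilities are made up.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy (in nats) of one item's predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions: Dict[str, List[float]], budget: int) -> List[str]:
    """Return the `budget` item IDs whose predictions are most uncertain."""
    ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    return ranked[:budget]


if __name__ == "__main__":
    # Softmax outputs from the current model on unlabeled images (hypothetical).
    unlabeled = {
        "img_001": [0.98, 0.01, 0.01],  # confident: low labeling value
        "img_002": [0.40, 0.35, 0.25],  # near-uniform: most informative
        "img_003": [0.60, 0.30, 0.10],
    }
    print(select_for_labeling(unlabeled, budget=2))
```

Here the call returns `['img_002', 'img_003']`. In a full loop, those items are labeled, the model is retrained, and selection repeats on the remaining pool.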

Kili Technology

Kili Technology offers a tool-first collaborative platform with strong annotation workflows. It emphasizes team collaboration, QA, and pre-labeling automation across vision and text annotation tasks.

Strengths:

- Intuitive UI with collaboration features

- Built-in pre-labeling and model-assisted annotation

- Industry-specific tutorials (geospatial, NLP, document review)

Limitations:

- Less automation depth compared to V7 or Roboflow

- Higher cost tiers aimed at enterprise buyers

Best fit: Enterprise teams balancing automation with human QA, particularly where collaboration and compliance are critical.

The feature that often gets overlooked is the depth of collaborative annotation tools. Real-time comment threads, version history, and integrated QA workflows had a massive impact on dataset consistency. Export flexibility also reduced our preprocessing time by roughly 25%.

CVAT

CVAT is a widely used open-source annotation tool originally developed at Intel. It supports images, video, and 3D data, and lets you integrate custom models for auto-labeling. Because it’s open source, it’s free to self-host but requires engineering effort for setup and scaling.

Strengths:

- No licensing fees; fully open source

- Highly flexible with plugin and BYOM (bring-your-own-model) support

- Strong community backing and wide format compatibility

Limitations:

- Requires infrastructure setup and maintenance

- No managed workforce or enterprise support out of the box

Best fit: Cost-sensitive teams with technical capacity to self-manage and customize open-source pipelines.

Dataloop

Dataloop positions itself as a tool-first platform with automation features for large-scale pipelines. It supports image, video, and text annotation and integrates with cloud providers to handle big workloads.

Strengths:

- Automated workflows for scaling pipelines

- Strong integrations with cloud storage and ML systems

- Useful for enterprise teams managing high annotation throughput

Limitations:

- Less emphasis on human workforce support

- Pricing may be high for smaller teams

Best fit: Teams managing large-scale vision and video annotation projects where automation and integration with cloud-based ML stacks are priorities.

Amazon SageMaker Ground Truth

Ground Truth is an AWS-native data labeling service. It can send tasks to Amazon Mechanical Turk, to third-party vendor workforces available through AWS Marketplace, or to your own private workforce, giving fast access to annotators. It supports multiple modalities and integrates directly with SageMaker for model training.

Strengths:

- Direct integration with the AWS ecosystem

- Access to a large on-demand workforce via Mechanical Turk

- Scales well with other SageMaker services for training and deployment

Limitations:

- Vendor lock-in to AWS

- Quality can vary with Mechanical Turk annotators unless managed carefully

Best fit: Teams already invested in AWS infrastructure that want annotation tied closely to SageMaker workflows.
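A common way to manage the quality variance of crowd annotators is to collect several labels per item and consolidate them. The sketch below uses plain majority voting with an agreement threshold; it is a generic technique, not Ground Truth’s built-in annotation consolidation, and the 0.6 threshold and item IDs are hypothetical.

```python
from collections import Counter
from typing import List, Tuple


def consolidate(votes: List[str], min_agreement: float = 0.6) -> Tuple[str, float, bool]:
    """Majority vote over one item's crowd labels.

    Returns (winning label, agreement ratio, needs_expert_review).
    Ties go to the label that appears first in `votes`.
    """
    if not votes:
        raise ValueError("cannot consolidate an item with no votes")
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    return label, agreement, agreement < min_agreement


if __name__ == "__main__":
    crowd_labels = {
        "img_10": ["car", "car", "truck"],  # 2 of 3 agree
        "img_11": ["car", "truck", "bus"],  # no consensus
    }
    for item_id, votes in crowd_labels.items():
        label, agreement, needs_review = consolidate(votes)
        print(item_id, label, round(agreement, 2), "review" if needs_review else "accept")
```

Items that fall below the agreement threshold are natural candidates for a second pass by a trusted reviewer rather than being accepted as-is.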

Roboflow

Roboflow is a computer vision–focused platform built for fast preparation of machine learning datasets and model training. It’s popular with startups and smaller teams because of its ease of use, strong auto-annotation features, and free tier for prototyping.

Strengths:

- Auto-labeling with models like SAM and Grounding DINO

- End-to-end workflow: upload, annotate, train, deploy

- Community-driven with many open datasets available

Limitations:

- Limited beyond computer vision

- Advanced features require paid plans

Best fit: Startups or research teams iterating quickly on vision projects that need rapid prototyping and minimal setup.

Vertex AI

Vertex AI is Google Cloud’s end-to-end ML platform, offering dataset labeling as part of its managed services. It ties directly into Google’s AI workflows, including AutoML, BigQuery, and MLOps tools.

Strengths:

- Deep integration with Google Cloud ecosystem

- Supports multimodal labeling (images, text, video)

- Enterprise-ready with compliance and scaling features

Limitations:

- Vendor lock-in to GCP

- Best features require broader adoption of Google Cloud tools

Best fit: Teams already building on Google Cloud that want labeling tightly integrated with training, deployment, and monitoring.

What I notice most is how support teams treat you once the contract is signed. Some platforms check in only when you raise a ticket, while the best ones proactively flag labeling issues and suggest workflow tweaks. That support translates into higher dataset quality because errors get caught early.

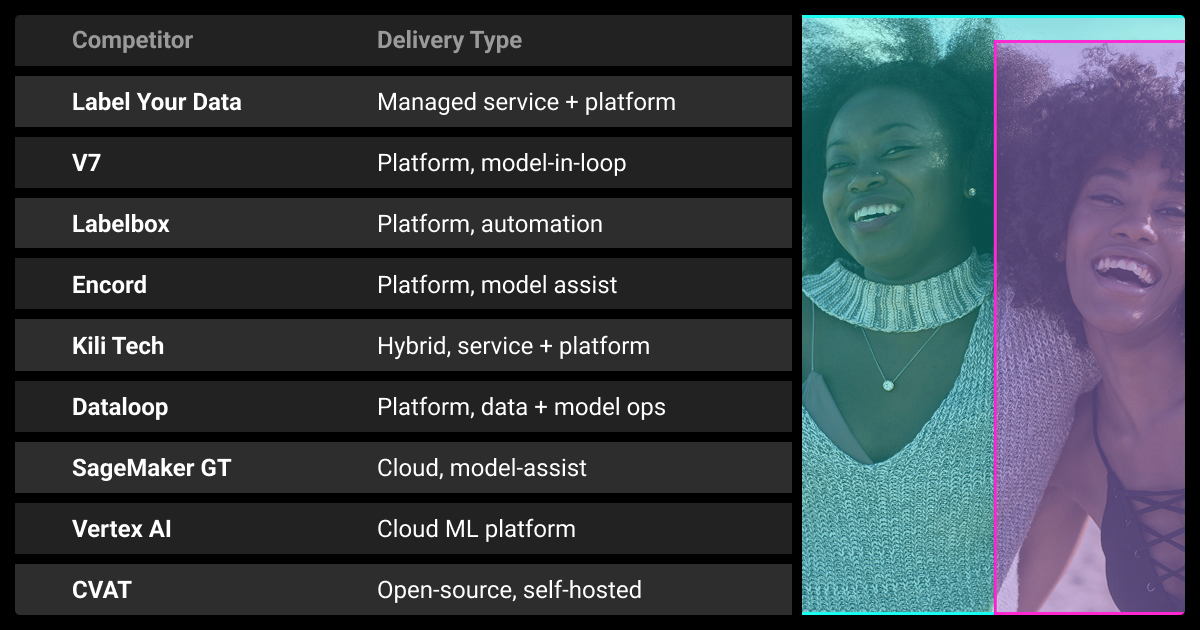

Comparing SuperAnnotate Competitors Side-by-Side

A side-by-side view shows how these platforms differ in focus, supported data types, and ideal use cases.

| Company | Focus (Tool / Hybrid / OSS) | Modalities Supported | Best For |
|---|---|---|---|
| Label Your Data | Hybrid (workforce + platform) | Image, video, text, audio, 3D | Teams needing managed services with strong QA and security |
| V7 (Darwin) | Tool-first | Image, video, medical | Compliance-heavy industries (healthcare, industrial vision, robotics) |
| Labelbox | API-first tool | Image, text, video | Engineering-led teams with MLOps maturity |
| Encord | Tool-first with active learning | Image, video, medical | Teams experimenting with continuous and active learning pipelines |
| Kili Technology | Tool-first | Image, text, documents | Enterprise teams focused on collaboration and QA |
| CVAT | Open-source | Image, video, 3D | Cost-sensitive teams with technical expertise |
| Dataloop | Tool-first | Image, video, text | Large-scale pipelines with cloud-based integration |
| Amazon SageMaker Ground Truth | Hybrid (tool + workforce via AWS) | Image, text, video, 3D | Teams in AWS ecosystem needing workforce access |
| Roboflow | Tool-first | Image (vision-focused) | Startups and researchers needing fast prototyping |
| Vertex AI | Cloud-native | Image, text, video | Teams building on Google Cloud for full AI lifecycle integration |

Use this table to benchmark tools by fit, not just by features. Share it with your team to shortlist platforms that match your project’s infrastructure, workload type, and scale requirements before running a pilot.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance with a free trial

- Pay per labeled object or per annotation hour

- Work with any annotation tool, including your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

What is SuperAnnotate best for?

SuperAnnotate is best for computer vision annotation projects where automation and workflow management matter most. It’s strong in bounding boxes, segmentation, and video annotation, with auto-labeling and a clean UI for teams managing image-heavy pipelines.

Who are the main competitors to SuperAnnotate?

The main SuperAnnotate competitors include Label Your Data, V7, Labelbox, Encord, Kili Technology, CVAT, Dataloop, Amazon SageMaker Ground Truth, Roboflow, and Vertex AI. Each offers different trade-offs in workforce support, automation, and pricing.

Is there a free alternative to SuperAnnotate?

Yes. Open-source tools like CVAT and Label Studio are free to use, though they require in-house engineering support for setup, scaling, and QA. They’re popular among research teams and startups with technical capacity but limited budgets.

Which is better, Labelbox or SuperAnnotate?

Labelbox is better suited for engineering-heavy teams that want API-first integration and already manage their own workforce. SuperAnnotate is stronger for teams that prioritize a ready-to-use tool with built-in automation for computer vision projects.

Can open-source tools replace SuperAnnotate?

They can, but only if your team has the resources to maintain them. CVAT and Label Studio offer strong computer vision annotation capabilities, but their free self-hosted editions lack the enterprise-grade compliance guarantees, managed workforce options, and advanced QA features found in commercial platforms.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.