Transfer Learning: Enhancing Models with Pretrained Data

Table of Contents

- TL;DR

- What Is Transfer Learning?

- How Transfer Learning Works

- Key Components of Transfer Learning

- Applications of Transfer Learning

- Implementing Transfer Learning: Step-by-Step Guide

- Challenges and Common Pitfalls in Transfer Learning

- Best Practices for Successful Transfer Learning

- Next Steps: Getting Started with Transfer Learning

- About Label Your Data

- FAQ

TL;DR

- Transfer learning reuses pretrained models to solve new, related tasks faster and with less data.

- It works by freezing general layers, replacing the output layer, and fine-tuning the model.

- Common use cases include image classification, text analysis, and specialized fields like medical imaging.

- Challenges include negative transfer, overfitting on small datasets, and computational demands.

- Follow best practices: choose the right model, adjust layers carefully, and validate performance.

What Is Transfer Learning?

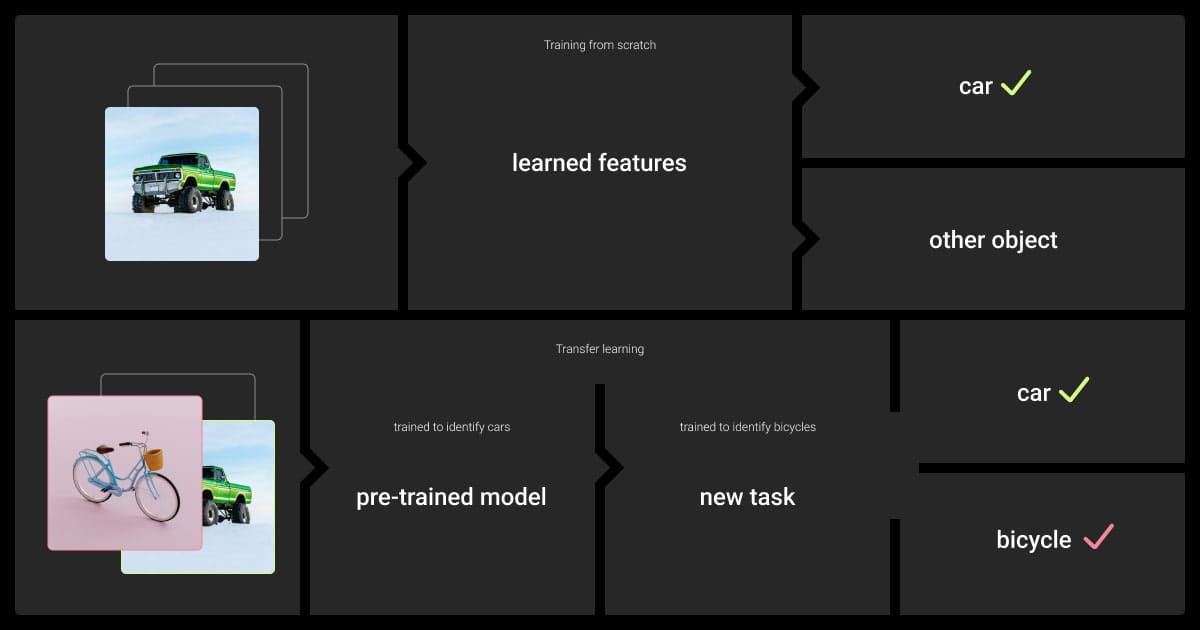

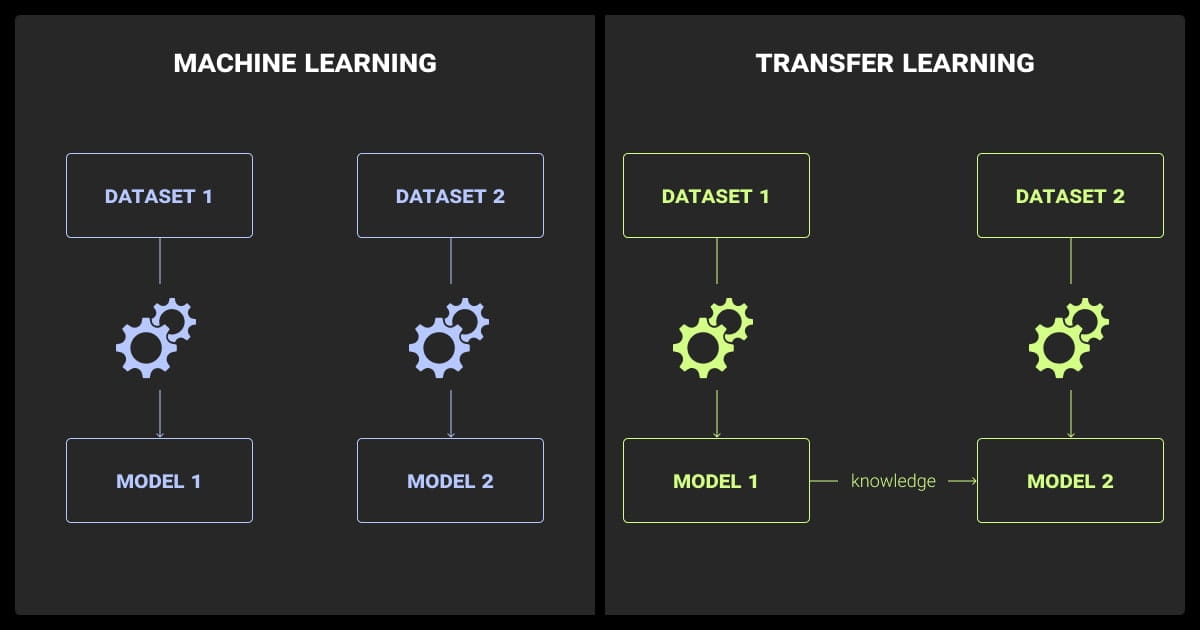

Transfer learning means using a machine learning model trained for one task to help with another, similar task. Instead of building a new model from scratch, you start with one that already knows useful patterns. For example, a model trained to recognize animals in photos can be adapted to identify different dog breeds.

Rather than training a model from randomly initialized weights, as a traditional machine learning workflow would, you reuse what a pretrained model has already learned, so it can take on the new task more efficiently. Transfer learning saves time and works well when you don’t have a lot of labeled data.

Why Use Transfer Learning?

Now that you know what transfer learning is, here are three main reasons to use it:

- It’s faster: Starting with a pretrained model reduces training time.

- It needs less data: It works well with small datasets.

- It often gives better results: Features learned from large datasets usually help the model handle the new task more accurately than a model trained from scratch on limited data.

Transfer Learning Example

Imagine you want to build a model to identify bird species, but only have a few hundred photos. You can start with a pretrained image model like ResNet, which is trained on ImageNet. Replace its last layer with one tailored to your bird categories, then train the updated model on your bird images. This way, you get a good-performing model without needing a huge dataset or lots of time.

How Transfer Learning Works

Transfer learning works by reusing parts of a model that’s already trained. It takes what the model has learned, such as patterns, shapes, or language structures, and applies it to a new task.

For instance, a model designed to classify objects in images can be modified to identify particular vehicle types.

The Basics

Here’s how transfer learning usually works:

Choose a Pretrained Model

Pick one trained on a task similar to yours (e.g., ResNet for image tasks or BERT for text tasks).

Freeze Layers

Keep early layers unchanged to retain general knowledge.

Modify the Output Layer

Replace the last layer with one designed for your task.

Fine-Tune

Train the updated model on your new data to improve performance.

Consider a pretrained model’s adaptability to your specific use case. It's not just about picking the most popular model; it's about finding one that aligns with your business goals and can be fine-tuned to meet your unique requirements.

Frozen vs. Trainable Layers

In transfer learning, some layers stay the same, while others are updated:

- Frozen Layers: Early layers that detect basic features, like edges or textures in images.

- Trainable Layers: Later layers are adjusted to fit the specific patterns of your new task.

This setup allows the model to adapt quickly without needing to relearn everything.

An Example of Fine-Tuning

Say you’re building a model to detect diseases in X-rays:

- Start with a pretrained model like ResNet, trained on ImageNet to recognize general visual features such as shapes.

- Freeze the first layers. These detect general features like edges and patterns.

- Replace the last layer to classify "healthy" or "diseased."

- Train the model on your X-ray dataset for a few more steps to fine-tune it.

This way, the model uses its existing knowledge while learning to focus on your specific task.

Key Components of Transfer Learning

Transfer learning relies on specific parts of a model and its workflow to adapt to new tasks. Understanding these components helps you choose the right approach for your project.

Pretrained Models

Pretrained models are at the heart of transfer learning. These are machine learning models trained on large datasets to identify patterns, such as shapes in images or word relationships in text.

Some popular pretrained models include:

It’s easy to get drawn to big names or models with state-of-the-art accuracy, but I've learned that a model’s ability to generalize across contexts is often more important.

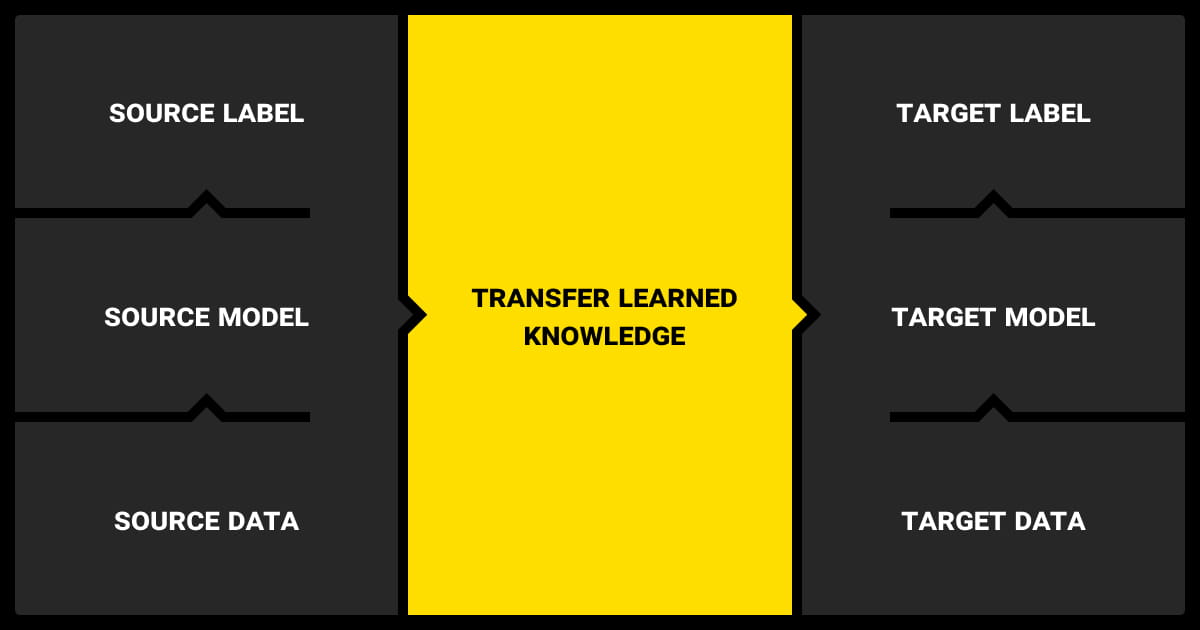

Source Task vs. Target Task

Transfer learning works by moving knowledge from a source task to a target task:

- Source Task: The problem the model was originally trained to solve. Example: Identifying objects in ImageNet.

- Target Task: The new problem you want to solve. Example: Identifying specific car models.

The closer the two tasks are, the better transfer learning usually performs.

Frozen and Trainable Layers

Neural networks in transfer learning have two types of layers:

- Frozen Layers: These retain general knowledge, like recognizing edges or shapes, and remain unchanged during training.

- Trainable Layers: These adapt to learn task-specific details, such as identifying dog breeds.

By freezing some layers, you save time and prevent the model from relearning basic patterns.

Choosing the Right Model

The success of transfer learning depends on the pretrained model you select. Consider:

Domain Similarity

Pick a model pretrained on data similar to yours. For example, if your task is image-related, use a model like ResNet trained on ImageNet.

Model Size

Larger models like GPT-3 need more computational power but offer broader capabilities.

Adaptability

Choose a model that can be fine-tuned for your specific data.

Applications of Transfer Learning

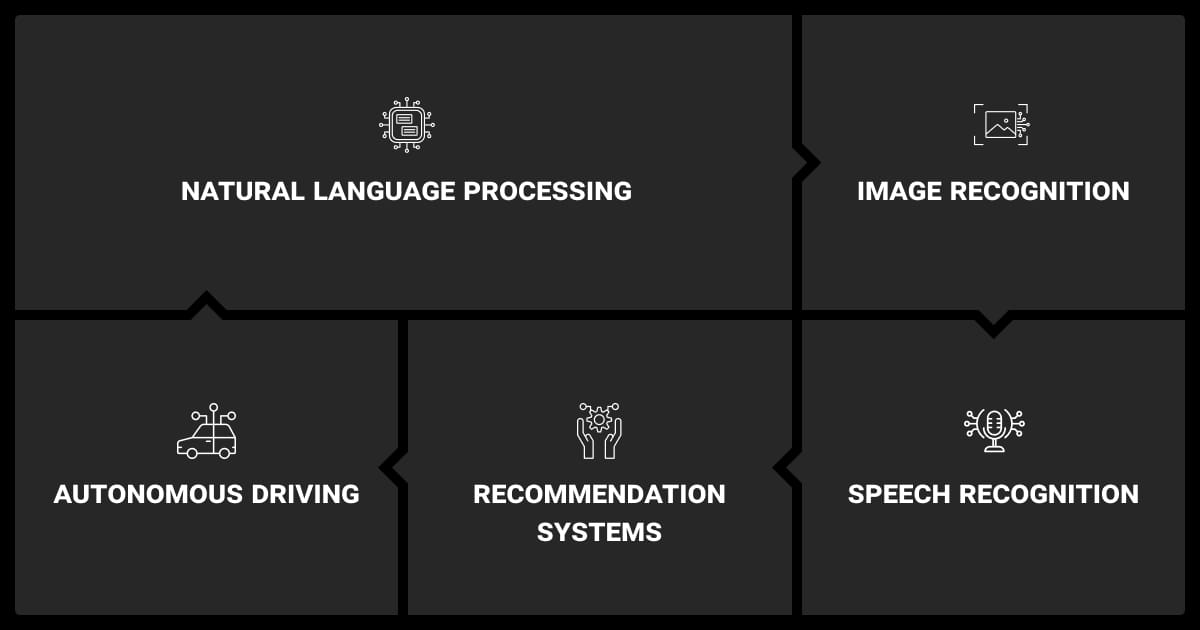

Transfer learning is widely used because it saves time and works well across different fields. Below are some of the most common applications.

Computer Vision

Transfer learning is common in image-related tasks. Pretrained models like ResNet or Inception are used for:

- Object detection (e.g., vehicles in traffic).

- Image classification (e.g., medical X-rays as "healthy" or "diseased").

- Facial recognition (e.g., in surveillance systems).

Image recognition tasks, such as classifying objects in photos, can leverage pretrained models for faster and more accurate results. Similarly, OCR deep learning models, when fine-tuned using transfer learning, can enhance accuracy in text extraction from complex documents.

Natural Language Processing (NLP)

Models like BERT and GPT are pretrained on large text corpora and adapted for tasks like:

- Sentiment analysis: Classify text as positive or negative.

- Text summarization: Create concise summaries of articles.

- Language translation: Convert text from one language to another.

Transfer learning supports different types of LLMs, such as BERT and GPT, by adapting them for specific language tasks.
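As a sketch with the Hugging Face `transformers` library (assumed installed), the following loads BERT with a fresh two-label head for sentiment analysis and optionally freezes the pretrained encoder so only the head trains. Freezing the encoder is a cheap variant; fully fine-tuning BERT is also common. The checkpoint name `bert-base-uncased` and the helper names are illustrative choices.

```python
from transformers import AutoModelForSequenceClassification


def freeze_encoder(model):
    """Freeze the pretrained transformer body; the classification head stays trainable."""
    for param in model.base_model.parameters():
        param.requires_grad = False
    return model


def load_sentiment_model(checkpoint: str = "bert-base-uncased", freeze: bool = True):
    # num_labels=2 attaches a newly initialized positive/negative head.
    model = AutoModelForSequenceClassification.from_pretrained(checkpoint, num_labels=2)
    return freeze_encoder(model) if freeze else model
```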

Specialized Domains

Transfer learning is also used in niche areas like:

- Healthcare: Diagnose diseases from medical scans or patient data.

- Autonomous Vehicles: Identify road signs or obstacles.

- Environmental Monitoring: Track changes in forests using satellite images.

For instance, transfer learning can adapt models trained on financial datasets to tasks like fraud detection or risk analysis. It can also improve automatic speech recognition by adapting general-purpose speech models to specific accents or environments.

Advanced Applications

Cross-Modal Transfer Learning

Combine knowledge from different types of data (e.g., text and images). Example: Generate an image based on a text description.

Domain Adaptation

Apply a model trained on one dataset to another with slightly different characteristics. For example, use a model trained on U.S. driving scenes for European roads.

Few-Shot Learning

Fine-tune a model to perform well with very few labeled examples. This is useful for rare events or uncommon datasets.
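A common few-shot baseline skips gradient updates altogether: embed the handful of labeled examples with a frozen pretrained model, average them into one centroid per class, and label new inputs by the nearest centroid. This is a swapped-in technique (nearest-centroid, or prototype, classification) rather than fine-tuning, and the plain-Python sketch below assumes the embeddings were already computed; the 2-D vectors are toy values.

```python
from math import dist


def class_centroids(support: dict) -> dict:
    """Average the embeddings of each class's few labeled examples."""
    centroids = {}
    for label, vectors in support.items():
        n = len(vectors)
        centroids[label] = [sum(component) / n for component in zip(*vectors)]
    return centroids


def predict(query: list, centroids: dict) -> str:
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(query, centroids[label]))


# Toy embeddings standing in for the output of a frozen pretrained encoder.
support = {
    "rare_event": [[0.9, 0.1], [1.0, 0.2]],
    "normal": [[0.1, 0.9], [0.2, 1.0]],
}
centroids = class_centroids(support)
print(predict([0.8, 0.3], centroids))  # prints rare_event
```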

Implementing Transfer Learning: Step-by-Step Guide

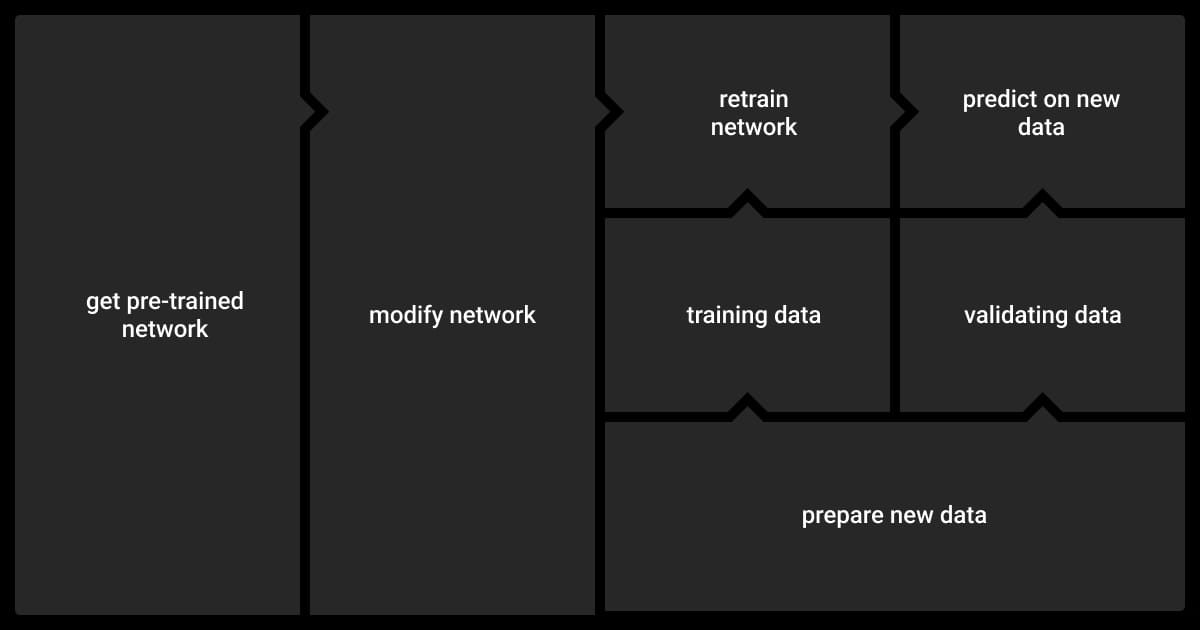

Transfer learning is easier to implement when broken into manageable steps. Here’s how you can do it:

Step 1: Choose a Pretrained Model

Select a model trained on data similar to your task. For images, use models like ResNet or Inception. For text, choose models like BERT or GPT.

Step 2: Load the Pretrained Model

Load the model architecture and weights. Make sure your inputs match what the model expects (e.g., image size and normalization for vision models, or the model’s own tokenizer for text).

Step 3: Freeze Early Layers

Keep the initial layers unchanged to retain the model's general knowledge. These layers often detect basic patterns, like edges in images or common word relationships in text.

Step 4: Add Custom Layers

Replace the final layer with a new one designed for your specific task, such as adding a classification layer for new categories.

Step 5: Compile and Train the Model

Train the updated model on your data. Use a relatively low learning rate so that any pretrained layers being updated are adjusted gently rather than overwritten.

Step 6: Fine-Tune if Needed

If performance is unsatisfactory, unfreeze additional layers and retrain with a small learning rate to improve the model’s accuracy.

Practical Tips

- Prepare your data: Match the format required by the pretrained model.

- Monitor overfitting: Use validation data to track the model’s performance.

- Start with few epochs: Check results before running longer training cycles.

- Use labeled datasets from a reliable data annotation company for better results.
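Monitoring overfitting usually means watching validation loss and stopping once it stops improving. A framework-agnostic sketch in plain Python; `patience` and `min_delta` are illustrative defaults, and the loss values in the example are made up.

```python
class EarlyStopping:
    """Signal a stop once validation loss hasn't improved for `patience` epochs."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        if val_loss < self.best - self.min_delta:
            self.best = val_loss  # new best: reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


stopper = EarlyStopping(patience=2)
for epoch, val_loss in enumerate([0.90, 0.70, 0.72, 0.71, 0.50]):
    if stopper.step(val_loss):
        print(f"stopping after epoch {epoch}, best val loss {stopper.best}")
        break
```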

Challenges and Common Pitfalls in Transfer Learning

Transfer learning provides many advantages, but it also presents some challenges. Missteps can lead to poor performance or wasted effort. Here are the common pitfalls to watch out for:

Negative Transfer

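Negative transfer happens when knowledge from the pretrained model doesn’t fit the new task and ends up hurting performance, sometimes below what a model trained from scratch would reach. To reduce the risk, select a pretrained model that was trained on data similar to your target task.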

Overfitting on Small Datasets

When the new dataset is too small, the model might memorize it instead of learning general patterns. Freeze more layers or use data augmentation to artificially expand your dataset.

Dataset Mismatch

Transfer learning works best when the source and target datasets share similarities. For example, a model pretrained on high-resolution color photos may struggle with low-resolution or grayscale images. To fix this, preprocess the new dataset to align with the pretrained model’s requirements.

Misusing Frozen Layers

Freezing too few layers can cause the model to relearn basic patterns unnecessarily, while freezing too many can limit adaptation. Experiment with freezing different layers and validate the results.
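The experiment can be organized as a small sweep: train once per candidate freezing depth and keep the depth with the best validation score. In this plain-Python sketch, `train_and_validate` is a hypothetical callback you would supply (freeze the first `depth` blocks, train, return a validation metric), and the scores in the example are made up.

```python
from typing import Callable, Dict, Iterable, Tuple


def choose_freeze_depth(
    depths: Iterable[int],
    train_and_validate: Callable[[int], float],
) -> Tuple[int, Dict[int, float]]:
    """Return the depth with the highest validation score, plus all scores."""
    scores = {depth: train_and_validate(depth) for depth in depths}
    best_depth = max(scores, key=scores.get)
    return best_depth, scores


# Made-up validation accuracies standing in for real training runs.
fake_results = {0: 0.71, 2: 0.78, 4: 0.74, 6: 0.69}
best, scores = choose_freeze_depth(fake_results.keys(), fake_results.__getitem__)
print(best)  # prints 2
```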

Computational Demands

Some pretrained models are large and require significant resources to fine-tune, especially on high-resolution data. Use smaller or more efficient models, like MobileNet, if hardware or time is limited.

Dependence on High-Quality Data

Transfer learning heavily relies on well-labeled datasets to fine-tune pretrained models. Understanding data annotation pricing helps you budget effectively and get the quality annotations needed for successful transfer learning.

The critical consideration that most engineers overlook is what I call 'Performance-to-Complexity Transfer Ratio'—how efficiently a pretrained model can be adapted to your specific domain without introducing excessive computational overhead.

Best Practices for Successful Transfer Learning

To get the most out of transfer learning, follow these proven strategies. They’ll help you avoid common pitfalls and optimize your model’s performance.

| Strategy | Action | Why It Matters |
|---|---|---|
| Choose a Model | Pick one trained on similar data. | Ensures compatibility and faster setup. |
| Freeze Layers | Keep early layers frozen; fine-tune later. | Balances generalization and task adaptation. |
| Lower Learning Rate | Use a small learning rate for fine-tuning. | Preserves pretrained knowledge. |
| Prep Your Data | Match model input format, use augmentation. | Avoids errors and boosts performance on small datasets. |
| Test Incrementally | Start with a few epochs before fine-tuning. | Saves time by identifying setup issues early. |
| Validate & Iterate | Use validation data; try different models. | Improves performance and task fit. |

Transfer learning relies heavily on high-quality data annotation to fine-tune models for specific tasks. Choosing reliable data annotation services ensures better results.

Next Steps: Getting Started with Transfer Learning

If you’re ready to try transfer learning, here’s how you can begin:

- Choose frameworks like TensorFlow, PyTorch, or Hugging Face for pretrained models and tools.

- Start small with tasks like image classification or text analysis using lightweight models like MobileNet.

- Use datasets such as ImageNet and COCO, or datasets hosted on Kaggle, to practice fine-tuning techniques.

- Test multiple pretrained models and monitor metrics like accuracy and loss to improve results.

- Explore tutorials, blogs, and communities like Stack Overflow or GitHub for guidance and troubleshooting.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance based on a free trial

- Pay per labeled object or per annotation hour

- Working with every annotation tool, even your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

What is meant by transfer of learning?

Transfer of learning refers to the process where knowledge or skills gained in one context are applied to another context. In machine learning, this means using a pretrained model from one task to improve performance on a new, related task.

What is the difference between CNN and transfer learning?

A Convolutional Neural Network (CNN) is a type of deep learning algorithm designed for tasks like image recognition. Transfer learning, on the other hand, is a method where a pretrained model (which could be a CNN) is adapted to a new task. In short, a CNN is a tool, while transfer learning is a technique that can use CNNs.

What is another word for transfer learning?

Another term for transfer learning is knowledge transfer. This emphasizes the reuse of knowledge from one model or task to another.

How does transfer learning benefit small datasets?

Transfer learning helps when labeled data is limited. Pretrained models already know key features, so they work well even with smaller datasets.

What is fine-tuning in the context of transfer learning?

Fine-tuning adjusts a pretrained model by training it further on new data so it better fits the target task. You can update only some layers or the entire model.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.