Transfer Learning vs Fine Tuning: Key Differences for ML Engineers

Table of Contents

- TL;DR

- Why Getting Transfer Learning vs Fine Tuning Right Saves Training Time

- Feature Extraction vs Fine-Tuning: Core Differences

- Fine Tuning vs Transfer Learning: Accuracy, Data, Compute Trade-offs

- How to Decide Between Transfer Learning vs Fine Tuning

- Implementation Tips and Pitfalls in Transfer Learning and Fine-Tuning

- About Label Your Data

- FAQ

- What is the difference between transfer learning and fine-tuning?

- What is the difference between fine-tuning and learning?

- What is the difference between fine-tuning and feature extraction in transfer learning?

- What are the five types of transfer learning?

- How does fine-tuning differ for LLMs and smaller models?

TL;DR

- Transfer learning reuses pre-trained models to save training time and compute.

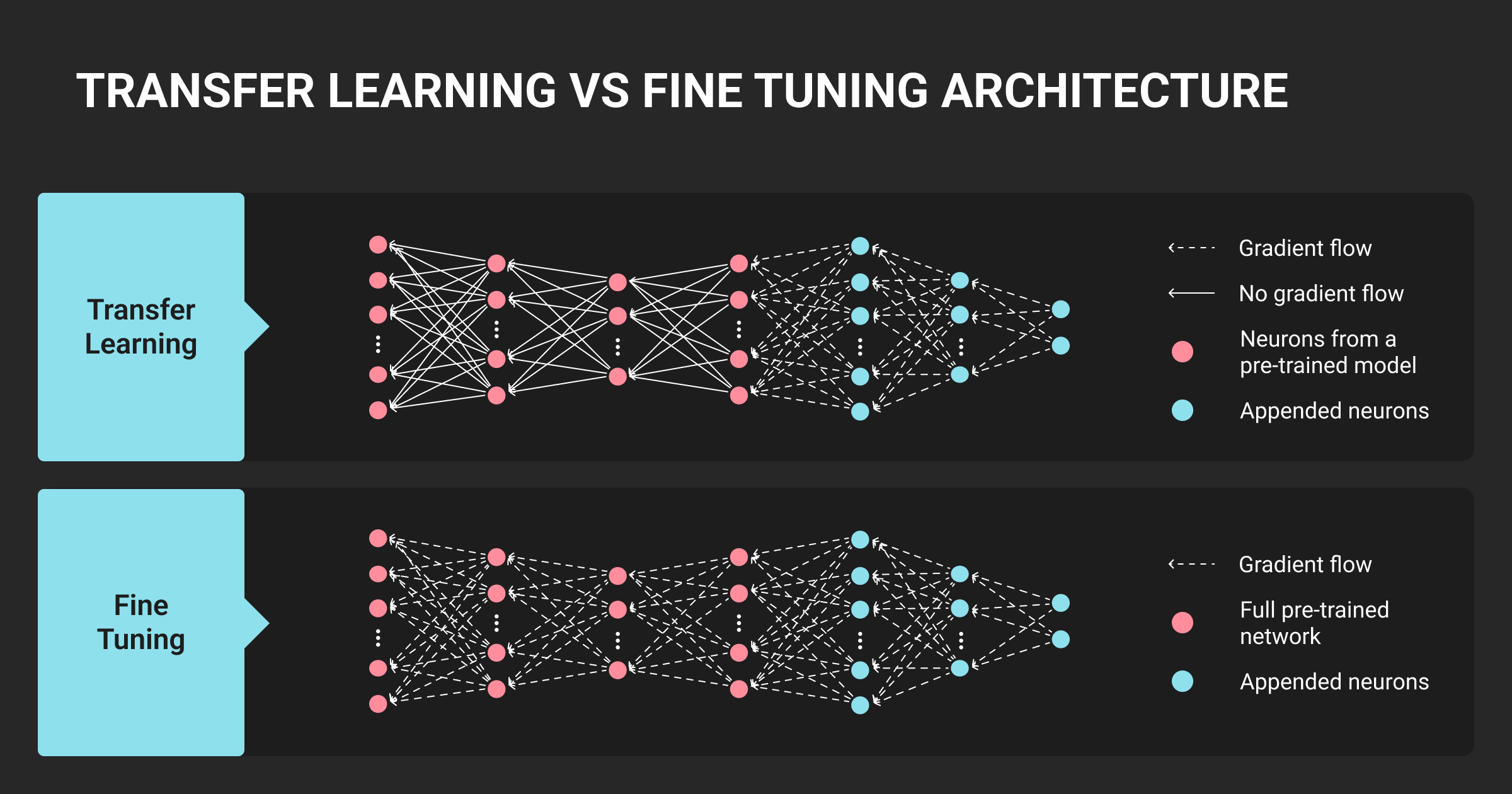

- Feature extraction freezes most layers and trains only the output head – fast, low-cost, ideal for small or related datasets.

- Fine-tuning unfreezes top layers and continues training for domain-specific adaptation – higher accuracy but more compute.

- The right method depends on data size, task similarity, and resources.

- Start simple, measure, fine-tune when needed, and optimize before scaling.

Why Getting Transfer Learning vs Fine Tuning Right Saves Training Time

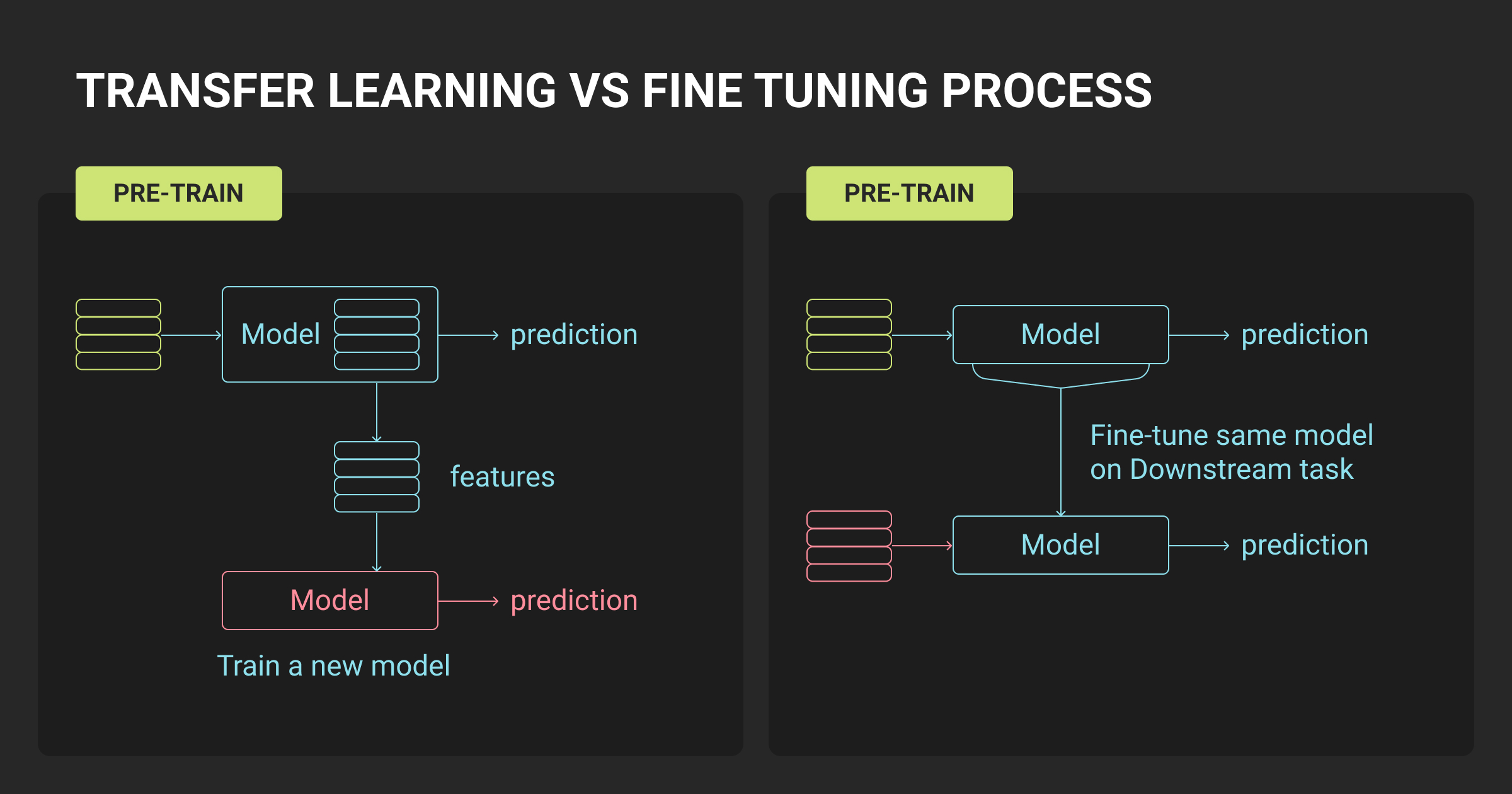

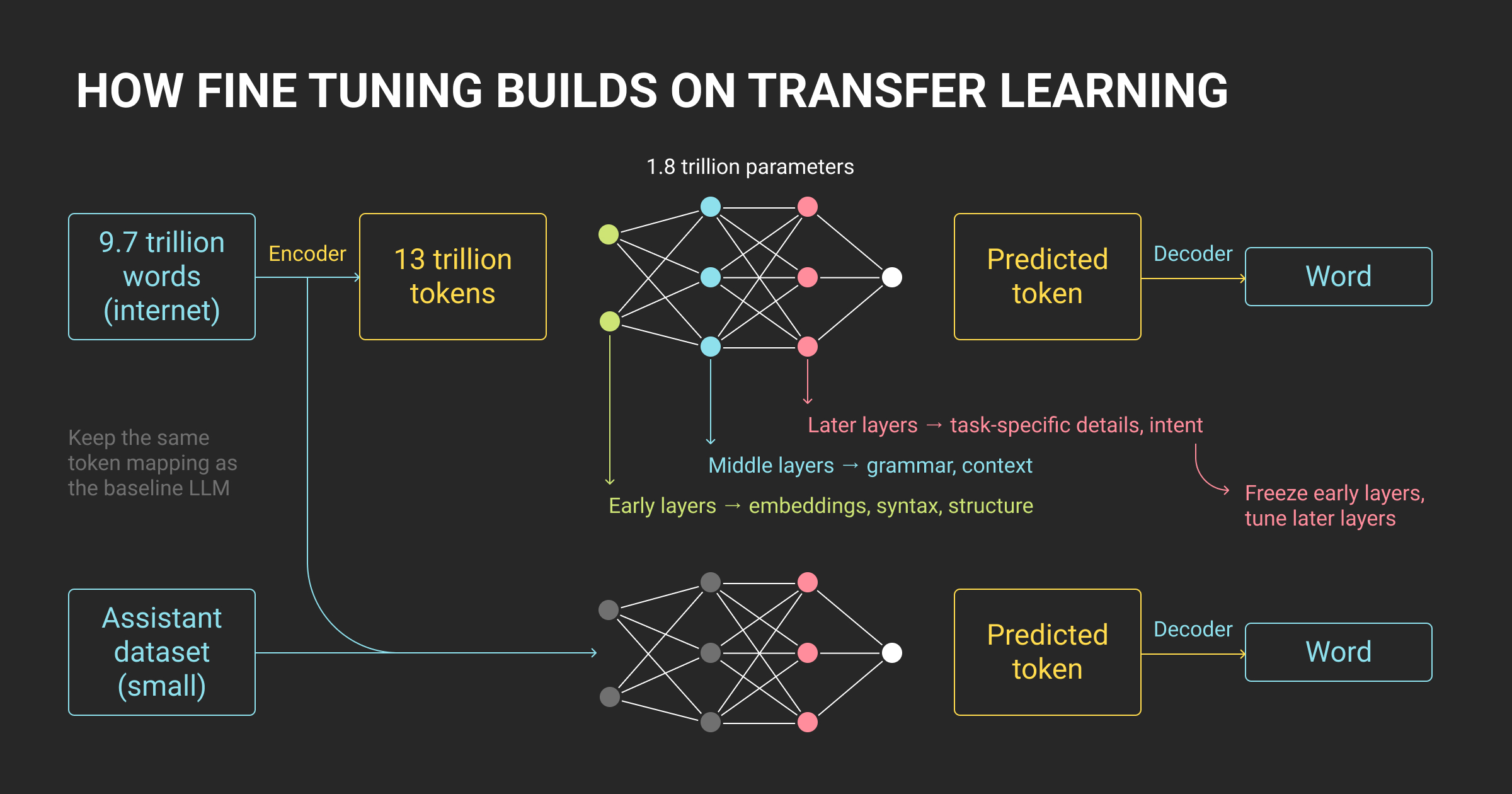

Transfer learning is a framework for reusing what a model learned on one task to solve another. Fine-tuning is a specific technique within that framework. In both cases, the goal is to adapt a pre-trained model to a new task without training from scratch.

In most computer vision, NLP, and speech recognition pipelines, training from scratch is inefficient. Transfer learning allows ML engineers to build on large foundation models trained on diverse datasets, while fine-tuning adjusts part of that model to match new data.

The main difference is how much of the model you retrain:

- Feature extraction freezes most of the base model and trains only the final classifier layer

- Fine-tuning unfreezes some upper layers and continues training with a smaller learning rate
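To make the contrast concrete, here is a minimal, framework-free sketch. The layer names and parameter counts are hypothetical and not taken from any real architecture; the only point is to show which parts of the model receive updates under each strategy.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    n_params: int
    trainable: bool = True


def build_model():
    # Hypothetical pre-trained backbone (4 blocks) plus a new classifier head.
    return [
        Layer("block1", 100_000),
        Layer("block2", 200_000),
        Layer("block3", 400_000),
        Layer("block4", 800_000),
        Layer("head", 5_000),
    ]


def feature_extraction(layers):
    """Freeze every backbone block; only the head stays trainable."""
    for layer in layers:
        layer.trainable = layer.name == "head"
    return layers


def fine_tuning(layers, n_unfrozen_blocks=1):
    """Keep the head trainable and unfreeze the top n backbone blocks."""
    backbone = [layer for layer in layers if layer.name != "head"]
    top_blocks = backbone[-n_unfrozen_blocks:] if n_unfrozen_blocks > 0 else []
    unfrozen = {layer.name for layer in top_blocks}
    for layer in layers:
        layer.trainable = layer.name == "head" or layer.name in unfrozen
    return layers


def trainable_fraction(layers):
    total = sum(layer.n_params for layer in layers)
    trainable = sum(layer.n_params for layer in layers if layer.trainable)
    return trainable / total


for label, model in [
    ("feature extraction", feature_extraction(build_model())),
    ("fine-tuning (top block)", fine_tuning(build_model(), n_unfrozen_blocks=1)),
]:
    print(f"{label}: {trainable_fraction(model):.2%} of parameters trainable")
```

With these made-up sizes, feature extraction updates well under one percent of the parameters, while unfreezing a single top block already puts roughly half of them in play. That gap is where the difference in compute cost comes from.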

Choosing between feature extraction and fine-tuning affects compute cost, training time, and accuracy. Feature extraction gives quick, low-cost results. Fine-tuning achieves higher accuracy when you have enough data and GPU resources.

In short, transfer learning provides the foundation; feature extraction delivers the baseline; fine-tuning pushes performance further when conditions allow.

Feature Extraction vs Fine-Tuning: Core Differences

Feature extraction and fine-tuning differ mainly in how much of a pre-trained model you modify. Both use existing model weights, but the level of retraining determines accuracy, speed, and resource demands.

Feature extraction is best when your dataset is small or closely related to the model’s original domain. Fine-tuning works better when the target data diverges from the base model’s training set, and you can afford longer training cycles.

| Aspect | Feature Extraction | Fine-Tuning |
|---|---|---|
| Training Scope | Freeze base layers; train classifier head only | Unfreeze upper blocks and head for continued training |
| Data Needs | Small to moderate datasets | Larger datasets required for stability |
| Compute Demand | Low | High (GPU-intensive) |
| Adaptability | Limited to similar domains | Handles domain shifts effectively |
| Overfitting Risk | Low | Higher if data is small or unbalanced |

Both methods depend on high-quality labeled datasets. That’s why it’s a common practice for ML teams to pair model adaptation with reliable data annotation services from Label Your Data or use our self-serve data annotation platform to manage training data at scale.

Feature extraction

Feature extraction uses the pre-trained model as a fixed feature generator. You train a new output layer for your specific labels. This method is efficient, reduces overfitting, and works well for visual tasks such as image classification where new data shares patterns with ImageNet or similar corpora. However, its adaptability is limited. If your target data diverges significantly, the frozen layers may fail to capture new patterns.

Fine-tuning

Fine-tuning lets the model update its higher-level representations rather than treating them as fixed. By unfreezing the top layers and retraining with a smaller learning rate, the model adapts more deeply to the new dataset. This improves performance for domain-specific tasks, such as medical imaging or specialized NLP models, but increases computational cost and the risk of overfitting. Careful learning rate control and staged unfreezing are key to maintaining stability.

If your new task comes with only a small labeled dataset, it's usually best to begin with transfer learning. Freezing most layers and fine-tuning just the top layers lets you leverage the pretrained model's knowledge without overfitting.

Transfer learning and fine-tuning are core techniques used across computer vision, NLP, and audio modeling – from image recognition systems to LLM fine tuning. ML engineers at any data annotation company or research lab rely on these strategies to adapt pre-trained models efficiently.

Fine Tuning vs Transfer Learning: Accuracy, Data, Compute Trade-offs

Choosing between transfer learning vs fine-tuning methods depends on task similarity, dataset size, and available compute. Fine-tuning generally improves accuracy but at higher cost, while feature extraction is faster and more stable when data is limited.

When fine tuning vs transfer learning performs better

If your new dataset is small and closely matches the pre-trained model’s domain, transfer learning with frozen layers works best. For larger or more distinct datasets, fine-tuning improves domain adaptation but demands more compute and careful training control.

| Condition | Best Approach | Reason |
|---|---|---|
| Small dataset, similar domain | Feature extraction | Prevents overfitting; quick setup |
| Small dataset, different domain | Partial fine-tuning | Balances adaptation with control |
| Large dataset, similar domain | Fine-tuning | Leverages size for improved accuracy |
| Large dataset, different domain | Full fine-tuning | Enables deep domain adaptation |
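The table can be read as a simple lookup. The sketch below encodes it as a hypothetical helper, `choose_approach`; the "small"/"large" and "similar"/"different" labels are qualitative, since no numeric thresholds are given here, so treat the result as a starting point rather than a rule.

```python
def choose_approach(dataset_size: str, domain: str) -> str:
    """Return the suggested starting approach for a dataset/domain combination.

    dataset_size: "small" or "large"; domain: "similar" or "different".
    """
    table = {
        ("small", "similar"): "feature extraction",
        ("small", "different"): "partial fine-tuning",
        ("large", "similar"): "fine-tuning",
        ("large", "different"): "full fine-tuning",
    }
    key = (dataset_size.lower(), domain.lower())
    if key not in table:
        raise ValueError(f"unsupported combination: {key}")
    return table[key]


print(choose_approach("small", "different"))
```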

Large models pre-trained on broad data offer strong generalization, but fine-tuning adapts them to niche domains. The trade-off is simple: more flexibility requires more training time and stronger hardware.

Task similarity and dataset size

The closer your task is to the source model’s domain, the less retraining you need. Vision models pre-trained on ImageNet often perform well out of the box on related categories, while NLP models fine-tuned on domain-specific text (e.g., medical or legal) show stronger gains when tasks diverge.

Compute and latency

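Feature extraction needs little GPU power: the frozen backbone only runs forward passes, so its outputs can often be computed once, cached, and reused across epochs. Fine-tuning backpropagates through the unfrozen layers, which makes each epoch slower and adds memory for gradients and optimizer state. This mainly affects training cost; inference latency depends on the deployed architecture, not on how its weights were trained. Teams often train on cloud GPUs, then deploy the resulting frozen model to edge devices for inference.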

When the target task has scarce labeled data, transfer learning with frozen base layers and adapters like LoRA or prefix-tuning is often preferable. When the domain differs substantially—like medical imaging vs ImageNet—full fine-tuning is worth the added compute cost.
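Part of the appeal of adapters when labels are scarce is how few parameters they train. As a rough sketch, assume the usual LoRA formulation: a frozen weight matrix of shape d × k receives a trainable low-rank update B·A, with B of shape d × r and A of shape r × k. The layer size and rank below are illustrative values, not recommendations.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r update: B is d x r, A is r x k."""
    return r * (d + k)


d, k, r = 768, 768, 8  # illustrative layer size and rank
full = full_finetune_params(d, k)
lora = lora_params(d, k, r)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

For this one layer the adapter trains about 2% of the parameters that full fine-tuning would, which is why it tends to be less prone to overfitting on small datasets.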

How to Decide Between Transfer Learning vs Fine Tuning

The choice between fine-tuning and feature extraction depends on how much adaptation your model needs and how much data and compute you can afford. Start simple, measure performance, then scale training complexity only when results plateau.

Step 1: Start with feature extraction

Begin by freezing the pre-trained layers and training only the classifier head. This sets a quick baseline with minimal resources. It’s a low-risk first pass that reveals how well pre-trained features transfer to your dataset. If accuracy is sufficient, there’s no need for further training.

Step 2: Fine-tune when accuracy stalls

If the model underfits or domain mismatch appears, unfreeze upper layers and fine-tune with a lower learning rate. Adjust layer depth gradually and monitor validation loss closely. Fine-tuning is effective when your data diverges from the original model’s training set or when you have enough samples to avoid overfitting.
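One way to make "accuracy stalls" measurable is a plateau check on the validation loss of the frozen baseline. The helper below is a minimal sketch; `has_plateaued`, `patience`, and `min_delta` are hypothetical names and defaults chosen for illustration.

```python
def has_plateaued(val_losses, patience=3, min_delta=1e-3):
    """Return True if the best validation loss has not improved by at least
    `min_delta` during the last `patience` epochs."""
    if len(val_losses) <= patience:
        return False  # not enough history to judge
    best_before = min(val_losses[:-patience])
    best_recent = min(val_losses[-patience:])
    return best_before - best_recent < min_delta


# Validation loss per epoch from a feature-extraction run (made-up numbers).
history = [1.0, 0.8, 0.7, 0.7, 0.7005, 0.7002]
if has_plateaued(history):
    print("Baseline has stalled: consider unfreezing upper layers.")
```

If the check fires while validation accuracy is still below target, that is the signal to move from the frozen baseline to fine-tuning.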

Step 3: Optimize before scaling up

Before moving to larger models or new architectures, improve your pipeline efficiency. Clean data, augment samples, and experiment with regularization or learning rate schedules. These optimizations often close the accuracy gap without increasing compute costs.

Before scaling, it’s worth reviewing data annotation pricing and data annotation quality. Even modest improvements in dataset accuracy can yield better results than additional compute cycles.

If failure means lost revenue, use transfer learning and iterate quickly. If failure means lost trust or regulatory problems, invest in full fine-tuning upfront. My team runs both methods in parallel: transfer learning for fast demos, fine-tuning in the background for production.

Implementation Tips and Pitfalls in Transfer Learning and Fine-Tuning

Small configuration choices can make or break transfer learning results. Engineers often lose performance from learning rate mismatch, unstable BatchNorm layers, or skipping staged training.

Two-stage training

When fine-tuning, a two-stage approach usually works best. Train the classifier head first while keeping all base layers frozen. Once it stabilizes, unfreeze selected layers for fine-tuning. Because a freshly initialized head produces large, noisy gradients early on, this staged approach prevents large weight updates from damaging pre-trained features and allows better control over learning dynamics.
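The two stages can be illustrated with a deliberately tiny, framework-free toy. Everything here is an assumption made for the sketch: the "base" is a single linear unit `w*x + b` standing in for pre-trained layers, the "head" is one scaling weight, and gradients are written out by hand. A real project would rely on a framework's freezing mechanism instead.

```python
def predict(params, x):
    # Tiny stand-in model: base(x) = w*x + b, and the head scales the base output.
    return params["head"] * (params["w"] * x + params["b"])


def mse(params, xs, ys):
    return sum((predict(params, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(params, xs, ys, lrs, steps):
    """Gradient descent that updates only the parameters listed in `lrs`."""
    n = len(xs)
    for _ in range(steps):
        grads = {"head": 0.0, "w": 0.0, "b": 0.0}
        for x, y in zip(xs, ys):
            base_out = params["w"] * x + params["b"]
            err = params["head"] * base_out - y
            grads["head"] += 2 * err * base_out / n
            grads["w"] += 2 * err * params["head"] * x / n
            grads["b"] += 2 * err * params["head"] / n
        for name, lr in lrs.items():  # frozen parameters are simply absent
            params[name] -= lr * grads[name]
    return params


# Toy target data that the "pre-trained" base (w=1, b=0) does not fully match.
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [2 * x + 1 for x in xs]
params = {"head": 0.0, "w": 1.0, "b": 0.0}

# Stage 1: base frozen, train the head only.
train(params, xs, ys, lrs={"head": 0.1}, steps=100)
stage1_loss = mse(params, xs, ys)

# Stage 2: unfreeze the base with a 10x smaller learning rate than the head.
train(params, xs, ys, lrs={"head": 0.1, "w": 0.01, "b": 0.01}, steps=200)
stage2_loss = mse(params, xs, ys)

print(f"stage 1 loss: {stage1_loss:.4f}, stage 2 loss: {stage2_loss:.6f}")
```

In this toy, the frozen base cannot represent the offset in the target data, so the head-only stage levels off at a nonzero loss. Unfreezing the base with a smaller learning rate then closes the gap without discarding what stage 1 learned.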

Learning rates and schedulers

Use smaller learning rates for unfrozen layers – typically 10× lower than the head. This keeps fine-tuning stable and prevents catastrophic forgetting. Discriminative learning rates or cosine annealing schedules can further improve convergence when training deeper architectures.
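A minimal sketch of how these two ideas combine, written without a framework so the arithmetic is visible. The function names and the `backbone_ratio` parameter are hypothetical; the 10× ratio follows the rule of thumb above, and the cosine formula is the standard decay from a base rate to a minimum rate.

```python
import math


def cosine_annealing(base_lr, step, total_steps, min_lr=0.0):
    """Cosine decay from base_lr at step 0 to min_lr at total_steps."""
    progress = min(step, total_steps) / total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


def param_group_lrs(head_lr, step, total_steps, backbone_ratio=10.0):
    """Learning rates per parameter group: the unfrozen backbone layers
    train `backbone_ratio` times slower than the head."""
    lr = cosine_annealing(head_lr, step, total_steps)
    return {"head": lr, "backbone": lr / backbone_ratio}


for step in (0, 50, 100):
    groups = param_group_lrs(head_lr=1e-3, step=step, total_steps=100)
    print(step, {name: f"{lr:.2e}" for name, lr in groups.items()})
```

Most deep learning frameworks let an optimizer take per-group learning rates, so a dictionary like this maps directly onto that kind of configuration.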

BatchNorm and regularization

During fine-tuning, keep BatchNorm layers in inference mode to preserve pre-trained statistics. Combine dropout and weight decay to control overfitting, especially when data volume is small. Regular monitoring of validation loss and layer-wise freezing decisions can prevent instability in late epochs.
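To see why the mode matters, consider a simplified, single-feature batch normalization layer written by hand. This is a sketch for illustration, not a framework implementation: `TinyBatchNorm` and its defaults are hypothetical. In real frameworks the same behavior is controlled by a mode switch, for example calling `eval()` on the module in PyTorch.

```python
class TinyBatchNorm:
    """Single-feature batch normalization, written out by hand for illustration."""

    def __init__(self, running_mean, running_var, momentum=0.1, eps=1e-5):
        self.running_mean = running_mean
        self.running_var = running_var
        self.momentum = momentum
        self.eps = eps
        self.training = True

    def __call__(self, batch):
        if self.training:
            # Training mode: normalize with the current batch statistics and
            # move the running statistics toward them.
            mean = sum(batch) / len(batch)
            var = sum((x - mean) ** 2 for x in batch) / len(batch)
            self.running_mean += self.momentum * (mean - self.running_mean)
            self.running_var += self.momentum * (var - self.running_var)
        else:
            # Inference mode: use the stored statistics and leave them untouched.
            mean, var = self.running_mean, self.running_var
        return [(x - mean) / (var + self.eps) ** 0.5 for x in batch]


small_batch = [4.0, 6.0]  # a tiny batch from the new domain

frozen = TinyBatchNorm(running_mean=0.0, running_var=1.0)
frozen.training = False
frozen(small_batch)

drifting = TinyBatchNorm(running_mean=0.0, running_var=1.0)
drifting(small_batch)

print("inference mode keeps mean at", frozen.running_mean)
print("training mode moved mean to", drifting.running_mean)
```

With small fine-tuning batches, the statistics computed in training mode are noisy, so each step pulls the stored mean and variance away from the values learned during pre-training. Keeping the layer in inference mode avoids that drift.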

Whether you are optimizing a machine learning algorithm for NLP or vision, remember that model stability depends as much on training data quality as on hyperparameter tuning. A few well-chosen parameters often yield more improvement than expanding the model size.

For production rollouts, Label Your Data’s annotation workflows and review layers help stabilize retraining cycles when models are fine-tuned frequently.

About Label Your Data

If you choose to delegate LLM fine-tuning, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance based on a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, even your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

What is the difference between transfer learning and fine-tuning?

Transfer learning is the broader process of reusing a pre-trained model on a new task. Fine-tuning is one of its methods, where part of the model is retrained on domain-specific data. The distinction comes down to the level of adaptation: transfer learning in its simplest form reuses learned features as they are, while fine-tuning adjusts those learned representations for the target task.

What is the difference between fine-tuning and learning?

Learning involves training a model from scratch using randomly initialized weights. Fine-tuning starts with an already trained model and refines its parameters for a new objective. Fine-tuning is faster, needs less data, and leverages existing knowledge from the base model.

What is the difference between fine-tuning and feature extraction in transfer learning?

Feature extraction keeps most model layers frozen and trains only the final classifier, using pre-trained features as fixed representations. Fine-tuning unfreezes selected layers for additional training, allowing deeper adaptation. Feature extraction is faster and safer for small datasets; fine-tuning achieves higher accuracy when enough data is available.

What are the five types of transfer learning?

Five commonly cited types of transfer learning are inductive, transductive, unsupervised, domain adaptation, and multitask learning. In inductive transfer learning, the source and target tasks differ, whether or not the domains match, and some labeled target data is available. Transductive transfer learning applies when tasks are the same but domains differ. Unsupervised transfer learning covers settings where labeled data is unavailable in both the source and target domains. Domain adaptation minimizes distribution shifts between source and target datasets, while multitask learning trains multiple related tasks together to improve shared representations.

How does fine-tuning differ for LLMs and smaller models?

Fine-tuning large language models (LLMs) follows the same principles as fine-tuning smaller models. The difference lies in scale: LLMs such as Gemini or GPT variants require far more compute and data management, which is why teams often keep the base weights frozen and train small adapters such as LoRA instead of updating every parameter. Many ML teams also compare Gemini vs ChatGPT to evaluate performance, latency, and adaptability for specific business cases.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.