Bounding Box Annotation: Best Practices


TL;DR

- Bounding box annotation labels objects with rectangles for object detection models.

- Faster to produce than pixel-level segmentation masks, making it practical for large datasets and real-time object detection.

- Accurate boxes improve model precision, recall, and overall performance.

- Best practices: precise alignment, rotatable boxes for tilted objects, and consistent labeling.

- For complex scenes, consider polygons or segmentation masks with strict quality control.

What Is Bounding Box Annotation?

Bounding box annotation helps train AI models by outlining objects within an image using rectangular boxes. Each box defines an object's position and size, creating labeled data that a machine learning algorithm can use to identify similar objects in new images.

It is one of the most widely used image labeling techniques for object detection and image recognition. Unlike semantic segmentation, which labels every pixel, bounding box annotation offers a simpler, faster approach based on rectangular markers.

Whether you're working with unlabeled data or refining an existing dataset, precise annotations improve model accuracy.

How Bounding Box Annotation Works

Image bounding box annotation involves drawing rectangles around objects in an image, either manually or automatically. Each box is assigned a class label that tells the model what the box contains. For example, in an autonomous driving dataset, bounding boxes might label cars, pedestrians, and traffic signs.

Even when using AI-assisted tools, human oversight remains crucial to verify and correct the annotations, ensuring high-quality data for training.
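A labeled box is typically stored as a class label plus four coordinates, but export formats differ: COCO stores `[x_min, y_min, width, height]` in pixels, Pascal VOC-style files store corner coordinates, and YOLO label files store a center point and size normalized to the image dimensions. The sketch below assumes corner coordinates internally; `BoxAnnotation` and its methods are hypothetical helpers, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class BoxAnnotation:
    """A labeled axis-aligned box in pixel corner coordinates (hypothetical helper)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def to_coco(self) -> list[float]:
        """COCO-style bbox: [x_min, y_min, width, height] in pixels."""
        return [self.x_min, self.y_min, self.x_max - self.x_min, self.y_max - self.y_min]

    def to_yolo(self, img_w: int, img_h: int) -> list[float]:
        """YOLO-style bbox: [x_center, y_center, width, height], each scaled to 0..1."""
        w = self.x_max - self.x_min
        h = self.y_max - self.y_min
        return [
            (self.x_min + w / 2) / img_w,
            (self.y_min + h / 2) / img_h,
            w / img_w,
            h / img_h,
        ]

    @classmethod
    def from_coco(cls, label: str, bbox: list[float]) -> "BoxAnnotation":
        """Build a corner-format box from a COCO-style [x, y, w, h] bbox."""
        x, y, w, h = bbox
        return cls(label, x, y, x + w, y + h)


car = BoxAnnotation("car", 100, 50, 300, 150)
print(car.to_coco())  # [100, 50, 200, 100]
print(car.to_yolo(img_w=640, img_h=480))
```

Keeping one internal representation and converting only at export time avoids a common source of silently shifted boxes when datasets move between tools.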

A bounding box annotation tool streamlines this process, allowing annotators to label images faster and more consistently. When working with video data, a video bounding box annotation tool enables frame-by-frame labeling, ensuring accurate object tracking across sequences.
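Many video annotation tools reduce frame-by-frame work by letting annotators draw boxes on keyframes and filling in the frames between them. The sketch below assumes the simplest version of this idea, linear interpolation of corner coordinates; real tools may use object trackers instead, and `interpolate_boxes` is a hypothetical helper.

```python
def interpolate_boxes(
    start_frame: int,
    start_box: tuple[float, float, float, float],
    end_frame: int,
    end_box: tuple[float, float, float, float],
) -> dict[int, tuple[float, ...]]:
    """Linearly interpolate (x_min, y_min, x_max, y_max) boxes between two keyframes.

    Returns a dict mapping every frame from start_frame to end_frame (inclusive)
    to a box. Interpolated boxes still need human review, since objects rarely
    move in straight lines at constant speed.
    """
    if end_frame <= start_frame:
        raise ValueError("end_frame must come after start_frame")
    span = end_frame - start_frame
    boxes = {}
    for frame in range(start_frame, end_frame + 1):
        t = (frame - start_frame) / span  # 0.0 at the first keyframe, 1.0 at the last
        boxes[frame] = tuple(a + t * (b - a) for a, b in zip(start_box, end_box))
    return boxes


track = interpolate_boxes(0, (10, 10, 50, 50), 4, (30, 10, 70, 50))
print(track[2])  # (20.0, 10.0, 60.0, 50.0)
```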

Bounding Boxes for AI Training

Bounding boxes are the foundation of the labeled training data behind many object detection models. They are widely used in:

- Autonomous Vehicles: Identifying cars, cyclists, and road signs.

- Retail and E-Commerce: Detecting products in images for better search results.

- Medical Imaging: Locating tumors and abnormalities in X-rays or MRIs.

- Manufacturing: Inspecting objects on production lines for quality control.

Bounding boxes are also applied to financial datasets, where precise labeling of documents and transaction images aids in fraud detection and automated processing.

Precise boxes improve detection rates and key performance metrics such as precision, recall, and overall model confidence. The same labeled data also benefits multimodal models, including LLMs that process visual data.
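Precision and recall for a detector are computed by matching predicted boxes to ground-truth boxes at an IoU threshold. The sketch below is a simplified, single-class version of that matching (greedy, highest confidence first, with an assumed threshold of 0.5); benchmark metrics such as COCO mAP also build full precision-recall curves and average over classes and several IoU thresholds. The function names are hypothetical.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two corner-format boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(
    preds: list[tuple[Box, float]], gts: list[Box], iou_threshold: float = 0.5
) -> tuple[float, float]:
    """Single-class precision and recall with greedy IoU matching.

    preds: (box, confidence) pairs; gts: ground-truth boxes for the same image.
    Each ground-truth box can be matched by at most one prediction.
    """
    matched: set[int] = set()
    true_positives = 0
    # Higher-confidence predictions get first pick of the ground-truth boxes.
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(gts):
            if idx in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            true_positives += 1
    false_positives = len(preds) - true_positives  # predictions with no match
    false_negatives = len(gts) - true_positives  # objects the model missed
    precision = true_positives / (true_positives + false_positives) if preds else 0.0
    recall = true_positives / (true_positives + false_negatives) if gts else 0.0
    return precision, recall


ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [((1, 1, 10, 10), 0.9), ((50, 50, 60, 60), 0.8)]
print(precision_recall(predictions, ground_truth))  # (0.5, 0.5)
```

Because the ground truth defines what counts as a hit, loose or missing annotations show up directly as false positives and false negatives in these numbers.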

Bounding Boxes vs. Other Annotation Methods

Bounding box annotation is effective for many object detection tasks, but some objects require more detailed labeling methods:

| Annotation Type | Best Used For | Example Applications |
| --- | --- | --- |
| Bounding Boxes | Standard object detection | Vehicles, people, furniture |
| Polygons | Irregularly shaped objects | Animals, logos, machinery parts |
| Keypoint Annotation | Object tracking, pose estimation | Human skeleton tracking, facial recognition |
| Semantic Segmentation | Pixel-level accuracy | Medical imaging, autonomous driving |

The choice of annotation method should align with your specific application requirements and the nature of the objects within your dataset.

Best Practices for Accurate Bounding Box Annotation

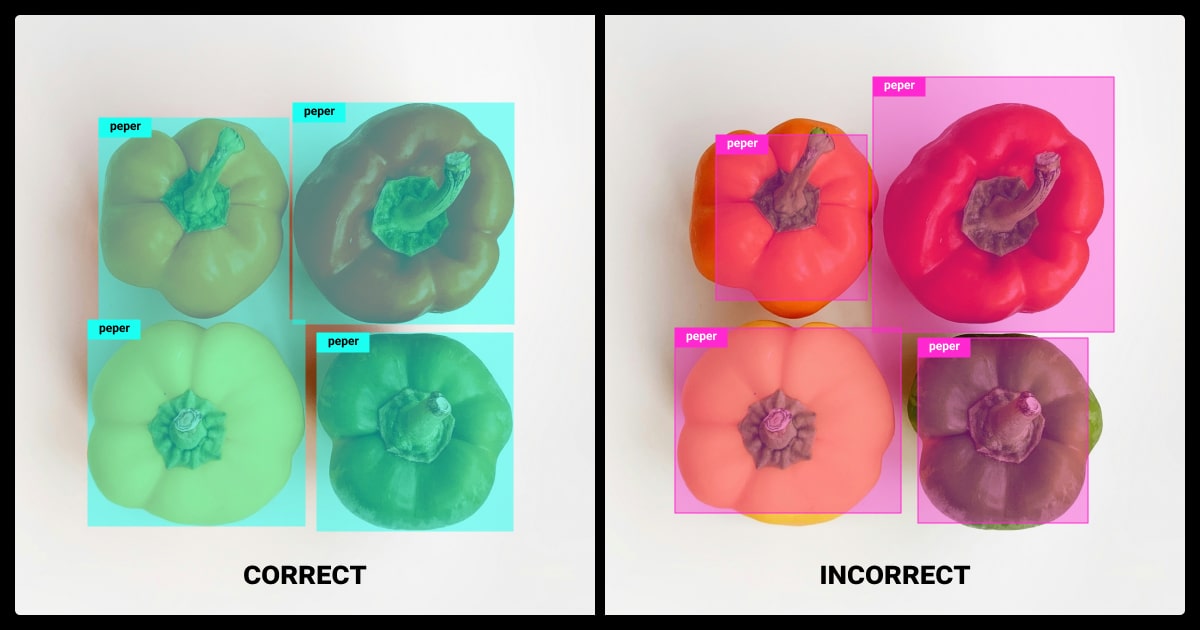

Inaccurate bounding box annotation can lead to poor detection results. Loose boxes, inconsistent labels, and improper shapes can mislead the model, reducing its performance.

Here’s how to annotate images effectively using bounding boxes:

Pixel-Perfect Accuracy

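Bounding boxes should fit the object's edges as closely as possible, leaving minimal background space without cutting off any part of the object. Intersection over Union (IoU) measures how well two boxes agree: the area of their overlap divided by the area of their union, ranging from 0 (no overlap) to 1 (identical boxes). It is used both to compare a model's predicted box with the ground-truth box and to assess annotation accuracy by comparing an annotator's box with a reference box.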

Best practices for accuracy:

- Use precise box alignment, avoiding gaps between the object and bounding box edges.

- Adjust bounding box placement manually when needed to improve precision.

- Validate annotation quality with IoU-based evaluation techniques.
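A minimal IoU implementation for corner-format boxes `(x_min, y_min, x_max, y_max)` shows how much a loose box costs. The coordinates below are made-up examples: a nearly aligned box scores about 0.96 against the reference, while a box that fully contains the object but adds 20 pixels of background on each side drops to about 0.51.

```python
def iou(a, b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


reference = (100, 100, 200, 200)  # tight box around the object
tight = (102, 101, 199, 200)      # annotator box, nearly aligned
loose = (80, 80, 220, 220)        # annotator box with extra background

print(round(iou(reference, tight), 3))  # 0.96
print(round(iou(reference, loose), 3))  # 0.51
```

Note that the loose box never cuts off the object, yet it lands near the 0.5 threshold commonly used to decide whether a detection counts as correct.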

Rotatable Bounding Boxes for Better Precision

Standard (axis-aligned) bounding boxes work best for upright, structured objects, but for tilted or elongated objects they can include too much irrelevant background. Rotatable bounding boxes help in these scenarios by aligning with the object's orientation.

In cases requiring depth estimation, a 3D bounding box annotation tool adds a third dimension (cuboids), improving accuracy for applications like autonomous vehicles and robotics.

When to use rotatable bounding boxes:

- Traffic sign detection, where signs may be angled.

- OCR deep learning models, where text may be slanted or curved.

- Satellite and drone imagery, where objects like buildings or vehicles may not be aligned with the image axes.

Aligning the box with the object’s orientation reduces irrelevant background and enhances detection accuracy.
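To see how much background an axis-aligned box adds for a tilted object, the sketch below computes the corners of a rotated box from its center, size, and angle, then the smallest axis-aligned box that encloses it. The 100 x 20 pixel "text line" tilted 45 degrees is a hypothetical example; its rotated box covers 2,000 square pixels, while the enclosing axis-aligned box covers 7,200. Angle conventions (units, direction, range) differ between tools and datasets, so this sketch is illustrative only.

```python
import math


def rotated_box_corners(cx, cy, w, h, angle_deg):
    """Corners of a w x h box centered at (cx, cy) and rotated by angle_deg."""
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    corners = []
    for dx, dy in ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)):
        # Rotate each corner offset around the center, then translate it.
        corners.append((cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t))
    return corners


def enclosing_axis_aligned_box(corners):
    """Smallest (x_min, y_min, x_max, y_max) box containing all corner points."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return min(xs), min(ys), max(xs), max(ys)


corners = rotated_box_corners(cx=200, cy=200, w=100, h=20, angle_deg=45)
x0, y0, x1, y1 = enclosing_axis_aligned_box(corners)
rotated_area = 100 * 20
axis_aligned_area = (x1 - x0) * (y1 - y0)
print(rotated_area, round(axis_aligned_area))  # 2000 7200
```

In this example, roughly 72% of the axis-aligned box is background, which is the kind of noise rotatable boxes are meant to remove.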

Label Consistency and Detailed Classification

Inconsistent labeling leads to poor model training and higher error rates. Without a structured classification system, AI models struggle to differentiate between similar objects.

Common labeling mistakes:

- Assigning broad labels that merge different object types (e.g., grouping motorcycles, cars, and trucks under “vehicles”).

- Changing label names inconsistently throughout the dataset.

- Misclassifying objects due to unclear annotation guidelines.

Best practices:

- Establish strict label hierarchies (e.g., “Vehicle → Car → Sedan”).

- Ensure label standardization across the entire dataset.

- Use automated quality control tools to flag inconsistent annotations.
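A simple automated check normalizes raw label strings, maps known aliases to canonical names, and flags anything that is not an allowed class. The taxonomy and alias tables below are hypothetical examples; in practice they would come from your annotation guidelines. Note how the broad label "vehicles" is flagged rather than silently accepted.

```python
# Hypothetical taxonomy: each allowed leaf label maps to its parent path.
TAXONOMY = {
    "sedan": ("vehicle", "car"),
    "suv": ("vehicle", "car"),
    "motorcycle": ("vehicle",),
    "truck": ("vehicle",),
    "pedestrian": ("person",),
}

# Hypothetical aliases seen in past batches, mapped to canonical labels.
ALIASES = {"saloon": "sedan", "motorbike": "motorcycle", "lorry": "truck"}


def normalize_label(raw: str) -> str:
    """Lowercase, trim whitespace, and map known aliases to canonical labels."""
    label = raw.strip().lower()
    return ALIASES.get(label, label)


def audit_labels(labels: list[str]) -> dict[str, list[str]]:
    """Sort raw labels into accepted, renamed, and unknown (needs review)."""
    report = {"ok": [], "renamed": [], "unknown": []}
    for raw in labels:
        label = normalize_label(raw)
        if label not in TAXONOMY:
            report["unknown"].append(raw)
        elif label != raw:
            report["renamed"].append(f"{raw} -> {label}")
        else:
            report["ok"].append(raw)
    return report


print(audit_labels(["sedan", "Motorbike", "vehicles", "lorry"]))
# {'ok': ['sedan'], 'renamed': ['Motorbike -> motorcycle', 'lorry -> truck'], 'unknown': ['vehicles']}
```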

Consistency in bounding box annotations comes from clear guidelines and AI-assisted tools. A detailed annotation guide ensures uniformity, while AI-powered pre-labeling with tools like Labelbox or Roboflow speeds up the process. Adding quality control, whether through a second reviewer or automated checks, further reduces inconsistencies.

How to Annotate Complex Datasets with Overlapping Objects

Some datasets contain multiple overlapping objects or crowded environments, making bounding box annotation challenging. In such cases, using alternative annotation methods like polygons or segmentation masks may be more effective.

The quality of input images affects annotation accuracy. Dim lighting, low contrast, and poor resolution make it harder for annotators to place boxes precisely and for models to detect objects accurately.

Strategies for handling low-quality images:

- Enhance image resolution when possible before annotation.

- Use AI-assisted annotation models to improve bounding box placement in complex, low-contrast images.
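If images are upscaled before annotation, the boxes are drawn in the enhanced image's pixel space and need to be mapped back to the original resolution stored in the dataset. A minimal sketch, assuming a plain resize with no cropping, padding, or letterboxing between the two images (`rescale_box` is a hypothetical helper):

```python
def rescale_box(box, from_size, to_size):
    """Map an (x_min, y_min, x_max, y_max) box between two resolutions of one image.

    from_size and to_size are (width, height). Assumes a plain resize only.
    """
    sx = to_size[0] / from_size[0]
    sy = to_size[1] / from_size[1]
    x_min, y_min, x_max, y_max = box
    return (x_min * sx, y_min * sy, x_max * sx, y_max * sy)


# Box drawn on a 2x-upscaled 1280x960 copy, mapped back to the 640x480 original.
print(rescale_box((200, 100, 600, 300), from_size=(1280, 960), to_size=(640, 480)))
# (100.0, 50.0, 300.0, 150.0)
```

If the enhancement step also crops or pads the image, the offset must be subtracted before scaling, which this sketch does not handle.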

Quality Control in Bounding Box Annotation

Even with careful labeling, annotation mistakes happen. Inconsistent boxes, inaccurate object placement, and label mismatches can degrade model performance. A strong quality control process helps detect and fix errors before training begins.

Common Errors in Bounding Box Image Annotation

Mistakes in bounding box image annotation can confuse AI models, leading to false detections or missed objects. Some of the most frequent errors include:

- Misaligned boxes: Boxes that don’t fully enclose the object.

- Duplicate boxes: Multiple overlapping boxes assigned to the same object.

- Incorrect or inconsistent labels: Annotators apply different criteria to the same kind of object.

Best practices:

- Train annotators with clear annotation guidelines before labeling starts.

- Leverage automated tools to identify misaligned bounding boxes.

- Perform regular quality audits on large datasets.
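One automated check for duplicate boxes compares every pair of same-label boxes in an image and flags pairs with very high IoU. The 0.9 threshold is an assumption to tune per dataset: in crowded scenes, distinct objects of the same class can legitimately overlap, so flagged pairs should go to a reviewer rather than be deleted automatically. `find_duplicates` is a hypothetical helper.

```python
from itertools import combinations


def iou(a, b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def find_duplicates(annotations, threshold=0.9):
    """Return index pairs of same-label boxes in ONE image with IoU >= threshold.

    annotations: list of (label, (x_min, y_min, x_max, y_max)).
    """
    pairs = []
    for (i, (label_a, box_a)), (j, (label_b, box_b)) in combinations(enumerate(annotations), 2):
        if label_a == label_b and iou(box_a, box_b) >= threshold:
            pairs.append((i, j))
    return pairs


annotations = [
    ("car", (10, 10, 110, 60)),
    ("car", (11, 10, 110, 61)),     # near-copy of the first box
    ("person", (10, 10, 110, 60)),  # same region, different label: not a duplicate
    ("car", (300, 300, 350, 340)),
]
print(find_duplicates(annotations))  # [(0, 1)]
```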

Manual vs. Automated QA

Annotation verification can be done manually or with AI-assisted tools. Each method has its advantages:

| QA Method | Best For | Example Use Case |
| --- | --- | --- |
| Manual Review | Complex objects, small datasets | Medical imaging annotations |
| Automated QA | Large-scale datasets | Bounding boxes for retail product detection |
| Hybrid Approach | Ensuring consistency | Autonomous vehicle datasets |

For example, a data annotation company working on self-driving car datasets might combine manual reviews with automated IoU checks to validate thousands of bounding boxes quickly.

Best practices:

- Use AI-based validation for large datasets.

- Apply manual spot-checking for high-accuracy tasks.

- Set IoU thresholds to flag incorrect annotations automatically.
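The hybrid approach above can be sketched as a review queue: annotations whose IoU against a reference box falls below a threshold are flagged automatically, and a small random sample of the rest still goes to manual spot-checking. The reference could come from a second reviewer, a gold set, or a model pre-label; the 0.7 threshold and 5% sampling rate are illustrative assumptions, and `qa_queue` is a hypothetical helper.

```python
import random


def iou(a, b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def qa_queue(pairs, iou_threshold=0.7, spot_check_rate=0.05, seed=0):
    """Split annotations into auto-flagged items and a random spot-check sample.

    pairs: list of (annotation_id, annotated_box, reference_box).
    Returns (flagged_ids, spot_check_ids).
    """
    flagged = [ann_id for ann_id, box, ref in pairs if iou(box, ref) < iou_threshold]
    flagged_set = set(flagged)
    passed = [ann_id for ann_id, _, _ in pairs if ann_id not in flagged_set]
    # Boxes that pass the IoU check still get a small random manual review.
    k = max(1, round(len(passed) * spot_check_rate)) if passed else 0
    spot_checks = random.Random(seed).sample(passed, k)
    return flagged, spot_checks


pairs = [
    ("a1", (0, 0, 10, 10), (0, 0, 10, 10)),
    ("a2", (0, 0, 10, 10), (5, 5, 15, 15)),  # poor overlap with the reference
    ("a3", (0, 0, 10, 10), (0, 0, 10, 9)),
]
flagged, spot_checks = qa_queue(pairs)
print(flagged)  # ['a2']
```

Fixing the random seed makes the spot-check sample reproducible, which helps when audits need to be repeated.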

Quality Control and Data Annotation Pricing

Quality assurance adds cost to the annotation process but improves dataset reliability. The cost of fixing mislabeled data later is typically much higher than the cost of implementing a review process upfront.

How quality affects data annotation pricing:

- High-accuracy annotation → More expensive, requires expert reviewers

- Simple object detection → Lower cost, AI-assisted tools reduce labor

- Rework due to annotation errors → Increases overall pricing, delays project completion

For businesses outsourcing data annotation, understanding pricing models helps balance cost and accuracy. Some data annotation providers charge per image, while others offer subscription-based plans for ongoing projects.

Best practices:

- Choose data annotation services based on your accuracy needs.

- Balance automation and manual validation to reduce costs.

- Avoid the cheapest annotation providers if high accuracy is required.

Advanced Techniques for Bounding Box Annotation

Bounding boxes are effective, but they aren't the best choice for every dataset. Some objects require alternative annotation methods, and edge cases such as occlusions or extreme object sizes need specialized handling.

Hybrid Annotation Frameworks

Bounding boxes work well for structured objects, but combining them with segmentation masks, polygons, or 3D cuboids improves accuracy in complex datasets.

For example, in medical imaging datasets, bounding boxes alone fail to capture fine-grained details of tumors. Combining bounding boxes with segmentation masks improves model precision in distinguishing between healthy and affected areas.

- Use bounding boxes for standard objects and segmentation for irregular shapes.

- Apply 3D cuboid annotations for depth-aware object detection.

- Test different annotation techniques to find the most efficient method for your dataset.
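When boxes and segmentation masks or polygons are combined, deriving the box from the finer annotation keeps the two consistent instead of drawing them separately. A minimal pure-Python sketch (hypothetical helpers; the mask convention here returns exclusive `x_max`/`y_max` pixel bounds, which is an assumption, since tools differ):

```python
def box_from_polygon(points):
    """Tightest axis-aligned box around polygon vertices [(x, y), ...]."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


def box_from_mask(mask):
    """Tightest box around nonzero pixels of a 2D mask given as a list of rows.

    Returns (x_min, y_min, x_max, y_max) with x_max/y_max exclusive,
    or None if the mask is empty.
    """
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not rows:
        return None
    return min(cols), min(rows), max(cols) + 1, max(rows) + 1


mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
]
print(box_from_mask(mask))                          # (1, 1, 3, 3)
print(box_from_polygon([(2, 1), (8, 3), (5, 9)]))   # (2, 1, 8, 9)
```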

Handling Edge Cases

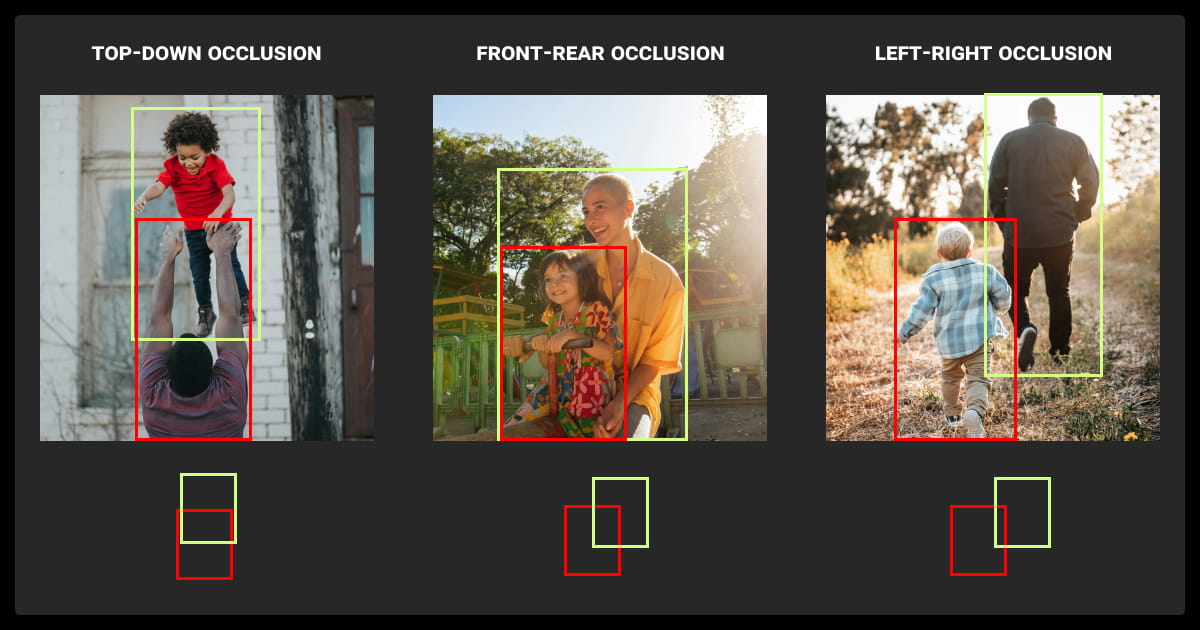

Some objects are difficult to annotate due to occlusion, extreme size variations, or environmental interference. These cases require specific strategies to maintain dataset quality.

Annotating Occluded Objects Correctly

Occlusion occurs when one object partially blocks another, making annotation more challenging. AI models must still recognize these objects based on their visible parts.

- Label only the visible portion of an occluded object.

- If occlusions are frequent, consider using instance segmentation instead of bounding boxes.

- Use context-based labeling when occlusion is predictable (e.g., pedestrians partially blocked by vehicles).

Handling Extreme Object Scales

Some datasets contain objects of vastly different sizes, which can distort model learning: small objects may be missed entirely, while large objects may be split into several fragmentary detections.

For example, in aerial drone footage, distant vehicles appear much smaller than closer ones. If not normalized, varying scales can confuse the model.

- Adjust bounding box sizes dynamically based on object scale.

- Use instance segmentation for highly detailed small objects.

- Break large objects into multiple bounding boxes when needed.
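Before choosing a strategy, it helps to measure how skewed the scale distribution actually is. The sketch below buckets boxes by area using the thresholds from COCO's evaluation (32² and 96² square pixels); COCO applies them to its annotation `area` field, while this sketch uses box area as an approximation. The drone-footage boxes are hypothetical: the example yields two small, one medium, and one large box.

```python
from collections import Counter

SMALL_MAX = 32 ** 2   # COCO-style upper bound for "small", in square pixels
MEDIUM_MAX = 96 ** 2  # COCO-style upper bound for "medium"


def size_bucket(box):
    """Classify an (x_min, y_min, x_max, y_max) box as small, medium, or large."""
    x_min, y_min, x_max, y_max = box
    area = (x_max - x_min) * (y_max - y_min)
    if area < SMALL_MAX:
        return "small"
    if area < MEDIUM_MAX:
        return "medium"
    return "large"


def scale_report(boxes):
    """Count boxes per size bucket."""
    return Counter(size_bucket(b) for b in boxes)


# Hypothetical drone-footage boxes: distant cars are tiny, nearby ones are large.
boxes = [(0, 0, 12, 8), (50, 50, 70, 64), (100, 100, 160, 150), (200, 200, 420, 330)]
print(scale_report(boxes))
```

A dataset dominated by the "small" bucket is a signal to consider the strategies above, such as instance segmentation for highly detailed small objects.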

Bounding box annotation alone isn't always enough for complex AI tasks. Using hybrid annotation techniques and specialized handling for edge cases leads to better model accuracy and higher-quality training data.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance with a free trial

- Pay per labeled object or per annotation hour

- Work with any annotation tool, including your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO:2700, GDPR, CCPA

FAQ

What is a bounding box annotation?

A bounding box annotation is the process of drawing a rectangle around an object in an image to define its position and dimensions. This labeling technique provides structured data that helps train machine learning models to detect and classify objects.

What is the purpose of using bounding boxes in image annotation?

The purpose of using bounding boxes is to create clear, structured labels that indicate where objects are located and how large they are. This information is critical for teaching AI models to recognize and differentiate between objects in new images.

What is a bounding box used for?

Bounding boxes are used to identify and localize objects within images, providing the necessary coordinates for object detection algorithms. They serve as the foundation for training models in various computer vision applications.

What are the rules for bounding boxes?

Rules for bounding boxes include ensuring that they closely fit the object without excessive background and maintain consistency across annotations. They should be precisely aligned with the object's edges to improve metrics like Intersection over Union (IoU).

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.