Text Annotation Tool: A Guide for ML and NLP Teams

TL;DR

- Open-source text data annotation tools give full control and zero cost but require self-hosting, while SaaS platforms add collaboration and compliance.

- Active learning tools reduce annotation effort by 50%+ by showing only uncertain examples, while managed services provide expert annotators and multi-layer QA for mission-critical datasets.

- Tool choice depends on three factors: team size (solo vs 5-20 vs enterprise), data sensitivity (public vs HIPAA-regulated), and workflow (DIY labeling vs model-in-the-loop vs fully outsourced).

How to Choose the Right Text Annotation Tool

The right text annotation tool depends on three constraints: team size, data security requirements, and how much of the labeling workflow you want to manage yourself.

The wrong text annotation tool reveals itself when you’re three months in and can’t scale past your current dataset size.

Team size determines what breaks first. Solo ML engineers need fast data annotation and retraining cycles, not reviewer workflows. At 5-10 annotators, disagreements pile up (is “Apple” a company or a fruit?), and you need conflict resolution. At 15+, coordination dominates. Without task routing, people duplicate work.

Data sensitivity cuts options in half. Medical or financial datasets require HIPAA/GDPR compliance. Check certifications first. Security requirements eliminate more text data annotation tools than missing features. You need certified SaaS or self-hosted infrastructure you control.

Academic AI researchers face similar challenges: we worked with Technological University Dublin on research document annotation with strict data governance, which required certified processes and expert-level accuracy above 95%.

Labeling workflow determines daily usage. Labeling yourself? Active learning skips examples your model handles confidently. Have a 70% accurate model? API pre-labeling saves hours. Zero bandwidth for managing annotators? Managed services cost more but handle staffing, data compliance, domain expertise, and QA.

Most machine learning teams we worked with at Label Your Data prototype with free text annotation tools on small machine learning datasets, then pay for scale when production requirements tighten.

Modern tools should expose dashboards showing label distribution, throughput, drift, and annotator accuracy. This visibility is essential for maintaining dataset balance and diagnosing bias early.
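If your tool lacks such a dashboard, the label-distribution part is easy to approximate from an export. The snippet below is a minimal sketch, assuming you already have a flat list of labels; the function names and sample labels are hypothetical and not tied to any tool listed here.

```python
from collections import Counter

def label_distribution(labels):
    """Return each label's share of the dataset, most common first."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.most_common()}

def imbalance_ratio(labels):
    """Ratio of the most common label's count to the least common one's."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())

# Hypothetical labels exported from an annotation project.
labels = ["ORG", "ORG", "PERSON", "ORG", "LOC", "ORG", "PERSON", "ORG"]

dist = label_distribution(labels)
ratio = imbalance_ratio(labels)

for label, share in dist.items():
    print(f"{label}: {share:.1%}")
print(f"imbalance ratio: {ratio:.1f}")
```

A high imbalance ratio is a prompt to sample more examples of the rare classes before the skew reaches your model.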

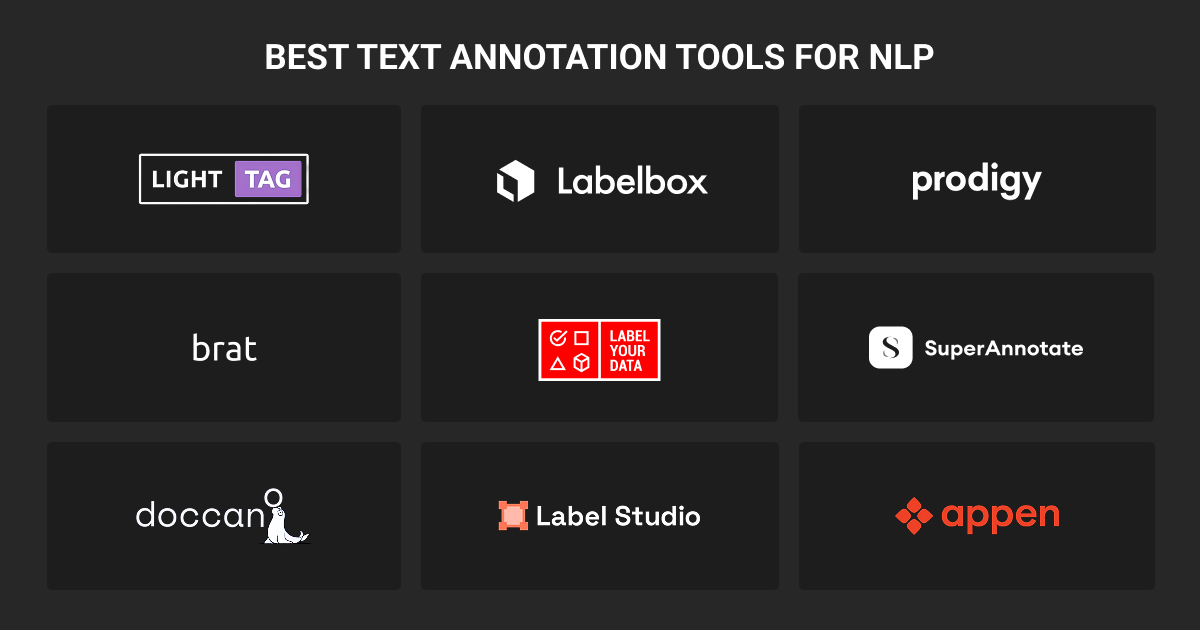

Top Text Annotation Tools for NLP Projects (2025)

These nine text annotation tools are split into three categories: open-source projects you host yourself, SaaS platforms you pay per month, and managed services that handle annotation for you.

We believe managed services belong in any honest comparison, since ML teams regularly evaluate DIY platforms against outsourced options when scaling annotation work. Your choice depends on whether you optimize for cost, speed, or label quality.

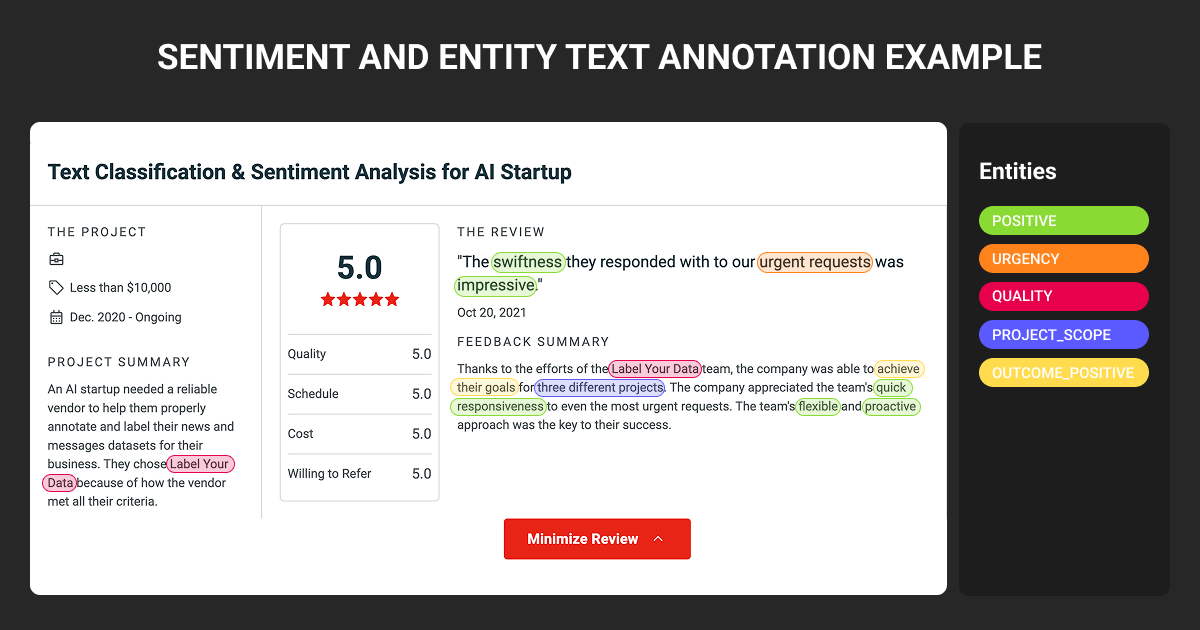

Label Your Data

Label Your Data is a managed service for text and document annotation in 55 languages. The main difference is that we provide expert annotators with domain training (medical coders, legal specialists, financial analysts) who handle your projects end-to-end.

Our multi-layer QA workflow runs first-pass annotation, review, then expert validation. This reduces downstream model errors compared to single-pass labeling. We integrate with Label Studio, Doccano, or your existing tools. You keep your infrastructure while outsourcing annotation labor and quality control.

- Best for: Teams needing high-quality labels on sensitive text data without hiring annotators

- Key features: HIPAA/ISO 27001 compliant, flexible scaling, 55 languages, custom workflows for edge cases

- Pricing: Custom per project (based on volume, domain complexity, security needs)

- Limitations: Slower time-to-first-label than in-house tools, requires vendor coordination

Labelbox

Labelbox combines annotation, data management, and model evaluation in one platform. The text editor handles NER with character-level spans, entity relationships, document classification, and multi-turn conversations for LLM fine-tuning. Model Foundry evaluates GPT-4, Claude, and other LLMs directly against your ontology for RLHF data.

Model-assisted labeling imports predictions from HuggingFace or custom models for pre-labeling. Native LLM integration uses models as judges for auto-QA and scoring. Quality tools include consensus scoring, gold standard benchmarking, and real-time agreement metrics.

Native PDF annotation preserves document layout using text layer or OCR. HIPAA-compliant with role-based access for teams of 10+.

- Best for: Mid-to-large teams building production NLP with LLM evaluation needs

- Key features: Model Foundry for LLM eval, native PDF support, multimodal data types

- Pricing: Free tier available, Starter around $0.10/LBU, enterprise custom (LLM API costs extra)

- Limitations: LBU pricing adds up for large datasets, learning curve for model-centric workflows

For a detailed AI text annotation tool comparison with alternatives, see our Labelbox competitors guide.

Prodigy

Prodigy is a Python text data annotation tool from the spaCy team, built for rapid model iteration. Active learning is the core feature: your model scores its own uncertainty and shows only the examples it doubts most. This can cut annotation effort by half or more.
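The idea of showing only doubtful examples can be sketched as generic uncertainty sampling. This is not Prodigy's API or its internal scoring; it is an illustration that assumes a model exposing class probabilities, with a hypothetical lookup table standing in for the model.

```python
import math

def entropy(probs):
    """Shannon entropy of a predicted class distribution (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def most_uncertain(examples, predict_proba, k):
    """Return the k examples whose predictions have the highest entropy."""
    scored = [(entropy(predict_proba(text)), text) for text in examples]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]

# Hypothetical stand-in for a real model: text -> [P(company), P(fruit)].
fake_scores = {
    "Apple released a new phone": [0.95, 0.05],
    "I ate an apple": [0.10, 0.90],
    "Apple picking season starts soon": [0.55, 0.45],
}

# Only the example the "model" is least sure about goes to the annotator.
queue = most_uncertain(list(fake_scores), fake_scores.get, k=1)
print(queue)
```

In a real loop you would retrain after each batch of answers and rescore the remaining pool, so the queue keeps tracking what the current model finds hard.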

This automatic text annotation tool runs locally via command line with a minimal web UI for binary decisions (accept/reject). Supports NER, text classification, dependency parsing, POS tagging, relation extraction. Custom recipes (Python scripts) extend it to any task or integrate any model.

Recent versions added basic multi-user support with task routing and inter-annotator agreement metrics. LLM-assisted recipes use GPT-3/4 to propose annotations for human verification.

- Best for: ML engineers and researchers doing iterative model development

- Key features: Active learning efficiency, custom Python recipes, spaCy integration

- Pricing: $390 one-time per user, team licensing available

- Limitations: Limited web UI for team coordination, requires Python skills, designed primarily for single users

LightTag

LightTag is a SaaS platform focused on team coordination and quality control. Real-time collaboration with project dashboard, built-in conflict resolution, and annotation scoring. Inter-annotator agreement metrics calculate precision/recall per annotator automatically. Visual dashboards highlight inconsistencies.

AI suggestions learn from your annotations and pre-label new data. Supports NER (including nested entities), text classification, character-level relations, attribute tagging, and conversational dialogue annotation. Large taxonomy support with search filters handles 100+ label classes.

Integration with Primer’s autoML and Data Map features now available. HIPAA-compliant for healthcare data. On-premise deployment option added for air-gapped environments.

- Best for: Teams of 3-20 annotators where data quality and consistency matter most

- Key features: IAA metrics, conflict resolution, HIPAA-compliant, Primer integration

- Pricing: Free tier (5,000 annotations/month), custom enterprise pricing

- Limitations: Text-only (no image/video), smaller ecosystem than Label Studio/Labelbox

brat

brat is an open-source web tool for structured linguistic annotation, widely used in academic NLP for over a decade. Supports span-based NER, binary relations, n-ary events (semantic role labeling), attributes (negation, speculation), and entity normalization to external knowledge bases like Wikipedia.

Visualization renders text with colored entity highlights and relationship arcs. This makes complex annotation structures easy to read. Fully configurable via annotation.conf files to define custom schemas without code. Runs on a web server (Apache, Nginx) with minimal backend. Stores annotations as standoff files (keeps original text untouched).
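Because standoff annotations live in a separate file and point into the text by character offset, they are straightforward to load for training. Below is a minimal sketch that reads entity lines from brat-style standoff, assuming the common tab-separated layout (ID, then type with start and end offsets, then the covered text). The sample document and labels are made up, and discontinuous spans and non-entity lines are deliberately skipped.

```python
from typing import NamedTuple

class Entity(NamedTuple):
    id: str
    label: str
    start: int
    end: int
    text: str

def parse_entities(ann: str) -> list[Entity]:
    """Parse text-bound ('T') lines from standoff; other line types are skipped."""
    entities = []
    for line in ann.splitlines():
        if not line.startswith("T"):
            continue  # relations, events, attributes, notes
        ann_id, type_and_span, covered = line.split("\t", 2)
        label, span = type_and_span.split(" ", 1)
        if ";" in span:
            continue  # discontinuous span; out of scope for this sketch
        start, end = map(int, span.split())
        entities.append(Entity(ann_id, label, start, end, covered))
    return entities

# Hypothetical document and its standoff annotations.
doc = "Acme hired Jane Doe."
ann = (
    "T1\tOrganization 0 4\tAcme\n"
    "T2\tPerson 11 19\tJane Doe\n"
    "R1\tEmployer Arg1:T1 Arg2:T2\n"
)

entities = parse_entities(ann)
for e in entities:
    # The offsets index into the untouched source text.
    print(e.label, repr(doc[e.start:e.end]))
```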

Multi-user support with basic permissions. Diff/compare feature loads two annotators' work side-by-side to calculate agreement. MIT license, free to use. Minimal ML integration (external API for auto-annotations). Mature project with maintenance-focused updates.

- Best for: Researchers building linguistic corpora, academic annotation projects

- Key features: Rich visualization, n-ary events, standoff format, free (MIT license)

- Pricing: Free text annotation tool

- Limitations: No active learning, minimal multi-user features, dated UI, setup needs technical knowledge

SuperAnnotate

SuperAnnotate started in computer vision, now supports NLP. Text editor handles classification, NER (nested entities), relation extraction, coreference resolution, and summarization annotation. Agent Hub deploys custom or LLM models for pre-labeling and validation. Token-wise span control prevents partial-token selection errors.

Multimodal support handles text, images, video, and 3D point clouds in one interface. Enterprise vendor coordination tracks multiple annotation teams and centralizes QA, versioning, and cost tracking. Custom ontologies support medical coding, legal taxonomy, or domain-specific hierarchies.

Real-time project dashboards show quality metrics and cost tracking. SOC 2, GDPR compliant, HIPAA-ready via secure cloud. On-premise deployment available.

- Best for: Large enterprises managing multimodal data (text plus vision) in one system

- Key features: Agent Hub (model-in-loop), multimodal, vendor coordination, NVIDIA partnership

- Pricing: Pro Plan from $499/month (10 users), $29/user add-on, enterprise custom

- Limitations: NLP depth lags Labelbox/LightTag (historically vision-focused), pricing scales quickly

For an in-depth vendor evaluation, see our SuperAnnotate review.

doccano

doccano is an open-source Python/Django web app built for ease of use and rapid deployment. Supports text classification (single/multi-label), sequence labeling (NER, POS tagging, chunking), and sequence-to-sequence (summarization, translation, QA). Multilingual support works out-of-the-box. Handles right-to-left scripts and diverse languages.

One-click deployment via Docker or cloud templates (AWS, Azure, Heroku). Intuitive UI with drag-and-drop label creation and visual entity highlighting. REST API handles programmatic project/task/annotation management. Auto-labeling via REST: connect custom models or cloud APIs (AWS Comprehend) to pre-label data. Doccano shows predictions for human review.

Multi-user with role-based access. Export to JSON, CSV, JSONL. MIT license, free to use.

- Best for: Teams without dedicated DevOps, projects with limited budget, researchers prototyping

- Key features: One-click Docker setup, REST API, multilingual, MIT license

- Pricing: Free text annotation tool

- Limitations: Development slower than SaaS platforms, no built-in IAA stats, performance issues above 50k items

Label Studio

Label Studio is the most flexible open-source platform. Supports text, images, audio, video, time-series. Massive template library with 50+ pre-built configs. XML-based customization for unique tasks. Text tasks include classification, NER, relations, sentiment, intent, question-answering, paraphrase annotation, coreference, structured text-to-text.

ML backend integration connects custom models via API for pre-labeling and active learning loops. Prompts feature (Enterprise) uses LLMs to pre-label thousands of tasks and benchmark LLM outputs against ground truth. Multi-user collaboration with workspace organization, role-based workflows, comments, notifications. Cloud storage connectors for S3, GCS, Azure Blob, Databricks.

- Best for: Teams needing maximum flexibility without vendor lock-in, hybrid deployment needs

- Key features: Template library, ML backends, Prompts (LLM integration), Python SDK, webhooks

- Pricing: Free community, Starter Cloud $250-$1,000+/month, enterprise custom

- Limitations: Community edition lacks role workflows and active learning (Enterprise only), steeper learning curve than Doccano

Appen

Appen is a managed data annotation company with a global network of 1M+ remote contributors across 170+ countries. Provides crowdsourced annotation at scale with workforce quality management. Handles text classification, NER, sentiment, entity linking, speech transcription, intent classification, multilingual text (100+ languages), toxicity detection.

Quality control uses honeypot tests (known answers catch bad annotators), plurality (multiple workers per item, majority/weighted voting), and tiered review (high-performers review others). Platform tracks annotator performance with auto-ban for low performers. API integration handles programmatic job creation, monitoring, and data retrieval.
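To make the honeypot and plurality mechanisms concrete, here is a generic sketch of both. It is not Appen's implementation or API. The worker IDs, the data, and the 0.8 trust threshold are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict

def honeypot_accuracy(answers, gold):
    """Per-worker accuracy on items with known answers (honeypots)."""
    hits, seen = defaultdict(int), defaultdict(int)
    for worker, item, label in answers:
        if item in gold:
            seen[worker] += 1
            hits[worker] += int(label == gold[item])
    return {worker: hits[worker] / seen[worker] for worker in seen}

def majority_vote(answers, trusted):
    """Most common label per item, counting only trusted workers.

    Ties fall to the label that was counted first.
    """
    votes = defaultdict(Counter)
    for worker, item, label in answers:
        if worker in trusted:
            votes[item][label] += 1
    return {item: c.most_common(1)[0][0] for item, c in votes.items()}

# Hypothetical crowd answers as (worker, item, label) tuples.
answers = [
    ("w1", "hp1", "pos"), ("w2", "hp1", "pos"), ("w3", "hp1", "neg"),
    ("w1", "t1", "neg"), ("w2", "t1", "neg"), ("w3", "t1", "pos"),
]
gold = {"hp1": "pos"}  # honeypot item with a known answer

accuracy = honeypot_accuracy(answers, gold)
trusted = {w for w, acc in accuracy.items() if acc >= 0.8}  # assumed threshold
real_answers = [a for a in answers if a[1] not in gold]
labels = majority_vote(real_answers, trusted)
print(accuracy, labels)
```

Weighted voting follows the same shape: add each worker's honeypot accuracy instead of 1 when counting a vote.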

Hybrid human-AI combines crowd labels with ML algorithms to balance cost and quality.

- Best for: Large-scale projects needing diverse, multilingual, or broad-audience annotation

- Key features: 1M+ global workforce, 100+ languages, SOC 2/ISO certified, API integration

- Pricing: Custom, typically $10,000-$100,000+ based on volume and turnaround

- Limitations: Slower time-to-label (job setup overhead), quality varies without careful task design, less suitable for proprietary data (crowd sees it)

For detailed platform analysis, see our Appen review.

Your text data annotation tool needs built-in conflict resolution that surfaces disagreements before they poison your dataset. If your tool just averages confidence scores without flagging semantic conflicts, you're training your model on garbage.

Compare Text Annotation Tool Features Before You Commit (Quick-Scan Table)

Most teams narrow down to 2-3 text annotation tools based on deployment model, then compare data annotation pricing and feature gaps.

| Tool | Type | Best For | Starting Price | Active Learning | Compliance (HIPAA/SOC 2) |
| --- | --- | --- | --- | --- | --- |
| Label Your Data | Managed Service | Teams outsourcing annotation with compliance needs | Custom (project-based) | Via integrated tools | HIPAA, ISO 27001 |
| Labelbox | SaaS Platform | Mid-to-large teams with LLM evaluation needs | Free tier, ~$0.10/LBU | Yes (model-assisted) | HIPAA (Aug 2025) |
| Prodigy | Desktop Tool | Solo ML engineers doing iterative development | $390 one-time/user | Yes (core feature) | Self-hosted |
| LightTag | SaaS Platform | Teams prioritizing annotation quality and IAA | Free (5k annotations/mo) | Yes (AI suggestions) | HIPAA |
| brat | Open Source | Academic researchers building linguistic corpora | Free (MIT license) | No | Self-hosted |
| SuperAnnotate | SaaS Platform | Enterprises managing multimodal data | $499/mo (10 users) | Yes (Agent Hub) | SOC 2, HIPAA-ready |
| Doccano | Open Source | Budget-conscious teams, rapid prototyping | Free (MIT license) | Via REST API | Self-hosted |
| Label Studio | Open Source/SaaS | Teams needing maximum flexibility | Free, Starter $250+/mo | Enterprise only | Enterprise only |
| Appen | Managed Service | Large-scale multilingual projects | Custom ($10k-$100k+) | Platform varies | SOC 2, ISO |

When to Use a Text Annotation Tool vs a Service

Use tools for prototyping with small datasets (under 10k examples), when ML engineers can manage workflows, or when working with public data. Open-source platforms cost nothing and let you iterate fast without vendor coordination.

Use services when annotation quality impacts production model performance, when you need domain expertise (medical, legal, financial), or when compliance requirements eliminate DIY options. Managed data annotation services handle hiring, training, QA, and certifications. You pay more per label but avoid infrastructure overhead.

Most production teams combine both: NLP text annotation tools for edge case refinement and active learning loops, services for bulk labeling and expert review. Your ML engineers focus on model development, while domain experts handle data quality at scale.

About Label Your Data

If you choose to delegate text annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance with a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, even your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

What is the common tool used for text annotation?

Label Studio leads open-source NLP text annotation tools with 20k+ GitHub stars. Labelbox dominates enterprise due to LLM evaluation and compliance features. Academic researchers use brat for linguistic tasks. Solo engineers prefer Prodigy for active learning, teams of 5-20 use LightTag for collaboration, and large organizations outsource to Label Your Data or Appen.

What is the best way to annotate a text?

Define clear labels with examples and edge cases first. Use active learning to prioritize uncertain examples, which can reduce labeling work by 50%+. Have 2-3 annotators label the same data to measure agreement (target 80%+). Pre-label with models when possible, then have humans review the results. For production, use a two-pass workflow: initial annotation plus expert validation.
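Agreement between two annotators can be checked with a few lines of code. The sketch below computes raw percent agreement and Cohen's kappa, which corrects for agreement expected by chance. Which of the two the 80% target refers to is not specified here, so treat the choice of metric as an assumption; the labels are hypothetical.

```python
from collections import Counter

def percent_agreement(a, b):
    """Share of items on which both annotators chose the same label."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

def cohens_kappa(a, b):
    """Agreement corrected for chance; undefined if chance agreement is 1."""
    n = len(a)
    observed = percent_agreement(a, b)
    freq_a, freq_b = Counter(a), Counter(b)
    # Probability that both pick the same label if each labels at random
    # according to their own label frequencies.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    return (observed - expected) / (1 - expected)

# Hypothetical labels from two annotators on the same five mentions of "Apple".
annotator_a = ["ORG", "ORG", "FRUIT", "ORG", "FRUIT"]
annotator_b = ["ORG", "FRUIT", "FRUIT", "ORG", "FRUIT"]

agreement = percent_agreement(annotator_a, annotator_b)
kappa = cohens_kappa(annotator_a, annotator_b)
print(f"agreement={agreement:.2f} kappa={kappa:.2f}")
```

Kappa comes out lower than raw agreement because some matches would occur by chance, which matters most when one label dominates the dataset.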

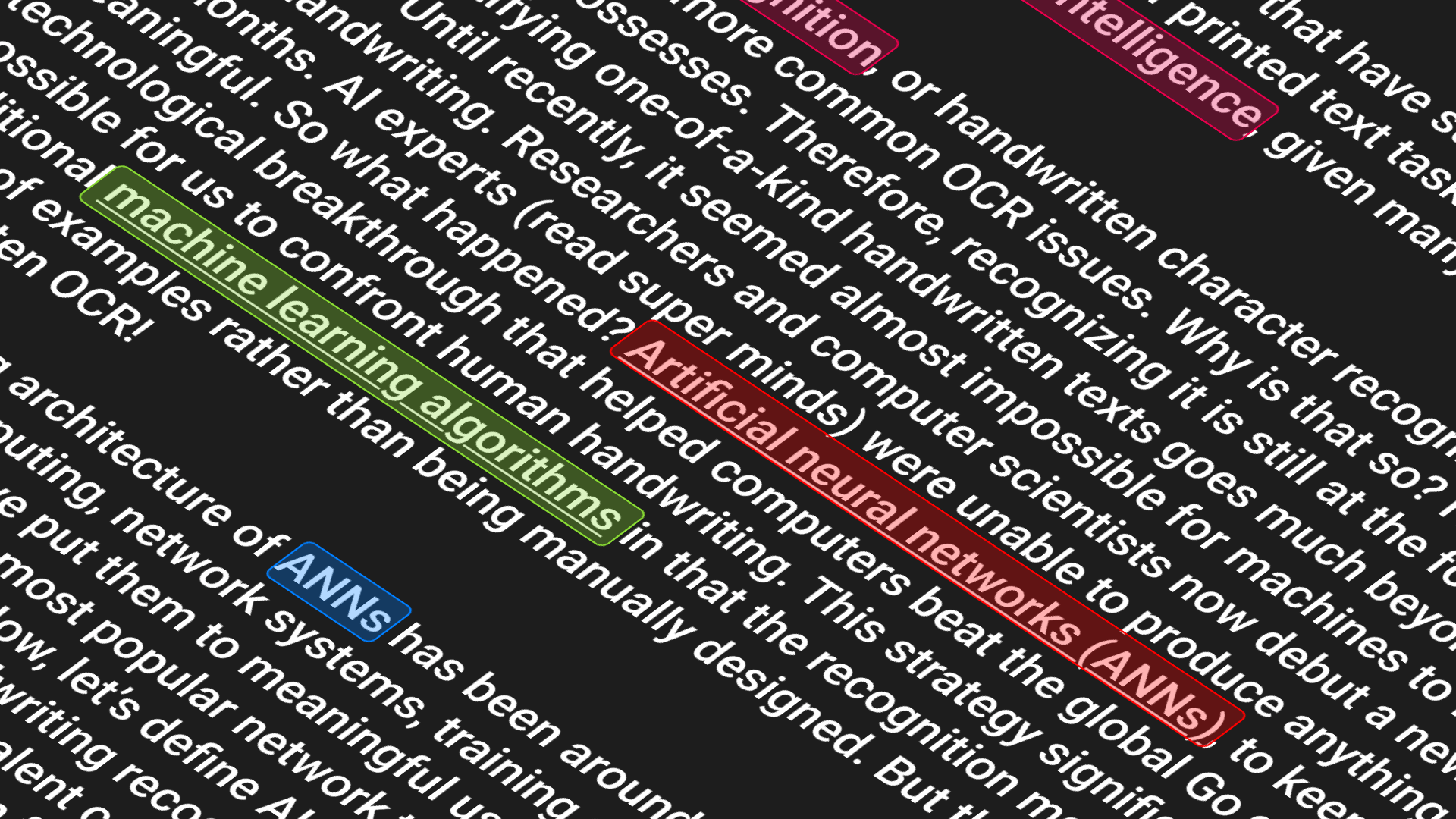

What is a text annotation?

Labeling text data to train ML models. Common tasks: named entity recognition (people, places, organizations), text classification (spam detection), sentiment analysis, relation extraction, and intent detection. Quality annotations directly determine model accuracy.

Can ChatGPT annotate text?

Yes, with limits. It works for basic tasks (sentiment, simple classification) and fast pre-labeling via API. It struggles with domain expertise (medical, legal), subjective judgment (hate speech, moderation), and consistency. Best practice: use LLMs for initial labels at $0.01-0.10 per example, then have humans review them. This can reduce costs by 40-60% while maintaining quality.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.