What Is Perception in Machine Learning?


Teaching machines to perceive the world like humans is one of the major goals in artificial intelligence. With this goal in mind, researchers have been exploring ways to give robots human-like vision and other perceptual abilities for some time now.

Although the name speaks for itself, machine perception covers a broad scope of research. What is this research about, you ask? Machine perception is the ability of AI systems to interpret sensory data in ways that mimic human perceptual skills. This field of AI primarily deals with object detection, object recognition, and navigation. As such, machine perception is closely intertwined with computer vision, pattern recognition, robotics, and image processing.

What is the ultimate goal of machine perception in artificial intelligence? Well, AI-powered systems that can perceive their environment and take appropriate action are useful across many industries, such as industrial assembly and inspection, healthcare (automated X-ray screening), and even space exploration.

Machine perception works with sensory data to perform the required task. It is also part of a broader concept known as machine understanding, which aims to build machines that can reason about and understand the information they are given. What’s more, much machine perception research deals with concept formation in the search for an ideal perceptual model.

So, how does machine perception differ from human perception? And how far are sophisticated AI systems from developing human-like senses from sensory data? In this article, we’ll delve into the concept of machine perception and address these questions.

With that said, let’s get started!

What Does Machine Perception Mean in AI?

As you may already know, a guiding idea behind many machine learning systems is to emulate aspects of how the human brain processes information as closely as possible. This helps machines build a better understanding of the world around them while they learn to develop and apply their sensory capabilities.

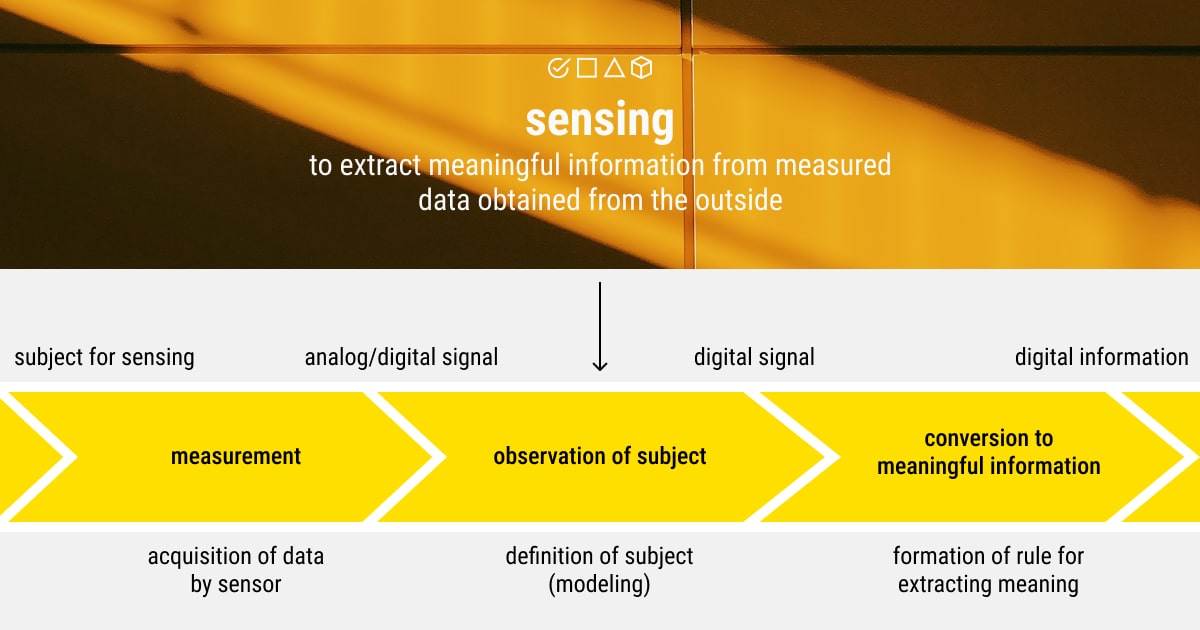

So, what is machine perception, and how does it work in practice? Perception in AI is the ability of machines to use input data from sensors (e.g., cameras, LiDAR, RADAR, microphones, wireless signals, tactile sensors) to learn about many facets of the world. For example, a system exhibits machine perception when it can determine an object’s position or movement trajectory in a scene. However, perception is not limited to vision; it also covers hearing and other senses.

Acoustics, vision, and machine learning make up the three primary subfields of research in machine perception. Machine perception also has a variety of uses, including the study and control of intelligent machines such as autonomous systems. It’s also applied to developing intelligent robots, as well as voice recognition and translation systems. As such, AI perception is a crucial step in helping machines make sense of, and respond to, changes in their environment.

Perception in Artificial Intelligence: Technologies & Applications

As a form of artificial intelligence, machine perception aims to provide computer systems with the hardware and software required to recognize images, sounds, and even touch. This can greatly enhance the interaction between humans and machines.

The attached hardware is the primary means through which computers take in information from, and react to, their surroundings. For a long time, most computers received input mainly through a keyboard or a mouse, but advances in hardware and software have made it possible for AI systems to perceive the world in ways comparable to humans. These include developments in computer vision, machine hearing, and machine touch, as well as recent advances in AI capable of sensing smell and taste.

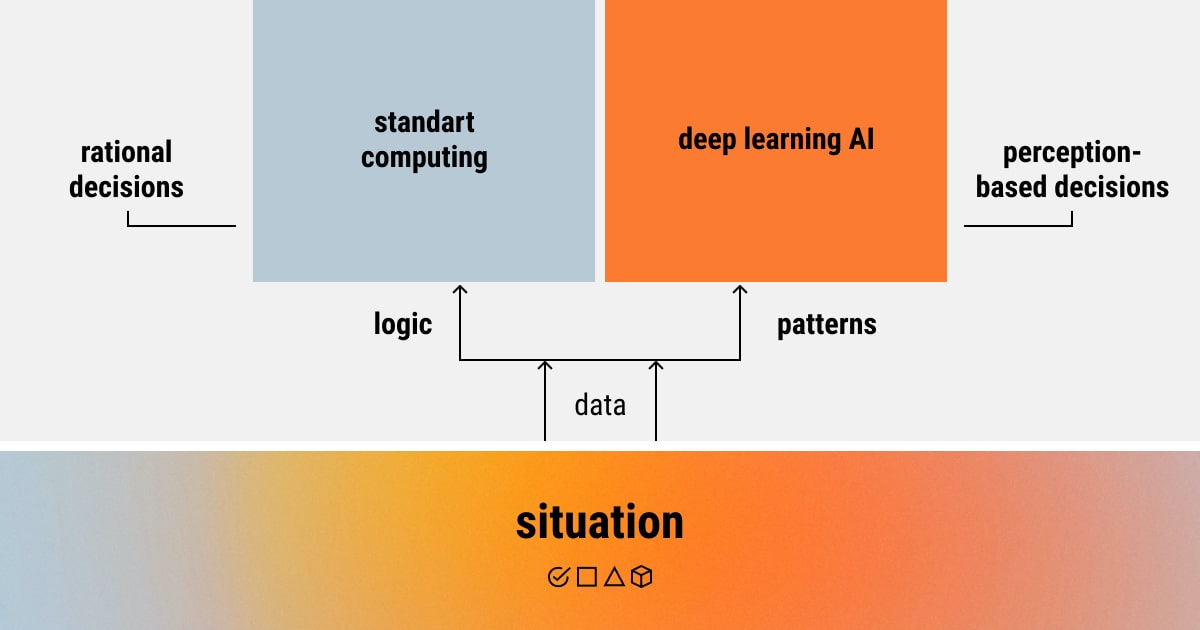

The main goal of machine perception is to give machines sensorimotor capabilities that help them imitate human perceptual abilities through a technical system. Yet the question remains: humans rely on a nervous system to recognize patterns and respond to the external world, so how do machines handle this task without one?

ML-based sensing systems work with patterns: machines learn to detect and classify them, a process known as pattern recognition. To cut a long story short, machines are taught to recognize patterns in every task they are assigned, including image recognition, handwriting recognition, and object detection, by analyzing sensory data. ML pattern recognition resembles human pattern recognition in that both aim to determine whether a given input contains a known pattern.
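To make pattern recognition a little more concrete, here is a minimal sketch of one of the simplest classifiers, nearest-centroid: it averages the feature vectors of each class during training, then labels a new input by the closest class average. The helper names `fit_centroids` and `predict`, the two features, and the toy data are all hypothetical and chosen only for illustration; real perception systems use far richer features and models.

```python
from math import dist
from statistics import fmean


def fit_centroids(samples):
    """Compute the mean feature vector (centroid) for each class label."""
    grouped = {}
    for features, label in samples:
        grouped.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical toy data: (brightness, edge_density) pairs for two classes.
training = [
    ((0.9, 0.1), "sky"),
    ((0.8, 0.2), "sky"),
    ((0.2, 0.8), "building"),
    ((0.3, 0.9), "building"),
]
centroids = fit_centroids(training)
print(predict(centroids, (0.85, 0.15)))  # sky
print(predict(centroids, (0.25, 0.7)))   # building
```

The "pattern" here is simply a region of feature space; deciding whether an input contains a pattern becomes a question of which learned region it falls closest to.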

Machine Vision

The field of computer vision (CV) has gained traction in recent years, with the aim of teaching machines to see and comprehend visual data in a way similar to humans. CV covers methods for acquiring, processing, analyzing, and understanding real-world images, videos, and other high-dimensional data. This lets ML models turn visual input into numerical or symbolic information on which decisions can be based. Machine vision is widely applied across major industries today, in the form of face recognition, medical image analysis, human pose tracking, object detection, 3D scene modeling, video surveillance, and video recognition, to name a few.
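One of the most basic ways a vision system turns pixels into numbers is filtering. The sketch below slides the standard horizontal Sobel kernel over a tiny grayscale image so that large responses mark a vertical edge. The image is a hypothetical 4×6 example, and the plain-Python loop only illustrates the idea; it is not how production CV libraries implement filtering.

```python
# Sobel kernel that responds to horizontal intensity changes (vertical edges).
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def convolve_valid(image, kernel):
    """Slide a square kernel over the image without padding ("valid" mode).

    Strictly speaking this is cross-correlation (the kernel is not flipped),
    which is what most CV libraries compute under the name "convolution".
    """
    k = len(kernel)
    rows = len(image) - k + 1
    cols = len(image[0]) - k + 1
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            total = sum(
                kernel[i][j] * image[r + i][c + j]
                for i in range(k)
                for j in range(k)
            )
            row.append(total)
        out.append(row)
    return out


# Hypothetical 4x6 grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 255, 255, 255],
    [0, 0, 0, 255, 255, 255],
    [0, 0, 0, 255, 255, 255],
    [0, 0, 0, 255, 255, 255],
]
for row in convolve_valid(image, SOBEL_X):
    print(row)  # [0, 1020, 1020, 0]
```

The output is nonzero only where the dark-to-bright boundary sits, which is exactly the kind of numerical information a later stage can use to locate objects.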

Machine Hearing

Key abilities of human hearing have already been built into ML systems (e.g., Alexa, Google Assistant, Siri) to help them make sense of audio data such as speech or music. Human and machine approaches to listening (the latter also known as computer audition) have one thing in common: attention-driven perception of sound. Machine hearing has a wide range of applications, such as music recording and compression, speech synthesis, and voice recognition. Many of these ML solutions are integrated into smartphones, voice assistants, and vehicles. Machines have also developed the ability to focus on one sound while filtering out background noise and competing sounds, a problem studied under the name auditory scene analysis.

Machine Touch

While the idea of machines recognizing visual or audio data seems perfectly workable, one may question their ability to develop a sense of touch. Nevertheless, machine touch is a reality today: machines can now process tactile information to perceive surface properties and perform dexterous manipulation. This, in turn, allows them to acquire intelligent reflexes and interact better with their environment. However, one tactile sensation has yet to be replicated in machines: pain, that is, the sensing of physical discomfort.

Machine Olfaction & Taste

Machine learning is advancing rapidly, and machines can now be trained to detect smells and tastes. AI researchers are building machines that can detect and measure odors, a field known as machine olfaction. This relies on a device known as an “electronic nose” that detects and classifies substances in the air. There is also a technology called an “electronic tongue” that similarly detects and measures tastes by converting sensory data into taste patterns.
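An electronic nose typically combines an array of gas sensors, and each substance produces a characteristic response pattern (a "fingerprint") across that array. The sketch below shows one simple way to classify such a reading: compare it with stored reference fingerprints using cosine similarity. The sensor count, odor names, and readings are hypothetical, and cosine matching is just one illustrative choice; real devices may use other pattern-recognition methods.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine of the angle between two response vectors (1.0 = same shape)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify_odor(reading, references):
    """Return the reference odor whose sensor-array fingerprint best matches.

    Cosine similarity compares the *pattern* across sensors, so a weaker
    concentration of the same substance (a scaled-down reading) still matches.
    """
    return max(
        references,
        key=lambda name: cosine_similarity(reading, references[name]),
    )


# Hypothetical fingerprints from a 4-sensor array (arbitrary units).
references = {
    "coffee": [0.9, 0.4, 0.1, 0.3],
    "ethanol": [0.2, 0.9, 0.8, 0.1],
    "ammonia": [0.1, 0.2, 0.3, 0.9],
}
sample = [0.45, 0.2, 0.05, 0.15]  # half-strength coffee-like response
print(identify_odor(sample, references))  # coffee
```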

What Benefits Can Machine Perception Offer?

Adding perception to machine learning systems offers a number of benefits for modern businesses:

- Accuracy. Computational data collection and analysis require a precise approach. Analyzing data with models that emulate human senses can therefore be more accurate than relying on human analysis alone.

- Efficiency. Automated analysis and processing can run considerably faster than a human employee. Reducing the number of tasks prone to human error means fewer mistakes and more time saved.

- Predictive analytics. One way to reduce the need for consumer testing is to use data processed with human-like senses. Machine perception may help businesses anticipate how a customer or user would perceive a new product, place, or service.

- Recommendations. Perceptive intelligence can enhance the predictive power of ML models and help anticipate what products and services people will want to buy. Recommending new goods and services based on data-backed client preferences thus creates an additional source of income.

- Robotics. The manufacturing industry widely deploys machines with robotic capabilities. Companies can greatly reduce the number of faults by adding machine vision or tactile feedback. Intelligent robots that can spot mistakes and react to equipment failure might spare a company expensive repairs and replacements.

Data Labeling for Machine Perception: Automated or Manual?

For any AI project, you need a decent amount of data to make your ML model work. For most perception tasks, that data also has to be annotated so that the system produces accurate and reliable results. It is widely reported that the majority of the effort in AI projects goes into obtaining high-quality labeled data.

Data labeling is therefore crucial for visual perception AI models (e.g., in self-driving vehicles, drones, or robots), which require labeled images to comprehend their surroundings and take appropriate action. Here, labeling visual data such as images or videos plays a fundamental role in computer vision, since it makes the target objects visible and recognizable to machines. More specifically, image annotation, that is, marking up image data with the necessary tools and software, is central to this process.
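As an illustration of what a single image annotation might look like, the sketch below stores a labeled bounding box and computes intersection over union (IoU), a standard measure of how much two boxes overlap. IoU is often used in quality checks, for example to compare an annotator's box with a reviewer's. The `BoxAnnotation` schema, the example coordinates, and the 0.5 acceptance threshold are assumptions for illustration, not a specific tool's format.

```python
from dataclasses import dataclass


@dataclass
class BoxAnnotation:
    """One labeled object: class name plus an axis-aligned box in pixels.

    Hypothetical schema: (x, y) is the top-left corner, similar in spirit to
    the [x, y, width, height] convention used by several annotation formats.
    """
    label: str
    x: float
    y: float
    width: float
    height: float


def iou(a: BoxAnnotation, b: BoxAnnotation) -> float:
    """Intersection over union of two boxes (0.0 = disjoint, 1.0 = identical)."""
    left = max(a.x, b.x)
    top = max(a.y, b.y)
    right = min(a.x + a.width, b.x + b.width)
    bottom = min(a.y + a.height, b.y + b.height)
    intersection = max(0.0, right - left) * max(0.0, bottom - top)
    union = a.width * a.height + b.width * b.height - intersection
    return intersection / union if union > 0 else 0.0


annotator = BoxAnnotation("car", x=10, y=20, width=100, height=50)
reviewer = BoxAnnotation("car", x=20, y=20, width=100, height=50)
score = iou(annotator, reviewer)
print(round(score, 3))  # 0.818
# Hypothetical QA rule: accept the label if classes match and IoU >= 0.5.
print(annotator.label == reviewer.label and score >= 0.5)  # True
```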

You also need to choose the data labeling method that best fits your project. There are two main approaches to annotating data: automatic and manual. Both have their pros and cons, so your decision will depend on your particular requirements. Although the human-led approach is still widely used, technology is moving toward fully automatic data labeling.
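In practice, the two approaches are often combined. One common pattern, sketched here as an assumption rather than any particular team's workflow, is to let a model pre-label the data and send only low-confidence predictions to human annotators. The `Prediction` records, item IDs, and the 0.9 threshold are hypothetical.

```python
from typing import NamedTuple


class Prediction(NamedTuple):
    item_id: str
    label: str
    confidence: float  # model's score in [0, 1]


def route(predictions, threshold=0.9):
    """Split model pre-labels into auto-accepted and human-review queues."""
    auto_accepted, needs_review = [], []
    for p in predictions:
        queue = auto_accepted if p.confidence >= threshold else needs_review
        queue.append(p)
    return auto_accepted, needs_review


# Hypothetical pre-labels produced by some detection model.
preds = [
    Prediction("img_001", "pedestrian", 0.97),
    Prediction("img_002", "cyclist", 0.62),
    Prediction("img_003", "car", 0.91),
]
auto, review = route(preds)
print([p.item_id for p in auto])    # ['img_001', 'img_003']
print([p.item_id for p in review])  # ['img_002']
```

Lowering the threshold shifts more work to automation at the risk of accepting more incorrect labels; raising it sends more items to human reviewers.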

Final Words on Perception in AI: How Technology Is Serving Human Senses

Machine perception in artificial intelligence is a fast-growing field with a wide range of current and future applications. It’s an intriguing area of study because it reflects the effort to bring humans and AI systems closer together.

This article has covered only the fundamentals of machine perception, along with a brief look at machines’ capacity for human-like sight, hearing, touch, smell, and taste. We highlighted how machine perception addresses the challenge of teaching machines to interpret images, sounds, music, and other real-world information. Many new applications have emerged thanks to AI perception models, and these models require well-annotated data to deliver the most precise results.

It’s worth noting that data annotation is lengthy and laborious, which means you might sometimes need help from professionals who can provide training datasets for your ML model.

The Label Your Data team can be your loyal partner on this journey. So don’t hesitate to send us your sensory data for a secure, high-quality data labeling service!

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.