What Do You Know About Data Annotation in AI?

You can never have too much annotated data in AI. The more labeled data you have, the greater your chances are of developing an accurate and upscale model for the task at hand and setting new AI records.

On our blog, we shared a lot about both labeled and unlabeled data, major types of data annotation, key applications of such data across big industries, and much more. And still, we never talked about how this process goes at Label Your Data and how our team has mastered the skill of preparing the most crucial resource for any AI-driven project — data.

Today, we’ll uncover the secrets behind the intricate process of data labeling and talk about the value of manual work in today’s automated and tech-savvy business environment. Why is human labor still of such importance in the field that strives to make AI superior? Doesn’t it contradict the expansive goal of cultivating independent and conscious AI with no human involvement?

Yes, the data annotation process goes that far. But before we answer these questions, we’ll introduce you to the inside workings of a data labeling company and all the magic of the AI project's inception.

Shall we begin?

It All Starts with Data, Labeled Data

Annotated data is commonly considered a stepping stone in working on cutting-edge AI applications and complex ML tasks, such as self-driving vehicles, movie recommendation systems, advanced health care, and stock market predictions, to name a few. Data labeling is indeed a laborious activity that is better left to human annotators who provide the required accuracy and expertise needed for this procedure, especially when dealing with highly specialized data.

The name speaks for itself: data annotators put a considerable amount of effort into making raw and unstructured data a meaningful source of information for machines to learn and train from. They choose relevant tags (aka labels) for each piece of data so that your model can better understand what’s inside a labeled dataset.

We like to think of our company as a team of true data virtuosos. Our annotators can turn your raw, unlabeled data into high-quality, labeled datasets with tags according to your particular task and project’s goals. Such remote data annotation services are your best bet when you lack an in-house team of annotation experts.

Make sure to check our services and contact our team if you’ve found exactly what you need! If not, Label Your Data also provides custom data labeling services to match your vision and an ML task in question.

Key Data Annotation Techniques

Let’s get this clear: there’s a lot of data that businesses are dealing with on a daily basis. Such a dynamic growth of data over recent years has led to the phenomenon of big data in AI, two crucial areas in computer science nowadays.

As such, there are different types of this data, and each one requires a unique approach. Why, you ask? If you have the text data to annotate (e.g., a text document), it cannot share the same labels with the image data (e.g., a picture of the text document). Because both types of data are seen differently by humans, the goal is to teach robots to do the same, which is why many annotation techniques exist today.

As you already know, the bulk of work involving data annotation falls under one of two categories of AI: computer vision (CV) and natural language processing (NLP). One of them works with visual data (i.e., images, videos, photos), and the other, respectively, with text and audio data (OCR is an exception since it works with images that contain text).

Computer Vision

CV is one of the most popular AI initiatives right now, which you may find on your smartphone (face recognition), in industrial applications (autonomous vehicles), and public spaces (emotion recognition).

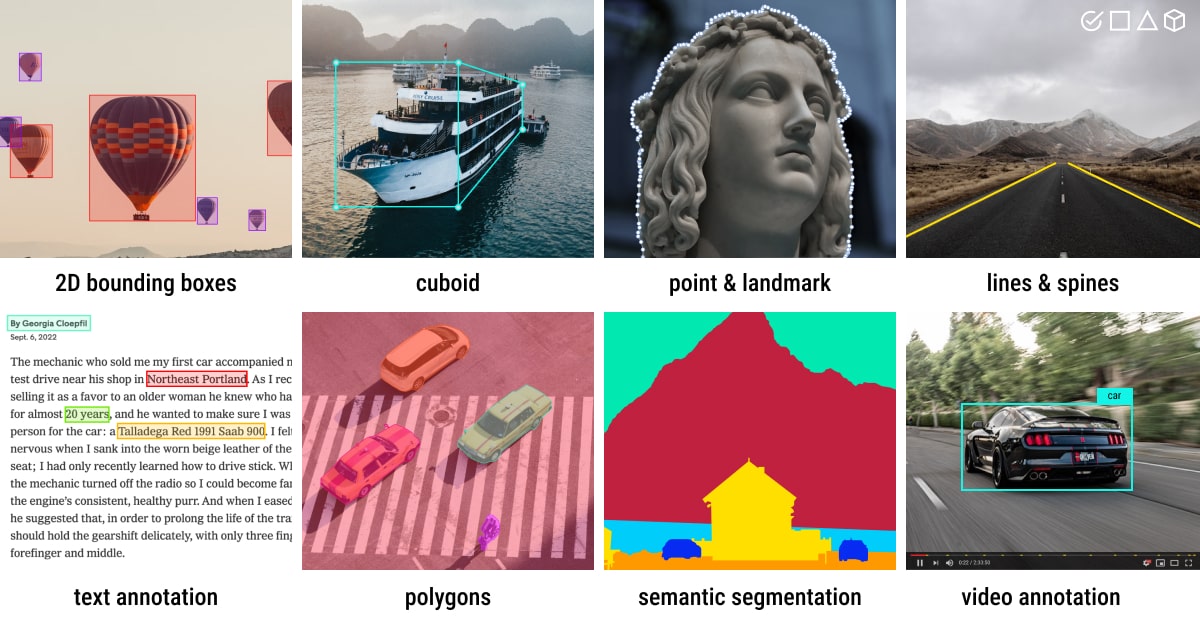

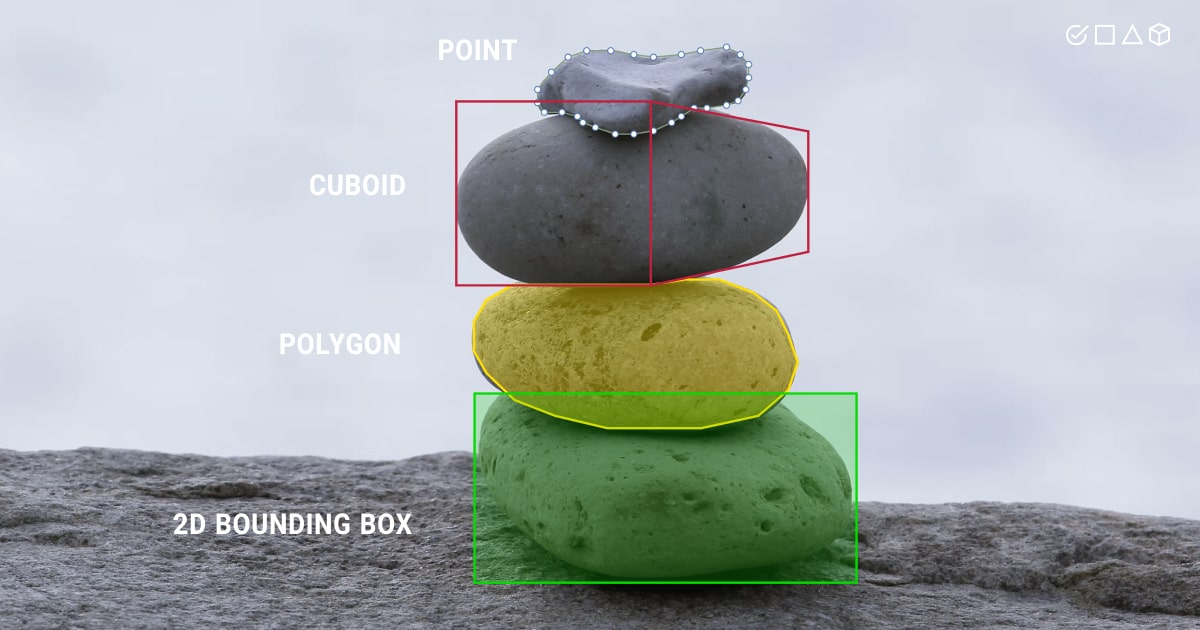

2D boxes (aka bounding boxes)

The most popular type of data annotation in machine learning, which implies drawing two-dimensional frames around the target objects on the image. This annotation technique helps the machine classify these objects into predetermined classes.

Polygons

When you need to capture the shape of the object of interest to enhance the model training, you can use polygonal annotation that draws outlines around it. This type of data annotation enables machines to detect objects by their shapes.

OCR (Optical Character Recognition)

OCR is a tedious process of analyzing images of text and converting them into text data. You can use it to teach an ML algorithm how to convert scanned documents or photocopies into machine-encoded text.

3D Cuboids

Sometimes, you need to add depth to the image, which is when annotators use 3D boxes to draw them around objects. This helps them achieve a three-dimensional representation of these objects, including height, width, and depth, as well as rotation and their relative location.

Semantic Segmentation

In this case, each pixel of the image is assigned a particular class label, after which the pixels belonging to the same class are grouped together using an ML model. You then get a map to train your model on, which contains clusters of various types of objects. Read more in our recent article dedicated to this topic.

Key Points (aka landmark annotation)

With the help of this data annotation type, the machine learning system can plot the crucial points (which is why it’s called “key points”) connected with each other to determine the forms of natural objects. It can be used, for example, for face recognition or tracking the movement of pedestrians on the street.

Image Categorization

One of the most often sought objectives in data annotation and machine learning is the classification of images. With this technique, it’s easier to teach a machine to classify pictures into preset groups so that it can tell what is depicted in that image.

Video Annotation

While annotating a video seems a lot similar to labeling an image, breaking it up into individual frames should come first. After that, you can perform object detection to locate each item on the frames and object segmentation to predict composition.

LiDAR (aka sensor fusion)

Based on 3D point cloud data gathered from multiple sensors, an ML model can predict objects. To increase the fidelity of such predictions, LiDAR and RADAR are employed.

Natural Language Processing

Computer vision is the latest craze to hit on AI, but NLP didn’t lag behind either. There are several useful applications of NLP that we might have never even thought about. They include speech recognition assistants, QR code scanners, and smart CRM systems that build out customer profiles after a single point of contact. All this requires properly annotated data.

Text Classification

Classifying text into groups (based on its content) is an essential part of NLP tasks, such as topic labeling and spam detection.

Audio-To-Text Transcription

The annotation of the transcription data is used to train an ML model to convert audio to text. It's useful in a number of scenarios, such as the transcription of important (public) speeches or meetings. Additionally, voice assistants may be trained using this type of data annotation.

Sentiment Analysis

The textual data can be analyzed not only from a visual point of view, but also from an emotional side of it. Sentiment analysis helps detect the tone of the text message (e.g., positive or negative), which is especially useful for social media/market analysis, learning about customer experiences with the product or service, and measuring brand reputation in the market.

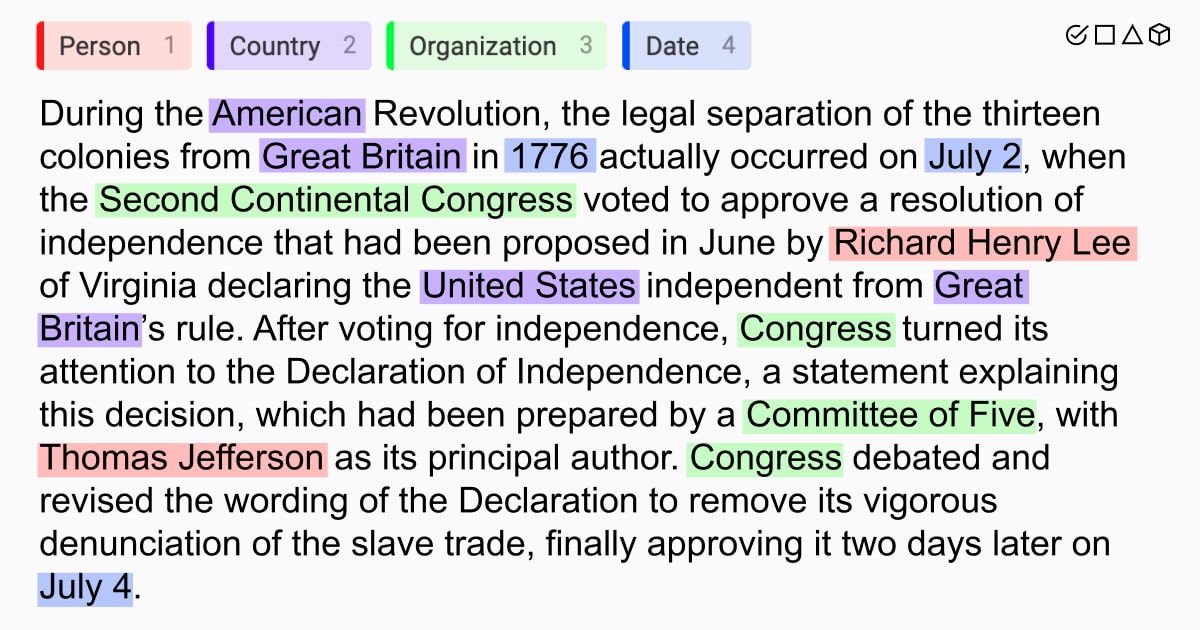

NER (Named Entity Recognition)

Our team has worked with this type of data annotation a lot. The foundation of NER is the identification and classification of entities, which are particular words or phrases inside a text (think names, locations, price, etc.). The ML models built to summarize and search through massive amounts of text benefit from NER.

How Do We Annotate Data at Label Your Data?

The majority of the team has more than ten years of experience dealing with tech-savvy clientele, although our adventure has only been going on for two years. In that time, many clients have been met and thousands of data have been collected and annotated. Hence, we can safely say that we have a wealth of knowledge regarding this ostensibly simple data annotation process to share with you. So, let’s get started!

Collecting Data

First, if the client doesn’t provide their own data for the project, we can help by collecting the necessary data as per the client’s request. The type of data, the volumes, and the strategy of collecting it is usually dictated by the client as well. There’s a golden rule that our team follows at this stage: the more data, the better. In addition, there are a few important factors to keep in mind when collecting data for the AI project, including quality, quantity, relevance, bias, and, of course, security.

Dedication to the Project

Our data annotators work on their projects for 8 to 10 hours each day. It may be technically challenging for the annotators to provide meaning to the pictures, texts, and audio files during the data annotation process. Data labeling, however, is more than just technical work. It is, in fact, a thorough display of organizational hierarchy and authority.

Many data labeling companies focus on providing high-quality data at the most affordable price. Our competitive edge at Label Your Data is the security of the annotation process. We believe that annotators must view the world through the lens of the machines, interpret what they see, and label data appropriately for the machine to be able to perceive the world like humans.

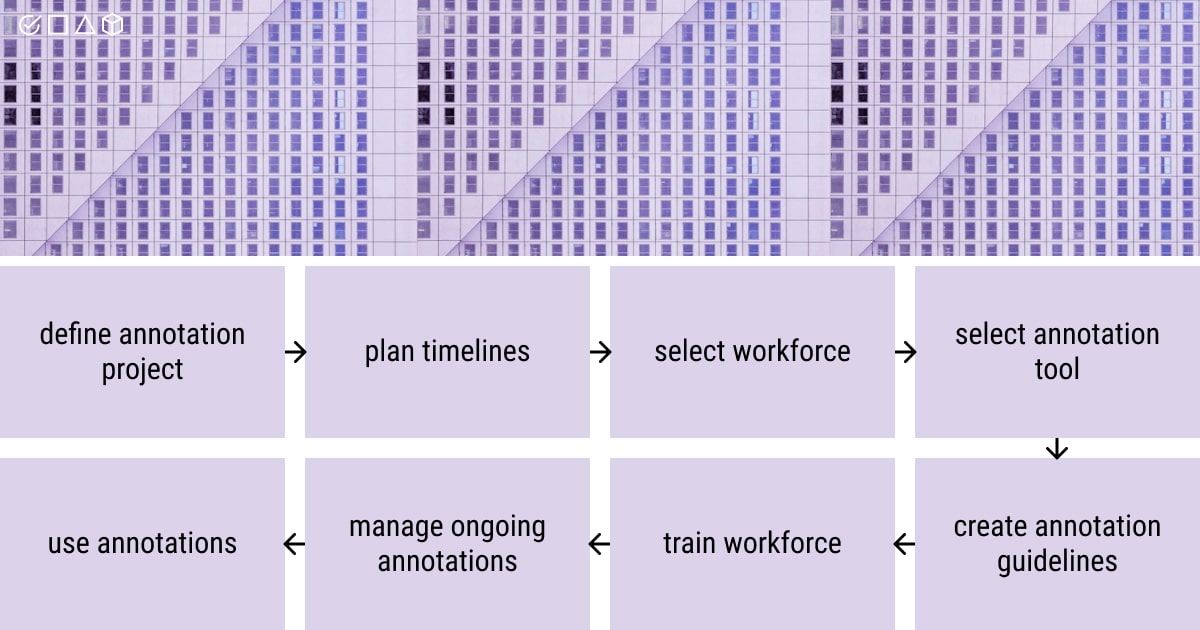

Data Annotation Checklist from Label Your Data

Simply said, all that is required is that you specify the goal of your project. Then you gather the data necessary to complete your ML task. The next step is dataset annotation itself, which is performed by experts in the field and used to train your machine learning model. Looks quite easy, doesn’t it?

Well, yes and no. Our team has prepared a list of the most essential points to consider when working on a data labeling task in AI. Make sure to go through each point for the most accurate and high-performing model.

- Know your data and the annotation type required to process it and prepare for the model training.

- Decide on the data collection methods and the level of automation used throughout the labeling process.

- Team up with the subject matter specialists.

- Take into account the timeline and resources (people and money).

- Learn the workforce options beforehand (it’s always best to let experts do their job).

- Don’t disregard data security and privacy concerns, the task difficulty, data volume (equals annotation volume), and the need for QA.

- Select the labeling tools that best suit your task (or read our article about the most popular annotation tools in the market).

*At Label Your Data, we offer a pilot project, so that the client may determine whether we can meet their needs and whether our annotation services are compatible with their goals and the task at hand.

Managing the Annotation Process

- After the above-mentioned work was completed on our side, we agree on the annotation tools, policies, workflow, and data requirements with our client.

- To meet all client’s expectations, it is crucial to set realistic quality, timing, and productivity objectives for the team based on the pilot project.

- Sometimes, it’s useful to perform quality assurance (QA) to verify that the team creates accurate and reliable annotations.

- Project managers should provide feedback from the client to fix all the mistakes timely (part of QA). They ought to monitor stats on productivity, timing, security, and quality, too.

- Technical support, including hardware and access to professional annotation tools, is critical for the labeling team to avoid any possible delays with the ongoing project.

When Annotation Is Finished

- After the project is finished, it’s crucial to evaluate the overall quality of the annotations to guarantee the quality and validity of the performed annotations before they are sent to the client for usage further down the line.

- Implement mechanisms to identify data drift and abnormalities that can call for an additional annotation process.

- Take into account strategic changes to the annotation workflow if more annotations are required to meet the project’s objectives so that future labeling efforts would be much more effective.

What’s Next? Scaling Up and Building an AI Project!

Data annotation is a hidden gem that underpins almost every project in machine learning. Professionally labeled data drives automation and numerous ML solutions that humanity relies on today.

However, the value of annotated data and, thus, due attention to the labeling process, is still not yet fully understood and recognized. But the fact remains that the accuracy and quality of the data annotation process define the accuracy and quality of the AI project in question. It all boils down to the fundamental axiom in machine learning: garbage in, garbage out.

We hope that this article will help ML engineers and data scientists to learn how to create and manage annotation projects, since they are mostly educated about the machine learning workflow only.

If you want your AI project to succeed, make sure you have enough annotated data to train your model. Label Your Data can help you with this task, so send your request and begin conquering new AI horizons!

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.