8 Document Annotation Tools for NLP Model Training (2025)


TL;DR

- Label Studio leads the open-source field with LLM integration and a Python SDK; its free self-hosted option cuts costs for technical teams.

- Label Your Data provides managed document annotation through expert teams for complex, regulated use cases where self-serve tools fall short.

- Enterprise platforms offer compliance certifications, native PDF support, and GPT-4 pre-annotation at premium pricing.

- LLM-assisted workflows reduce annotation time by 40-70% when humans verify low-confidence predictions and edge cases.

How to Pick a Document Annotation Tool for NLP Training Tasks

Machine learning engineers training NLP models on text-heavy documents need tools that handle more than basic tagging. Your choice depends on task complexity, team size, compliance requirements, and how the document annotation tool integrates with your training pipeline.

Key decision factors:

- Annotation formats: NER (span or character-level), nested entities, relations, document classification, sequence-to-sequence

- PDF and OCR support: Native PDF rendering vs. text extraction, OCR validation workflows, document digitization pipelines for scanned archives

- LLM integration: GPT-4 or open model pre-annotation, confidence thresholding, active learning loops

- ML pipeline fit: Python SDKs, REST APIs, export formats (JSON, JSONL, COCO), webhooks

- Quality control: Inter-annotator agreement (IAA) metrics, review workflows, audit logs

- Hosting: Self-hosted open-source, cloud SaaS, on-premise with compliance (SOC2, HIPAA, GDPR)
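Whichever tool you choose, span annotations usually leave it as character offsets into the document text, and stale or out-of-range offsets are a common source of silent training errors. A minimal sketch of a pre-training check, assuming a hypothetical JSONL export in which each record has a `text` field and a `spans` list with `start`, `end`, `label`, and an optional `text` copy of the surface string (real tools each use their own schema):

```python
import json

def validate_record(record):
    """Return a list of problems found in one exported annotation record."""
    problems = []
    text = record["text"]
    for span in record.get("spans", []):
        start, end, label = span["start"], span["end"], span["label"]
        # Offsets must describe a non-empty range inside the document text.
        if not (0 <= start < end <= len(text)):
            problems.append(f"out-of-range span {start}-{end} ({label})")
            continue
        # If the export also stores the surface string, it must match the offsets.
        if "text" in span and text[start:end] != span["text"]:
            problems.append(
                f"offset mismatch for {label}: {text[start:end]!r} != {span['text']!r}"
            )
    return problems

def validate_jsonl(lines):
    """Yield (line_number, problems) for every JSONL record that fails validation."""
    for line_no, line in enumerate(lines, start=1):
        problems = validate_record(json.loads(line))
        if problems:
            yield line_no, problems

export = [
    '{"text": "Invoice 4471 is due 2025-03-01.", "spans": [{"start": 8, "end": 12, "label": "INVOICE_NO", "text": "4471"}]}',
    '{"text": "Paid in full.", "spans": [{"start": 5, "end": 40, "label": "STATUS"}]}',
]
for line_no, problems in validate_jsonl(export):
    print(line_no, problems)  # 2 ['out-of-range span 5-40 (STATUS)']
```

Running a check like this on every export, rather than trusting the tool, also catches offsets that can drift when documents are re-OCRed or re-extracted.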

Research shows that LLM-assisted workflows significantly reduce data annotation effort when models handle straightforward cases and humans verify low-confidence predictions. For regulated industries, compliance certifications and audit trails are non-negotiable. Also weigh scalability limits (some open-source tools slow down beyond 100k tasks) and hidden costs such as LLM API fees at scale.

Here’s how the leading document annotation tools stack up against these criteria in 2025.

For annotating document-heavy data, the most effective approach has been a combination of visual and semantic annotation. Tools that let you highlight sections, tag relationships between blocks, and preserve the physical layout of the document have been lifesavers. Being able to mark tables, headers, footers, and even things like side notes gives the model a sense of hierarchy.

Label Your Data

Label Your Data delivers document annotation through managed workflows with expert human teams.

Its data annotation platform focuses on computer vision, but its annotation services handle text projects through human-powered pipelines with strict quality assurance (QA). Teams get end-to-end workflows: data ingestion, expert annotation with multi-layer QA, and delivery in their preferred formats (JSON, CSV, COCO).

This service model works well for regulated industries (legal, medical, academic) and projects with messy OCR or multilingual requirements where domain expertise matters.

Best for:

- Teams needing managed annotation services for complex documents

- Regulated use cases requiring compliance-grade QA workflows

- Projects where annotation quality directly determines model performance

Limitations:

- Not a self-serve annotation editor (service team model, not DIY tool)

- Custom pricing based on project scope, not per-task rates

- Full NLP platform tooling currently in development


Label Studio

Label Studio is the most widely adopted open-source annotation platform for NLP, supporting sequence labeling (NER with spans), text classification, relations, and sequence-to-sequence tasks.

It handles multimodal AI data, which suits projects that combine OCR with visual context. For PDFs, it converts documents to paginated images with OCR layers. The platform integrates external ML models via its ML Backend, letting you plug in Hugging Face or OpenAI GPT models for LLM-assisted labeling.

Recent releases introduced an interactive LLM Prompt mode for multistep annotation (NER, document classification, and QA in a single prompt).
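Besides the live ML Backend, Label Studio also accepts pre-annotations imported alongside tasks as `predictions`. A minimal sketch of turning model or LLM output into such a task; the labeling-config names (`label`, `text`), the model version string, and the `to_label_studio_task` helper are assumptions, so check the field layout against the Label Studio import documentation for your version:

```python
import json

def to_label_studio_task(text, entities, model_version="llm-prelabel-v1"):
    """Wrap predicted entities as a Label Studio task with a `predictions` entry.

    `entities` is a list of (start, end, label, score) tuples from your model.
    "label" and "text" must match the names in your labeling config, assumed here
    to be <Labels name="label" toName="text"> and <Text name="text" value="$text">.
    """
    result = [
        {
            "from_name": "label",
            "to_name": "text",
            "type": "labels",
            "value": {"start": start, "end": end, "text": text[start:end], "labels": [label]},
        }
        for start, end, label, _score in entities
    ]
    # Use the weakest entity score as the task score so the least confident
    # pre-annotations can be sorted to the front of the review queue.
    task_score = min((ent[3] for ent in entities), default=1.0)
    return {
        "data": {"text": text},
        "predictions": [{"model_version": model_version, "score": task_score, "result": result}],
    }

task = to_label_studio_task(
    "Acme Corp signed on 2025-01-15.",
    [(0, 9, "ORG", 0.97), (20, 30, "DATE", 0.62)],
)
print(json.dumps([task], indent=2))  # a JSON list of tasks, ready to import
```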

Best for:

- Cost-conscious teams needing full control (free, self-hosted)

- ML engineers prioritizing Python/API ecosystem integration

- Projects requiring LLM-assisted pre-annotation with human review

Limitations:

- Requires PDF-to-image conversion and OCR preprocessing

- Performance limits around 100k tasks per project for multipage documents

- Enterprise features (advanced QA, compliance) require paid tier

SuperAnnotate

SuperAnnotate is an end-to-end commercial platform originally built for computer vision, now supporting comprehensive NLP tasks including sentiment analysis, text classification, NER (with nested entities), relation extraction, coreference resolution, and QA pair labeling.

The Agent Hub enables model-in-the-loop automation: deploy LLMs or custom models for pre-labeling and quality checks. Token-aware span selection auto-adjusts to whole words, preventing partial-token errors common in manual annotation.
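SuperAnnotate’s implementation is not public, but the general idea behind token-aware selection is easy to sketch: expand any span whose edges fall inside a word. This sketch assumes a "word" is a run of regex word characters (`\w+`); a real tool would more likely snap to its own tokenizer’s boundaries.

```python
import re

WORD = re.compile(r"\w+")  # assumption: a "word" is a run of word characters

def snap_to_words(text, start, end):
    """Expand a character span [start, end) so it never cuts a word in half."""
    for m in WORD.finditer(text):
        # A start inside a word moves back to the beginning of that word.
        if m.start() < start < m.end():
            start = m.start()
        # An end inside a word moves forward to the end of that word.
        if m.start() < end < m.end():
            end = m.end()
    return start, end

text = "Payment due within thirty days."
start, end = snap_to_words(text, 2, 13)  # the annotator dragged "yment due w"
print(start, end, repr(text[start:end]))  # 0 18 'Payment due within'
```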

The platform handles multimodal projects, letting teams annotate text extracted from PDFs alongside images in unified workflows. Real-time collaboration features include project tracking, role-based permissions, comment threads, and version control on annotation jobs.

Best for:

- Enterprise teams managing large-scale multimodal projects (text + vision)

- Organizations needing token-level precision for production NER models

- Projects requiring robust collaboration and project management features

Limitations:

- Cloud-only (no self-hosted option)

- No native PDF viewer (requires text extraction first)

- Custom pricing requires sales contact, limited transparency on costs

Kili Technology

Kili Technology is a commercial platform focused on seamless ML pipeline integration, offering comprehensive NLP support including text classification, NER with nested entities, sentiment analysis, and conversation/ranking annotations for LLM fine-tuning (RLHF).

Teams building LLM fine-tuning services use Kili for preference ranking and human feedback workflows. The platform renders PDFs natively and provides OCR validation workflows for scanned documents.

Kili supports hundreds of concurrent annotators with consensus metrics, review workflows, and role-based access. It’s SOC2 and ISO 27001 certified with GDPR and HIPAA compliance, offering cloud SaaS and on-premise deployments.

Best for:

- Teams training LLMs or requiring RLHF annotation workflows

- Regulated industries needing on-premise deployment with compliance certifications

- Projects requiring character-level precision for high-stakes entity extraction

Limitations:

- OCR pipeline setup can add initial implementation friction

- Batch labeling limited to classification tasks (not available for NER or relations)

- Custom pricing (free trial up to 100 annotations, then usage-based tiers)

Encord

Encord Document is part of Encord’s multimodal data development platform, offering unified annotation for documents, images, videos, and DICOM files.

It supports text classification, NER, entity linking, sentiment tagging, QA pairs, and translation, with native PDF rendering that displays text and page images side by side. The platform's Agents framework integrates GPT-4o, Gemini 1.5 Pro, and custom models for auto-labeling and categorization.

Encord emphasizes large-scale data management and multi-user collaboration, including QA dashboards, ontology versioning for hierarchical label schemas, and quality metrics through Encord Active. It’s SOC2 Type II certified and GDPR and HIPAA compliant, with private cloud integration options.

Best for:

- Complex multimodal document pipelines (PDFs with embedded tables, charts, images)

- Enterprise teams needing petabyte-scale dataset management and curation

- Organizations requiring compliance certifications with private cloud deployment

Limitations:

- Enterprise-only pricing (custom contracts, no public free tier)

- Learning curve for setting up ontologies and Agents optimally

- Overkill for simple text-only annotation projects

Doccano

Doccano is a popular open-source web app for text annotation, supporting sequence labeling (NER, POS tagging), text classification (single or multi-label), and sequence-to-sequence tasks like summarization or translation. Recent versions added relation annotation between entities.

It provides a REST API for programmatic data upload, annotation export, and importing model predictions for pre-labeling. Multiple users can work concurrently with simple role distinctions, though quality control is basic: there is no built-in adjudication UI or IAA metrics dashboard.

Teams typically do dual annotation and compute agreement externally. It’s Python/Django-based, with Docker images for deployment.
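For span tasks like NER, where Cohen’s kappa is awkward because there is no fixed list of items to compare, a common external agreement measure is pairwise exact-match F1. A minimal sketch, assuming each annotator’s spans for a document have been exported as (start, end, label) tuples:

```python
def span_f1(spans_a, spans_b):
    """Pairwise exact-match F1 between two annotators' (start, end, label) spans.

    With matched = |A & B|, precision = matched/|B| and recall = matched/|A|,
    so F1 = 2 * matched / (|A| + |B|), which is symmetric in the two annotators.
    """
    a, b = set(spans_a), set(spans_b)
    if not a and not b:
        return 1.0  # neither annotator marked anything: treat as full agreement
    return 2 * len(a & b) / (len(a) + len(b))

ann_1 = [(0, 9, "ORG"), (20, 30, "DATE")]
ann_2 = [(0, 9, "ORG"), (20, 30, "DEADLINE"), (35, 40, "MONEY")]
print(span_f1(ann_1, ann_2))  # 0.4: one exact match across 2 + 3 spans
```

Exact matching is strict: a span with the right boundaries but a different label, like `DATE` vs `DEADLINE` above, counts as a disagreement, which is usually what you want when auditing guidelines.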

Best for:

- Small to mid-sized academic or research projects with budget constraints

- Teams comfortable with self-hosting and scripting model-in-the-loop workflows

- Quick prototypes requiring basic NER or classification annotation

Limitations:

- No native PDF support (requires external OCR and text extraction)

- Performance issues and UI lag reported for datasets over 100k texts

- Basic collaboration features compared to enterprise platforms

TagTog

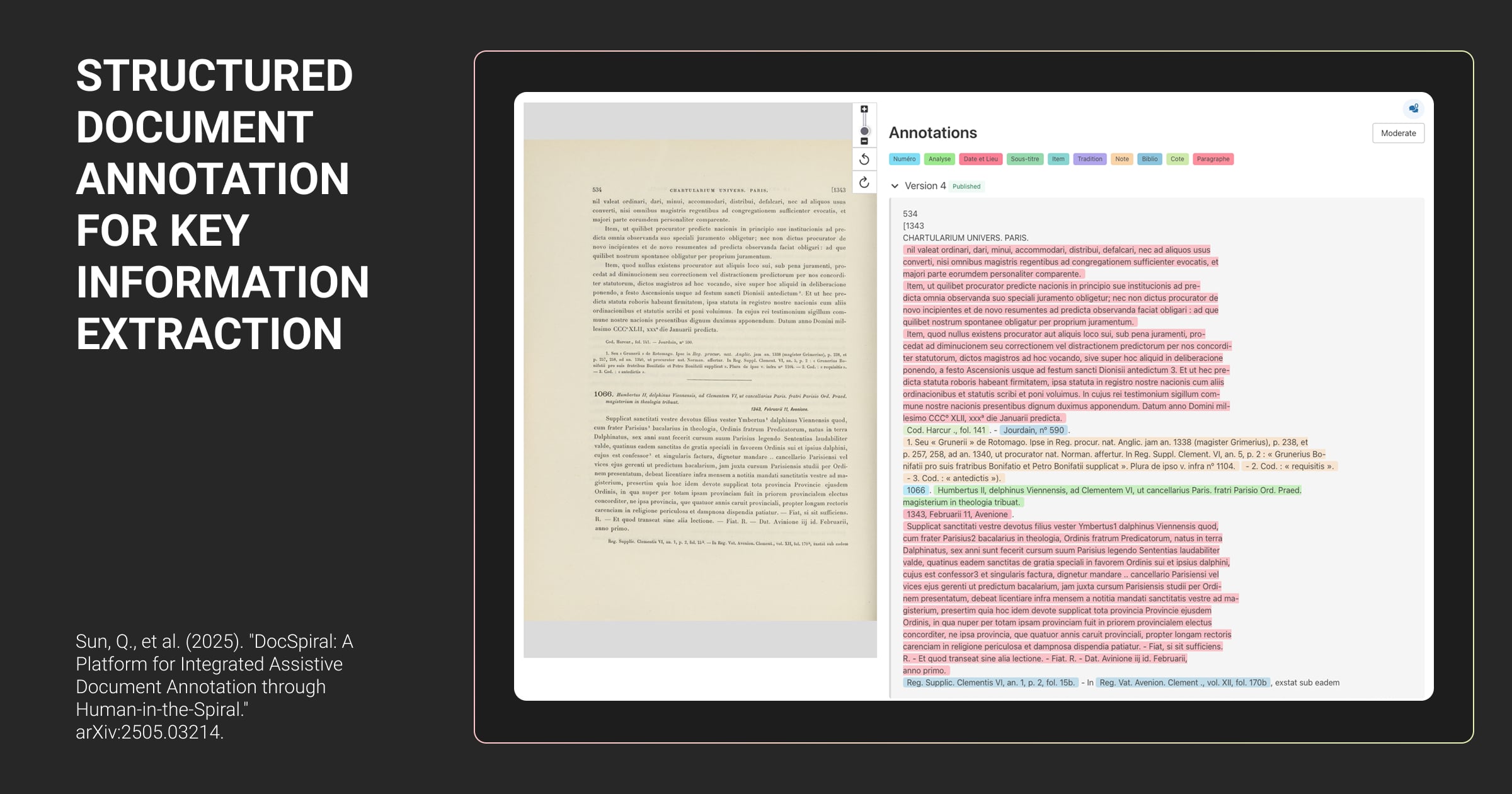

TagTog is a web-based annotation platform (now part of Primer AI) with a built-in PDF viewer for highlighting text directly on native PDFs.

It supports overlapping spans, nested entities, entity attributes, typed relations, and document-level classification, covering NER, entity linking, relation extraction, and document categorization in one tool. The platform includes automatic annotation features and enables active learning loops.
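Overlapping and nested spans are worth supporting explicitly because flat BIO tagging (one tag per token) can represent neither. A minimal sketch of classifying a pair of half-open character spans, which can help decide whether an export needs a span-based or multi-layer format; the category names are our own:

```python
def span_relation(a, b):
    """Classify two half-open (start, end) character spans."""
    (a_start, a_end), (b_start, b_end) = a, b
    if a_end <= b_start or b_end <= a_start:
        return "disjoint"
    # One span fully contains the other (identical spans count as nested).
    if (a_start <= b_start and b_end <= a_end) or (b_start <= a_start and a_end <= b_end):
        return "nested"
    return "overlapping"  # partial overlap: each span crosses the other's boundary

text = "Bank of America Tower"
org = (0, 15)       # "Bank of America"
facility = (0, 21)  # "Bank of America Tower"
print(span_relation(org, facility))  # nested
```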

Multi-user projects include role tracking, IAA metrics, and progress dashboards. Both cloud (hosted) and on-premise editions are available.

Best for:

- Complex NLP projects, such as legal document annotation, that require overlapping spans or nested entities

- Teams working primarily with PDFs needing native document context

- Projects leveraging active learning with custom model integration

Limitations:

- Learning curve due to many UI options and annotation modes

- Closed-source (limited customization beyond API capabilities)

- Free tier limited to 5,000 annotations/month, then $0.03 per annotation

LightTag

LightTag is a team-oriented document annotation software (now under Primer AI) focused on fast, accurate text span annotation and classification with character-level precision.

The editor supports multiple overlapping spans and works without forced token boundaries, which matters for languages with complex tokenization and for code annotation. AI suggestions account for approximately 50% of annotations on average: the system learns to predict labels while annotators verify and correct them.

The platform emphasizes quality control with built-in review modes, annotator agreement analytics, and issue tracking. Multi-language support includes RTL scripts and CJK characters, with cloud SaaS or on-premise deployment options.

Best for:

- Teams prioritizing annotation speed with AI-assisted workflows

- Projects requiring robust QA metrics and team performance tracking

- Multilingual text annotation with complex tokenization requirements

Limitations:

- Text-only (no support for images, PDFs, or multimodal data)

- Closed-source platform (data hosted with Primer unless on-premise)

- Free tier available, paid plans for larger teams with custom enterprise pricing

Quick Comparison of Leading Document Annotation Tools

Choosing between document annotation tools requires evaluating how each tool fits your specific NLP task, team structure, and deployment constraints. The strongest tools for document parsing combine native PDF rendering with OCR validation for accurate text extraction.

The table below summarizes key capabilities across NLP feature completeness, PDF handling, automation support, and hosting flexibility.

| Tool | NLP Features | PDF/OCR Support | LLM Integration | Hosting | Pricing | Best Use Case |
| --- | --- | --- | --- | --- | --- | --- |
| Label Your Data | NER, classification, sentiment, entity linking (via service) | PDF with OCR (managed) | GPT-4 integration (managed workflow) | Cloud (managed service) | Custom (project-based) | Complex documents, regulated industries, managed QA |
| Label Studio | NER (overlapping), relations, classification, seq2seq | PDF to image + OCR layer | Excellent (ML Backend, GPT-4, OpenAI prompts) | OSS + Cloud + Enterprise | Free (OSS), $149/mo+ (Cloud) | Cost-conscious teams, Python/API integration, self-hosted control |
| SuperAnnotate | NER (nested), sentiment, classification, relations, translation, QA | Text extraction (no native PDF viewer) | Agent Hub (LLM pre-labeling, ChatGPT) | Cloud + On-premise | Free tier + Custom enterprise | Enterprise multimodal projects, token-level precision |
| Kili Technology | NER (nested, character-level), relations, classification, RLHF/SFT | Native PDF + OCR validation | ChatGPT integration (70% time savings) | Cloud + On-premise | Free trial (100 annotations), Custom enterprise | LLM fine-tuning, regulated industries, character-level precision |
| Encord | NER, classification, sentiment, QA, translation, RLHF | Native PDF + multimodal | Agents (GPT-4o, Gemini 1.5 Pro) | Cloud + Private cloud | Custom enterprise | Multimodal pipelines, petabyte-scale, compliance-critical |
| Doccano | NER, classification, relations, seq2seq | Plain text only (no PDF) | Limited (API for model imports) | Self-hosted (OSS) | Free | Academic projects, small teams, basic NER/classification |
| TagTog | NER (overlapping), relations, entity linking, doc classification | Native PDF viewer | Internal ML + dictionary + API models | Cloud + On-premise | Free (5k/mo), $0.03/annotation | Complex annotations, PDFs, active learning loops |
| LightTag | NER, classification, relations (character-level) | Text only (no PDF) | AI suggestions (~50% automation) | Cloud + On-premise | Free tier, Custom enterprise | Team efficiency, QA focus, multilingual text |

The table reveals clear patterns in how the tools are positioned:

- Open-source options offer flexibility and cost control but require DevOps resources

- Enterprise platforms provide compliance certifications and LLM integration at premium pricing

- Service-based models handle complexity through managed teams with human experts

For ML engineers, the choice of document annotation tools hinges on technical capacity, compliance requirements, and annotation volume.

The key to high-leverage document AI is combining layout, structure, semantics, and relations within one governed pipeline. With prelabeling, active learning, and programmatic rules, we can keep quality high while driving annotation cost per page steadily down.

Best Practices for Document Annotation in 2025

High-quality document annotation requires systematic workflows that balance automation speed with human precision and maintain audit trails for reproducibility.

Use LLM-assisted pre-annotation with human verification

LLM pre-annotation can cut annotation time by 40-70% compared with fully manual labeling. Set confidence thresholds: auto-accept high-confidence predictions and route ambiguous cases to human review. Human-in-the-loop ML workflows catch the edge cases that automation misses (sarcasm, domain-specific meanings, rare outliers).
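A minimal sketch of the thresholding step, assuming each prediction carries a confidence score in [0, 1]. The `Prediction` type, the `route` helper, and the 0.9 cutoff are illustrative; the right threshold depends on how well your model’s scores are calibrated.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    doc_id: str
    label: str
    confidence: float  # model- or LLM-reported score in [0, 1]

def route(predictions, threshold=0.9):
    """Auto-accept confident predictions; queue the rest for human review."""
    accepted, review = [], []
    for p in predictions:
        (accepted if p.confidence >= threshold else review).append(p)
    # Least confident first: these are the items most likely to be wrong.
    review.sort(key=lambda p: p.confidence)
    return accepted, review

preds = [
    Prediction("doc-1", "INVOICE", 0.97),
    Prediction("doc-2", "CONTRACT", 0.55),
    Prediction("doc-3", "RECEIPT", 0.81),
]
accepted, review = route(preds)
print([p.doc_id for p in accepted])  # ['doc-1']
print([p.doc_id for p in review])    # ['doc-2', 'doc-3']
```

Because LLM confidence is not always well calibrated, it is also worth spot-checking a random sample of the auto-accepted items.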

Enforce token-level or character-level span control

Precise boundary selection matters for model performance. Tools that force word-boundary selection create problems for subword tokenization and non-English languages. Use character-level precision for legal and financial documents, where a single-character error can invalidate an extraction. Tools that preserve the original document layout also give annotators the context they need to place span boundaries accurately.
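To see why boundaries matter downstream, here is a minimal sketch that projects character-level spans onto tokens as BIO tags, the usual input for token-classification NER. Whitespace tokenization is an assumption made for brevity; with a subword tokenizer you would use its character offsets instead (Hugging Face fast tokenizers return them with `return_offsets_mapping=True`).

```python
import re

def bio_tags(text, spans):
    """Project character-level (start, end, label) spans onto whitespace tokens as BIO tags.

    Raises ValueError when a span boundary falls inside a token, the kind of
    mismatch that otherwise silently corrupts token-classification data.
    """
    tokens = [(m.start(), m.end(), m.group()) for m in re.finditer(r"\S+", text)]
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        # Tokens that share at least one character with the span.
        covered = [i for i, (ts, te, _) in enumerate(tokens) if ts < end and te > start]
        for i in covered:
            ts, te, tok = tokens[i]
            if ts < start or te > end:
                raise ValueError(f"span {start}-{end} cuts token {tok!r}")
        for n, i in enumerate(covered):
            tags[i] = ("B-" if n == 0 else "I-") + label
    return [(tok, tag) for (_, _, tok), tag in zip(tokens, tags)]

text = "Total due: $1,250.00 by March 3."
print(bio_tags(text, [(11, 20, "AMOUNT")]))
# [('Total', 'O'), ('due:', 'O'), ('$1,250.00', 'B-AMOUNT'), ('by', 'O'), ('March', 'O'), ('3.', 'O')]
try:
    bio_tags(text, [(24, 31, "DATE")])  # "March 3" without the trailing period
except ValueError as err:
    print(err)  # span 24-31 cuts token '3.'
```

The failing call shows the problem in miniature: a correct character-level span ("March 3") cannot be represented by a tokenizer that keeps the period attached to "3", so either the tokenization or the span has to change before training.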

Measure inter-annotator agreement rigorously

Calculate Cohen’s kappa or gamma scores to track consistency. High IAA indicates clear guidelines; low IAA flags ambiguous instructions. Double-annotate a subset of items and have experts adjudicate conflicts.
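For two annotators assigning one label per item (for example, document classes), Cohen’s kappa corrects raw agreement for chance: κ = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected from each annotator’s label frequencies. A minimal sketch with made-up labels:

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items, in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies, summed over labels.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both used one identical label throughout; kappa is undefined
    return (p_o - p_e) / (1 - p_e)

a = ["invoice", "invoice", "contract", "receipt", "contract", "invoice"]
b = ["invoice", "contract", "contract", "receipt", "contract", "invoice"]
print(round(cohens_kappa(a, b), 3))  # 0.739 (p_o = 5/6, p_e = 13/36)
```

If you already depend on scikit-learn, `sklearn.metrics.cohen_kappa_score` computes the same statistic.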

Maintain annotation versioning and audit logs

Track changes to annotation schemas and datasets over time. Audit logs record who labeled what and when (required in regulated industries). For healthcare, finance, or legal documents, consider tools with SOC2, HIPAA, or GDPR compliance.
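Tools with built-in audit logs handle this for you. If you also keep exported labels in your own storage, a minimal sketch of a tamper-evident log chains each event to the hash of the previous one, so edited or reordered entries become detectable. The event fields, helper names, and in-memory list are assumptions; in practice you would write to append-only storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first event

def _digest(body):
    """SHA-256 over a canonical JSON encoding of an event without its hash."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def append_event(log, annotator, item_id, label, schema_version):
    """Append an annotation event that commits to the hash of the previous one."""
    event = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "annotator": annotator,
        "item_id": item_id,
        "label": label,
        "schema_version": schema_version,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    event["hash"] = _digest(event)
    log.append(event)
    return event

def verify(log):
    """Recompute the chain; an edited or reordered event breaks it."""
    prev_hash = GENESIS
    for event in log:
        body = {k: v for k, v in event.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != event["hash"]:
            return False
        prev_hash = event["hash"]
    return True

log = []
append_event(log, "ann_07", "doc-123", "INVOICE", "schema-v2")
append_event(log, "ann_12", "doc-123", "RECEIPT", "schema-v2")
print(verify(log))  # True
log[0]["label"] = "CONTRACT"  # simulate a silent edit
print(verify(log))  # False
```

A chain like this detects edits, not a wholesale rewrite of every hash, so the latest hash should also be stored somewhere the annotators cannot modify.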

Prioritize data security and ethical considerations

Document datasets for machine learning often contain sensitive information. Use tools with role-based access control, encryption, and PII detection features. When using LLMs for pre-annotation, make sure sensitive data doesn’t reach external APIs (consider on-premise models for confidential projects).
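Where an external LLM is unavoidable, one option is to mask obvious identifiers before the text leaves your environment. A minimal sketch with a few regular expressions; the patterns, placeholder format, and `redact`/`restore` helpers are illustrative assumptions, not a substitute for a proper PII detection tool:

```python
import re

# Illustrative patterns only: real PII detection needs much broader coverage
# (names, addresses, account numbers) and usually a dedicated NER model.
# SSN runs before PHONE because the broad phone pattern would also match SSNs,
# and it also catches some dates, the safer failure mode before an external call.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def redact(text):
    """Replace PII matches with numbered placeholders; return text and mapping."""
    mapping = {}
    for kind, pattern in PII_PATTERNS.items():
        def placeholder(m, kind=kind):
            key = f"[{kind}_{len(mapping)}]"
            mapping[key] = m.group()
            return key
        text = pattern.sub(placeholder, text)
    return text, mapping

def restore(text, mapping):
    """Put the original values back, e.g. into LLM output that echoes placeholders."""
    for key, value in mapping.items():
        text = text.replace(key, value)
    return text

original = "Contact Jane at jane.doe@acme.com or +1 (555) 123-4567. SSN 123-45-6789."
safe, mapping = redact(original)
print(safe)  # Contact Jane at [EMAIL_0] or [PHONE_2]. SSN [SSN_1].
```

Note that the name "Jane" passes through untouched, and that any character offsets the LLM returns refer to the redacted text, so they must be mapped back before you import them as pre-annotations.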

The most impactful shift we made was moving from simple entity labeling to a relational annotation approach. Instead of just drawing bounding boxes around a 'due date' and an 'invoice number,' we configured our tasks to force annotators to explicitly draw a directional link between the two. This seemingly small change fundamentally alters the task from identification to interpretation.
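One way to enforce that kind of directional link is to validate relations against a schema of allowed (head label, relation, tail label) triples, so a link drawn in the wrong direction is flagged rather than silently accepted. A minimal sketch; the entity labels, relation names, and `check_relations` helper are hypothetical:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Entity:
    id: str
    label: str
    text: str

@dataclass(frozen=True)
class Relation:
    head: str  # id of the source entity (where the arrow starts)
    tail: str  # id of the target entity (where the arrow points)
    type: str

# Hypothetical schema of allowed directed links: (head label, relation, tail label).
ALLOWED = {
    ("INVOICE_NUMBER", "DUE_ON", "DUE_DATE"),
    ("INVOICE_NUMBER", "BILLED_TO", "CUSTOMER"),
}

def check_relations(entities, relations):
    """Return relations whose label pair or direction the schema does not allow."""
    by_id = {e.id: e for e in entities}
    return [
        r for r in relations
        if (by_id[r.head].label, r.type, by_id[r.tail].label) not in ALLOWED
    ]

entities = [
    Entity("e1", "INVOICE_NUMBER", "INV-4471"),
    Entity("e2", "DUE_DATE", "2025-03-01"),
]
relations = [Relation("e1", "e2", "DUE_ON"), Relation("e2", "e1", "DUE_ON")]
print(check_relations(entities, relations))  # flags only the reversed e2 -> e1 link
```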

About Label Your Data

If you choose to delegate document annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance with a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, including your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

What is document annotation?

Document annotation is the process of labeling text in documents, such as PDFs, scans, or plain text files, to train NLP models. Annotators mark entities, relationships, sentiment, or categories that machine learning algorithms use to learn patterns for tasks like entity recognition, classification, or data extraction.

How do you annotate a document (example workflow)?

- Choose an annotation tool that fits your task (NER, classification, relations).

- Upload your document, define your label schema (entity types, categories), and mark relevant text spans or assign document-level labels.

- Export annotations in a format compatible with your ML pipeline (JSON, JSONL, COCO).

- For production workflows, use LLM pre-annotation followed by human review.

What is annotation in a document?

Annotation in documents refers to adding structured labels or metadata to text that makes it machine-readable. This includes tagging named entities (people, organizations, locations), marking relationships between entities, assigning sentiment or intent labels, or categorizing entire documents by topic or class.

What tools support PDF annotation?

Document annotation software like TagTog, Kili Technology, and Encord Document offers native PDF rendering. Label Studio converts PDFs to paginated images with OCR layers. SuperAnnotate handles text extracted from PDFs. Doccano requires external OCR preprocessing. For complex layouts with tables or multi-column text, choose tools with native PDF support.

What’s the best free document annotation tool?

Among the best free document annotation tools are Label Studio, Doccano, and TagTog. Label Studio (open-source) offers the most complete free feature set: NER, relations, classification, LLM integration, and a Python SDK. Doccano is another free option for basic text annotation but lacks PDF support. TagTog provides a generous free tier (5,000 annotations/month).

Can I use GPT or LLMs to pre-annotate documents?

Yes. Modern tools like Label Studio, Kili Technology, SuperAnnotate, and Encord integrate GPT-4 or other LLMs for pre-annotation. LLMs generate first-pass labels that humans verify and correct. Research shows this reduces annotation time by 40-70% while maintaining quality. Always implement human review, because LLMs can hallucinate labels and miss edge cases.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.