Snorkel AI Competitors: Shortlist for ML Teams

Table of Contents

- TL;DR

- Why ML Teams Look Beyond Snorkel AI

- How to Compare Snorkel AI Competitors

- Service-First Snorkel AI Competitors with Managed Labeling and QA Expertise

- Platform-First Snorkel AI Competitors for In-House Labeling Teams

- Ecosystem Competitor for Cloud-Based MLOps Pipelines

- How to Pick the Right Snorkel AI Alternative for Your Workflow

- About Label Your Data

- FAQ

TL;DR

- Snorkel AI’s niche is weak supervision and programmatic labeling (labeling functions, rapid dataset iteration) for data-centric ML teams.

- Teams still compare alternatives for multimodal coverage, human-in-loop QA, compliance, and clearer cost models.

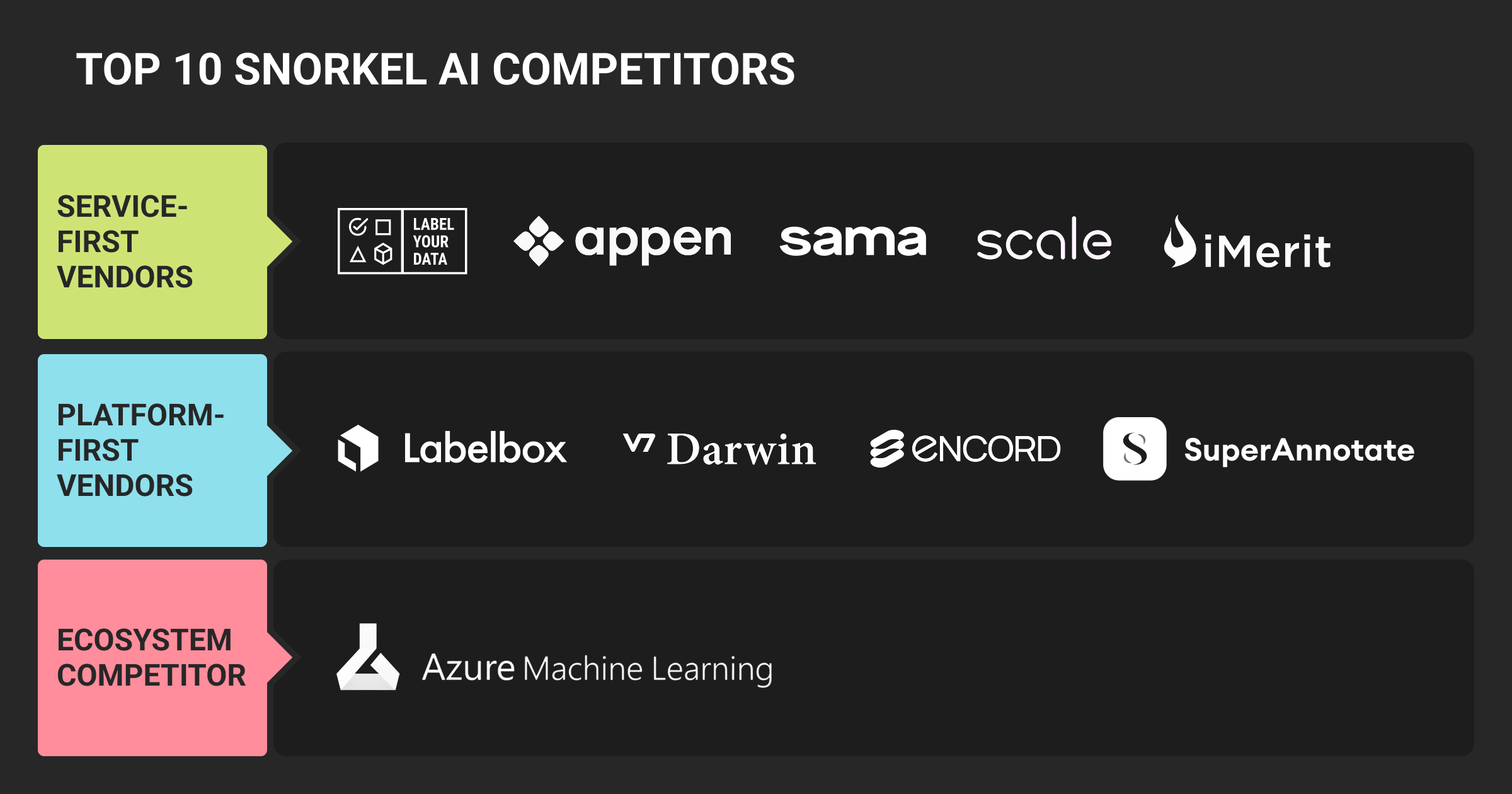

- Service-first rivals (Label Your Data, Scale AI, iMerit, Appen, Sama) trade programmatic speed for expert review, SLAs, and managed quality.

- Platform-first tools (Labelbox, SuperAnnotate, Encord, V7) prioritize in-house control, APIs, and active-learning workflows.

- Azure ML is the key ecosystem option if deep cloud integration beats best-of-breed tooling in your org.

Why ML Teams Look Beyond Snorkel AI

Snorkel AI introduced programmatic labeling and weak supervision, helping engineers generate labeled data efficiently with code instead of manual annotation. This method accelerates model development, especially in text and document-heavy use cases. For many teams, Snorkel remains one of the fastest paths to building high-quality training machine learning datasets.

Still, engineers often look elsewhere. Snorkel’s automation performs best in structured text but falls short with complex or multimodal data like image recognition, audio, or video. These domains rely on contextual understanding and human judgment, where programmatic labeling lacks nuance. As models expand into real-world edge cases, human annotation becomes essential for maintaining accuracy and reliability.

Scalability and pricing also influence the search for alternatives. Snorkel AI’s enterprise focus can be restrictive for smaller teams, and its integration options are limited for organizations running hybrid MLOps stacks. Competitors such as Label Your Data, Scale AI, and Labelbox offer transparent data annotation pricing, managed human review, and smoother integration with cloud-based pipelines.

Many teams move beyond Snorkel AI once automation alone stops improving results. Hybrid solutions with human-powered data annotation services offer better control over data quality, auditability, and scalability. Unlike Snorkel’s programmatic-only labeling approach, these alternatives combine automation with expert QA for higher accuracy.

The most important factor when evaluating a Snorkel AI rival is how well the platform fits into the team’s workflow. Transparency in data labeling, model monitoring, and versioning often determines scalability. I’d rather have an open, stable tool that engineers can build on than something that feels perfect until you need to make a single adjustment.

How to Compare Snorkel AI Competitors

When evaluating Snorkel AI competitors, ML teams should focus on how well each solution fits their workflow, data types, and quality expectations. Not every tool serves the same purpose. Some prioritize automated data annotation and scalability, while others excel at human oversight and precision.

Key criteria for comparison:

- Automation versus human QA: Determine how much manual verification is possible and how errors are flagged before deployment

- Supported data types: Verify support for text, image, video, or multimodal annotation pipelines

- Integration and deployment: Check whether APIs align with your MLOps tools or preferred cloud environments

- Customization and workforce options: Assess flexibility in handling domain-specific or regulated data

- Pricing and scalability: Compare licensing, per-label, or managed-service models for long-term cost predictability

Choosing the right alternative depends on your project’s maturity.

Startups benefit from easy-to-use platforms with quick setup; enterprises gain from hybrid systems combining automation with expert QA; regulated domains rely on secure, auditable pipelines with clear documentation and human supervision.

When evaluating AI platforms, the real ROI comes from how well they adapt over five or ten years, not just the first deployment. Open developer ecosystems and strong community support are non-negotiable for staying competitive. Combine that with enterprise-grade compliance, and you have a foundation that scales without slowing innovation.

Service-First Snorkel AI Competitors with Managed Labeling and QA Expertise

Service-first vendors combine automation with managed human QA. They’re best for teams working with ambiguous, regulated, or high-risk data, where annotation quality and auditability outweigh speed.

Label Your Data

Label Your Data operates as a hybrid data annotation company that integrates semi-automation with human-in-the-loop QA. It supports text, image, audio, and video labeling through a secure data annotation platform designed for enterprise compliance. The team specializes in edge-case triage, ambiguity resolution, and dataset evaluation for production-grade AI systems.

- Strengths: Consistent QA, flexible scaling, transparent pricing, and support for multimodal data

- Limitations: Requires integration for full automation workflows

- Best for: AI/ML teams prioritizing accuracy, compliance, and human-supervised data pipelines

Scale AI

Scale AI delivers full-stack data operations pipelines for large enterprises. Its combination of automation and a vast managed workforce enables high-volume labeling across text, image, video, and 3D data. The company provides programmatic quality assurance and integrates seamlessly with existing ML workflows.

- Strengths: Enterprise throughput, reliability under scale, support for synthetic data generation

- Limitations: Cost and complexity for smaller teams

- Best for: Organizations managing massive datasets or building foundation models at scale

iMerit

iMerit focuses on domain-trained annotation for specialized sectors such as finance, healthcare, and geospatial analytics. It emphasizes dataset security and workforce vetting, ensuring compliance with ISO and GDPR standards. The company offers consultative project design for high-risk domains and is often used by clients who require controlled environments.

- Strengths: Secure infrastructure, experienced annotators, and strong vertical expertise

- Limitations: Less suitable for rapid prototyping or dynamic project pivots

- Best for: Enterprises that need quality-controlled annotation under strict governance

Appen

Appen runs one of the world’s largest annotation workforces, supporting over 180 countries and 235 languages. It provides data collection services and large-scale labeling but can suffer from inconsistency on nuanced or technical projects. Recent platform improvements added auto-QA and crowd quality scoring.

- Strengths: Global coverage, multilingual data, scalable pricing tiers

- Limitations: Variable quality control, slower turnaround for edge cases

- Best for: Teams that need broad language coverage or early-stage dataset creation at scale

Sama

Sama centers its model on ethical AI and secure data pipelines. It uses a managed workforce trained under ISO 9001 and GDPR compliance, making it one of the few vendors with traceable QA chains. Sama’s workflows combine automation and expert reviewers to ensure precision in image recognition, autonomous vehicle, and medical imaging tasks.

- Strengths: Ethical workforce, transparent process, measurable QA metrics

- Limitations: Slightly slower turnaround due to layered review steps

- Best for: Enterprises seeking compliance-ready datasets and socially responsible sourcing

Platform-First Snorkel AI Competitors for In-House Labeling Teams

Platform-first competitors build products that let ML teams own their annotation pipelines.

These tools focus on automation, collaboration, and MLOps integration — ideal for engineers who want full control and flexibility rather than managed services.

Labelbox

Labelbox is an enterprise data-centric platform that combines annotation tools, automation APIs, and quality assurance workflows. It’s used by ML engineers to create, manage, and iterate datasets directly within their MLOps stack. The platform supports text, image, video, and 3D modalities and integrates easily with model training pipelines.

- Strengths: Active learning integrations, API-first design, advanced consensus metrics for QA.

- Limitations: High cost at scale and steep learning curve for non-technical users.

- Best for: Teams with strong engineering capacity looking for deep customization and automation-first pipelines.

For a deeper look at other annotation platforms, see our guide on Labelbox competitors.

SuperAnnotate

SuperAnnotate is a visual-first annotation tool designed for computer vision and multimodal AI projects. It offers powerful auto-labeling features, version control, and collaboration tools for distributed annotation teams. Optional workforce support is available, but the platform’s core strength lies in its annotation efficiency and workflow management.

- Strengths: Excellent for image and video labeling, built-in auto-annotation, clean UI for QA.

- Limitations: Limited text or audio annotation capabilities.

- Best for: Vision-heavy projects in manufacturing, robotics, or medical imaging.

Encord

Encord takes a data-centric, active learning approach, blending annotation with model evaluation and continuous improvement. Its workflows are built around feedback loops, where models automatically flag low-confidence predictions for review. Encord supports healthcare, robotics, and geospatial use cases, with a strong emphasis on governance and reproducibility.

- Strengths: Integrated monitoring, customizable workflows, scalable annotation for evolving datasets

- Limitations: Complex setup for smaller teams and fewer pre-built templates than competitors

- Best for: Teams running iterative ML pipelines that require model-driven labeling and validation

V7 Darwin

V7 Darwin is known for speed and automation in computer vision annotation. It combines model-assisted labeling with built-in dataset versioning and validation. The platform offers support for segmentation, object detection, and classification, plus integrations for MLOps tools like Weights & Biases.

- Strengths: Fast labeling workflows, dataset versioning, and strong support for image recognition pipelines

- Limitations: Limited NLP or tabular data support, paid add-ons for advanced features

- Best for: AI teams focusing on visual ML tasks that demand quick iteration and automation-first workflows

These platform-first competitors appeal to ML teams that prefer autonomy and fine-grained control over data annotation. They trade managed expertise for scalability, flexibility, and API-driven integration, giving engineers more freedom to adapt the workflow to their own infrastructure.

Ecosystem Competitor for Cloud-Based MLOps Pipelines

Some ML teams prefer annotation tools that are built directly into their cloud environments rather than managed separately.

Ecosystem vendors integrate data labeling into full ML stacks: combining dataset preparation, training, deployment, and monitoring within a single interface. This approach minimizes tool fragmentation and simplifies compliance, versioning, and governance.

Azure Machine Learning

Azure ML offers integrated data labeling within Microsoft’s end-to-end MLOps suite, allowing teams to annotate, train, and deploy models in the same environment. The labeling component supports text, image, and object detection tasks, and connects directly to Azure Data Lake and Cognitive Services. Built-in security and enterprise compliance controls make it especially attractive for regulated industries like healthcare or finance.

- Strengths: Seamless integration with Azure ecosystem, strong security, and native model deployment

- Limitations: Less flexible than standalone annotation platforms and limited collaboration features for large annotation teams

Best for: Enterprises already using Azure infrastructure that need a compliant, centralized labeling pipeline

To future-proof AI strategy and ensure long-term ROI, teams should prioritize Snorkel AI competitors that offer open, modular architectures. Platforms that integrate cleanly with machine learning frameworks, MLOps pipelines, and human-in-the-loop annotation strategies — without vendor lock-in — deliver the most lasting value.

How to Pick the Right Snorkel AI Alternative for Your Workflow

The right platform depends on how your machine learning algorithm processes and learns from labeled data. Each vendor type solves a different problem: from full-service annotation to self-managed tooling or integrated cloud ecosystems.

Service-first vendors like Label Your Data, Scale AI, and Sama are best for teams handling ambiguous or compliance-sensitive data. They combine managed workforces with automation to ensure quality, auditability, and domain expertise. This model works well for regulated sectors or when labeling errors carry significant risk.

Platform-first vendors such as Labelbox, SuperAnnotate, and V7 Darwin appeal to ML engineers who want control over data pipelines. They provide automation APIs, workflow customization, and analytics for continuous QA. This option suits organizations that already have internal annotation teams or MLOps maturity.

Ecosystem vendors like Azure ML fit enterprises that value integration and security over flexibility. They streamline data annotation within a unified cloud infrastructure, reducing friction between model training, deployment, and governance.

In short:

- Startups or research teams benefit most from platform-first flexibility

- Enterprises working with regulated or high-stakes data prefer service-first reliability

- Large organizations standardized on a cloud stack gain efficiency from ecosystem integration

Each category addresses a distinct stage of ML maturity. Choosing the right one ensures scalability, compliance, and dependable model performance.

About Label Your Data

If you choose to delegate data labeling, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

Check our performance based on a free trial

Pay per labeled object or per annotation hour

Working with every annotation tool, even your custom tools

Work with a data-certified vendor: PCI DSS Level 1, ISO:2700, GDPR, CCPA

FAQ

What does Snorkel AI do?

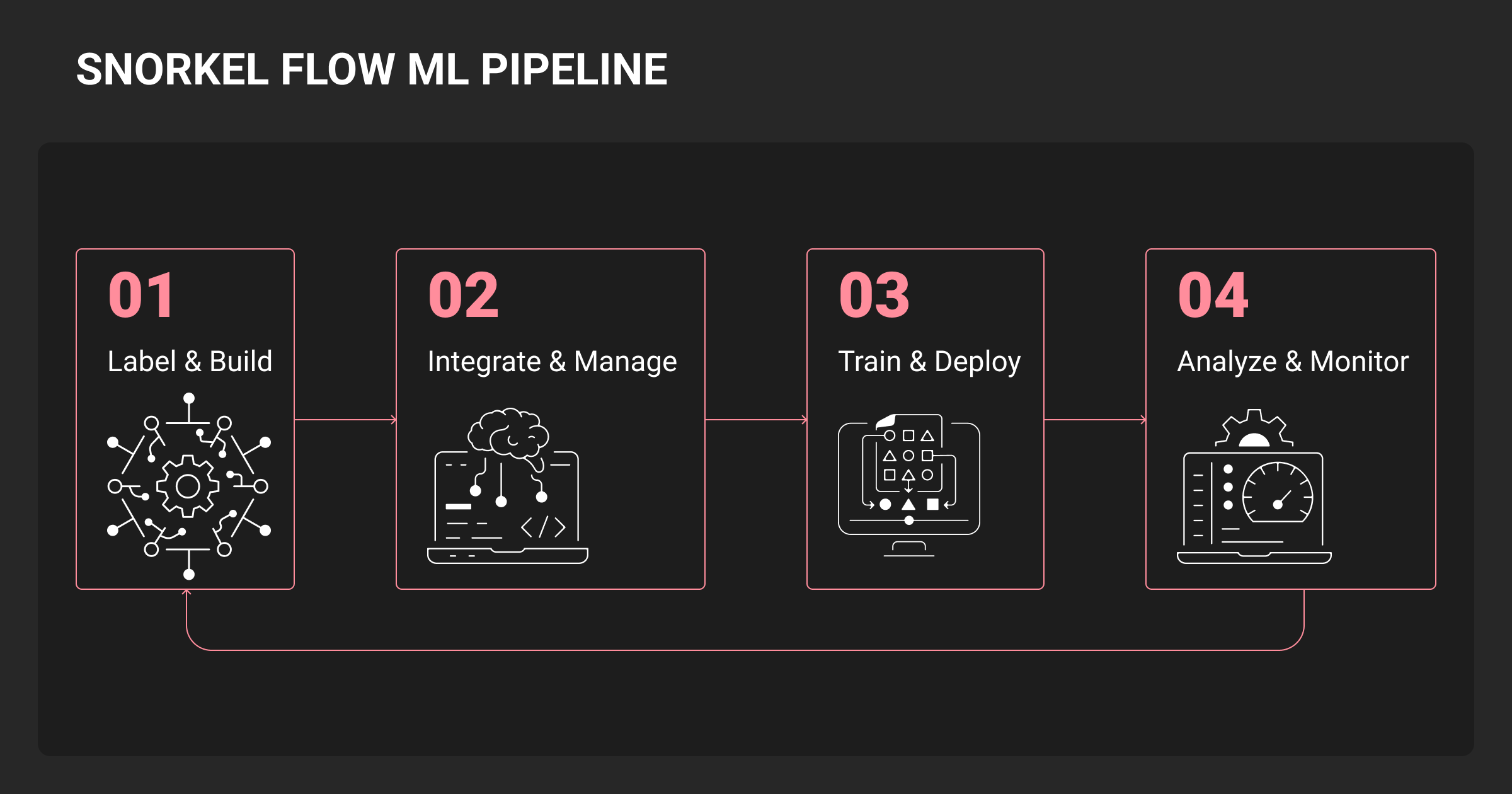

Snorkel AI automates dataset labeling through weak supervision — using rules, pre-trained models, and heuristics instead of manual labeling. It’s designed for ML teams that need to accelerate data preparation for NLP, tabular, or document-based tasks, reducing reliance on large human annotation teams.

What companies are similar to Snorkel AI?

Competitors fall into three groups: service-first providers (Label Your Data, Scale AI, iMerit, Appen, Sama), platform-first tools (Labelbox, SuperAnnotate, Encord, V7), and one major ecosystem alternative (Azure ML). These vendors differ in how much they automate vs. add human QA, and in integration depth with your MLOps stack.

What type of company is Snorkel AI?

A data-centric ML platform vendor focused on weak supervision and programmatic labeling. Teams write labeling functions to generate training data, then use Snorkel Flow for curation and model training. Typical adopters are enterprise ML teams working in NLP and document-heavy domains that value speed and governance.

Who are the main Snorkel AI competitors?

Key Snorkel AI competitors include Labelbox, Scale AI, SuperAnnotate, Encord, and Label Your Data. These platforms provide more visual or hybrid human-in-loop labeling, helping teams manage image, video, and multi-modal data — areas where Snorkel’s automation alone is insufficient.

Can Snorkel AI be used for image data?

Only partially. Snorkel AI supports some image data labeling via weak supervision but lacks robust pipelines for image recognition or pixel-level annotation. Teams typically combine Snorkel with specialized data annotation platforms such as Label Your Data or V7 for visual datasets.

Is Snorkel AI a good company?

For teams that want code-driven data development, Snorkel is strong: programmatic labeling, data curation, and model training in one environment (Snorkel Flow). It speeds iteration and treats data governance like software. The trade-off is less built-in human expertise for nuanced or regulated work, so some teams layer managed services on top.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.