Top Appen Alternatives for Data Labeling and AI Training

Table of Contents

- TL;DR

- Why ML Teams Are Looking for Companies Like Appen

- How We Compared Appen Alternatives for Data Labeling

- Top Annotation Sites Like Appen for AI and ML Teams

- How to Run a Vendor Pilot (and Not Regret the Contract)

- Quick Appen Alternatives Overview

- Key Takeaways for ML Teams

- About Label Your Data

- FAQ

TL;DR

- Appen’s QA gaps and opaque processes are driving ML teams to hybrid and platform-first alternatives with transparent quality metrics.

- Managed vendors like iMerit and Sama deliver domain expertise; hybrid solutions like Label Your Data balance security, accuracy, and iteration speed.

- The smartest teams test before signing: short vendor pilots reveal real data quality faster than long procurement cycles.

Why ML Teams Are Looking for Companies Like Appen

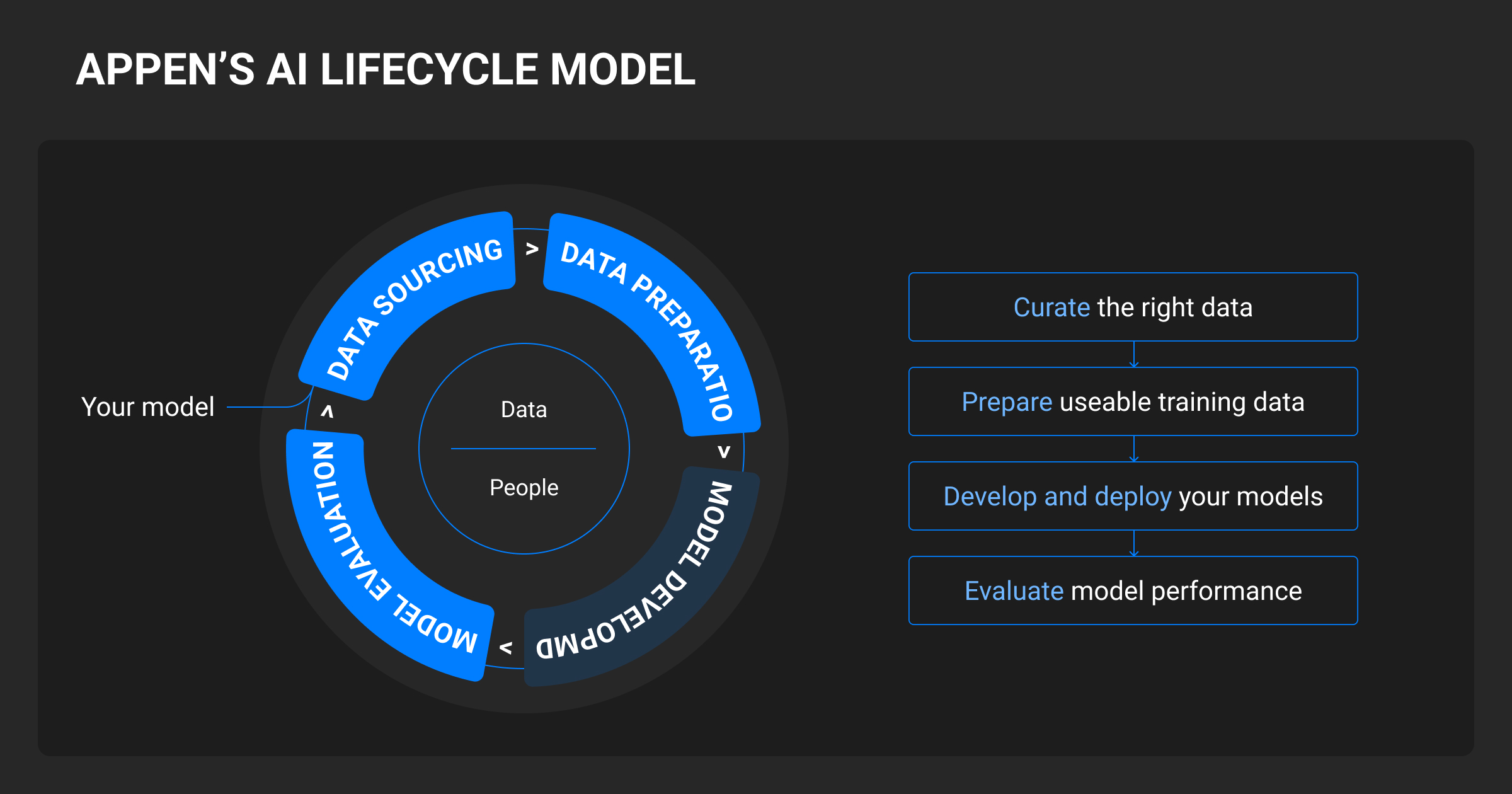

Appen remains one of the most established players in large-scale data annotation, but the market has evolved around new expectations for transparency, speed, and control. Many ML teams now look for vendors that provide more visibility into QA metrics, flexible workflow options, and direct integration with their existing MLOps pipelines.

Appen’s global workforce model offers reach and scalability, yet some teams find it harder to adapt to projects that require rapid iteration or domain-specific oversight (see our Appen review for a closer look).

As annotation complexity grows, so does the need for continuous feedback loops and real-time quality tracking – capabilities that emerging annotation companies are building directly into their platforms.

Enterprises are also raising the bar on governance. With more regulated machine learning datasets moving through AI pipelines, annotation platforms now emphasize measurable quality assurance, documented compliance, and secure review environments.

Rather than replacing Appen outright, many ML teams are expanding their vendor portfolios to include hybrid and platform-based providers. These newer models combine automation with human review, making it easier to scale without losing quality or accountability.

How We Compared Appen Alternatives for Data Labeling

To evaluate the top Appen alternatives, we used the same vendor assessment framework applied by enterprise AI teams when selecting a long-term partner for data labeling.

Each provider was analyzed based on real customer feedback, G2 reviews, Reddit discussions, and verified performance data. The goal was to save you from repeating months of vendor research and pilot testing that often reveal the same trade-offs.

We scored every company across seven core pillars:

- Data quality and QA architecture: multi-stage review systems, inter-annotator agreement (IAA), gold-standard checks, and error-tracking workflows.

- Operational transparency: access to dashboards, error taxonomies, and detailed feedback loops.

- Integration and automation: API and SDK support, active learning compatibility, and CI/CD alignment.

- Domain expertise: availability of trained annotators in medical, financial, and geospatial datasets.

- Security and compliance: SOC 2, ISO 27001, HIPAA readiness, and on-premise or private-cloud deployment options.

- Cost efficiency: total cost of ownership measured by rework rates, throughput, and time-to-first-batch.

- Scalability and support model: managed workforce versus hybrid or platform-first structures, SLA coverage, and surge capacity.
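One way to turn the seven pillars into a single comparable number is a weighted scorecard. The sketch below is illustrative only: the weights, the 1-5 scoring scale, and the pillar key names are hypothetical placeholders, not the values used in this comparison.

```python
# Hypothetical weights for the seven pillars; they sum to 1.0.
# Placeholders for illustration, not the weights behind this article.
PILLAR_WEIGHTS = {
    "data_quality": 0.25,
    "transparency": 0.15,
    "integration": 0.15,
    "domain_expertise": 0.15,
    "security": 0.15,
    "cost_efficiency": 0.10,
    "scalability": 0.05,
}


def weighted_score(scores, weights=PILLAR_WEIGHTS):
    """Combine per-pillar scores (e.g. on a 1-5 scale) into one weighted score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing pillar scores: {sorted(missing)}")
    return sum(weights[pillar] * scores[pillar] for pillar in weights)


# Made-up scores for an anonymous vendor, for illustration only.
example_vendor = {
    "data_quality": 5,
    "transparency": 4,
    "integration": 3,
    "domain_expertise": 4,
    "security": 5,
    "cost_efficiency": 3,
    "scalability": 4,
}
print(f"weighted score: {weighted_score(example_vendor):.2f}")
```

Adjust the weights to your own risk profile: a team handling regulated data might weight security and data quality more heavily, while an early-stage team might favor cost efficiency.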

Read on to see which companies deliver consistent quality, speed, and compliance for different annotation project types and risk levels.

In healthcare AI, choosing a data annotation partner goes far beyond price. It’s about clinical literacy and precision. In one pilot, the cheaper vendor mislabeled blood panel edge cases, while the medically trained team improved accuracy significantly. Long-term value depends on context awareness and adaptive annotation tools that minimize human error.

Top Annotation Sites Like Appen for AI and ML Teams

Many ML teams are moving beyond Appen toward vendors that offer clearer QA visibility, faster iteration, and domain-specific expertise. These providers help ensure that every labeled dataset directly improves your machine learning algorithm performance and reliability.

Here are 11 proven alternatives built for scalable, production-grade AI pipelines.

Label Your Data

Label Your Data is a data annotation company that combines semi-automation (through its data annotation platform) with expert human QA (98%+ annotation accuracy benchmark).

Their global team of data experts specializes in high-risk datasets where accuracy and traceability matter most, including medical, financial, defense tech, geospatial, and autonomous system data. ML teams note transparent quality metrics, data annotation pricing, inter-annotator agreement tracking, and customizable pipelines through API or SDK integrations.

Features:

- QA-first hybrid model with measurable accuracy

- Secure VPC setup for regulated industries

- Full support for image, text, video, audio, and 3D point cloud data

Best for: Enterprise ML teams requiring compliance-ready annotation, audit trails, and proven data integrity at scale.

Scale AI

Scale AI is a data annotation platform plus managed services for text, image, audio, and video. It offers model-in-the-loop workflows, active learning, and automation to speed large programs, with APIs and SDKs that fit into CI/CD. Enterprise teams increasingly adopt it for multi-modal AI projects, though costs rise with complex ontologies or accelerated delivery schedules.

Features:

- Automation assists, active learning, and QA analytics

- Multi-modal labeling with strong API/SDK integration

- Enterprise security and deployment options

Best for: Large organizations running high-volume, multi-modal labeling with strict timelines.

TELUS International

TELUS International provides large-scale managed data annotation services through a multilingual, globally distributed workforce. The company focuses on enterprise and government clients that require compliance with GDPR, HIPAA, and SOC 2 standards. It covers multiple data types (text, audio, video, and image), with quality assurance pipelines designed for scalability rather than deep specialization.

Our research shows that TELUS International excels at handling high-volume projects and localization tasks, but feedback from enterprise clients indicates that quality can vary across regions and project types.

Features:

- Global multilingual workforce for diverse data domains

- Secure infrastructure meeting major compliance standards

- Managed labeling with real-time QA tracking

Best for: Enterprises prioritizing scale, multilingual support, and compliance coverage over highly specialized annotation expertise.

Labelbox

Labelbox is a data annotation platform designed for in-house ML teams that prefer full control over their labeling workflows. It offers tools for image, text, video, and 3D data, with automation features like model-assisted labeling and active learning. Unlike managed-service vendors, Labelbox is product-first – built to integrate into MLOps stacks through APIs, SDKs, and cloud connectors (AWS, GCP, Azure).

Our research shows that teams appreciate its flexibility and collaboration tools, though getting full value from the platform typically requires dedicated internal operations and QA oversight.

Features:

- End-to-end platform with customizable labeling UI

- Active learning, model-assisted labeling, and analytics

- Integrates seamlessly with existing ML pipelines

Best for: ML teams that already have internal QA processes and want to own their data annotation lifecycle directly on a platform.

For tool-first teams, see our detailed guide on Labelbox competitors to compare enterprise annotation platforms.

iMerit

iMerit is a managed data annotation company specializing in complex, domain-specific datasets. It combines trained annotators with custom workflows for industries like medical imaging, finance, geospatial analysis, and autonomous vehicles. The company emphasizes data security (SOC 2 Type II, ISO 27001) and employs subject-matter experts rather than crowd-based workers.

The company performs best on specialized projects requiring expert review, such as clinical or geospatial data, where generalist vendors struggle. However, its onboarding process can be slower than average due to custom workflow setup and domain training.

Features:

- Domain-specialized annotation teams and SMEs

- Secure facilities and compliance for regulated data

- Custom QA pipelines and detailed feedback loops

Best for: AI teams in regulated or specialized domains needing expert-level accuracy and secure, fully managed data annotation services.

Sama

Sama delivers human-powered annotation services with a strong focus on ethical AI and transparent QA. The company’s model combines managed annotation teams with proprietary workflow software that tracks quality metrics and annotator performance in real time.

According to our research, the vendor performs well on large-scale image and video labeling projects. However, pricing can be higher than crowd-based alternatives, and turnaround times may increase for specialized edge cases.

Features:

- Transparent QA metrics and real-time performance dashboards

- Strong focus on ethical sourcing and workforce development

- Secure infrastructure for enterprise-grade labeling

Best for: Enterprises that prioritize responsible AI practices and need reliable, high-quality annotation for computer vision tasks.

SuperAnnotate

SuperAnnotate is a data labeling platform for computer vision workflows. It offers an intuitive interface for image, video, and 3D labeling, combining manual and automated annotation tools. Teams can use the platform independently or opt for managed services from vetted human annotators.

The vendor shows strong performance in image segmentation and instance segmentation projects, as well as seamless integrations with ML pipelines via SDKs and APIs. Its collaboration tools and quality control features make it a solid choice for multi-user teams. However, pricing scales with data volume and advanced automation use.

Features:

- Robust computer vision toolkit with polygon, keypoint, and cuboid tools

- Active learning and model-assisted annotation workflows

- Optional managed service layer for hybrid QA

Best for: ML teams running vision-heavy pipelines that need a fast, flexible, and automation-friendly data annotation platform.

Cogito Tech

Cogito Tech is a service-first data annotation company specializing in text, speech, and NLP labeling. It provides managed annotation teams that handle everything from sentiment analysis to entity recognition and intent classification. The company emphasizes multilingual capabilities and competitive pricing, making it appealing for large-scale language data projects.

The main advantage is its scalability and language coverage, particularly in customer service, e-commerce, and conversational AI. While it offers solid accuracy for NLP and audio tasks, Cogito’s tools are less advanced for complex computer vision projects.

Features:

- Multilingual NLP and audio annotation expertise

- End-to-end managed services with QA reviews

- Competitive pricing for high-volume datasets

Best for: Teams working on LLM fine-tuning or large-scale language datasets that need cost-efficient, multilingual annotation services.

Encord

Encord is a data-centric annotation platform built for iterative model improvement through continuous feedback. It supports image, video, and medical data labeling, integrating active learning and human-in-the-loop (HITL) review to refine training datasets.

According to our research, Encord stands out for its advanced QA architecture and real-time performance monitoring, which help ML teams catch and correct mislabels quickly. Its interface supports complex workflows like video segmentation and image recognition, while its API-first design fits seamlessly into MLOps pipelines. However, the platform has a moderate learning curve and may require onboarding for smaller teams.

Features:

- Active learning and feedback loop integration

- Strong support for visual data and medical imaging

- Customizable quality review and audit tools

Best for: Data-centric ML teams looking to streamline dataset refinement and scale human-in-the-loop annotation for LLM training or computer vision projects.

V7 (Darwin)

V7, also known as Darwin, is a computer vision data labeling platform designed for speed, collaboration, and model-assisted labeling. It supports image segmentation, video segmentation, and object detection workflows with integrated model training and automation loops.

V7’s strength is in real-time collaboration and AI-assisted labeling, which reduce annotation time significantly for visual datasets. The platform is popular among ML engineers building image recognition and medical imaging models. It offers integrations with major ML frameworks and cloud storage, though it has limited managed-service options compared to hybrid vendors.

Features:

- Automated annotation with smart polygon and object tracking tools

- End-to-end workflow from labeling to model training

- API integrations for continuous model iteration

Best for: Vision-driven ML teams seeking a fast, automation-heavy platform for scaling image and video segmentation pipelines efficiently.

Amazon Mechanical Turk (MTurk)

Amazon Mechanical Turk (MTurk) is a crowdsourcing platform that enables rapid, low-cost annotation through distributed human workers. It’s often used for simple tasks like image labeling, text classification, and survey data collection.

MTurk’s main advantage is speed and affordability, making it a practical choice for low-risk datasets or proof-of-concept phases. However, the open workforce model introduces challenges in quality control, data security, and consistency – areas where managed vendors perform better. It’s best suited for projects where dataset sensitivity is minimal and quality can be verified through automated checks or secondary QA layers.

Features:

- On-demand crowd workforce for diverse labeling tasks

- Cost-effective and scalable for short-term projects

- Integrates with AWS for automation and data storage

Best for: ML teams running early-stage data collection or basic labeling experiments that don’t require strict compliance, expert QA, or regulated workflows.

Explore sites like MTurk to find crowd-based annotation platforms suited for low-risk data.

When selecting a provider like Appen, prioritize accuracy, compliance, and flexibility. Data governance and established QA protocols matter as much as cost efficiency. A good partner integrates smoothly with your ML pipelines and adapts quickly to evolving annotation needs.

How to Run a Vendor Pilot (and Not Regret the Contract)

Running a vendor pilot the right way saves weeks of rework and prevents costly lock-ins later.

Define scope clearly

Limit the pilot to one or two annotation tasks, test two or three vendors, and keep it under two weeks. This timeframe is long enough to compare speed, QA processes, and responsiveness without wasting resources.

Establish a gold standard

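Create a benchmark dataset with ground truth labels and score every vendor against it. Precision per class shows where a vendor’s labels diverge from the gold set, and inter-annotator agreement (IAA) shows how consistently that vendor’s annotators label the same items. This ensures that every vendor is judged by the same quality baseline.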
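Both metrics can be computed with a few lines of code. The sketch below uses Cohen's kappa as the agreement measure, which is one common choice for two annotators and an assumption here, since the article does not say which IAA statistic to use. The function names and sample labels are hypothetical.

```python
from collections import Counter


def per_class_precision(gold, predicted):
    """Of the items a vendor labeled as class c, the share whose gold label is also c."""
    predicted_counts = Counter(predicted)
    correct = Counter(p for g, p in zip(gold, predicted) if g == p)
    return {c: correct[c] / n for c, n in predicted_counts.items()}


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected by chance, from each annotator's class frequencies.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical class for every item
    return (observed - expected) / (1 - expected)


# Made-up labels for illustration only.
gold = ["cat", "cat", "dog", "dog", "bird", "bird"]
vendor = ["cat", "dog", "dog", "dog", "bird", "cat"]
print(per_class_precision(gold, vendor))
print(cohens_kappa(["x", "x", "y", "y"], ["x", "x", "y", "x"]))
```

Kappa corrects raw agreement for chance, so a vendor cannot score well simply because one class dominates the dataset. For more than two annotators, a multi-rater statistic such as Fleiss' kappa or Krippendorff's alpha would be a better fit.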

Track quality and iteration speed

Measure how fast vendors respond to feedback, the rework latency, and how efficiently they correct errors. Vendors that deliver clean iteration loops often scale better in production.
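Rework latency is easy to track if you log two timestamps per feedback round. The sketch below is a minimal example that assumes you record when feedback was sent and when the corrected batch came back. The function name and the sample timestamps are hypothetical.

```python
from datetime import datetime
from statistics import median


def rework_latency_hours(events):
    """Median hours between sending feedback and receiving the corrected batch.

    `events` is a list of (feedback_sent, fix_delivered) datetime pairs.
    """
    hours = [(fixed - sent).total_seconds() / 3600 for sent, fixed in events]
    return median(hours)


# Made-up feedback rounds for one vendor during a pilot.
rounds = [
    (datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 15)),
    (datetime(2024, 1, 2, 9), datetime(2024, 1, 3, 9)),
    (datetime(2024, 1, 4, 9), datetime(2024, 1, 4, 21)),
]
print(f"median rework latency: {rework_latency_hours(rounds):.1f} h")
```

The median is used rather than the mean so that one unusually slow round does not dominate a short pilot.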

Audit security and data portability

Ask for details about SOC 2, ISO 27001, or HIPAA compliance and confirm you can easily export your labeled data in standard formats like COCO, YOLO, or CVAT XML.
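A quick portability check is to convert a vendor's export from one format to another yourself. The sketch below converts COCO-style bounding boxes (`[x_min, y_min, width, height]` in pixels) into YOLO label lines (class index plus normalized center coordinates and size). The function names and sample data are hypothetical, and the class-index mapping (sorted by COCO category id) is an assumption that should match your own training config.

```python
def coco_bbox_to_yolo(bbox, image_width, image_height):
    """Convert a COCO box [x_min, y_min, width, height] in pixels to YOLO
    (x_center, y_center, width, height), normalized to the 0-1 range."""
    x_min, y_min, w, h = bbox
    return (
        (x_min + w / 2) / image_width,
        (y_min + h / 2) / image_height,
        w / image_width,
        h / image_height,
    )


def coco_to_yolo_lines(coco, image_id):
    """Build YOLO label lines for one image from a COCO-style dict."""
    image = next(img for img in coco["images"] if img["id"] == image_id)
    # YOLO class indices are zero-based and contiguous; COCO category ids need not be.
    ordered = sorted(coco["categories"], key=lambda cat: cat["id"])
    class_index = {cat["id"]: i for i, cat in enumerate(ordered)}
    lines = []
    for ann in coco["annotations"]:
        if ann["image_id"] != image_id:
            continue
        xc, yc, w, h = coco_bbox_to_yolo(ann["bbox"], image["width"], image["height"])
        lines.append(f"{class_index[ann['category_id']]} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}")
    return lines


# Minimal made-up export for illustration.
sample = {
    "images": [{"id": 1, "width": 400, "height": 200}],
    "categories": [{"id": 3, "name": "car"}, {"id": 7, "name": "person"}],
    "annotations": [{"image_id": 1, "category_id": 7, "bbox": [100, 50, 200, 100]}],
}
print(coco_to_yolo_lines(sample, image_id=1))
```

If a conversion like this loses information you rely on (attributes, polygons, track ids), raise it during the pilot rather than after the contract is signed.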

Choose based on rework rate, not unit cost

The cheapest per-label quote rarely means the best outcome. The real cost is hidden in how much data must be relabeled or debugged later.
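The arithmetic behind this point can be sketched with a deliberately simple cost model: effective cost per usable label equals the quoted unit price plus the rework rate multiplied by what each reworked label costs you. The model, the function name, and every number below are hypothetical. Real pilots should plug in their own measured rework rates and internal costs.

```python
def effective_cost_per_label(unit_price, rework_rate, rework_cost_per_label):
    """Quoted price plus the expected cost of fixing labels that fail QA.

    A simplified model: it ignores delays, retraining, and repeated rework rounds.
    """
    if not 0.0 <= rework_rate <= 1.0:
        raise ValueError("rework_rate must be between 0 and 1")
    return unit_price + rework_rate * rework_cost_per_label


# Made-up quotes and pilot results for two anonymous vendors.
quotes = {
    "vendor_a": {"unit_price": 0.05, "rework_rate": 0.20},
    "vendor_b": {"unit_price": 0.08, "rework_rate": 0.03},
}
REWORK_COST = 0.30  # assumed internal cost to review and fix one bad label

for name, quote in quotes.items():
    cost = effective_cost_per_label(quote["unit_price"], quote["rework_rate"], REWORK_COST)
    print(f"{name}: ${cost:.3f} per usable label")
```

With these made-up numbers, the vendor with the lower quote ends up more expensive per usable label once rework is included.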

A short, well-designed trial with clear benchmarks will show which vendor delivers reliable results at scale.

Quick Appen Alternatives Overview

Here’s a snapshot for ML teams deciding between managed, hybrid, or platform-first approaches.

| Vendor | Model | Strength | Best For | Risk |
| --- | --- | --- | --- | --- |
| Label Your Data | Hybrid managed | QA-first accuracy (98%) with human-in-loop review | Regulated domains, research-grade datasets | Best suited for precision over raw volume |
| Scale AI | Platform + service | Automation with multimodal coverage | Large enterprises, model training at scale | High pricing |
| TELUS International | Managed | Workforce scale and compliance readiness | Multilingual or global labeling projects | QA variance in large-scale batches |
| Labelbox | Platform | In-house control with robust MLOps integration | Teams building internal pipelines | Requires strong internal management |
| iMerit | Managed | Domain SMEs for healthcare, finance, and geospatial data | Vertical-specific annotation | Longer onboarding cycle |
| Sama | Managed | Ethical workforce with transparent QA metrics | Enterprise AI programs | Higher cost vs. offshore vendors |
| Encord | Platform | Active learning and feedback-driven workflows | Data-centric AI teams | Steeper learning curve |
| V7 (Darwin) | Platform | Automation and collaboration for vision projects | Computer vision pipelines | Limited managed service options |
| Amazon Mechanical Turk (MTurk) | Crowd | Fast, low-cost data tasks | Early-stage, low-risk data projects | No security or expert QA |
| SuperAnnotate | Platform + service | Visual-first workflows with annotation tooling | CV tasks with optional human QA | Limited customization for complex data |

Tip: Use this table as a baseline for shortlisting vendors. The right fit depends on your internal resources. Managed models deliver precision and oversight, while platform-first tools give speed and flexibility to in-house ML teams.

Key Takeaways for ML Teams

Choosing a replacement for Appen depends on your priorities, like quality control, scalability, or internal ownership. Each vendor model serves a different type of ML workflow, so align your choice with the structure of your data operations.

Managed services work best when you need consistency, SME-level accuracy, and compliance. Providers like iMerit and Sama excel in regulated domains where domain knowledge and repeatable QA are critical.

Hybrid models offer the middle ground between control and quality. Companies like Label Your Data and Scale AI combine automation with human verification, ensuring precision on complex, high-stakes data while keeping iteration speed high.

Platform-first tools such as Labelbox, Encord, and V7 give ML teams full pipeline control and seamless MLOps integration. They’re ideal for in-house setups that already have dedicated QA or automation teams.

Annotation vendors should be judged by transparency, not price. Inconsistent quality slows model iteration. Always review their QA playbook and confirm that labeled data can be easily exported. Flexibility and clarity in how data moves through your pipeline matter more than cost alone.

Instead of relying on long RFP processes, run focused pilots: small datasets, clear quality metrics, and two-week turnaround cycles.

About Label Your Data

If you choose to delegate data annotation, run a free data pilot with Label Your Data. Our outsourcing strategy has helped many companies scale their ML projects. Here’s why:

- Check our performance with a free trial

- Pay per labeled object or per annotation hour

- Work with every annotation tool, even your custom tools

- Work with a data-certified vendor: PCI DSS Level 1, ISO 27001, GDPR, CCPA

FAQ

Who are the main Appen competitors?

Major competitors include Label Your Data, Scale AI, TELUS International, iMerit, Sama, Labelbox, SuperAnnotate, and Encord. These companies offer a mix of managed data labeling services and platform-based annotation tools tailored for AI and ML training.

Is Appen a good company?

Appen remains one of the largest data annotation providers globally, known for its extensive workforce and experience. However, some enterprises report concerns with QA consistency and communication. Many now evaluate Appen alternatives for more transparent workflows, faster iteration, and specialized domain expertise.

What companies are similar to Appen?

Leading alternatives include Label Your Data, Scale AI, TELUS International, iMerit, Sama, and Labelbox. These vendors differ in focus: some emphasize managed annotation services with expert QA, while others provide platforms for in-house labeling and automation.

Why are ML teams moving away from Appen?

Enterprises cite inconsistent QA, unclear feedback loops, and slower rework cycles. Many now prefer hybrid or platform-based vendors that combine automation with transparent quality metrics, faster iteration, and stronger data governance.

What factors matter most when choosing a data annotation company?

Beyond pricing, the key differentiators are data quality assurance, domain expertise, integration flexibility, and security compliance. A good provider should align with your workflow, support MLOps integration, and maintain measurable QA standards such as inter-annotator agreement (IAA).

Is it better to use a managed service or an annotation platform?

Managed services deliver consistency and subject-matter expertise for regulated or complex data, while platforms give internal teams control and flexibility. Many ML teams now adopt a hybrid model – automated pre-labeling paired with human QA for critical edge cases.

Written by

Karyna is the CEO of Label Your Data, a company specializing in data labeling solutions for machine learning projects. With a strong background in machine learning, she frequently collaborates with editors to share her expertise through articles, whitepapers, and presentations.